1. Running Redis on K8S with data persistence via PV/PVC on NFS

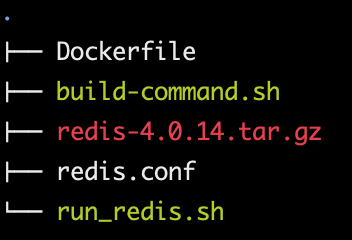

1.1 Building the Redis image

# cat Dockerfile

#Redis Image

FROM harbor.k8s.local/k8s/centos-base:7.9.2009

MAINTAINER ericzhang "***@126.com"

ADD redis-4.0.14.tar.gz /usr/local/src

RUN ln -sv /usr/local/src/redis-4.0.14 /usr/local/redis && cd /usr/local/redis && make && cp src/redis-cli /usr/sbin/ && cp src/redis-server /usr/sbin/ && mkdir -pv /data/redis-data

ADD redis.conf /usr/local/redis/redis.conf

ADD run_redis.sh /usr/local/redis/run_redis.sh

EXPOSE 6379

CMD ["/usr/local/redis/run_redis.sh"]

#cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.k8s.local/k8s/redis:${TAG} .

sleep 3

docker push harbor.k8s.local/k8s/redis:${TAG}

# cat run_redis.sh

#!/bin/bash

/usr/sbin/redis-server /usr/local/redis/redis.conf

tail -f /etc/hosts

#cat redis.conf | grep -Ev "^#|^$"

bind 0.0.0.0

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize yes

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile ""

databases 16

always-show-logo yes

save 900 1

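# Snapshot: if at least one key changes within 5 seconds, take a snapshot. (Kept on its own line; redis.conf does not accept a trailing comment after a directive.)

save 5 1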

save 300 10

save 60 10000

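# Production setting: whether Redis stops accepting writes after a background save fails (for example, when the snapshot storage is full); "no" is recommended.

stop-writes-on-bgsave-error no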

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

# Directory where Redis stores its data.

dir /data/redis-data

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

requirepass 123456

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

slave-lazy-flush no

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble no

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes
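
The four save lines in the configuration above each describe a snapshot condition. As a rough sketch of how they combine, the helper below (a hypothetical function, not part of Redis) checks the rules in order. It assumes a rule fires once more than the given number of seconds has passed since the last save and at least the given number of keys changed in that time.

```shell
#!/bin/sh
# Hypothetical helper (not part of Redis): reports whether any
# "save <seconds> <changes>" rule from the listing above would trigger a snapshot.
# Assumption: a rule fires when MORE than <seconds> have elapsed since the last
# save AND at least <changes> keys were modified since then.
SAVE_RULES="900:1 5:1 300:10 60:10000"

snapshot_due() {
    elapsed=$1   # seconds since the last successful save
    dirty=$2     # number of key changes since the last save
    for rule in $SAVE_RULES; do
        seconds=${rule%%:*}
        changes=${rule##*:}
        if [ "$elapsed" -gt "$seconds" ] && [ "$dirty" -ge "$changes" ]; then
            echo "yes"
            return 0
        fi
    done
    echo "no"
}

snapshot_due 6 1     # one change, 6 seconds ago: the "save 5 1" rule applies
snapshot_due 3 500   # many changes, but no rule's time window has elapsed yet
```

With "save 5 1" present, the other three rules rarely matter in practice, because almost any write triggers a snapshot within a few seconds.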

1.2 Starting the Redis service

1.2.1 PV

# cat redis-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-datadir-pv-1
  namespace: test  # PersistentVolumes are cluster-scoped, so this field has no effect
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/redis-datadir-1
    server: 172.16.244.141

1.2.2 PVC

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-datadir-pvc-1
  namespace: test
spec:
  volumeName: redis-datadir-pv-1
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi

1.2.3 Deployment

# cat redis.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: devops-redis
  name: deploy-devops-redis
  namespace: test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: devops-redis
  template:
    metadata:
      labels:
        app: devops-redis
    spec:
      containers:
        - name: redis-container
          image: harbor.k8s.local/k8s/redis:v4.0.14
          imagePullPolicy: Always
          volumeMounts:
            - mountPath: "/data/redis-data/"
              name: redis-datadir
      volumes:
        - name: redis-datadir
          persistentVolumeClaim:
            claimName: redis-datadir-pvc-1
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: devops-redis
  name: srv-devops-redis
  namespace: test
spec:
  type: NodePort
  ports:
    - name: http
      port: 6379
      targetPort: 6379
      nodePort: 36379
  selector:
    app: devops-redis
  sessionAffinity: ClientIP  # Session stickiness based on the client source address; not used often
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800

1.3 Verifying the service

# kubectl exec -it deploy-devops-redis-5f874fd856-65z8f -n test bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@deploy-devops-redis-5f874fd856-65z8f /]# ss -anlptu

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port

tcp LISTEN 0 511 *:6379 *:* users:(("redis-server",pid=9,fd=6))

[root@deploy-devops-redis-5f874fd856-65z8f /]# redis-cli

127.0.0.1:6379> AUTH 123456

OK

127.0.0.1:6379> SET test-key test-value

OK

127.0.0.1:6379> get test-key

"test-value"

127.0.0.1:6379>

Note:

kompose is a tool that helps users move from docker-compose to Kubernetes. It takes a Docker Compose file and converts it into Kubernetes resources.

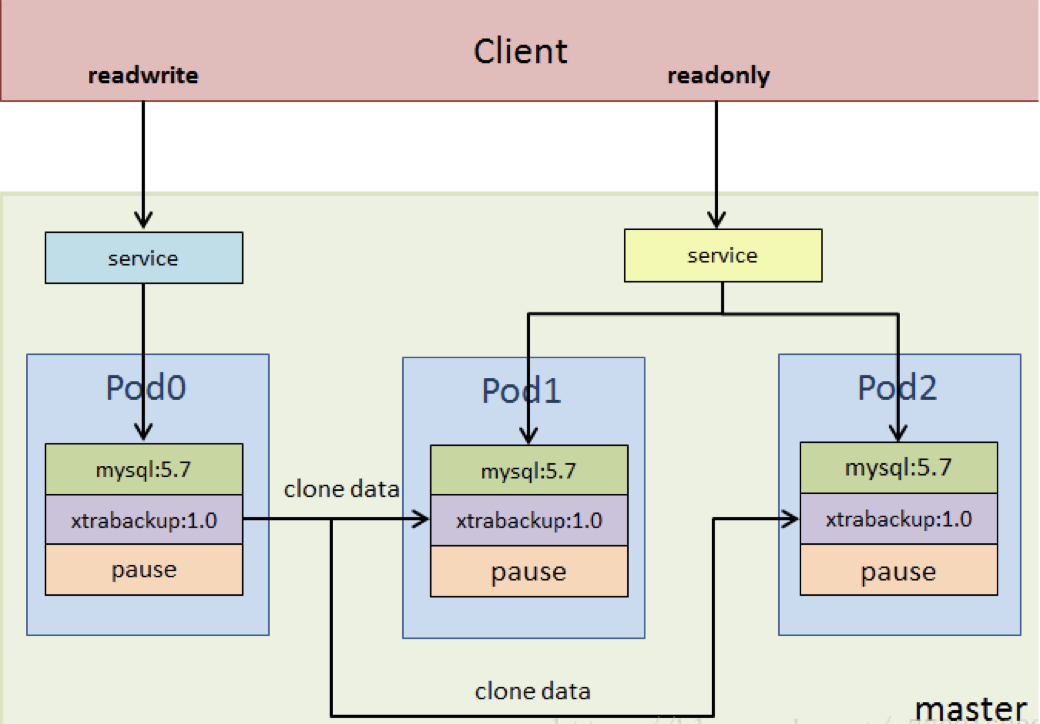

2. Running MySQL on K8S with a StatefulSet (one master, multiple slaves)

https://kubernetes.io/zh/docs/tutorials/stateful-application/mysql-wordpress-persistent-volume/

https://www.kubernetes.org.cn/statefulset

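StatefulSet-based implementation: https://kubernetes.io/zh/docs/tasks/run-application/run-replicated-stateful-application/

When scheduling Pods, if the application does not need stable identifiers or ordered deployment, deletion, and scaling, deploy it with a controller that manages a set of stateless replicas. Deployment or ReplicaSet suits stateless services, while StatefulSet suits stateful services such as MySQL or MongoDB clusters.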

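- A StatefulSet is essentially a variant of a Deployment and became GA in v1.9. It exists to handle stateful services: the Pods it manages have fixed names and a fixed start and stop order. In a StatefulSet the Pod name serves as the network identity (hostname), and the Pods must also be backed by persistent storage (shared NFS storage in this document).

- With a Deployment the matching service is an ordinary Service, whereas a StatefulSet is paired with a headless Service. A headless Service differs from an ordinary Service in that it has no Cluster IP: resolving its name returns the endpoint list of all Pods behind that headless Service.

- StatefulSet features:

  - Gives each Pod a fixed, unique network identifier.

  - Gives each Pod fixed, persistent external storage.

  - Deploys and scales Pods in order.

  - Deletes and terminates Pods in order.

  - Performs automatic rolling updates of Pods in order.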
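
To make the fixed network identity concrete, here is a minimal sketch of how StatefulSet Pod names and their per-Pod DNS names are composed. The function names are hypothetical, and the values used (StatefulSet and headless Service both named mysql, namespace test, cluster domain k8s.local) are assumptions matching the environment used in this section; the cluster domain in particular differs between clusters.

```shell
#!/bin/sh
# Illustrative helpers only; the function names are hypothetical.

# Pod name of a StatefulSet member: <statefulset-name>-<ordinal>
pod_name() {
    echo "$1-$2"
}

# Per-Pod DNS name behind a headless Service:
#   <pod>.<service>.<namespace>.svc.<cluster-domain>
# The cluster domain is site-specific (assumed to be k8s.local here).
pod_fqdn() {
    echo "$1.$2.$3.svc.$4"
}

for i in 0 1 2; do
    pod_fqdn "$(pod_name mysql "$i")" mysql test k8s.local
done
```

Inside the same namespace the short form (for example mysql-0.mysql) is normally enough, because the Pod's DNS search path supplies the rest.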

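2.1 Components of a StatefulSet

- Headless Service: defines the network identity (DNS domain) of the Pods. It is a Service without a Cluster IP.

- StatefulSet: defines the application itself, including how many Pod replicas there are, and gives each Pod its own DNS name.

- volumeClaimTemplates: the storage claim template. It specifies the PVC name and size, and the PVCs are then created automatically. Each PVC must be satisfied by a StorageClass or, as in this document, by a pre-created PV.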

2.2 Preparing the images

https://github.com/docker-library/ # GitHub download location

# Prepare the MySQL image

# docker pull mysql:5.7.35

# docker tag mysql:5.7.35 harbor.k8s.local/k8s/mysql:5.7.35

# docker push harbor.k8s.local/k8s/mysql:5.7.35

# Prepare the xtrabackup image

# docker pull registry.cn-hangzhou.aliyuncs.com/hxpdocker/xtrabackup:1.0

# docker tag registry.cn-hangzhou.aliyuncs.com/hxpdocker/xtrabackup:1.0 harbor.k8s.local/k8s/xtrabackup:1.0

# docker push harbor.k8s.local/k8s/xtrabackup:1.0

2.3 Creating the PVs

# tree

.

├── mysql-configmap.yaml

├── mysql-services.yaml

├── mysql-statefulset.yaml

└── pv

    └── mysql-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-datadir-1
  namespace: test
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/mysql-datadir-1
    server: 172.16.244.141
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-datadir-2
  namespace: test
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/mysql-datadir-2
    server: 172.16.244.141
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-datadir-3
  namespace: test
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/mysql-datadir-3
    server: 172.16.244.141
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-datadir-4
  namespace: test
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/mysql-datadir-4
    server: 172.16.244.141
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-datadir-5
  namespace: test
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/mysql-datadir-5
    server: 172.16.244.141
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-datadir-6
  namespace: test
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/mysql-datadir-6
    server: 172.16.244.141

2.4 Verifying the PVs

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

mysql-datadir-1 50Gi RWO Retain Available 14s

mysql-datadir-2 50Gi RWO Retain Available 14s

mysql-datadir-3 50Gi RWO Retain Available 14s

mysql-datadir-4 50Gi RWO Retain Available 14s

mysql-datadir-5 50Gi RWO Retain Available 14s

mysql-datadir-6 50Gi RWO Retain Available 14s

2.5 Running the MySQL service

# cat mysql-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  namespace: test
  labels:
    app: mysql
data:
  master.cnf: |
    # Apply this config only on the master.
    [mysqld]
    log-bin
    log_bin_trust_function_creators=1
    lower_case_table_names=1
  slave.cnf: |
    # Apply this config only on slaves.
    [mysqld]
    super-read-only
    log_bin_trust_function_creators=1

# cat mysql-services.yaml

# Headless service for stable DNS entries of StatefulSet members.
apiVersion: v1
kind: Service
metadata:
  namespace: test
  name: mysql
  labels:
    app: mysql
spec:
  ports:
    - name: mysql
      port: 3306
  clusterIP: None
  selector:
    app: mysql
---
# Client service for connecting to any MySQL instance for reads.
# For writes, you must instead connect to the master: mysql-0.mysql.
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  namespace: test
  labels:
    app: mysql
spec:
  ports:
    - name: mysql
      port: 3306
  selector:
    app: mysql

# cat mysql-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  namespace: test
spec:
  selector:
    matchLabels:
      app: mysql
  serviceName: mysql
  replicas: 3
  template:
    metadata:
      labels:
        app: mysql
    spec:
      initContainers:
        - name: init-mysql
          image: harbor.k8s.local/k8s/mysql:5.7.35
          command:
            - bash
            - "-c"
            - |
              set -ex
              # Generate mysql server-id from pod ordinal index.
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              echo [mysqld] > /mnt/conf.d/server-id.cnf
              # Add an offset to avoid reserved server-id=0 value.
              echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
              # Copy appropriate conf.d files from config-map to emptyDir.
              if [[ $ordinal -eq 0 ]]; then
                cp /mnt/config-map/master.cnf /mnt/conf.d/
              else
                cp /mnt/config-map/slave.cnf /mnt/conf.d/
              fi
          volumeMounts:
            - name: conf
              mountPath: /mnt/conf.d
            - name: config-map
              mountPath: /mnt/config-map
        - name: clone-mysql
          image: harbor.k8s.local/k8s/xtrabackup:1.0
          command:
            - bash
            - "-c"
            - |
              set -ex
              # Skip the clone if data already exists.
              [[ -d /var/lib/mysql/mysql ]] && exit 0
              # Skip the clone on master (ordinal index 0).
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              [[ $ordinal -eq 0 ]] && exit 0
              # Clone data from previous peer.
              ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
              # Prepare the backup.
              xtrabackup --prepare --target-dir=/var/lib/mysql
          volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
      containers:
        - name: mysql
          image: harbor.k8s.local/k8s/mysql:5.7.35
          env:
            - name: MYSQL_ALLOW_EMPTY_PASSWORD
              value: "1"
          ports:
            - name: mysql
              containerPort: 3306
          volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
          resources:
            requests:
              cpu: 500m
              memory: 1Gi
          livenessProbe:
            exec:
              command: ["mysqladmin", "ping"]
            initialDelaySeconds: 30
            periodSeconds: 10
            timeoutSeconds: 5
          readinessProbe:
            exec:
              # Check we can execute queries over TCP (skip-networking is off).
              command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
            initialDelaySeconds: 5
            periodSeconds: 2
            timeoutSeconds: 1
        - name: xtrabackup
          image: harbor.k8s.local/k8s/xtrabackup:1.0
          ports:
            - name: xtrabackup
              containerPort: 3307
          command:
            - bash
            - "-c"
            - |
              set -ex
              cd /var/lib/mysql
              # Determine binlog position of cloned data, if any.
              if [[ -f xtrabackup_slave_info ]]; then
                # XtraBackup already generated a partial "CHANGE MASTER TO" query
                # because we're cloning from an existing slave.
                mv xtrabackup_slave_info change_master_to.sql.in
                # Ignore xtrabackup_binlog_info in this case (it's useless).
                rm -f xtrabackup_binlog_info
              elif [[ -f xtrabackup_binlog_info ]]; then
                # We're cloning directly from master. Parse binlog position.
                [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
                rm xtrabackup_binlog_info
                echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                      MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
              fi
              # Check if we need to complete a clone by starting replication.
              if [[ -f change_master_to.sql.in ]]; then
                echo "Waiting for mysqld to be ready (accepting connections)"
                until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
                echo "Initializing replication from clone position"
                # In case of container restart, attempt this at-most-once.
                mv change_master_to.sql.in change_master_to.sql.orig
                mysql -h 127.0.0.1 <<EOF
              $(<change_master_to.sql.orig),
                MASTER_HOST='mysql-0.mysql',
                MASTER_USER='root',
                MASTER_PASSWORD='',
                MASTER_CONNECT_RETRY=10;
              START SLAVE;
              EOF
              fi
              # Start a server to send backups when requested by peers.
              exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
                "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
          volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
      volumes:
        - name: conf
          emptyDir: {}
        - name: config-map
          configMap:
            name: mysql
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 10Gi
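
The init-mysql container above derives everything from the Pod hostname: the trailing ordinal gives the server-id (offset by 100) and decides whether the master or slave config is copied. The sketch below isolates that logic in plain POSIX sh so it can be tried outside the cluster. The function names are hypothetical, and it uses parameter expansion in place of the bash regex in the manifest.

```shell
#!/bin/sh
# Standalone sketch of the init-mysql logic; function names are hypothetical.

# Extract the trailing ordinal from a StatefulSet Pod hostname (mysql-2 -> 2).
ordinal_of() {
    ordinal=${1##*-}
    case "$ordinal" in
        ''|*[!0-9]*) return 1 ;;   # hostname does not end in -<digits>
    esac
    echo "$ordinal"
}

# server-id = 100 + ordinal, which avoids the reserved value 0.
server_id_of() {
    ordinal=$(ordinal_of "$1") || return 1
    echo $((100 + ordinal))
}

# Ordinal 0 is the master; every other Pod gets the slave config.
config_file_of() {
    ordinal=$(ordinal_of "$1") || return 1
    if [ "$ordinal" -eq 0 ]; then echo "master.cnf"; else echo "slave.cnf"; fi
}

for host in mysql-0 mysql-1 mysql-2; do
    echo "$host: server-id=$(server_id_of "$host") config=$(config_file_of "$host")"
done
```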

2.6 Verifying the MySQL service

# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 7h46m

mysql-0 2/2 Running 0 2m31s

mysql-1 2/2 Running 1 112s

mysql-2 2/2 Running 1 66s

# kubectl exec -it mysql-0 -n test bash

root@mysql-0:/# mysql

mysql> show master status;

+--------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+--------------------+----------+--------------+------------------+-------------------+

| mysql-0-bin.000003 | 154 | | | |

+--------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

mysql> show slave status;

Empty set (0.00 sec)

# kubectl exec -it mysql-1 -n test bash

root@mysql-1:/# mysql

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: mysql-0.mysql

Master_User: root

Master_Port: 3306

Connect_Retry: 10

Master_Log_File: mysql-0-bin.000003

Read_Master_Log_Pos: 154

Relay_Log_File: mysql-1-relay-bin.000002

Relay_Log_Pos: 322

Relay_Master_Log_File: mysql-0-bin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

...

2.7 High-availability test

2.7.1 Deleting the MySQL master

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 22m

mysql-1 2/2 Running 1 21m

mysql-2 2/2 Running 0 58s

...

root@master1:~# kubectl delete pod mysql-0 -n test

pod "mysql-0" deleted

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 1/2 Running 0 6s

mysql-1 2/2 Running 1 22m

mysql-2 2/2 Running 0 2m11s

...

2.7.2 Deleting a MySQL slave

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 56s

mysql-1 2/2 Running 1 23m

mysql-2 2/2 Running 0 3m1s

...

root@master1:~# kubectl delete pod mysql-1 -n test

pod "mysql-1" deleted

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 107s

mysql-1 1/2 Running 0 3s

mysql-2 2/2 Running 0 3m52s

...

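2.8 Scaling the number of nodes

- Scaling follows ordinal order: new Pods are added after the highest existing ordinal, and Pods are removed starting from the highest ordinal.

- A newly added Pod synchronizes its data from the Pod just before it. For example, a new mysql-3 synchronizes from mysql-2.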
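
The ordering rules above can be sketched as a small simulation. The helpers below are hypothetical and only print names; they do not talk to a cluster. The replica change itself would typically be made with something like `kubectl scale statefulset mysql --replicas=4 -n test`, which is an assumption here because the source does not show the command that was used.

```shell
#!/bin/sh
# Hypothetical helpers that only print names; they do not contact a cluster.

# Pods touched when the replica count changes from $2 to $3, in order.
scale_order() {
    name=$1 from=$2 to=$3
    i=$from
    while [ "$i" -lt "$to" ]; do      # scale up: lowest new ordinal first
        echo "create $name-$i"
        i=$((i + 1))
    done
    i=$from
    while [ "$i" -gt "$to" ]; do      # scale down: highest ordinal first
        i=$((i - 1))
        echo "delete $name-$i"
    done
}

# Peer that a new slave clones its data from: the Pod with the previous ordinal.
clone_source() {
    echo "$1-$(($2 - 1))"
}

scale_order mysql 3 4    # scale up by one Pod
scale_order mysql 4 2    # scale down by two Pods
clone_source mysql 3     # where mysql-3 copies its data from
```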

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 16m

mysql-1 2/2 Running 1 15m

mysql-2 2/2 Running 1 15m

...

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 17m

mysql-1 2/2 Running 1 16m

mysql-2 2/2 Running 1 15m

mysql-3 0/2 Init:1/2 0 7s

...

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 17m

mysql-1 2/2 Running 1 16m

mysql-2 2/2 Running 1 16m

mysql-3 2/2 Running 1 30s

...

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 18m

mysql-1 2/2 Running 1 17m

mysql-2 2/2 Running 1 17m

mysql-3 2/2 Terminating 1 92s

...

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 19m

mysql-1 2/2 Running 1 18m

mysql-2 2/2 Terminating 1 17m

...

root@master1:~# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h

mysql-0 2/2 Running 0 20m

mysql-1 2/2 Running 1 19m

...

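2.9 Client writes

Only read queries can use the load-balanced client Service. Because there is only one MySQL master, clients should connect directly to the MySQL master Pod (through its DNS entry in the headless Service) to perform writes.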
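
As a minimal sketch of that read/write split, the hypothetical helper below picks a host name by operation type. The two names come from the Service definitions in section 2.5 (mysql-0.mysql for the master Pod, mysql-read for the load-balanced Service) and are assumed to be resolved from inside the test namespace.

```shell
#!/bin/sh
# Hypothetical helper: choose the MySQL host for an operation type.
mysql_host_for() {
    case "$1" in
        write) echo "mysql-0.mysql" ;;   # master Pod via the headless Service
        read)  echo "mysql-read" ;;      # load-balanced Service for reads
        *)     echo "unknown operation: $1" >&2; return 1 ;;
    esac
}

echo "writes go to: $(mysql_host_for write)"
echo "reads go to:  $(mysql_host_for read)"
```

An application would use the returned name as its connection host, for example with the mysql client's -h option.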

root@deploy:~# kubectl get pod -n test -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-devops-redis-5f874fd856-65z8f 1/1 Running 1 8h 10.200.255.150 172.16.244.111 <none> <none>

mysql-0 2/2 Running 0 5m39s 10.200.99.77 172.16.244.112 <none> <none>

mysql-1 2/2 Running 0 3m55s 10.200.147.202 172.16.244.113 <none> <none>

mysql-2 2/2 Running 0 7m44s 10.200.255.153 172.16.244.111 <none> <none>

...

root@master1:~# kubectl exec -it deploy-devops-redis-5f874fd856-65z8f -n test bash

[root@deploy-devops-redis-5f874fd856-65z8f /]# ping mysql-0.mysql

PING mysql-0.mysql.test.svc.k8s.local (10.200.99.77) 56(84) bytes of data.

64 bytes from mysql-0.mysql.test.svc.k8s.local (10.200.99.77): icmp_seq=1 ttl=62 time=0.594 ms

64 bytes from mysql-0.mysql.test.svc.k8s.local (10.200.99.77): icmp_seq=2 ttl=62 time=0.415 ms

64 bytes from mysql-0.mysql.test.svc.k8s.local (10.200.99.77): icmp_seq=3 ttl=62 time=0.512 ms

root@deploy:~# kubectl get svc -n test

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mysql ClusterIP None <none> 3306/TCP 30m

mysql-read ClusterIP 10.100.112.152 <none> 3306/TCP 30m

...

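3. Running a Java service: Jenkins

Java war or jar packages are run with the java command. This section uses a jenkins.war deployment as the example and requires Jenkins data to be saved to external storage (NFS or a PVC). For other Java applications, whether data needs to go to external storage depends on the actual requirements.

3.1 Building the Jenkins image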

# tree .

.

├── Dockerfile

├── build-command.sh

├── jenkins-2.164.3.war

├── jenkins-2.190.1.war

└── run_jenkins.sh

0 directories, 5 files

# cat Dockerfile

#Jenkins Version 2.190.1

FROM harbor.k8s.local/k8s/jdk-base:v8.212

MAINTAINER ericzhang "***@***"

ADD jenkins-2.190.1.war /apps/jenkins/

ADD run_jenkins.sh /usr/bin/

EXPOSE 8080

CMD ["/usr/bin/run_jenkins.sh"]

# cat run_jenkins.sh

#!/bin/bash

cd /apps/jenkins && java -server -Xms1024m -Xmx1024m -Xss512k -jar jenkins-2.190.1.war --webroot=/apps/jenkins/jenkins-data --httpPort=8080

# chmod a+x *.sh # Make run_jenkins.sh executable

# cat build-command.sh

#!/bin/bash

docker build -t harbor.k8s.local/k8s/jenkins:v2.190.1 .

echo "Image build complete; pushing to the Harbor server"

sleep 1

docker push harbor.k8s.local/k8s/jenkins:v2.190.1

echo "Image push complete"

3.2 Verifying the image

docker run -it --rm -p 8088:8080 harbor.k8s.local/k8s/jenkins:v2.190.1

3.3 PV/PVC/Deployment YAML files

# tree .

.

├── jenkins.yaml

└── pv

    ├── jenkins-persistentvolume.yaml

    └── jenkins-persistentvolumeclaim.yaml

1 directory, 3 files

# cat pv/jenkins-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-datadir-pv
  namespace: test
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 172.16.244.141
    path: /data/k8sdata/jenkins-data
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-root-datadir-pv
  namespace: test
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 172.16.244.141
    path: /data/k8sdata/jenkins-root-data

# cat pv/jenkins-persistentvolumeclaim.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-datadir-pvc
  namespace: test
spec:
  volumeName: jenkins-datadir-pv
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 80Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-root-data-pvc
  namespace: test
spec:
  volumeName: jenkins-root-datadir-pv
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 80Gi

# cat jenkins.yaml

apiVersion: apps/v1
#apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: test-jenkins
  name: test-jenkins-deployment
  namespace: test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-jenkins
  template:
    metadata:
      labels:
        app: test-jenkins
    spec:
      containers:
        - name: test-jenkins-container
          image: harbor.k8s.local/k8s/jenkins:v2.190.1
          #imagePullPolicy: IfNotPresent
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
              protocol: TCP
              name: http
          volumeMounts:
            - mountPath: "/apps/jenkins/jenkins-data/"
              name: jenkins-datadir-test
            - mountPath: "/root/.jenkins"
              name: jenkins-root-datadir
      volumes:
        - name: jenkins-datadir-test
          persistentVolumeClaim:
            claimName: jenkins-datadir-pvc
        - name: jenkins-root-datadir
          persistentVolumeClaim:
            claimName: jenkins-root-data-pvc
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: test-jenkins
  name: test-jenkins-service
  namespace: test
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
      nodePort: 38080
  selector:
    app: test-jenkins

3.6 Creating the PV/PVC and running the Jenkins service

# kubectl apply -f pv/jenkins-persistentvolume.yaml

# kubectl apply -f pv/jenkins-persistentvolumeclaim.yaml

# kubectl apply -f jenkins.yaml

3.7 Verifying the Pod and the service

# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

...

test-jenkins-deployment-58d6bfbcfb-sst2c 1/1 Running 0 2m2s

...

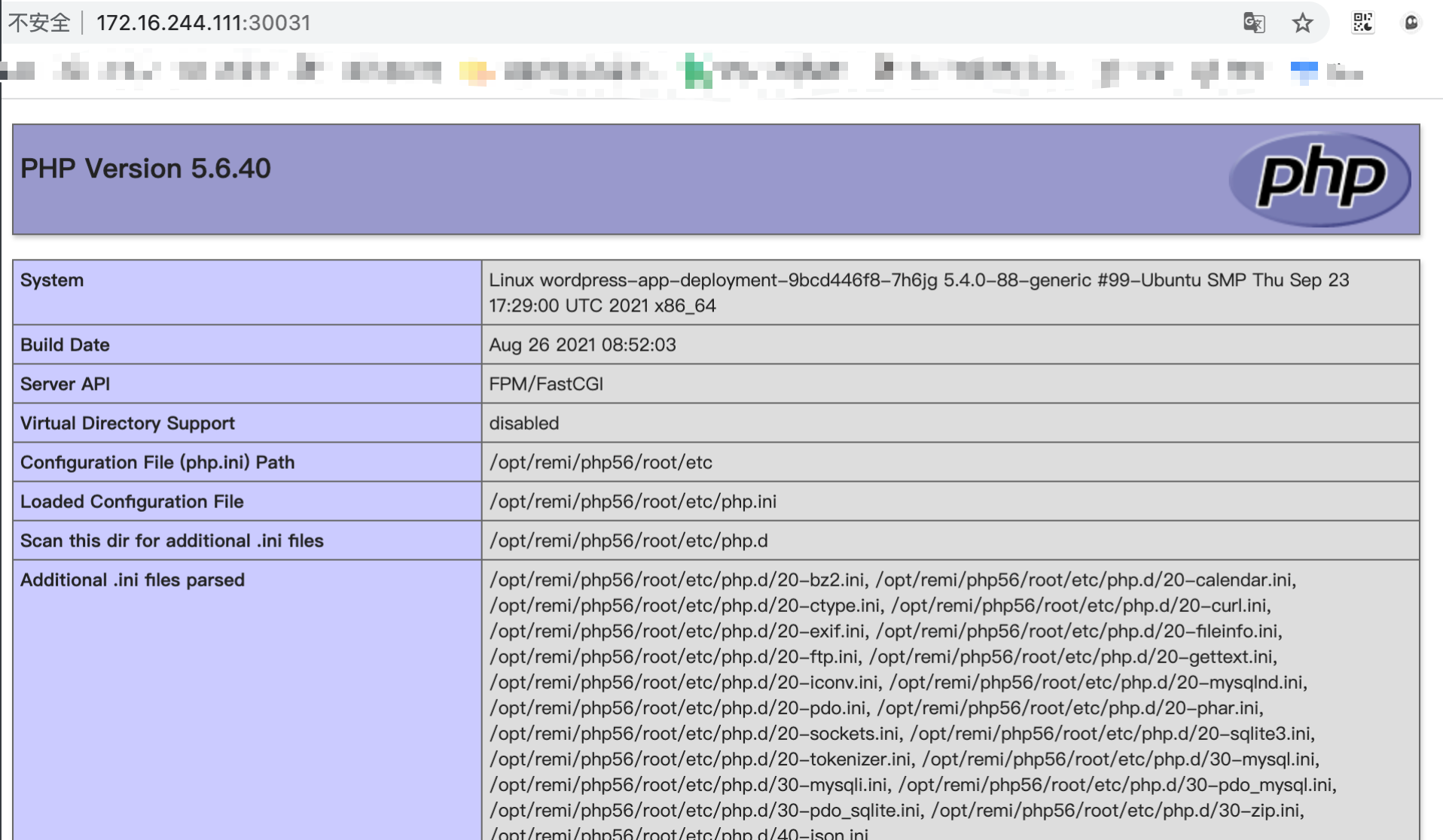

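4. A fully containerized web site on K8S with Nginx+PHP+WordPress+MySQL

LNMP example: a WordPress blog site built on Nginx+PHP. Nginx and PHP must run as separate containers in the same Pod, and MySQL runs in the default namespace and can be queried and modified through its service name.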

https://cn.wordpress.org/

https://cn.wordpress.org/download/releases/

4.1 Preparing the PHP image

4.1.1 Official image

https://hub.docker.com/

# docker pull php:5.6.40-fpm

# docker tag php:5.6.40-fpm harbor.magedu.net/linux36/php:5.6.40-fpm

# docker push harbor.magedu.net/linux36/php:5.6.40-fpm

4.1.2 Custom-built image

# tree

.

├── Dockerfile

├── build-command.sh

├── run_php.sh

└── www.conf

0 directories, 4 files

# cat Dockerfile

#PHP Base Image

FROM harbor.k8s.local/k8s/centos-base:7.9.2009

MAINTAINER xxx@xxx

RUN yum install -y https://mirrors.tuna.tsinghua.edu.cn/remi/enterprise/remi-release-7.rpm && yum install php56-php-fpm php56-php-mysql -y

ADD www.conf /opt/remi/php56/root/etc/php-fpm.d/www.conf

#RUN useradd nginx -u 2019

ADD run_php.sh /usr/local/bin/run_php.sh

EXPOSE 9000

CMD ["/usr/local/bin/run_php.sh"]

# cat run_php.sh

#!/bin/bash

#echo "nameserver 10.20.254.254" > /etc/resolv.conf

/opt/remi/php56/root/usr/sbin/php-fpm

#/opt/remi/php56/root/usr/sbin/php-fpm --nodaemonize

tail -f /etc/hosts

# cat www.conf | grep -Ev "^;|^$"

[www]

user = nginx

group = nginx

listen = 0.0.0.0:9000

pm = dynamic

pm.max_children = 50

pm.start_servers = 5

pm.min_spare_servers = 5

pm.max_spare_servers = 35

slowlog = /opt/remi/php56/root/var/log/php-fpm/www-slow.log

php_admin_value[error_log] = /opt/remi/php56/root/var/log/php-fpm/www-error.log

php_admin_flag[log_errors] = on

php_value[session.save_handler] = files

php_value[session.save_path] = /opt/remi/php56/root/var/lib/php/session

php_value[soap.wsdl_cache_dir] = /opt/remi/php56/root/var/lib/php/wsdlcache

# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.k8s.local/k8s/wordpress-php-5.6:${TAG} .

echo "Image build complete; pushing to the Harbor server"

sleep 1

docker push harbor.k8s.local/k8s/wordpress-php-5.6:${TAG}

echo "Image push complete"

4.2 Preparing the nginx image

4.2.1 Base image

# cat Dockerfile

#Nginx Base Image

FROM harbor.k8s.local/k8s/centos-base:7.9.2009

MAINTAINER xxx@xxx

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

ADD nginx-1.14.2.tar.gz /usr/local/src/

RUN cd /usr/local/src/nginx-1.14.2 && ./configure --prefix=/apps/nginx && make && make install && ln -sv /apps/nginx/sbin/nginx /usr/sbin/nginx &&rm -rf /usr/local/src/nginx-1.14.2.tar.gz

# cat build-command.sh

#!/bin/bash

docker build -t harbor.k8s.local/k8s/nginx-base-wordpress:v1.14.2 .

sleep 1

docker push harbor.k8s.local/k8s/nginx-base-wordpress:v1.14.2

# tree

.

├── Dockerfile

├── build-command.sh

└── nginx-1.14.2.tar.gz

0 directories, 3 files

4.2.2 Service image

# tree

.

├── Dockerfile

├── build-command.sh

├── index.html

├── nginx.conf

└── run_nginx.sh

# cat Dockerfile

#FROM harbor.magedu.local/pub-images/nginx-base-wordpress:v1.14.2

FROM harbor.k8s.local/k8s/nginx-base-wordpress:v1.14.2

ADD nginx.conf /apps/nginx/conf/nginx.conf

ADD run_nginx.sh /apps/nginx/sbin/run_nginx.sh

RUN mkdir -pv /home/nginx/wordpress

RUN chown nginx.nginx /home/nginx/wordpress/ -R

EXPOSE 80 443

CMD ["/apps/nginx/sbin/run_nginx.sh"]

# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.k8s.local/k8s/wordpress-nginx:${TAG} .

echo "Image build complete; pushing to the Harbor server"

sleep 1

docker push harbor.k8s.local/k8s/wordpress-nginx:${TAG}

echo "Image push complete"

# cat nginx.conf

user nginx nginx;

worker_processes auto;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

#daemon off;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

client_max_body_size 10M;

client_body_buffer_size 16k;

client_body_temp_path /apps/nginx/tmp 1 2 2;

gzip on;

server {

listen 80;

server_name blogs.magedu.net;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root /home/nginx/wordpress;

index index.php index.html index.htm;

#if ($http_user_agent ~ "ApacheBench|WebBench|TurnitinBot|Sogou web spider|Grid Service") {

# proxy_pass http://www.baidu.com;

# #return 403;

#}

}

location ~ \.php$ {

root /home/nginx/wordpress;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

#fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl;

# server_name localhost;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 5m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}

# cat run_nginx.sh

#!/bin/bash

#echo "nameserver 10.20.254.254" > /etc/resolv.conf

#chown nginx.nginx /home/nginx/wordpress/ -R

/apps/nginx/sbin/nginx

tail -f /etc/hosts

4.3 Running the WordPress site

# cat wordpress.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: wordpress-app
  name: wordpress-app-deployment
  namespace: test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress-app
  template:
    metadata:
      labels:
        app: wordpress-app
    spec:
      containers:
        - name: wordpress-app-nginx
          image: harbor.k8s.local/k8s/wordpress-nginx:v1
          imagePullPolicy: Always
          ports:
            - containerPort: 80
              protocol: TCP
              name: http
            - containerPort: 443
              protocol: TCP
              name: https
          volumeMounts:
            - name: wordpress
              mountPath: /home/nginx/wordpress
              readOnly: false
        - name: wordpress-app-php
          image: harbor.k8s.local/k8s/wordpress-php-5.6:v1
          #imagePullPolicy: IfNotPresent
          imagePullPolicy: Always
          ports:
            - containerPort: 9000
              protocol: TCP
              name: http
          volumeMounts:
            - name: wordpress
              mountPath: /home/nginx/wordpress
              readOnly: false
      volumes:
        - name: wordpress
          nfs:
            server: 172.16.244.141
            path: /data/k8sdata/wordpress
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: wordpress-app
  name: wordpress-app-spec
  namespace: test
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 80
      nodePort: 30031
    - name: https
      port: 443
      protocol: TCP
      targetPort: 443
      nodePort: 30033
  selector:
    app: wordpress-app

# kubectl apply -f wordpress.yaml

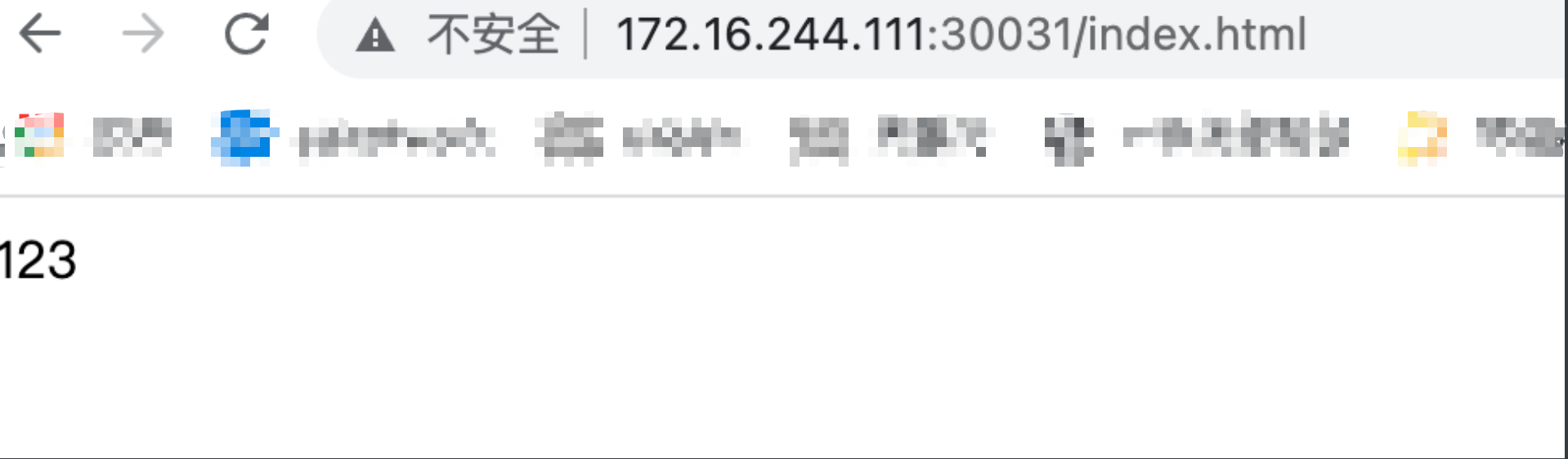

- Test

root@ha1:/data/k8sdata/wordpress# ls

index.html index.php

root@ha1:/data/k8sdata/wordpress# cat index.php

<?php

phpinfo();

?>

root@ha1:/data/k8sdata/wordpress# cat index.html

123

4.4 Initializing the WordPress site

4.4.1 Uploading the WordPress code

# Upload the WordPress code (directly into the NFS directory)

root@ha1:/data/k8sdata/wordpress# tar xf wordpress-5.8.1-zh_CN.tar.gz

root@ha1:/data/k8sdata/wordpress# mv wordpress/* .

root@ha1:/data/k8sdata/wordpress# mv wordpress-5.8.1-zh_CN.tar.gz /opt/

root@ha1:/data/k8sdata/wordpress# ls

index.php readme.html wp-activate.php wp-blog-header.php wp-config-sample.php wp-cron.php wp-links-opml.php wp-login.php wp-settings.php wp-trackback.php

license.txt wordpress wp-admin wp-comments-post.php wp-content wp-includes wp-load.php wp-mail.php wp-signup.php xmlrpc.php

4.4.2 Creating the database in MySQL on K8S

# kubectl exec -it mysql-0 -n test bash # Must connect to the master

root@mysql-0:/# mysql

mysql> CREATE DATABASE wordpress;

mysql> GRANT ALL PRIVILEGES ON wordpress.* TO "wordpress"@"%" IDENTIFIED BY "wordpress";

4.4.3 Testing the MySQL connection on K8S

root@mysql-0:/# mysql -uwordpress -hmysql-0.mysql -pwordpress

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| wordpress |

+--------------------+

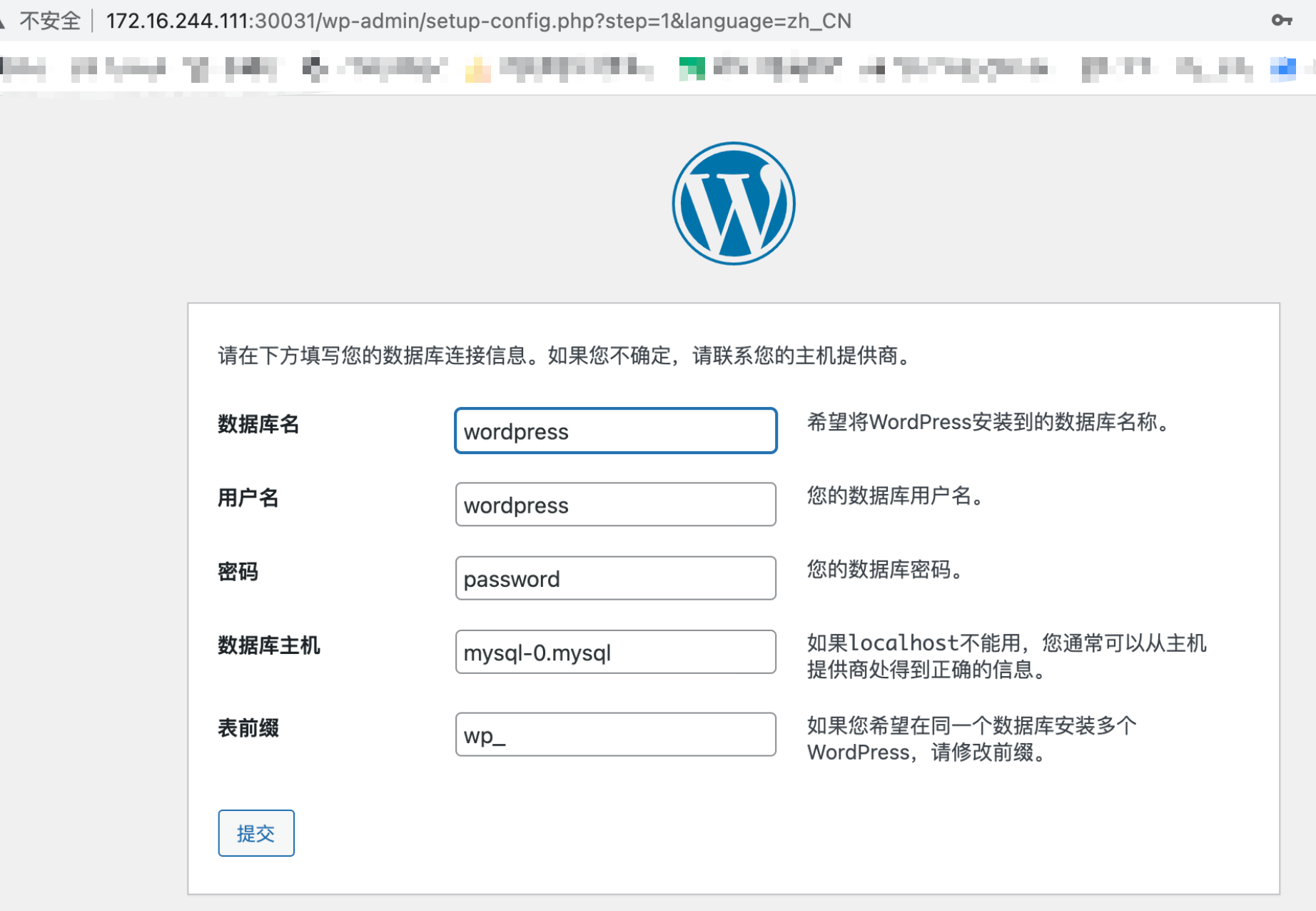

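4.4.4 Initializing the database through the web interface

Note: if the database host is not in the same namespace as WordPress, enter the database's fully qualified service name (mysql-0.mysql.test.svc.k8s.local) to reach it across namespaces.

If the files cannot be created automatically, change the ownership of the data directory (the directory on the backend storage). If it still fails after that, delete the Pod and let it be recreated automatically.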

root@ha1:/data/k8sdata# ll -d wordpress/

drwxr-xr-x 6 root root 4096 Oct 20 14:22 wordpress//

root@ha1:/data/k8sdata# chown 2021.2021 wordpress/ -R

root@ha1:/data/k8sdata# ll -d wordpress/

drwxr-xr-x 6 2021 2021 4096 Oct 20 14:22 wordpress//

root@ha1:/data/k8sdata# ll -d wordpress/*

-rw-r--r-- 1 2021 2021 405 Feb 6 2020 wordpress/index.php

-rw-r--r-- 1 2021 2021 19915 Jan 1 2021 wordpress/license.txt

-rw-r--r-- 1 2021 2021 7346 Jul 6 20:23 wordpress/readme.html

drwxr-xr-x 2 2021 2021 6 Oct 20 14:11 wordpress/wordpress/

-rw-r--r-- 1 2021 2021 7165 Jan 21 2021 wordpress/wp-activate.php

drwxr-xr-x 9 2021 2021 4096 Sep 19 15:00 wordpress/wp-admin/

-rw-r--r-- 1 2021 2021 351 Feb 6 2020 wordpress/wp-blog-header.php

-rw-r--r-- 1 2021 2021 2328 Feb 17 2021 wordpress/wp-comments-post.php

-rw-r--r-- 1 2021 2021 3004 May 21 18:40 wordpress/wp-config-sample.php

-rw-r--r-- 1 2021 2021 3195 Oct 20 14:22 wordpress/wp-config.php

drwxr-xr-x 5 2021 2021 69 Sep 19 15:00 wordpress/wp-content/

-rw-r--r-- 1 2021 2021 3939 Jul 31 2020 wordpress/wp-cron.php

drwxr-xr-x 25 2021 2021 8192 Sep 19 15:00 wordpress/wp-includes/

-rw-r--r-- 1 2021 2021 2496 Feb 6 2020 wordpress/wp-links-opml.php

-rw-r--r-- 1 2021 2021 3900 May 16 01:38 wordpress/wp-load.php

-rw-r--r-- 1 2021 2021 45463 Apr 7 2021 wordpress/wp-login.php

-rw-r--r-- 1 2021 2021 8509 Apr 14 2020 wordpress/wp-mail.php

-rw-r--r-- 1 2021 2021 22297 Jun 2 07:09 wordpress/wp-settings.php

-rw-r--r-- 1 2021 2021 31693 May 8 04:16 wordpress/wp-signup.php

-rw-r--r-- 1 2021 2021 4747 Oct 9 2020 wordpress/wp-trackback.php

-rw-r--r-- 1 2021 2021 3236 Jun 9 2020 wordpress/xmlrpc.php

root@deploy:~# kubectl exec -it wordpress-app-deployment-9bcd446f8-7h6jg -c wordpress-app-nginx -n test bash

[root@wordpress-app-deployment-9bcd446f8-7h6jg /]# cat /etc/passwd | grep nginx

nginx:x:2021:2021::/home/nginx:/bin/bash

# Recreate the pod

root@deploy:~# kubectl delete pod wordpress-app-deployment-9bcd446f8-7h6jg -n test
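The fix above works because the files on the backend storage end up owned by the same UID/GID (2021) that the nginx user has inside the container. As a rough sketch, the ownership of the whole tree can be checked before recreating the pod. The function name `find_wrong_owner` is hypothetical, and the script is assumed to run on the storage host where the directory lives.

```python
import os
from typing import List


def find_wrong_owner(root: str, uid: int, gid: int) -> List[str]:
    """Return every path under root (root included) not owned by uid:gid."""

    def wrong(path: str) -> bool:
        st = os.lstat(path)  # lstat: do not follow symlinks
        return st.st_uid != uid or st.st_gid != gid

    mismatched = [root] if wrong(root) else []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if wrong(path):
                mismatched.append(path)
    return mismatched
```

For example, `find_wrong_owner("/data/k8sdata/wordpress", 2021, 2021)` should return an empty list once the `chown` has been applied recursively.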

4.4.5 WordPress database connection configuration

# cat wp-config-sample.php

<?php

/**

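* The base configuration file for WordPress.

*

* The installer uses this file to generate wp-config.php automatically.

* You do not have to use the web site; you can copy this file manually,

* rename it to "wp-config.php", and fill in the values.

*

* This file contains the following configuration options:

*

* * MySQL settings

* * Secret keys

* * Database table prefix

* * ABSPATH

*

* @link https://codex.wordpress.org/zh-cn:%E7%BC%96%E8%BE%91_wp-config.php

*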

* @package WordPress

*/

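// ** MySQL settings - You can get this info from your web host ** //

/** The name of the database for WordPress */

define('DB_NAME', 'wordpress');

/** MySQL database username */

define('DB_USER', 'wordpress');

/** MySQL database password */

define('DB_PASSWORD', 'wordpress');

/** MySQL hostname */

define('DB_HOST', 'mysql-0.mysql');

/** Database charset to use in creating database tables. */

define('DB_CHARSET', 'utf8');

/** The database collate type. Don't change this if in doubt. */

define('DB_COLLATE', '');

/**#@+

* Authentication unique keys.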

.............................

root@ha1:/data/k8sdata/wordpress# cat wp-config.php

<?php

/**

* The base configuration for WordPress

*

* The wp-config.php creation script uses this file during the installation.

* You don't have to use the web site, you can copy this file to "wp-config.php"

* and fill in the values.

*

* This file contains the following configurations:

*

* * MySQL settings

* * Secret keys

* * Database table prefix

* * ABSPATH

*

* @link https://wordpress.org/support/article/editing-wp-config-php/

*

* @package WordPress

*/

// ** MySQL settings - You can get this info from your web host ** //

/** The name of the database for WordPress */

define( 'DB_NAME', 'wordpress' );

/** MySQL database username */

define( 'DB_USER', 'wordpress' );

/** MySQL database password */

define( 'DB_PASSWORD', 'wordpress' );

/** MySQL hostname */

define( 'DB_HOST', 'mysql-0.mysql' );

/** Database charset to use in creating database tables. */

define( 'DB_CHARSET', 'utf8mb4' );

/** The database collate type. Don't change this if in doubt. */

define( 'DB_COLLATE', '' );

/**#@+

* Authentication unique keys and salts.

*

* Change these to different unique phrases! You can generate these using

* the {@link https://api.wordpress.org/secret-key/1.1/salt/ WordPress.org secret-key service}.

*

* You can change these at any point in time to invalidate all existing cookies.

* This will force all users to have to log in again.

*

* @since 2.6.0

*/

define( 'AUTH_KEY', 'u@JyQUtO?a6xD0p31va>3kA5lT7:&sEraX5f,{YsH:=$B}30f$k`2tMvWdMDs.eD' );

define( 'SECURE_AUTH_KEY', 'qyyuFnfapYOZx>.|8lfb%ARw$RQNN M|PYnqYLFG#hNhOZbygt7^M7Na4WQezUkW' );

define( 'LOGGED_IN_KEY', 'a#UkCT~zp1pQ*!Je`@iK~DdOk3A5vW5Z<GE 5diz=s}6WMc;fSneETY^6Xc#LqZ' );

define( 'NONCE_KEY', '?1/wz=G6,1,#FBS89aqdiiAxg8-Zq3i 4B3>riI#Q.L`LCq|o{ZXNpo7Cuas*e~u' );

define( 'AUTH_SALT', 'z;DSO4>L^i3;ya1LS_i_|qe+^`IfL=7*Gv2Uo`7(D_g+F W:n7GnU2UG{rP,&D!O' );

define( 'SECURE_AUTH_SALT', '(l;R+0$nw*I2c;Dz7FzM2Aih_>ENvO]{#($T,8$kV#I9ty#RMTjz)zX{KWuF>`X^' );

define( 'LOGGED_IN_SALT', 'aPZu/wtAq$oV;tMnc@Mb-=(-ki[rb;bHPOk~Rp>EQT~kcPWUPM#O+bB)P|ajiV]1' );

define( 'NONCE_SALT', 'iz`7Z$CQ7#!*-ruDBB3BLg)fiIu{6piT5|-NYf[81b,c$|t-vMj*d>PMgPE/vF]6' );

/**#@-*/

/**

* WordPress database table prefix.

*

* You can have multiple installations in one database if you give each

* a unique prefix. Only numbers, letters, and underscores please!

*/

$table_prefix = 'wp_';

/**

* For developers: WordPress debugging mode.

*

* Change this to true to enable the display of notices during development.

* It is strongly recommended that plugin and theme developers use WP_DEBUG

* in their development environments.

*

* For information on other constants that can be used for debugging,

* visit the documentation.

*

* @link https://wordpress.org/support/article/debugging-in-wordpress/

*/

define( 'WP_DEBUG', false );

/* Add any custom values between this line and the "stop editing" line. */

/* That's all, stop editing! Happy publishing. */

/** Absolute path to the WordPress directory. */

if ( ! defined( 'ABSPATH' ) ) {

define( 'ABSPATH', __DIR__ . '/' );

}

/** Sets up WordPress vars and included files. */

require_once ABSPATH . 'wp-settings.php';

4.5 Verify the MySQL data in K8S

root@deploy:~# kubectl exec -it mysql-0 -n test bash

mysql> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| wordpress |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.01 sec)

mysql> use wordpress;

Database changed

mysql> show tables;

+-----------------------+

| Tables_in_wordpress |

+-----------------------+

| wp_commentmeta |

| wp_comments |

| wp_links |

| wp_options |

| wp_postmeta |

| wp_posts |

| wp_term_relationships |

| wp_term_taxonomy |

| wp_termmeta |

| wp_terms |

| wp_usermeta |

| wp_users |

+-----------------------+

12 rows in set (0.00 sec)

root@deploy:~# kubectl exec -it mysql-1 -n test bash

mysql> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| wordpress |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.01 sec)

mysql> use wordpress;

Database changed

mysql> show tables;

+-----------------------+

| Tables_in_wordpress |

+-----------------------+

| wp_commentmeta |

| wp_comments |

| wp_links |

| wp_options |

| wp_postmeta |

| wp_posts |

| wp_term_relationships |

| wp_term_taxonomy |

| wp_termmeta |

| wp_terms |

| wp_usermeta |

| wp_users |

+-----------------------+

12 rows in set (0.00 sec)
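The two listings above are compared by eye to confirm that the replica (mysql-1) has the same tables as the primary (mysql-0). The same comparison can be sketched in Python by parsing the ASCII tables that the `mysql` client prints. Both function names are hypothetical, and the input is assumed to be the captured text of `show tables;` from each pod.

```python
from typing import List


def parse_mysql_table(output: str) -> List[str]:
    """Extract the first-column values from mysql's ASCII table output."""
    rows = []
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip borders, prompts and the "N rows in set" footer
        rows.append(line.strip("|").split("|")[0].strip())
    return rows[1:]  # the first "|" row is the column header


def missing_on_replica(primary_output: str, replica_output: str) -> List[str]:
    """Names present on the primary but absent on the replica, sorted."""
    primary = set(parse_mysql_table(primary_output))
    replica = set(parse_mysql_table(replica_output))
    return sorted(primary - replica)
```

An empty result means every table seen on the primary also exists on the replica. It does not prove that the row data is in sync.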

WordPress account: admin/abc@111@b

5. Running a dubbo + zookeeper microservice example on K8S

Run the Dubbo provider and consumer example. https://dubbo.apache.org/zh/docs/references/registry/zookeeper/

Official website: https://dubbo.apache.org/zh/

Dubbo-demo: https://codeup.aliyun.com/614c9d1b3a631c643ae62857/Scripts.git

5.1 Microservice basics

5.1.1 What is a monolithic service / monolithic application

https://about.jd.com/

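Drawbacks: as the business grows and the development team expands, the following problems appear:

- Deployment efficiency

A single code base of tens or hundreds of megabytes takes a long time to compile and deploy.

- Team collaboration

A monolithic application contains many features, but each feature may be developed by a separate team, so packaging and building all the code together becomes difficult.

- Business stability

The code running in a single Tomcat becomes too bloated, which leads to problems such as out-of-memory errors.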

5.1.2 What are microservices

https://spring.io/

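- Microservices split a monolithic application into multiple applications. Each application runs in its own runtime environment, applications call each other through defined interfaces and protocols, and each application's code can be upgraded without affecting the others.

- Ways to split into microservices:

- Horizontal split:

Split by business function, such as payment, orders, login, and logistics.

- Vertical split:

Split the components of one business further, for example splitting the payment system into WeChat Pay, UnionPay, and Alipay.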

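5.1.3 Key elements of implementing microservices

- How microservices are deployed (docker)

- How microservices discover each other (registry, service discovery)

- How microservices call each other (service access -> RESTful API)

- How microservices scale out quickly (service governance)

- How microservices are monitored (service monitoring)

- How microservices are upgraded and rolled back (CI/CD)

- How microservice access logs are viewed (ELK)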

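5.1.4 Microservice development environment

- Spring Boot: a rapid development framework with an embedded Servlet container.

https://mvnrepository.com/artifact/org.springframework.boot/spring-boot-starter-tomcat/1.5.11.RELEASE

- Spring Cloud: built on Spring Boot, it provides a complete set of solutions for architectural problems in microservice development: service registration and discovery, service consumption, service protection and circuit breaking, gateways, distributed tracing, distributed configuration management, and so on.

https://spring.io/projects/spring-cloud-alibaba

- Dubbo: a distributed service governance framework open-sourced by Alibaba. It also offers service registration and discovery, service consumption, and similar features. It appeared earlier than Spring Cloud and has fewer features, but many internet companies in China still use Dubbo to implement microservices.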

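5.1.5 Differences between Spring Boot and Spring Cloud

- Spring Boot is just a rapid development framework: it uses annotations to simplify XML configuration, embeds a Servlet container, and runs as a plain Java application.

- Spring Cloud is a collection of frameworks, which can include Spring Boot.

- Spring Boot focuses on conveniently developing individual microservices.

- Spring Cloud is a framework for coordinating and governing microservices as a whole. It integrates and manages the individual microservices built with Spring Boot and provides them with integrated services such as configuration management, service discovery, circuit breakers, routing, micro-proxy, event bus, leader election, and distributed sessions.

- Spring Boot follows the idea of convention over configuration: many integration choices are already made for you, so you configure only what you must. A large part of Spring Cloud is built on Spring Boot.

- Spring Boot can be used on its own without Spring Cloud, whereas Spring Cloud depends on Spring Boot.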

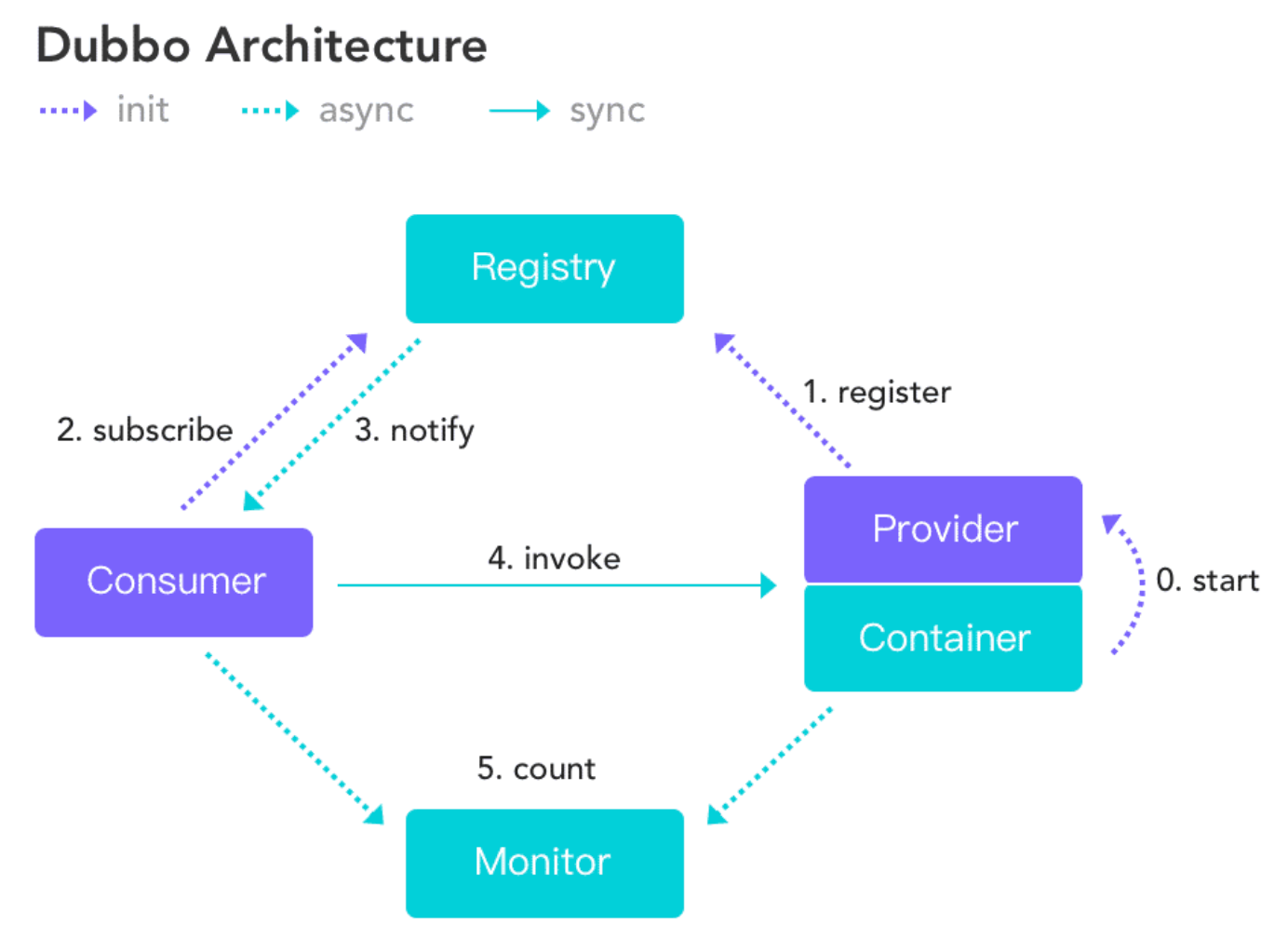

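5.1.6 Dubbo architecture

There are many ways to implement service discovery. Dubbo provides a client-based service discovery mechanism, which usually requires deploying an additional third-party registry component to coordinate the discovery process, such as the commonly used Nacos, Consul, or Zookeeper. Dubbo itself integrates with several registry components, so users can choose flexibly.

The registry is a core component of service discovery: a Provider registers its address with the registry, and a Consumer reads and subscribes to the Provider address list from the registry.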
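The register / read / subscribe flow described above can be illustrated with a toy in-memory registry. This is only a sketch of the idea and not how Dubbo or Zookeeper implement it. The class `Registry`, the service name `DemoService` and the addresses in the usage note are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Listener = Callable[[List[str]], None]


class Registry:
    """Toy in-memory registry: providers register, consumers subscribe."""

    def __init__(self) -> None:
        self._providers: Dict[str, List[str]] = defaultdict(list)
        self._listeners: Dict[str, List[Listener]] = defaultdict(list)

    def register(self, service: str, address: str) -> None:
        """Provider side: publish an address, then notify subscribers."""
        if address not in self._providers[service]:
            self._providers[service].append(address)
        self._notify(service)

    def unregister(self, service: str, address: str) -> None:
        """Provider side: withdraw an address, then notify subscribers."""
        if address in self._providers[service]:
            self._providers[service].remove(address)
        self._notify(service)

    def subscribe(self, service: str, listener: Listener) -> None:
        """Consumer side: get the current list now and every later change."""
        self._listeners[service].append(listener)
        listener(list(self._providers[service]))

    def _notify(self, service: str) -> None:
        for listener in self._listeners[service]:
            listener(list(self._providers[service]))
```

A consumer would call `registry.subscribe("DemoService", on_change)` and keep the latest address list it receives. Because the consumer then picks an address and calls the provider directly, the discovery is client-based.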

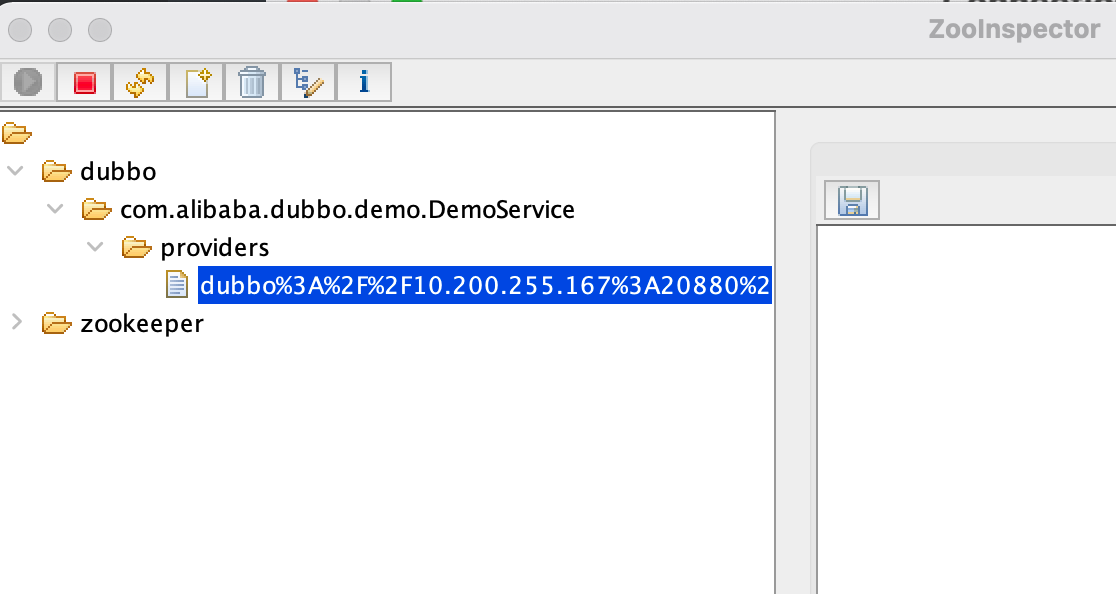

5.2 Running the provider

.

├── Dockerfile

├── build-command.sh

├── dubbo-demo-provider-2.1.5

│ ├── bin

│ │ ├── dump.sh

│ │ ├── restart.sh

│ │ ├── server.sh

│ │ ├── start.bat

│ │ ├── start.sh

│ │ └── stop.sh

│ ├── conf

│ │ └── dubbo.properties

│ └── lib

│ ├── cache-api-0.4.jar

│ ├── commons-codec-1.4.jar

│ ├── commons-logging-1.1.1.jar

│ ├── commons-pool-1.5.5.jar

│ ├── dubbo-2.1.5.jar

│ ├── dubbo-demo-2.1.5.jar

│ ├── dubbo-demo-provider-2.1.5.jar

│ ├── fastjson-1.1.8.jar

│ ├── gmbal-api-only-3.0.0-b023.jar

│ ├── grizzly-core-2.1.4.jar

│ ├── grizzly-framework-2.1.4.jar

│ ├── grizzly-portunif-2.1.4.jar

│ ├── grizzly-rcm-2.1.4.jar

│ ├── hessian-4.0.7.jar

│ ├── hibernate-validator-4.2.0.Final.jar

│ ├── httpclient-4.1.2.jar

│ ├── httpcore-4.1.2.jar

│ ├── javassist-3.15.0-GA.jar

│ ├── jedis-2.0.0.jar

│ ├── jetty-6.1.26.jar

│ ├── jetty-util-6.1.26.jar

│ ├── jline-0.9.94.jar

│ ├── log4j-1.2.16.jar

│ ├── management-api-3.0.0-b012.jar

│ ├── mina-core-1.1.7.jar

│ ├── netty-3.2.5.Final.jar

│ ├── servlet-api-2.5-20081211.jar

│ ├── slf4j-api-1.6.2.jar

│ ├── spring-2.5.6.SEC03.jar

│ ├── validation-api-1.0.0.GA.jar

│ └── zookeeper-3.3.3.jar

├── dubbo-demo-provider-2.1.5-assembly.tar.gz

└── run_java.sh

# cat dubbo.properties

dubbo.container=log4j,spring

dubbo.application.name=demo-provider

dubbo.application.owner=

#dubbo.registry.address=multicast://224.5.6.7:1234

dubbo.registry.address=zookeeper://zookeeper1.test.svc.k8s.local:2181 | zookeeper://zookeeper2.test.svc.k8s.local:2181 | zookeeper://zookeeper3.test.svc.k8s.local:2181

#dubbo.registry.address="zookeeper://zookeeper1.linux36.svc.linux36.local:2181,"

#dubbo.registry.address=dubbo://127.0.0.1:9090

dubbo.monitor.protocol=registry

dubbo.protocol.name=dubbo

dubbo.protocol.port=20880

dubbo.log4j.file=logs/dubbo-demo-provider.log

dubbo.log4j.level=WARN
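The `dubbo.registry.address` value above lists three Zookeeper endpoints separated by `|`. As a quick sanity check before building the image, the value can be split and each endpoint inspected. The sketch assumes that entries are separated by `|` or `;` with optional surrounding whitespace, which is my reading of the format and not something taken from the Dubbo source. `parse_registry_address` is a hypothetical helper.

```python
import re
from typing import List, Optional, Tuple
from urllib.parse import urlsplit

Endpoint = Tuple[str, Optional[str], Optional[int]]


def parse_registry_address(value: str) -> List[Endpoint]:
    """Split a registry list into (protocol, host, port) tuples."""
    endpoints = []
    # Assumption: "|" or ";" separates entries, whitespace around it is ignored.
    for part in re.split(r"\s*[|;]+\s*", value.strip()):
        if not part:
            continue
        url = urlsplit(part)
        endpoints.append((url.scheme, url.hostname, url.port))
    return endpoints
```

Each returned host can then be resolved from inside the cluster (for example with `nc`, which the provider image installs) to confirm that the Zookeeper Services exist in the `test` namespace.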

# cat Dockerfile

#Dubbo provider

FROM harbor.k8s.local/k8s/jdk-base:v8.212

MAINTAINER ericzhang "xxx@xxx"

RUN yum install file nc -y

RUN mkdir -p /apps/dubbo/provider

ADD dubbo-demo-provider-2.1.5/ /apps/dubbo/provider

ADD run_java.sh /apps/dubbo/provider/bin

RUN chown nginx.nginx /apps -R

RUN chmod a+x /apps/dubbo/provider/bin/*.sh

CMD ["/apps/dubbo/provider/bin/run_java.sh"]

#cat build-command.sh

#!/bin/bash

docker build -t harbor.k8s.local/k8s/dubbo-demo-provider:v1 .

sleep 3

docker push harbor.k8s.local/k8s/dubbo-demo-provider:v1

# cat run_java.sh

#!/bin/bash

#echo "nameserver 223.6.6.6" > /etc/resolv.conf

#/usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &

su - nginx -c "/apps/dubbo/provider/bin/start.sh"

tail -f /etc/hosts

# chmod a+x *.sh

# bash build-command.sh

# cat provider.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: test-provider

  name: test-provider-deployment

  namespace: test

spec:

  replicas: 1

  selector:

    matchLabels:

      app: test-provider

  template:

    metadata:

      labels:

        app: test-provider

    spec:

      containers:

      - name: test-provider-container

        image: harbor.k8s.local/k8s/dubbo-demo-provider:v1

        #command: ["/apps/tomcat/bin/run_tomcat.sh"]

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 20880

          protocol: TCP

          name: http

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: test-provider

  name: test-provider-spec

  namespace: test

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 20880

    #nodePort: 30001

  selector:

    app: test-provider

# kubectl apply -f provider.yaml
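In provider.yaml the Service forwards port 80 to `targetPort: 20880`, which is the Dubbo protocol port the container declares. A small consistency check can catch a mismatch between the two halves of a manifest. The sketch below works on manifests that are already loaded into plain dictionaries (the loading step is left out), and `unmatched_target_ports` is a hypothetical name. Declaring `containerPort` is informational in Kubernetes, so this is a lint and not a hard requirement.

```python
from typing import Dict, List


def unmatched_target_ports(deployment: Dict, service: Dict) -> List[int]:
    """Return Service targetPorts that no container in the Deployment declares."""
    containers = deployment["spec"]["template"]["spec"]["containers"]
    declared = {
        port["containerPort"]
        for container in containers
        for port in container.get("ports", [])
    }
    return [
        port["targetPort"]
        for port in service["spec"]["ports"]
        if port["targetPort"] not in declared
    ]
```

An empty list means every `targetPort` of the Service points at a port that the pod template declares.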

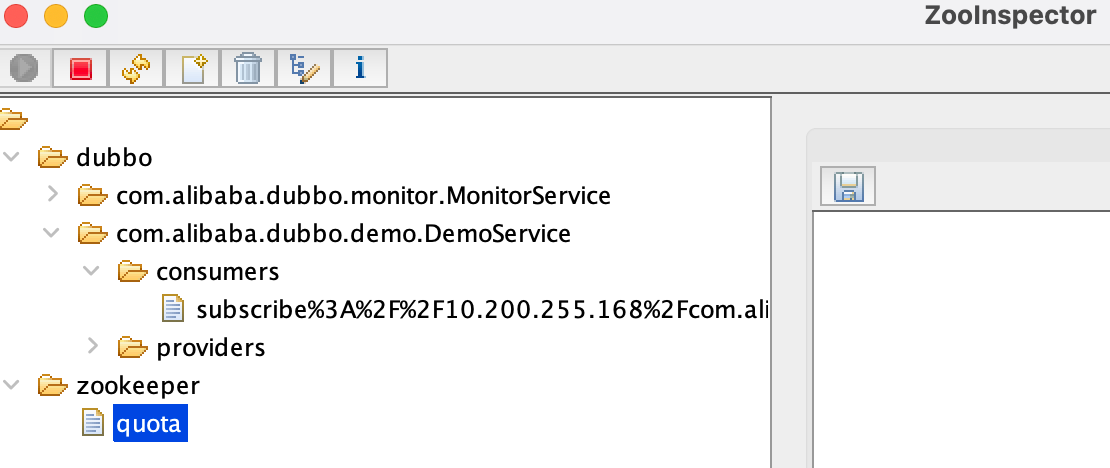

5.3 Running the consumer

root@deploy:~/k8s-data/dockerfile/web/magedu/dubbo/consumer# tree

.

├── Dockerfile

├── build-command.sh

├── dubbo-demo-consumer-2.1.5

│ ├── bin

│ │ ├── dump.sh

│ │ ├── restart.sh

│ │ ├── server.sh

│ │ ├── start.bat

│ │ ├── start.sh

│ │ └── stop.sh

│ ├── conf

│ │ └── dubbo.properties

│ └── lib

│ ├── cache-api-0.4.jar

│ ├── commons-codec-1.4.jar

│ ├── commons-logging-1.1.1.jar

│ ├── commons-pool-1.5.5.jar

│ ├── dubbo-2.1.5.jar

│ ├── dubbo-demo-2.1.5.jar

│ ├── dubbo-demo-consumer-2.1.5.jar

│ ├── fastjson-1.1.8.jar

│ ├── gmbal-api-only-3.0.0-b023.jar

│ ├── grizzly-core-2.1.4.jar

│ ├── grizzly-framework-2.1.4.jar

│ ├── grizzly-portunif-2.1.4.jar

│ ├── grizzly-rcm-2.1.4.jar

│ ├── hessian-4.0.7.jar

│ ├── hibernate-validator-4.2.0.Final.jar

│ ├── httpclient-4.1.2.jar

│ ├── httpcore-4.1.2.jar

│ ├── javassist-3.15.0-GA.jar

│ ├── jedis-2.0.0.jar

│ ├── jetty-6.1.26.jar

│ ├── jetty-util-6.1.26.jar

│ ├── jline-0.9.94.jar

│ ├── log4j-1.2.16.jar

│ ├── management-api-3.0.0-b012.jar

│ ├── mina-core-1.1.7.jar

│ ├── netty-3.2.5.Final.jar

│ ├── servlet-api-2.5-20081211.jar

│ ├── slf4j-api-1.6.2.jar

│ ├── spring-2.5.6.SEC03.jar

│ ├── validation-api-1.0.0.GA.jar

│ └── zookeeper-3.3.3.jar

├── dubbo-demo-consumer-2.1.5-assembly.tar.gz

└── run_java.sh

4 directories, 42 files

# cat dubbo.properties

dubbo.container=log4j,spring

dubbo.application.name=demo-consumer

dubbo.application.owner=

#dubbo.registry.address=multicast://224.5.6.7:1234

dubbo.registry.address=zookeeper://zookeeper1.test.svc.k8s.local:2181 | zookeeper://zookeeper2.test.svc.k8s.local:2181 | zookeeper://zookeeper3.test.svc.k8s.local:2181

#dubbo.registry.address="zookeeper://zookeeper1.linux36.svc.linux36.local:2181,"

#dubbo.registry.address=dubbo://127.0.0.1:9090

dubbo.monitor.protocol=registry

dubbo.log4j.file=logs/dubbo-demo-consumer.log

dubbo.log4j.level=WARN

# cat Dockerfile

#Dubbo consumer

FROM harbor.k8s.local/k8s/jdk-base:v8.212

MAINTAINER ericzhang "xxx@xxx"

RUN yum install file -y

RUN mkdir -p /apps/dubbo/consumer

ADD dubbo-demo-consumer-2.1.5 /apps/dubbo/consumer

ADD run_java.sh /apps/dubbo/consumer/bin

RUN chown nginx.nginx /apps -R

RUN chmod a+x /apps/dubbo/consumer/bin/*.sh

CMD ["/apps/dubbo/consumer/bin/run_java.sh"]

# cat build-command.sh

#!/bin/bash

docker build -t harbor.k8s.local/k8s/dubbo-demo-consumer:v1 .

sleep 3

docker push harbor.k8s.local/k8s/dubbo-demo-consumer:v1

# cat run_java.sh

#!/bin/bash

#echo "nameserver 223.6.6.6" > /etc/resolv.conf

#/usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &

su - nginx -c "/apps/dubbo/consumer/bin/start.sh"

tail -f /etc/hosts

# chmod a+x *.sh

# bash build-command.sh

# cat consumer.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: test-consumer

  name: test-consumer-deployment

  namespace: test

spec:

  replicas: 1

  selector:

    matchLabels:

      app: test-consumer

  template:

    metadata:

      labels:

        app: test-consumer

    spec:

      containers:

      - name: test-consumer-container

        image: harbor.k8s.local/k8s/dubbo-demo-consumer:v1

        #command: ["/apps/tomcat/bin/run_tomcat.sh"]

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 80

          protocol: TCP

          name: http

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: test-consumer

  name: test-consumer-server

  namespace: test

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 80

    #nodePort: 30001

  selector:

    app: test-consumer

# kubectl apply -f consumer.yaml

# kubectl exec -it test-provider-deployment-5b74694449-lzrx5 -n test bash

[root@test-provider-deployment-5b74694449-lzrx5 logs]# pwd

/apps/dubbo/provider/logs

[root@test-provider-deployment-5b74694449-lzrx5 logs]# tail -f *.log

==> dubbo-demo-provider.log <==

at com.alibaba.dubbo.common.bytecode.proxy0.count(proxy0.java)

at com.alibaba.dubbo.monitor.dubbo.DubboMonitor.send(DubboMonitor.java:112)

at com.alibaba.dubbo.monitor.dubbo.DubboMonitor$1.run(DubboMonitor.java:69)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

==> stdout.log <==

[23:56:30] Hello world122, request from consumer: /10.200.255.168:40850

[23:56:32] Hello world123, request from consumer: /10.200.255.168:40850

[23:56:34] Hello world124, request from consumer: /10.200.255.168:40850

[23:56:36] Hello world125, request from consumer: /10.200.255.168:40850

[23:56:38] Hello world126, request from consumer: /10.200.255.168:40850

[23:56:40] Hello world127, request from consumer: /10.200.255.168:40850

[23:56:42] Hello world128, request from consumer: /10.200.255.168:40850

[23:56:44] Hello world129, request from consumer: /10.200.255.168:40850
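The stdout.log lines above show that the provider receives a request from the consumer pod roughly every two seconds. A short parser makes it easy to list which consumers have called the provider. The regular expression is written against the line format shown in this log only, and the names `Request`, `parse_line` and `consumers` are hypothetical.

```python
import re
from typing import List, NamedTuple, Optional


class Request(NamedTuple):
    time: str
    message: str
    consumer_ip: str
    consumer_port: int


# Matches e.g. "[23:56:30] Hello world122, request from consumer: /10.200.255.168:40850"
LINE_RE = re.compile(
    r"^\[(\d{2}:\d{2}:\d{2})\] Hello (\S+), request from consumer: /([\d.]+):(\d+)$"
)


def parse_line(line: str) -> Optional[Request]:
    """Parse one stdout.log line; return None for lines in another format."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    return Request(match.group(1), match.group(2), match.group(3), int(match.group(4)))


def consumers(lines: List[str]) -> List[str]:
    """Distinct consumer IPs seen in the log, sorted."""
    parsed = (parse_line(line) for line in lines)
    return sorted({req.consumer_ip for req in parsed if req is not None})
```

With several consumer replicas, `consumers(...)` would return one IP per consumer pod that reached the provider.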

5.4 Running dubbo admin

root@deploy:~/dubboadmin# tree -L 2

.

├── Dockerfile

├── build-command.sh

├── catalina.sh

├── dubboadmin

│ ├── META-INF

│ ├── SpryAssets

│ ├── WEB-INF

│ ├── crossdomain.xml

│ ├── css

│ ├── favicon.ico

│ ├── images

│ └── js

├── dubboadmin.war

├── dubboadmin.war.bak

├── logging.properties

├── run_tomcat.sh

└── server.xml

# cat dubboadmin/WEB-INF/dubbo.properties

dubbo.registry.address=zookeeper://zookeeper1.test.svc.k8s.local:2181

dubbo.admin.root.password=root

dubbo.admin.guest.password=guest

# cat Dockerfile

#Dubbo dubboadmin

FROM harbor.k8s.local/k8s/tomcat-base:v8.5.43

MAINTAINER ericzhang "xxx@xxx"

RUN yum install unzip -y

ADD server.xml /apps/tomcat/conf/server.xml

ADD logging.properties /apps/tomcat/conf/logging.properties

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

ADD dubboadmin.war /data/tomcat/webapps/dubboadmin.war

RUN cd /data/tomcat/webapps && unzip dubboadmin.war && rm -rf dubboadmin.war && chown -R nginx.nginx /data /apps

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

# cat run_tomcat.sh

#!/bin/bash

su - nginx -c "/apps/tomcat/bin/catalina.sh start"

su - nginx -c "tail -f /etc/hosts"

root@deploy:~/dubboadmin# cat logging.properties | grep -Ev "^#|^$"

handlers = 1catalina.org.apache.juli.AsyncFileHandler, 2localhost.org.apache.juli.AsyncFileHandler, 3manager.org.apache.juli.AsyncFileHandler, 4host-manager.org.apache.juli.AsyncFileHandler, java.util.logging.ConsoleHandler

.handlers = 1catalina.org.apache.juli.AsyncFileHandler, java.util.logging.ConsoleHandler

1catalina.org.apache.juli.AsyncFileHandler.level = FINE

1catalina.org.apache.juli.AsyncFileHandler.directory = /data/tomcat/logs

1catalina.org.apache.juli.AsyncFileHandler.prefix = catalina.

2localhost.org.apache.juli.AsyncFileHandler.level = FINE

2localhost.org.apache.juli.AsyncFileHandler.directory = /data/tomcat/logs

2localhost.org.apache.juli.AsyncFileHandler.prefix = localhost.

3manager.org.apache.juli.AsyncFileHandler.level = FINE

3manager.org.apache.juli.AsyncFileHandler.directory = /data/tomcat/logs

3manager.org.apache.juli.AsyncFileHandler.prefix = manager.

4host-manager.org.apache.juli.AsyncFileHandler.level = FINE

4host-manager.org.apache.juli.AsyncFileHandler.directory = /data/tomcat/logs

4host-manager.org.apache.juli.AsyncFileHandler.prefix = host-manager.

java.util.logging.ConsoleHandler.level = FINE

java.util.logging.ConsoleHandler.formatter = org.apache.juli.OneLineFormatter

org.apache.catalina.core.ContainerBase.[Catalina].[localhost].level = INFO

org.apache.catalina.core.ContainerBase.[Catalina].[localhost].handlers = 2localhost.org.apache.juli.AsyncFileHandler

org.apache.catalina.core.ContainerBase.[Catalina].[localhost].[/manager].level = INFO

org.apache.catalina.core.ContainerBase.[Catalina].[localhost].[/manager].handlers = 3manager.org.apache.juli.AsyncFileHandler

org.apache.catalina.core.ContainerBase.[Catalina].[localhost].[/host-manager].level = INFO

org.apache.catalina.core.ContainerBase.[Catalina].[localhost].[/host-manager].handlers = 4host-manager.org.apache.juli.AsyncFileHandler

root@deploy:~/dubboadmin# cat server.xml

<?xml version="1.0" encoding="UTF-8"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

--><!-- Note: A "Server" is not itself a "Container", so you may not

define subcomponents such as "Valves" at this level.

Documentation at /docs/config/server.html

--><Server port="8005" shutdown="SHUTDOWN">

<Listener className="org.apache.catalina.startup.VersionLoggerListener"/>

<!-- Security listener. Documentation at /docs/config/listeners.html

<Listener className="org.apache.catalina.security.SecurityListener" />

-->

<!--APR library loader. Documentation at /docs/apr.html -->

<Listener SSLEngine="on" className="org.apache.catalina.core.AprLifecycleListener"/>

<!-- Prevent memory leaks due to use of particular java/javax APIs-->

<Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener"/>

<Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener"/>

<Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener"/>

<!-- Global JNDI resources

Documentation at /docs/jndi-resources-howto.html

-->

<GlobalNamingResources>

<!-- Editable user database that can also be used by

UserDatabaseRealm to authenticate users

-->

<Resource auth="Container" description="User database that can be updated and saved" factory="org.apache.catalina.users.MemoryUserDatabaseFactory" name="UserDatabase" pathname="conf/tomcat-users.xml" type="org.apache.catalina.UserDatabase"/>

</GlobalNamingResources>

<!-- A "Service" is a collection of one or more "Connectors" that share

a single "Container" Note: A "Service" is not itself a "Container",

so you may not define subcomponents such as "Valves" at this level.

Documentation at /docs/config/service.html

-->

<Service name="Catalina">

<!--The connectors can use a shared executor, you can define one or more named thread pools-->

<!--

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-"

maxThreads="150" minSpareThreads="4"/>

-->

<!-- A "Connector" represents an endpoint by which requests are received

and responses are returned. Documentation at :

Java HTTP Connector: /docs/config/http.html (blocking & non-blocking)

Java AJP Connector: /docs/config/ajp.html

APR (HTTP/AJP) Connector: /docs/apr.html

Define a non-SSL/TLS HTTP/1.1 Connector on port 8080

-->

<Connector connectionTimeout="20000" port="8080" protocol="HTTP/1.1" redirectPort="8443"/>

<!-- A "Connector" using the shared thread pool-->

<!--

<Connector executor="tomcatThreadPool"

port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

-->

<!-- Define a SSL/TLS HTTP/1.1 Connector on port 8443

This connector uses the NIO implementation that requires the JSSE

style configuration. When using the APR/native implementation, the

OpenSSL style configuration is required as described in the APR/native

documentation -->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11NioProtocol"

maxThreads="150" SSLEnabled="true" scheme="https" secure="true"

clientAuth="false" sslProtocol="TLS" />

-->

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8009" protocol="AJP/1.3" redirectPort="8443"/>

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<Engine defaultHost="localhost" name="Catalina">

<!--For clustering, please take a look at documentation at:

/docs/cluster-howto.html (simple how to)

/docs/config/cluster.html (reference documentation) -->

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

<!-- Use the LockOutRealm to prevent attempts to guess user passwords

via a brute-force attack -->

<Realm className="org.apache.catalina.realm.LockOutRealm">

<!-- This Realm uses the UserDatabase configured in the global JNDI

resources under the key "UserDatabase". Any edits

that are performed against this UserDatabase are immediately

available for use by the Realm. -->

<Realm className="org.apache.catalina.realm.UserDatabaseRealm" resourceName="UserDatabase"/>

</Realm>

<Host appBase="/data/tomcat/webapps" autoDeploy="true" name="localhost" unpackWARs="true">

<!-- SingleSignOn valve, share authentication between web applications

Documentation at: /docs/config/valve.html -->

<!--

<Valve className="org.apache.catalina.authenticator.SingleSignOn" />

-->

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs" pattern="%h %l %u %t &quot;%r&quot; %s %b" prefix="localhost_access_log" suffix=".txt"/>

<Context docBase="dubboadmin" path="/" reloadable="true" source="org.eclipse.jst.jee.server:dubboadmin"/>

</Host>

</Engine>

</Service>

</Server>

Note:

The appBase="/data/tomcat/webapps" here must match the destination path of the ADD dubboadmin.war /data/tomcat/webapps/ instruction in the Dockerfile.
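The match between `appBase` in server.xml and the `ADD` destination in the Dockerfile can be checked mechanically. The following is a minimal sketch using only the Python standard library. All three function names are hypothetical, and the Dockerfile parsing is deliberately naive (whitespace-separated `ADD <src> <dest>` lines only).

```python
import posixpath
import xml.etree.ElementTree as ET
from typing import List


def host_app_bases(server_xml: str) -> List[str]:
    """Return the appBase attribute of every <Host> in a server.xml string."""
    root = ET.fromstring(server_xml)
    return [host.get("appBase", "") for host in root.iter("Host")]


def add_destinations(dockerfile: str) -> List[str]:
    """Return the destination directory of every ADD instruction."""
    dirs = []
    for line in dockerfile.splitlines():
        parts = line.split()
        if len(parts) >= 3 and parts[0].upper() == "ADD":
            dest = parts[-1]
            if dest.endswith("/"):
                dirs.append(dest.rstrip("/"))  # destination is a directory
            else:
                dirs.append(posixpath.dirname(dest))  # destination is a file
    return dirs


def war_lands_in_app_base(server_xml: str, dockerfile: str) -> bool:
    """True if at least one ADD destination directory equals a Host appBase."""
    bases = {base.rstrip("/") for base in host_app_bases(server_xml)}
    return any(dest in bases for dest in add_destinations(dockerfile))
```

For the files in this section the check should pass, since the WAR is added under /data/tomcat/webapps and the `<Host>` element uses the same directory as its `appBase`.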

# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.k8s.local/k8s/dubboadmin:${TAG} .

sleep 3

docker push harbor.k8s.local/k8s/dubboadmin:${TAG}

# jar uvf dubboadmin.war dubboadmin/WEB-INF/dubbo.properties # update dubboadmin/WEB-INF/dubbo.properties inside dubboadmin.war

adding: dubboadmin/WEB-INF/dubbo.properties(in = 137) (out= 96)(deflated 29%)

# chmod a+x *.sh

# bash build-command.sh v1
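Since the image is built from dubboadmin.war and not from the unpacked directory, it can be worth confirming that the `jar uvf` step really placed the edited dubbo.properties inside the archive. A WAR is a zip file, so the entry can be read with Python's `zipfile` module. `read_properties_from_war` is a hypothetical helper, and the entry path is the one printed by `jar` above.

```python
import zipfile
from typing import Dict


def read_properties_from_war(war_file, member: str) -> Dict[str, str]:
    """Read key=value pairs from a .properties entry inside a WAR.

    war_file may be a path or a binary file-like object.
    """
    with zipfile.ZipFile(war_file) as war:
        text = war.read(member).decode("utf-8")
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and malformed lines
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props
```

Calling `read_properties_from_war("dubboadmin.war", "dubboadmin/WEB-INF/dubbo.properties")` should then show the Zookeeper address configured for `dubbo.registry.address`.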

root@deploy:~/dubboadmin# cat dubboadmin.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: test-dubboadmin

  name: test-dubboadmin-deployment

  namespace: test

spec:

  replicas: 1

  selector:

    matchLabels:

      app: test-dubboadmin

  template:

    metadata:

      labels:

        app: test-dubboadmin

    spec:

      containers:

      - name: test-dubboadmin-container

        image: harbor.k8s.local/k8s/dubboadmin:v1

        #command: ["/apps/tomcat/bin/run_tomcat.sh"]

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 8080

          protocol: TCP

          name: http

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: test-dubboadmin

  name: test-dubboadmin-service

  namespace: test

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 8080

    nodePort: 30080

  selector:

    app: test-dubboadmin

# kubectl apply -f dubboadmin.yaml
