Wiki ref: http://en.wikipedia.org/wiki/Minimax

Minimax (sometimes minmax) is a method in decision theory for minimizing the maximum possible loss. Alternatively, it can be thought of as maximizing the minimum gain (maximin). It originated in two-player zero-sum game theory, covering both the cases where players take alternate moves and those where they make simultaneous moves. It has also been extended to more complex games and to general decision making in the presence of uncertainty.

Minimax theorem

The minimax theorem states:

- For every two-person, zero-sum game there exists a mixed strategy for each player, such that the expected payoff for both is the same value V when the players use these strategies. Furthermore, V is the best payoff each can expect to receive from a play of the game; that is, these mixed strategies are the optimal strategies for the two players.

This theorem was established by John von Neumann[1], who is quoted as saying "As far as I can see, there could be no theory of games … without that theorem … I thought there was nothing worth publishing until the Minimax Theorem was proved".[2]

Tic-tac-toe

A simple version of the minimax algorithm, stated below, deals with games such as tic-tac-toe, where each player can win, lose, or draw. If player A can win in one move, his best move is that winning move. If player B knows that one move will lead to the situation where player A can win in one move, while another move will lead to the situation where player A can, at best, draw, then player B's best move is the one leading to a draw. Late in the game, it's easy to see what the "best" move is. The Minimax algorithm helps find the best move, by working backwards from the end of the game. At each step it assumes that player A is trying to maximize the chances of A winning, while on the next turn player B is trying to minimize the chances of A winning (i.e., to maximize B's own chances of winning).
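The backward reasoning described above can be sketched for tic-tac-toe as follows. This is a minimal illustration, not a reference implementation: the board encoding (a 9-character string of `X`, `O` and spaces), the convention that player A is `X`, and the function names `winner` and `value` are all assumptions made for the example.

```python
from functools import lru_cache

# All eight winning lines on a 3x3 board indexed 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

@lru_cache(maxsize=None)
def value(board, to_move):
    """Value of the position for X (player A): +1 win, 0 draw, -1 loss."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # board is full and nobody has won
    nxt = "O" if to_move == "X" else "X"
    results = [value(board[:i] + to_move + board[i + 1:], nxt)
               for i, cell in enumerate(board) if cell == " "]
    # X tries to maximize the value, O tries to minimize it.
    return max(results) if to_move == "X" else min(results)

print(value(" " * 9, "X"))  # 0: with best play the game is a draw
```

The cache only avoids re-evaluating positions reached by different move orders; it does not change the values.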

Minimax criterion in statistical decision theory

In classical statistical decision theory, we have an estimator δ that is used to estimate a parameter $\theta \in \Theta$. We also assume a risk function R(θ,δ), usually specified as the integral of a loss function. In this framework, $\tilde{\delta}$ is called minimax if it satisfies

$$\sup_{\theta} R(\theta, \tilde{\delta}) = \inf_{\delta} \sup_{\theta} R(\theta, \delta).$$

An alternative criterion in the decision theoretic framework is the Bayes estimator in the presence of a prior distribution Π. An estimator is Bayes if it minimizes the average risk

$$\int_{\Theta} R(\theta, \delta)\, d\Pi(\theta).$$
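To make the contrast between the two criteria concrete, here is a small sketch over a finite parameter set, where the supremum becomes a maximum and the average risk becomes a weighted sum. The risk values, the prior weights and the estimator names are hypothetical numbers chosen only for illustration.

```python
# Hypothetical risk table: RISK[delta][k] is R(theta_k, delta) for three parameter values.
RISK = {
    "delta_1": [1.0, 4.0, 2.0],
    "delta_2": [3.0, 3.0, 3.0],
    "delta_3": [0.5, 6.0, 1.0],
}
PRIOR = [0.5, 0.1, 0.4]  # hypothetical prior weights over the three parameter values

def worst_case_risk(risks):
    """Maximum risk over the parameter values (finite analogue of the supremum)."""
    return max(risks)

def average_risk(risks, weights):
    """Prior-weighted average risk (finite analogue of the Bayes risk)."""
    return sum(r * w for r, w in zip(risks, weights))

minimax_choice = min(RISK, key=lambda d: worst_case_risk(RISK[d]))
bayes_choice = min(RISK, key=lambda d: average_risk(RISK[d], PRIOR))

print(minimax_choice)  # delta_2: smallest worst-case risk (3.0)
print(bayes_choice)    # delta_3: smallest average risk under this prior
```

With these numbers the two criteria pick different estimators: the minimax rule guards against the worst parameter value, while the Bayes rule accepts a large risk where the prior puts little weight.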

Minimax algorithm with alternate moves

A minimax algorithm[3] is a recursive algorithm for choosing the next move in an n-player game, usually a two-player game. A value is associated with each position or state of the game. This value is computed by means of a position evaluation function and it indicates how good it would be for a player to reach that position. The player then makes the move that maximizes the minimum value of the position resulting from the opponent's possible following moves. If it is A's turn to move, A gives a value to each of his legal moves.

One possible valuation assigns +1 to a certain win for A and −1 to a certain win for B. This leads to combinatorial game theory as developed by John Horton Conway. An alternative is to assign positive infinity to a move that results in an immediate win for A and negative infinity to a move that results in an immediate win for B. The value to A of any other move is the minimum of the values resulting from each of B's possible replies. For this reason, A is called the maximizing player and B is called the minimizing player, hence the name minimax algorithm. The above algorithm will assign a value of positive or negative infinity to any position, since the value of every position will be the value of some final winning or losing position. In complicated games such as chess or go, this is generally possible only near the very end of the game, because it is not computationally feasible to look ahead as far as the completion of the game. Instead, positions are given finite values as estimates of the degree of belief that they will lead to a win for one player or another.

This can be extended if we can supply a heuristic evaluation function which gives values to non-final game states without considering all possible following complete sequences. We can then limit the minimax algorithm to look only a certain number of moves ahead. This number is called the "look-ahead", measured in "plies". For example, the chess computer Deep Blue (which beat Garry Kasparov) looked ahead 12 plies, then applied a heuristic evaluation function.

The algorithm can be thought of as exploring the nodes of a game tree. The effective branching factor of the tree is the average number of children of each node (i.e., the average number of legal moves in a position). The number of nodes to be explored usually increases exponentially with the number of plies (it is less than exponential if evaluating forced moves or repeated positions). The number of nodes to be explored for the analysis of a game is therefore approximately the branching factor raised to the power of the number of plies. It is therefore impractical to completely analyze games such as chess using the minimax algorithm.
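The growth described above is easy to tabulate. The sketch below assumes a uniform tree in which every node has the same number of children; the branching factor of 35 is only a rough, commonly quoted figure for chess and is used here as an assumption, and the function names are illustrative.

```python
def nodes_at_depth(branching_factor, plies):
    """Number of positions exactly `plies` moves below the root of a uniform tree."""
    return branching_factor ** plies

def total_nodes(branching_factor, plies):
    """All nodes from the root down to depth `plies`, inclusive."""
    return sum(branching_factor ** d for d in range(plies + 1))

# Assumed branching factor of 35 (rough figure for chess, illustration only).
for plies in (2, 4, 8, 12):
    print(plies, nodes_at_depth(35, plies))
```

Each extra ply multiplies the work by the branching factor, which is why a full search to the end of a game like chess is out of reach.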

The performance of the naïve minimax algorithm may be improved dramatically, without affecting the result, by the use of alpha-beta pruning. Other heuristic pruning methods can also be used, but not all of them are guaranteed to give the same result as the un-pruned search.
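As a rough illustration of why alpha-beta pruning does not change the result, the sketch below runs plain minimax and a standard textbook formulation of alpha-beta on the same small tree. The nested-list tree encoding, the leaf values and the `visited` bookkeeping are assumptions made for this example.

```python
import math

def minimax(node, maximizing):
    """Plain minimax over a nested-list tree whose leaves are numbers."""
    if not isinstance(node, list):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)

def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax with alpha-beta cutoffs; `visited` optionally records evaluated leaves."""
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer above will never allow this branch
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return best

# Hypothetical tree: the root maximizes, its three children minimize.
tree = [[3, 5], [2, 9], [0, 7]]
seen = []
print(minimax(tree, True))                   # 3
print(alphabeta(tree, True, visited=seen))   # 3
print(seen)                                  # [3, 5, 2, 0]: leaves 9 and 7 are never evaluated
```

Both searches return the same value, but the pruned search skips leaves that cannot affect the choice at the root.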

Pseudocode

Pseudocode for the minimax algorithm (using an evaluation heuristic to terminate at a given depth) is given below.

    function minimax(node, depth)
        if node is a terminal node or depth = CutoffDepth
            return the heuristic value of node
        if the adversary is to play at node
            let β := +∞
            foreach child of node
                β := min(β, minimax(child, depth+1))
            return β
        else {we are to play at node}
            let α := -∞
            foreach child of node
                α := max(α, minimax(child, depth+1))
            return α
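The pseudocode can be turned into runnable Python along the following lines. This is one possible rendering under stated assumptions: the `children` and `heuristic` callbacks, the explicit `adversary_to_play` flag, and the toy tree with its scores are all hypothetical and exist only to make the example self-contained.

```python
def minimax(node, depth, adversary_to_play, children, heuristic, cutoff_depth):
    """Depth-limited minimax; returns the value of `node` for the searching player."""
    kids = children(node)
    if not kids or depth == cutoff_depth:
        return heuristic(node)  # terminal node or look-ahead limit reached
    values = [minimax(kid, depth + 1, not adversary_to_play,
                      children, heuristic, cutoff_depth) for kid in kids]
    # The adversary picks the smallest value, we pick the largest.
    return min(values) if adversary_to_play else max(values)

# Hypothetical toy game tree and heuristic scores, for illustration only.
TREE = {"root": ["a", "b"], "a": ["a1", "a2"], "b": ["b1", "b2"]}
SCORES = {"root": 0, "a": 4, "b": 6, "a1": 3, "a2": 5, "b1": 2, "b2": 9}

def children(node):
    return TREE.get(node, [])

def heuristic(node):
    return SCORES[node]

full = minimax("root", 0, False, children, heuristic, cutoff_depth=2)
shallow = minimax("root", 0, False, children, heuristic, cutoff_depth=1)
print(full, shallow)  # 3 6
```

The two results differ because the shallow search trusts the heuristic scores of `a` and `b`, while the deeper search replaces them with the values backed up from their children.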

Example

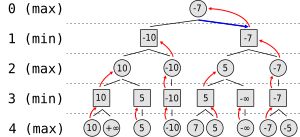

Suppose the game being played has at most two possible moves per player each turn. The algorithm generates the tree shown in the figure, where the circles represent the moves of the player running the algorithm (the maximizing player), and the squares represent the moves of the opponent (the minimizing player). Because computational resources are limited, as explained above, the tree is limited to a look-ahead of 4 moves.

The algorithm evaluates each leaf node using a heuristic evaluation function, obtaining the values shown. Moves where the maximizing player wins are assigned positive infinity, while moves that lead to a win for the minimizing player are assigned negative infinity. At level 3, the algorithm chooses, for each node, the smallest of the child node values and assigns it to that node (e.g., the node on the left takes the minimum of "10" and "+∞", and is therefore assigned the value "10"). The next step, at level 2, consists of choosing for each node the largest of the child node values. Once again, the values are assigned to each parent node. The algorithm continues evaluating the maximum and minimum values of the child nodes alternately until it reaches the root node, where it chooses the move with the largest value (represented in the figure with a blue arrow). This is the move that the player should make in order to minimize the maximum possible loss.

Minimax theorem with simultaneous moves

| | B chooses B1 | B chooses B2 | B chooses B3 |
|---|---|---|---|
| A chooses A1 | +3 | -2 | +2 |
| A chooses A2 | -1 | 0 | +4 |
| A chooses A3 | -4 | -3 | +1 |

The following example of a zero-sum game, where A and B make simultaneous moves, illustrates the minimax algorithm. Suppose each player has three choices and consider the payoff matrix for A displayed above. Assume the payoff matrix for B is the same matrix with the signs reversed (i.e., if the choices are A1 and B1 then B pays 3 to A). Then the simple minimax choice for A is A2, since the worst possible result is then having to pay 1, while the simple minimax choice for B is B2, since the worst possible result is then no payment. However, this solution is not stable: if B believes A will choose A2 then B will choose B1 to gain 1; then if A believes B will choose B1 then A will choose A1 to gain 3; and then B will choose B2; and eventually both players will realize the difficulty of making a choice. So a more stable strategy is needed.
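The simple (pure-strategy) minimax choices can be checked directly from the payoff matrix. The sketch below uses the payoffs to A from the table; the variable names are illustrative.

```python
# Payoffs to A: rows are A1..A3, columns are B1..B3.
PAYOFF = [[3, -2, 2],
          [-1, 0, 4],
          [-4, -3, 1]]

# Worst outcome for A in each row, and worst outcome for B in each column
# (B pays A, so B's worst case is the largest entry in the column).
row_worst = [min(row) for row in PAYOFF]
col_worst = [max(col) for col in zip(*PAYOFF)]

a_choice = row_worst.index(max(row_worst))  # A maximizes the worst-case payoff
b_choice = col_worst.index(min(col_worst))  # B minimizes the worst-case payment

print(row_worst, "-> A chooses A%d" % (a_choice + 1))  # [-2, -1, -4] -> A chooses A2
print(col_worst, "-> B chooses B%d" % (b_choice + 1))  # [3, 0, 4] -> B chooses B2
```

A can guarantee at least -1 and B can guarantee paying at most 0. Because these two numbers differ, there is no pair of pure strategies that both players are content with, which is the instability described above.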

Some choices are dominated by others and can be eliminated: A will not choose A3 since either A1 or A2 will produce a better result, no matter what B chooses; B will not choose B3 since B2 will produce a better result, no matter what A chooses.
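The dominance argument can be verified mechanically. The following sketch checks strict dominance only, using the payoffs to A from the table; the helper names `dominated_rows` and `dominated_columns` are assumptions for this example.

```python
# Payoffs to A: rows are A1..A3, columns are B1..B3.
PAYOFF = [[3, -2, 2],
          [-1, 0, 4],
          [-4, -3, 1]]

def dominated_rows(matrix):
    """Indices of rows strictly dominated by some other row (larger is better)."""
    n = len(matrix)
    return [i for i in range(n)
            if any(k != i and all(x > y for x, y in zip(matrix[k], matrix[i]))
                   for k in range(n))]

def dominated_columns(matrix):
    """Indices of columns strictly dominated from B's point of view.

    B's payoffs are the negated entries, so we negate, transpose, and reuse
    the row check.
    """
    b_payoffs = [[-x for x in col] for col in zip(*matrix)]
    return dominated_rows(b_payoffs)

print(dominated_rows(PAYOFF))     # [2]: A3 is dominated
print(dominated_columns(PAYOFF))  # [2]: B3 is dominated
```

Removing A3 and B3 leaves the 2×2 game over A1, A2 and B1, B2.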

A can avoid having to make an expected payment of more than 1/3 by choosing A1 with probability 1/6 and A2 with probability 5/6, no matter what B chooses. B can ensure an expected gain of at least 1/3 by using a randomized strategy of choosing B1 with probability 1/3 and B2 with probability 2/3, no matter what A chooses. These mixed minimax strategies are now stable and cannot be improved.
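These probabilities can be checked with exact arithmetic. The sketch below works on the reduced 2×2 game (A3 and B3 removed) and computes the expected payoff to A against each pure reply; the function names are illustrative.

```python
from fractions import Fraction

# Reduced payoff matrix for A: rows A1, A2; columns B1, B2.
PAYOFF = [[3, -2],
          [-1, 0]]

p = [Fraction(1, 6), Fraction(5, 6)]  # A's mixed strategy over A1, A2
q = [Fraction(1, 3), Fraction(2, 3)]  # B's mixed strategy over B1, B2

def a_payoff_against_column(j):
    """Expected payoff to A when A mixes with p and B plays column j."""
    return sum(p[i] * PAYOFF[i][j] for i in range(2))

def a_payoff_against_row(i):
    """Expected payoff to A when B mixes with q and A plays row i."""
    return sum(q[j] * PAYOFF[i][j] for j in range(2))

print([a_payoff_against_column(j) for j in range(2)])  # both equal -1/3
print([a_payoff_against_row(i) for i in range(2)])     # both equal -1/3
```

Whatever the opponent does, the expected payoff to A is -1/3, so neither player can gain by deviating. The probabilities themselves come from making the two expected payoffs equal: 4p − 1 = −2p gives p = 1/6 for A1, and 5q − 2 = −q gives q = 1/3 for B1.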

John von Neumann proved the Minimax theorem in 1928, stating that such strategies always exist in two-person zero-sum games and can be found by solving a set of simultaneous equations.

Minimax in the face of uncertainty

Minimax theory has been extended to decisions where there is no other player, but where the consequences of decisions depend on unknown facts. For example, deciding to prospect for minerals entails a cost which will be wasted if the minerals are not present, but will bring major rewards if they are. One approach is to treat this as a game against nature and, in a mindset similar to Murphy's law, take an approach which minimizes the maximum expected loss, using the same techniques as in two-person zero-sum games.
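The prospecting example can be written as a small loss table with nature as the opponent. The loss figures below are hypothetical (a negative loss is a gain), as are the action and state names; the sketch only shows the pure-strategy version of the rule.

```python
# Hypothetical losses: LOSS[action][state of nature]. Negative loss means profit.
LOSS = {
    "prospect": {"minerals present": -100, "minerals absent": 10},
    "do not prospect": {"minerals present": 0, "minerals absent": 0},
}

def minimax_action(table):
    """Pick the action whose worst-case loss is smallest."""
    return min(table, key=lambda action: max(table[action].values()))

choice = minimax_action(LOSS)
print(choice)  # do not prospect
```

With these numbers the rule declines to prospect, because it looks only at the worst case (losing 10) and ignores how large the reward could be. This pessimism is the Murphy's-law mindset mentioned above.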

In addition, expectiminimax trees have been developed for two-player games in which chance (for example, dice) is a factor.

Minimax in non-zero-sum games

In non-zero-sum games, apparently non-optimal strategies may evolve. For example, in the prisoner's dilemma, the minimax strategy for each prisoner is to betray the other, even though they would each do better if neither confessed their guilt. So, for non-zero-sum games, minimax is not necessarily the best strategy.
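A short sketch makes the prisoner's dilemma claim concrete. The sentence lengths are hypothetical values in the usual pattern for this game, and the names `YEARS` and `minimax_move` are assumptions for the example.

```python
# Hypothetical sentences in years: YEARS[my_move][other_move] is my own sentence.
YEARS = {
    "stay silent": {"stay silent": 1, "betray": 3},
    "betray": {"stay silent": 0, "betray": 2},
}

def minimax_move(table):
    """Move that minimizes my worst-case sentence."""
    return min(table, key=lambda move: max(table[move].values()))

move = minimax_move(YEARS)
both_minimax = YEARS[move][move]                    # each prisoner's sentence if both play minimax
both_silent = YEARS["stay silent"]["stay silent"]   # each prisoner's sentence if both stay silent

print(move, both_minimax, both_silent)  # betray 2 1
```

Each prisoner limits the worst case to 2 years by betraying, yet both would serve only 1 year if both stayed silent.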

Maximin in philosophy

In philosophy, the term "maximin" is often used to refer to what John Rawls called the Difference Principle. In his book A Theory of Justice, Rawls defined this principle as the rule which states that social and economic inequalities should be arranged so that "they are to be of the greatest benefit to the least-advantaged members of society". In other words, an unequal distribution can be just when it maximizes the benefit to those who have the most minuscule allocation of welfare-conferring resources (which he refers to as "primary goods").
