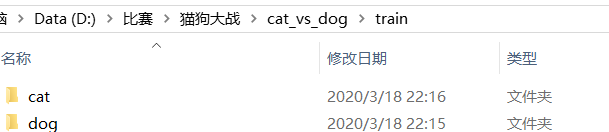

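The data come from the Cats vs. Dogs (猫狗大战) competition. I have already split it into per-class folders, as shown in the figure.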

First, build the TFRecord file:

```python
import os

import tensorflow as tf
from PIL import Image  # note: Image is used below to load and resize the pictures
import matplotlib.pyplot as plt
import numpy as np

cwd = r'D:\比赛\猫狗大战\cat_vs_dog\train'
# Two classes, set by hand. Use a list rather than a set: a set's iteration order
# is not guaranteed, so enumerate() over it could assign different labels per run.
classes = ['cat', 'dog']

writer = tf.python_io.TFRecordWriter(os.path.join(cwd, 'train.tfrecords'))  # file to generate

for index, name in enumerate(classes):
    class_path = os.path.join(cwd, name)
    for img_name in os.listdir(class_path):
        img_path = os.path.join(class_path, img_name)  # path of each image
        # convert('RGB') guarantees 3 channels, so every record holds 208*208*3 bytes
        img = Image.open(img_path).convert('RGB')
        img = img.resize((208, 208))
        img_raw = img.tobytes()  # convert the image to raw bytes
        # Wrap the label and image data in an Example and write it to the TFRecords file
        example = tf.train.Example(features=tf.train.Features(feature={
            'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[index])),
            'image_raw': tf.train.Feature(bytes_list=tf.train.BytesList(value=[img_raw]))
        }))
        writer.write(example.SerializeToString())  # serialize to a byte string

writer.close()
```

This creates the TFRecords file.
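To make the file format less of a black box, here is a minimal pure-Python sketch of the record framing that the TFRecord guide in the TensorFlow documentation describes: each record is an 8-byte little-endian length, a masked CRC32C of that length, the payload (here it would be the serialized `Example`), and a masked CRC32C of the payload. The helper names (`crc32c`, `masked_crc`, `frame_record`, `read_records`) are my own, and the sketch uses plain byte strings instead of real `Example` protos; it only illustrates the layout and is not a replacement for `TFRecordWriter`.

```python
import struct


def _make_table():
    # Lookup table for CRC-32C (Castagnoli), reflected polynomial 0x82F63B78
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _make_table()


def crc32c(data: bytes) -> int:
    crc = 0xFFFFFFFF
    for b in data:
        crc = _TABLE[(crc ^ b) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def masked_crc(data: bytes) -> int:
    # Rotate right by 15 bits and add a constant, kept to 32 bits
    crc = crc32c(data)
    return (((crc >> 15) | (crc << 17)) + 0xA282EAD8) & 0xFFFFFFFF


def frame_record(payload: bytes) -> bytes:
    length = struct.pack('<Q', len(payload))
    return (length + struct.pack('<I', masked_crc(length))
            + payload + struct.pack('<I', masked_crc(payload)))


def read_records(blob: bytes):
    """Walk framed records, verifying both checksums of each one."""
    pos = 0
    while pos < len(blob):
        length_bytes = blob[pos:pos + 8]
        (length,) = struct.unpack('<Q', length_bytes)
        (len_crc,) = struct.unpack('<I', blob[pos + 8:pos + 12])
        if len_crc != masked_crc(length_bytes):
            raise ValueError('corrupt length field')
        payload = blob[pos + 12:pos + 12 + length]
        (data_crc,) = struct.unpack('<I', blob[pos + 12 + length:pos + 16 + length])
        if data_crc != masked_crc(payload):
            raise ValueError('corrupt payload')
        yield payload
        pos += 16 + length
```

Every record therefore costs 16 bytes of framing on top of its payload, and the two checksums let a reader detect a truncated or corrupted file.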

How do we read the file back? Like this:

```python
def read_and_decode(tfrecords_file, batch_size=5, epoch_num=3):
    '''Read and decode a TFRecord file, generate (image, label) batches.

    Args:
        tfrecords_file: path of the TFRecord file
        batch_size: size of each batch (kept small because my computer is slow)
        epoch_num: number of passes over the file

    Returns:
        image: 4D tensor - [batch_size, height, width, channel]
        label: 1D tensor - [batch_size]
    '''
    # Filename queue. num_epochs stops it after epoch_num passes, after which the
    # batch op raises OutOfRangeError (this needs tf.local_variables_initializer()).
    filename_queue = tf.train.string_input_producer([tfrecords_file], num_epochs=epoch_num)
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)

    # Parse one serialized Example
    img_features = tf.parse_single_example(
        serialized_example,
        features={
            'label': tf.FixedLenFeature([], tf.int64),
            'image_raw': tf.FixedLenFeature([], tf.string),
        })
    image = tf.decode_raw(img_features['image_raw'], tf.uint8)
    label = tf.cast(img_features['label'], tf.int32)

    ##########################################################
    # you can put data augmentation here, I didn't use it
    ##########################################################

    # The images were stored as 208*208 RGB; change this if you use another size.
    image = tf.reshape(image, [208, 208, 3])
    # image = tf.image.per_image_standardization(image)

    # One batching stage is enough. Passing image_batch/label_batch on through
    # slice_input_producer and a second tf.train.batch would discard most examples
    # (each evaluation of image_batch dequeues a new batch), and its num_epochs
    # would count passes over batch_size indices instead of over the file.
    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,
                                              capacity=2000)
    image_batch = tf.cast(image_batch, tf.float32)
    return image_batch, tf.reshape(label_batch, [batch_size])
```
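The epoch and batching behaviour of this pipeline is easier to reason about with a plain-Python model. The sketch below is my own simplification, not TensorFlow code: it replays the records `num_epochs` times, groups them into fixed-size batches (batches may span an epoch boundary, since the queue is continuous), drops an incomplete final batch as `tf.train.batch` does with its default `allow_smaller_final_batch=False`, and then raises `OutOfRange`, a hypothetical stand-in for `tf.errors.OutOfRangeError`. The number of training steps is therefore `floor(N * num_epochs / batch_size)`.

```python
class OutOfRange(Exception):
    """Hypothetical stand-in for tf.errors.OutOfRangeError."""


def batched_epochs(records, batch_size, num_epochs):
    """Yield full batches over `records` repeated num_epochs times, then raise OutOfRange."""
    batch = []
    for _ in range(num_epochs):
        for rec in records:
            batch.append(rec)
            if len(batch) == batch_size:
                yield batch
                batch = []
    # Like allow_smaller_final_batch=False: leftover examples are discarded
    raise OutOfRange('input exhausted after %d epochs' % num_epochs)


def run_until_out_of_range(records, batch_size, num_epochs):
    batches = []
    try:
        for batch in batched_epochs(records, batch_size, num_epochs):
            batches.append(batch)  # one training step would run here
    except OutOfRange:
        pass  # the training loop ends here, like the except clause in the session code
    return batches


def count_batches(n_records, batch_size, num_epochs):
    return (n_records * num_epochs) // batch_size
```

For example, 12 records, `batch_size=5` and 3 epochs give 36 examples, hence 7 full batches, with one example left over and dropped.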

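With the code above, data can now be read from the file. But how should these tensors be used? Many blog posts I have seen suggest converting them to NumPy arrays and feeding them back in through `feed_dict`. I think that approach is clumsy: it throws away the main advantage of TFRecord files, namely that the input pipeline feeds the graph directly instead of making a round trip through Python. Next, I use the Cats vs. Dogs data to write a simple implementation of AlexNet.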
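The reason the queue pipeline needs no `feed_dict` is that, once `tf.train.start_queue_runners` is called (in the training loop further down), background threads keep a bounded queue filled while the main loop only dequeues; a `tf.train.Coordinator` is essentially a shared stop flag plus `join`. The standard-library sketch below imitates that pattern as an analogy only; `run_pipeline` and the sentinel `_END` are hypothetical names, and this is not how TensorFlow implements its queue runners.

```python
import queue
import threading

_END = object()  # sentinel meaning "input exhausted", analogous to OutOfRangeError


def run_pipeline(items, capacity=4, max_steps=None):
    q = queue.Queue(maxsize=capacity)  # bounded, like a batch queue's capacity
    stop = threading.Event()           # plays the role of coord.should_stop()

    def put(item):
        # Block while the queue is full, but give up once a stop is requested
        while not stop.is_set():
            try:
                q.put(item, timeout=0.1)
                return True
            except queue.Full:
                pass
        return False

    def producer():
        for item in items:
            if not put(item):
                return
        put(_END)

    thread = threading.Thread(target=producer, daemon=True)
    thread.start()
    consumed = []
    try:
        while not stop.is_set():
            if max_steps is not None and len(consumed) >= max_steps:
                break
            item = q.get()
            if item is _END:
                break                  # like catching OutOfRangeError
            consumed.append(item)      # a training step would run here
    finally:
        stop.set()                     # like coord.request_stop()
        thread.join()                  # like coord.join(threads)
    return consumed
```

The consumer never waits on Python-side data preparation as long as the producer keeps the queue ahead of it, which is what the graph-side queues do for `sess.run`.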

First, define the network hyperparameters and helper functions:

```python
# Network hyperparameters
learning_rate = 0.0001
train_epochs = 5
batch_size = 5
display = 10
t = 50  # number of random draws per epoch

# Network parameters
n_input = 208*208
n_classes = 2
keep_prob = 0.75


# Convolution layers: truncated normal initialization; shape is a list
def conv_weight_init(shape, stddev):
    weight = tf.Variable(tf.truncated_normal(dtype=tf.float32, shape=shape, stddev=stddev))
    return weight


# Fully connected layers: Xavier initialization
def xavier_init(layer1, layer2, constant=1):
    Min = -constant * np.sqrt(6.0 / (layer1 + layer2))
    Max = constant * np.sqrt(6.0 / (layer1 + layer2))
    weight = tf.random_uniform((layer1, layer2), minval=Min, maxval=Max, dtype=tf.float32)
    return tf.Variable(weight)


# Biases
def biases_init(shape):
    biases = tf.Variable(tf.random_normal(shape, dtype=tf.float32))
    return biases
```
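For reference, `xavier_init` samples from the Glorot uniform distribution. Writing $n_{in}$ for `layer1`, $n_{out}$ for `layer2` and $c$ for `constant`, the bound, the resulting variance, and the concrete bound for `wf1` work out as follows:

```latex
a = c\,\sqrt{\frac{6}{n_{in} + n_{out}}}, \qquad W_{ij} \sim \mathcal{U}(-a,\ a)

\operatorname{Var}(W_{ij}) = \frac{(2a)^2}{12} = \frac{a^2}{3} = \frac{2c^2}{n_{in} + n_{out}}

\text{wf1: } n_{in} = 26 \cdot 26 \cdot 32 = 21632,\quad n_{out} = 512
\;\Rightarrow\; a = \sqrt{6 / 22144} \approx 0.0165
```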

```python
# Convolution + pooling + LRN
def conv2d(image, weight, stride=1):
    return tf.nn.conv2d(image, weight, strides=[1, stride, stride, 1], padding='SAME')


def max_pool(tensor, k=2, stride=2):
    return tf.nn.max_pool(tensor, ksize=[1, k, k, 1], strides=[1, stride, stride, 1], padding='SAME')


def lrnorm(tensor):
    return tf.nn.lrn(tensor, depth_radius=4, bias=1.0, alpha=0.001/9.0, beta=0.75)

# I am not sure whether the LRN used in AlexNet is a good fit here
```
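`tf.nn.lrn` normalizes each activation by the squared activations of neighbouring channels at the same pixel. Per the TensorFlow API docs, `sqr_sum[a, b, c, d] = sum(input[a, b, c, d - depth_radius : d + depth_radius + 1] ** 2)` and `output = input / (bias + alpha * sqr_sum) ** beta`. The pure-Python sketch below applies that formula to the channel vector of a single pixel, with the defaults copied from `lrnorm`; `lrn_1d` is a hypothetical helper. The `0.001/9.0` reads as alpha spread over the 2·4+1 = 9-channel window, since TensorFlow itself does not divide `alpha` by the window size.

```python
def lrn_1d(channels, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75):
    """Apply the tf.nn.lrn formula to one pixel's channel vector (a list of floats)."""
    n = len(channels)
    out = []
    for d in range(n):
        lo = max(0, d - depth_radius)
        hi = min(n, d + depth_radius + 1)  # the window is clipped at the channel edges
        sqr_sum = sum(v * v for v in channels[lo:hi])
        out.append(channels[d] / (bias + alpha * sqr_sum) ** beta)
    return out
```

With `alpha` this small the normalization barely changes typical activations, which fits the observation in the code comments that LRN costs time without an obvious benefit.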

```python
## Convolutional network
def inference(images, batch_size=5):
    # Reshape the input images
    imgs = tf.reshape(images, [-1, 208, 208, 3])
    imgs = tf.cast(imgs, tf.float32)

    # Initialize the weights
    # wc = weight convolution
    wc1 = conv_weight_init([5, 5, 3, 12], 0.05)
    wc2 = conv_weight_init([5, 5, 12, 32], 0.05)
    wc3 = conv_weight_init([3, 3, 32, 48], 0.05)
    wc4 = conv_weight_init([3, 3, 48, 48], 0.05)
    wc5 = conv_weight_init([3, 3, 48, 32], 0.05)
    # wf = weight fully connected
    wf1 = xavier_init(26*26*32, 512)
    wf2 = xavier_init(512, 512)
    wf3 = xavier_init(512, 2)

    # Initialize the biases
    bc1 = biases_init([12])
    bc2 = biases_init([32])
    bc3 = biases_init([48])
    bc4 = biases_init([48])
    bc5 = biases_init([32])
    # fully connected
    bf1 = biases_init([512])
    bf2 = biases_init([512])
    bf3 = biases_init([2])

    # Conv 1
    c1 = conv2d(imgs, wc1)  # before activation
    conv1 = tf.nn.relu(c1 + bc1)
    lrn1 = lrnorm(conv1)
    pool1 = max_pool(lrn1)
    # Conv 2
    conv2 = tf.nn.relu(conv2d(pool1, wc2) + bc2)
    lrn2 = lrnorm(conv2)
    pool2 = max_pool(lrn2)
    # Conv 3-5: no more LRN; it slows down the forward and backward passes
    # considerably and the benefit is not obvious
    conv3 = tf.nn.relu(conv2d(pool2, wc3) + bc3)
    # pool3 = max_pool(conv3)
    # Conv 4
    conv4 = tf.nn.relu(conv2d(conv3, wc4) + bc4)
    # pool4 = max_pool(conv4)
    # Conv 5
    conv5 = tf.nn.relu(conv2d(conv4, wc5) + bc5)
    pool5 = max_pool(conv5)  # shape: [?, 26, 26, 32]

    # Fully connected layers
    # Flatten pool5
    reshape_p5 = tf.reshape(pool5, [-1, 26*26*32])
    fc1 = tf.nn.relu(tf.matmul(reshape_p5, wf1) + bf1)
    # Dropout for regularization (it stays active whenever the graph is run)
    drop_fc1 = tf.nn.dropout(fc1, keep_prob)
    # fully connected 2
    fc2 = tf.nn.relu(tf.matmul(drop_fc1, wf2) + bf2)
    drop_fc2 = tf.nn.dropout(fc2, keep_prob)
    # fully connected 3 (output layer), no activation: these are the logits
    output = tf.matmul(drop_fc2, wf3) + bf3  # shape: [?, 2]
    y = tf.reshape(output, [-1, 2])
    return y
```
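The `26*26*32` used for `wf1` and for the reshape of `pool5` follows from the layer stack: with `padding='SAME'` the output size is `ceil(in / stride)`, so only the three stride-2 poolings shrink the 208×208 input (208 → 104 → 52 → 26). The pure-Python check below reproduces that size and counts the trainable parameters; the `CONV_STACK` table is my transcription of `inference`, not code from the original.

```python
import math

# Layer stack transcribed from inference(): (kind, kernel, in_ch, out_ch, stride)
CONV_STACK = [
    ('conv', 5, 3, 12, 1), ('pool', 2, None, None, 2),
    ('conv', 5, 12, 32, 1), ('pool', 2, None, None, 2),
    ('conv', 3, 32, 48, 1),
    ('conv', 3, 48, 48, 1),
    ('conv', 3, 48, 32, 1), ('pool', 2, None, None, 2),
]
FC_SIZES = [512, 512, 2]  # outputs of fc1, fc2 and the output layer


def same_out(size, stride):
    """Spatial output size of a conv/pool op with padding='SAME'."""
    return math.ceil(size / stride)


def summarize(height=208, width=208, channels=3):
    conv_params = 0
    for kind, k, c_in, c_out, stride in CONV_STACK:
        height, width = same_out(height, stride), same_out(width, stride)
        if kind == 'conv':
            conv_params += k * k * c_in * c_out + c_out  # weights + biases
            channels = c_out
    flat = height * width * channels  # size of the pool5 reshape
    fc_params, n_in = [], flat
    for n_out in FC_SIZES:
        fc_params.append(n_in * n_out + n_out)  # weights + biases
        n_in = n_out
    return flat, conv_params, fc_params


flat, conv_params, fc_params = summarize()
print(flat, conv_params, fc_params, conv_params + sum(fc_params))
# 21632 59056 [11076096, 262656, 1026] 11398834
```

If my transcription is right, fc1 alone holds about 97% of the roughly 11.4 million parameters, so shrinking `pool5` (for example with one more pooling stage) would be the most effective way to make the model lighter.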

```python
# Loss function and optimizer
def cost(x, y):
    pred = inference(x)  # note: builds the network itself
    # loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
    with tf.variable_scope('loss') as scope:
        # y holds integer class ids, not one-hot vectors, hence the sparse version
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
            logits=pred, labels=y, name='xentropy_per_example')
        loss = tf.reduce_mean(cross_entropy, name='loss')
        tf.summary.scalar(scope.name + '/loss', loss)
    return loss


def trainning(loss, learning_rate):
    with tf.name_scope('optimizer'):
        optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
        global_step = tf.Variable(0, name='global_step', trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op
```
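`tf.nn.sparse_softmax_cross_entropy_with_logits` takes integer class ids, whereas the commented-out `softmax_cross_entropy_with_logits` expects one-hot labels; per example, both compute `-log(softmax(logits)[label])`. Below is a plain-Python sketch of the sparse version, averaged over the batch as `cost` does with `tf.reduce_mean`, using the usual max subtraction for numerical stability; the function name is mine.

```python
import math


def sparse_softmax_xent(logits, labels):
    """Mean of -log softmax(logits)[label] over a batch; labels are integer class ids."""
    total = 0.0
    for row, label in zip(logits, labels):
        m = max(row)  # subtract the max so exp() cannot overflow
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[label]
    return total / len(logits)
```

When the two logits are equal the loss is ln 2 ≈ 0.693, a handy reference value for a two-class problem: a loss that stays near it means the network is not separating cats from dogs.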

With the helper functions in place, read the data and run them:

```python
filename = r'D:\比赛\猫狗大战\cat_vs_dog\train\train.tfrecords'
x, y = read_and_decode(filename, batch_size=5)
# cost() already calls inference(x); calling inference() again here would build
# a second, unused copy of the network with its own variables.
train_loss = cost(x, y)
train_op = trainning(train_loss, learning_rate)

with tf.Session() as sess:
    # Initialize first (num_epochs in the input queue uses a local variable)
    sess.run(tf.global_variables_initializer())
    sess.run(tf.local_variables_initializer())
    # Start a coordinator
    coord = tf.train.Coordinator()
    # start_queue_runners starts the threads that fill the queues
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)
    try:
        while not coord.should_stop():
            # Each run dequeues batch_size images and labels and takes one training step
            _, tra_loss = sess.run([train_op, train_loss])
            print('train loss = %.2f' % tra_loss)
            # image_batch_v, label_batch_v = sess.run([image_batch, label_batch])
            # print(image_batch_v.shape, label_batch_v)
    except tf.errors.OutOfRangeError:  # raised when the input queue reaches its end
        print("done! now let's kill all the threads...")
    finally:
        # The coordinator asks all threads to stop
        coord.request_stop()
        print('all threads are asked to stop!')
        # Wait for the threads to finish before the session closes
        coord.join(threads)
        print('all threads are stopped!')
```

The run results are shown below:

```python
import os
import time

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf


def read_and_decode(tfrecords_file, batch_size=1, epoch_num=1):
    '''Read and decode a TFRecord file, generate (image, label) batches.

    Args:
        tfrecords_file: path of the TFRecord file
        batch_size: size of each batch
        epoch_num: number of passes over the file

    Returns:
        image: 4D tensor - [batch_size, height, width, channel]
        label: 1D tensor - [batch_size]
    '''
    # make an input queue from the tfrecord file; num_epochs ends the input
    filename_queue = tf.train.string_input_producer([tfrecords_file], num_epochs=epoch_num)
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)
    img_features = tf.parse_single_example(
        serialized_example,
        features={
            'label': tf.FixedLenFeature([], tf.int64),
            'image_raw': tf.FixedLenFeature([], tf.string),
        })
    image = tf.decode_raw(img_features['image_raw'], tf.uint8)
    label = tf.cast(img_features['label'], tf.int32)

    ##########################################################
    # you can put data augmentation here, I didn't use it
    ##########################################################

    # The images were stored as 208*208 RGB; change this if you use another size.
    image = tf.reshape(image, [208, 208, 3])
    # image = tf.image.per_image_standardization(image)

    # A single batching stage; the filename queue's num_epochs decides when input ends
    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,
                                              capacity=2000)
    image_batch = tf.cast(image_batch, tf.float32)
    return image_batch, tf.reshape(label_batch, [batch_size])
```

```python
# Convolution layers: truncated normal initialization
def conv_weight_init(shape, stddev):
    weight = tf.Variable(tf.truncated_normal(dtype=tf.float32, shape=shape, stddev=stddev))
    return weight


# Fully connected layers: Xavier initialization
def xavier_init(layer1, layer2, constant=1):
    Min = -constant * np.sqrt(6.0 / (layer1 + layer2))
    Max = constant * np.sqrt(6.0 / (layer1 + layer2))
    weight = tf.random_uniform((layer1, layer2), minval=Min, maxval=Max, dtype=tf.float32)
    return tf.Variable(weight)


# Biases
def biases_init(shape):
    biases = tf.Variable(tf.random_normal(shape, dtype=tf.float32))
    return biases


# Convolution + pooling + LRN
def conv2d(image, weight, stride=1):
    return tf.nn.conv2d(image, weight, strides=[1, stride, stride, 1], padding='SAME')


def max_pool(tensor, k=2, stride=2):
    return tf.nn.max_pool(tensor, ksize=[1, k, k, 1], strides=[1, stride, stride, 1], padding='SAME')


def lrnorm(tensor):
    return tf.nn.lrn(tensor, depth_radius=4, bias=1.0, alpha=0.001/9.0, beta=0.75)
```

```python
def inference(images, batch_size=5):
    # Reshape the input images
    # images = tf.placeholder(dtype=tf.float32, shape=[5, 208, 208, 3])
    imgs = tf.reshape(images, [-1, 208, 208, 3])
    imgs = tf.cast(imgs, tf.float32)

    # Initialize the weights
    # wc = weight convolution
    wc1 = conv_weight_init([5, 5, 3, 12], 0.05)
    wc2 = conv_weight_init([5, 5, 12, 32], 0.05)
    wc3 = conv_weight_init([3, 3, 32, 48], 0.05)
    wc4 = conv_weight_init([3, 3, 48, 48], 0.05)
    wc5 = conv_weight_init([3, 3, 48, 32], 0.05)
    # wf = weight fully connected
    wf1 = xavier_init(26*26*32, 512)
    wf2 = xavier_init(512, 512)
    wf3 = xavier_init(512, 2)

    # Initialize the biases
    bc1 = biases_init([12])
    bc2 = biases_init([32])
    bc3 = biases_init([48])
    bc4 = biases_init([48])
    bc5 = biases_init([32])
    # fully connected
    bf1 = biases_init([512])
    bf2 = biases_init([512])
    bf3 = biases_init([2])

    # Conv 1
    c1 = conv2d(imgs, wc1)  # before activation
    conv1 = tf.nn.relu(c1 + bc1)
    lrn1 = lrnorm(conv1)
    pool1 = max_pool(lrn1)
    # Conv 2
    conv2 = tf.nn.relu(conv2d(pool1, wc2) + bc2)
    lrn2 = lrnorm(conv2)
    pool2 = max_pool(lrn2)
    # Conv 3-5: no more LRN; it slows down the forward and backward passes
    # considerably and the benefit is not obvious
    conv3 = tf.nn.relu(conv2d(pool2, wc3) + bc3)
    # pool3 = max_pool(conv3)
    # Conv 4
    conv4 = tf.nn.relu(conv2d(conv3, wc4) + bc4)
    # pool4 = max_pool(conv4)
    # Conv 5
    conv5 = tf.nn.relu(conv2d(conv4, wc5) + bc5)
    pool5 = max_pool(conv5)  # shape: [?, 26, 26, 32]

    # Fully connected layers
    # Flatten pool5
    reshape_p5 = tf.reshape(pool5, [-1, 26*26*32])
    fc1 = tf.nn.relu(tf.matmul(reshape_p5, wf1) + bf1)
    # Dropout for regularization
    drop_fc1 = tf.nn.dropout(fc1, keep_prob=0.75)
    # fully connected 2
    fc2 = tf.nn.relu(tf.matmul(drop_fc1, wf2) + bf2)
    drop_fc2 = tf.nn.dropout(fc2, keep_prob=0.75)
    # fully connected 3 (output layer), no activation: these are the logits
    output = tf.matmul(drop_fc2, wf3) + bf3  # shape: [?, 2]
    y = tf.reshape(output, [-1, 2])
    return y
```

```python
def losses(logits, labels):
    with tf.variable_scope('loss'):
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
            logits=logits, labels=labels, name='xentropy_per_example')
        loss = tf.reduce_mean(cross_entropy, name='loss')
    return loss


def evaluation(logits, labels):
    with tf.variable_scope('accuracy'):
        # True where the label's logit is the largest (top-1)
        correct = tf.nn.in_top_k(logits, labels, 1)
        correct = tf.cast(correct, tf.float32)
        accuracy = tf.reduce_mean(correct)
    return accuracy
```
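`evaluation` relies on `tf.nn.in_top_k(logits, labels, 1)`. As I read the TensorFlow docs, a target counts as "in the top k" when fewer than k classes have a strictly larger prediction, so a tie with the maximum counts as correct (unlike `tf.nn.top_k`, which breaks ties). A pure-Python version of that rule and of the mean accuracy, with names of my own choosing:

```python
def in_top_k(logits, labels, k=1):
    """For each row, True if fewer than k classes score strictly higher than the label's class."""
    result = []
    for row, label in zip(logits, labels):
        target = row[label]
        higher = sum(1 for v in row if v > target)
        result.append(higher < k)
    return result


def accuracy(logits, labels):
    correct = in_top_k(logits, labels, 1)
    return sum(correct) / len(correct)
```

Because dropout is active in `inference`, the accuracy measured this way on the training batch is computed with a randomly thinned network and will be somewhat noisy.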

```python
# Train the model
def training():
    N_CLASSES = 2
    MAX_STEP = 500
    LEARNING_RATE = 1e-4
    batch_size = 20

    # TFRecord file to read
    tfrecords_file = r'D:\比赛\猫狗大战\train.tfrecords'
    logs_dir = 'logs_1'  # checkpoint directory
    os.makedirs(logs_dir, exist_ok=True)  # saver.save() needs an existing directory
    sess = tf.Session()

    # Read the data
    filename_queue = tf.train.string_input_producer([tfrecords_file])  # feed the file into the input queue
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)
    img_features = tf.parse_single_example(
        serialized_example,
        features={
            'label': tf.FixedLenFeature([], tf.int64),
            'image_raw': tf.FixedLenFeature([], tf.string),
        })
    image = tf.decode_raw(img_features['image_raw'], tf.uint8)
    label = tf.cast(img_features['label'], tf.float32)

    ##########################################################
    # you can put data augmentation here, I didn't use it
    ##########################################################

    # The records hold 208*208 RGB images; change this if you use another size.
    image = tf.reshape(image, [208, 208, 3])
    image_batch, label_batch = tf.train.shuffle_batch([image, label],
                                                      batch_size=batch_size,
                                                      capacity=2000,
                                                      min_after_dequeue=60,
                                                      num_threads=2)  # number of threads
    image_train_batch = tf.cast(image_batch, tf.float32)
    label_train_batch = tf.cast(label_batch, tf.int32)

    train_logits = inference(image_train_batch)
    train_loss = losses(train_logits, label_train_batch)
    train_acc = evaluation(train_logits, label_train_batch)
    train_op = tf.train.AdamOptimizer(LEARNING_RATE).minimize(train_loss)

    # Count trainable parameters from the static shapes (no session run needed)
    var_list = tf.trainable_variables()
    paras_count = int(np.sum([np.prod(v.get_shape().as_list()) for v in var_list]))
    print('Number of parameters: %d' % paras_count, end='\n\n')

    saver = tf.train.Saver()
    # Initialize first, then start the queue runners
    sess.run(tf.global_variables_initializer())
    sess.run(tf.local_variables_initializer())
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    s_t = time.time()
    try:
        for step in range(MAX_STEP):
            if coord.should_stop():
                break
            _, loss, acc = sess.run([train_op, train_loss, train_acc])
            runtime = time.time() - s_t
            # runtime is in seconds per step, so divide by 3600 for hours
            print('Step: %6d, loss: %.8f, accuracy: %.2f%%, time: %.2fs, time left: %.2f hours'
                  % (step, loss, acc * 100, runtime, (MAX_STEP - step) * runtime / 3600))
            s_t = time.time()
            if step % 100 == 0 or step == MAX_STEP - 1:  # save a checkpoint
                checkpoint_path = os.path.join(logs_dir, 'model.ckpt')
                saver.save(sess, checkpoint_path, global_step=step)
    except tf.errors.OutOfRangeError:
        print('Done.')
    finally:
        coord.request_stop()
        coord.join(threads=threads)
        sess.close()


if __name__ == '__main__':
    training()
```
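`tf.train.shuffle_batch` draws examples at random from a buffer and, while input is still arriving, only dequeues when more than `min_after_dequeue` examples remain buffered. Because the TFRecord file was written class by class (all images of one class, then the other), a buffer that is small compared with one class cannot mix cats and dogs, so early batches can be dominated by a single class. The standard-library sketch below imitates buffer shuffling to show the effect; `shuffle_stream` is a hypothetical name, and it assumes a consumer that dequeues as soon as allowed, so its buffer never exceeds `min_after_dequeue + 1`, whereas the real queue can fill up to `capacity` when the reader runs ahead.

```python
import random


def shuffle_stream(stream, min_after_dequeue, seed=None):
    """Yield the items of `stream` in a locally shuffled order.

    An item is only emitted once more than `min_after_dequeue` items are
    buffered; the rest are drained in random order when the stream ends.
    """
    rng = random.Random(seed)
    buf = []
    for item in stream:
        buf.append(item)
        if len(buf) > min_after_dequeue:
            i = rng.randrange(len(buf))
            buf[i], buf[-1] = buf[-1], buf[i]  # swap with the last item for O(1) removal
            yield buf.pop()
    rng.shuffle(buf)  # input closed: drain whatever is left
    yield from buf
```

With 50 labels of class 0 followed by 50 of class 1 and `min_after_dequeue=10`, the first 20 outputs are guaranteed to be class 0, whatever the seed. Shuffling the image list before writing the TFRecord file would be one cheap way to avoid relying on the queue for mixing.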

```python
# -*- coding: utf-8 -*-
"""
Created on Tue Dec 1 00:49:59 2020

@author: 许竞
"""

import os
import time

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
```

In this version, `read_and_decode`, `conv_weight_init`, `xavier_init`, `biases_init`, `conv2d`, `max_pool`, `lrnorm`, `inference`, `losses` and `evaluation` are identical to the previous script and are not repeated here; copy them in below the imports to run the file.

```python
# Train the model
def training():
    N_CLASSES = 2
    MAX_STEP = 100  # only used for the rough "time left" estimate
    EPOCH_NUM = 2
    LEARNING_RATE = 1e-4
    batch_size = 20

    # TFRecord file to read
    tfrecords_file = r'D:\比赛\猫狗大战\train.tfrecords'
    logs_dir = 'logs_1'  # checkpoint directory
    os.makedirs(logs_dir, exist_ok=True)  # saver.save() needs an existing directory
    sess = tf.Session()

    # Read the data: the input pipeline queue stops after EPOCH_NUM passes over the file
    filename_queue = tf.train.string_input_producer([tfrecords_file], num_epochs=EPOCH_NUM)
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)
    img_features = tf.parse_single_example(
        serialized_example,
        features={
            'label': tf.FixedLenFeature([], tf.int64),
            'image_raw': tf.FixedLenFeature([], tf.string),
        })
    image = tf.decode_raw(img_features['image_raw'], tf.uint8)
    label = tf.cast(img_features['label'], tf.float32)

    ##########################################################
    # you can put data augmentation here, I didn't use it
    ##########################################################

    # The records hold 208*208 RGB images; change this if you use another size.
    image = tf.reshape(image, [208, 208, 3])
    image_batch, label_batch = tf.train.shuffle_batch([image, label],
                                                      batch_size=batch_size,
                                                      capacity=2000,
                                                      min_after_dequeue=60,
                                                      num_threads=2)  # number of threads
    image_train_batch = tf.cast(image_batch, tf.float32)
    label_train_batch = tf.cast(label_batch, tf.int32)

    train_logits = inference(image_train_batch)
    train_loss = losses(train_logits, label_train_batch)
    train_acc = evaluation(train_logits, label_train_batch)
    train_op = tf.train.AdamOptimizer(LEARNING_RATE).minimize(train_loss)

    # Count trainable parameters from the static shapes
    var_list = tf.trainable_variables()
    paras_count = int(np.sum([np.prod(v.get_shape().as_list()) for v in var_list]))
    print('Number of parameters: %d' % paras_count, end='\n\n')

    saver = tf.train.Saver()
    # Initialize first (num_epochs creates a local variable), then start the queues
    sess.run(tf.global_variables_initializer())
    sess.run(tf.local_variables_initializer())
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    fig_loss, fig_accuracy = [], []  # per-step history for the plot
    s_t = time.time()
    try:
        step = 0
        while not coord.should_stop():
            _, loss, acc = sess.run([train_op, train_loss, train_acc])
            runtime = time.time() - s_t
            fig_loss.append(loss)
            fig_accuracy.append(acc)
            if step % 200 == 0:
                # runtime is in seconds, so divide by 3600 for hours
                print('Step: %6d, loss: %.8f, accuracy: %.2f%%, time: %.2fs, time left: %.2f hours'
                      % (step, loss, acc * 100, runtime, (MAX_STEP - step) * runtime / 3600))
                checkpoint_path = os.path.join(logs_dir, 'model.ckpt')  # save a checkpoint
                saver.save(sess, checkpoint_path, global_step=step)
            step += 1
            s_t = time.time()
    except tf.errors.OutOfRangeError:
        print('Done.')
    finally:
        coord.request_stop()
        coord.join(threads=threads)
        sess.close()
    return np.array(fig_loss), np.array(fig_accuracy)


fig_loss, fig_accuracy = training()
steps = np.arange(len(fig_loss))

# Plot the curves
fig, ax1 = plt.subplots()
ax2 = ax1.twinx()
lns1 = ax1.plot(steps, fig_loss, label='Loss')
# To show only every 200th point instead:
# ax2.plot(200 * np.arange(len(fig_accuracy)), fig_accuracy, 'r')
lns2 = ax2.plot(steps, fig_accuracy, 'r', label='Accuracy')
ax1.set_xlabel('iteration')
ax1.set_ylabel('training loss')
ax2.set_ylabel('training accuracy')
# Merge the legends of both axes
lns = lns1 + lns2
labels = ['Loss', 'Accuracy']
# labels = [l.get_label() for l in lns]
plt.legend(lns, labels, loc=7)
plt.show()
```
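On the "time left" estimate printed by the training scripts: `runtime` is the wall-clock duration of the last step in seconds, so the remaining time in hours is remaining steps × seconds per step / 3600. A single step's time is noisy, so the sketch below also keeps an exponential moving average of it; both `eta_hours` and `StepTimer` are hypothetical helpers of my own, not part of TensorFlow.

```python
def eta_hours(step, max_step, sec_per_step):
    """Hours left after finishing `step` (0-based) out of max_step steps."""
    remaining = max(max_step - step - 1, 0)  # never report negative time
    return remaining * sec_per_step / 3600.0


class StepTimer:
    """Exponential moving average of seconds per step."""

    def __init__(self, decay=0.9):
        self.decay = decay
        self.avg = None

    def update(self, seconds):
        if self.avg is None:
            self.avg = seconds  # first measurement seeds the average
        else:
            self.avg = self.decay * self.avg + (1 - self.decay) * seconds
        return self.avg
```

In the loop, calling `timer.update(runtime)` each step and printing `eta_hours(step, MAX_STEP, timer.avg)` would give a steadier estimate than the raw per-step time.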
