Introduction to FFmpeg (Part 1)

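The previous two articles covered how to build FFmpeg on three platforms (Android, iOS, and server) along with simple stream pushing, and how to implement playback on Android and iOS. Starting with this article we look at FFmpeg in detail. The discussion is split into two parts, decoding and encoding, and follows the same flow described in the previous post.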

The decoding process

In the previous article we mentioned the work FFmpeg does during video playback. It consists mainly of the following steps:

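av_register_all(); // Registers all file formats and codec libraries; when a file of a supported format is opened, the matching codec is selected automatically

avformat_network_init(); // Initializes the network layer

avformat_alloc_context(); // Allocates the AVFormatContext

avformat_open_input(); // Opens the input stream and reads the header; pair it with avformat_close_input() to close the stream

avformat_find_stream_info(); // Reads packets to obtain stream information and fills pFormatCtx->streams with the correct values

avcodec_find_decoder(); // Finds the decoder

avcodec_open2(); // Initializes the AVCodecContext from an AVCodec

av_read_frame(); // Reads the next frame

avcodec_decode_video2(); // Decodes video frame data

avcodec_decode_audio4(); // Decodes audio frame data

avcodec_close(); // Closes the codec context

avformat_close_input(); // Closes the input stream

As mentioned earlier, the first API to call when using FFmpeg is av_register_all(). Its main code is shown below and is easy to follow: it registers the muxers, demuxers, and codecs for the different audio and video formats.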

av_register_all

#define REGISTER_MUXER(X, x) \

{ \

extern AVOutputFormat ff_##x##_muxer; \

if (CONFIG_##X##_MUXER) \

av_register_output_format(&ff_##x##_muxer); \

}

#define REGISTER_DEMUXER(X, x) \

{ \

extern AVInputFormat ff_##x##_demuxer; \

if (CONFIG_##X##_DEMUXER) \

av_register_input_format(&ff_##x##_demuxer); \

}

#define REGISTER_MUXDEMUX(X, x) REGISTER_MUXER(X, x); REGISTER_DEMUXER(X, x)

#define REGISTER_PROTOCOL(X,x) { \

extern URLProtocol x##_protocol; \

if(CONFIG_##X##_PROTOCOL) av_register_protocol(&x##_protocol); \

}

void av_register_all(void)

{

static int initialized;

if (initialized)

return;

initialized = 1;

avcodec_register_all();

/* (de)muxers */

REGISTER_MUXER (A64, a64);

REGISTER_DEMUXER (AA, aa);

.......

}

#define REGISTER_HWACCEL(X, x) \

{ \

extern AVHWAccel ff_##x##_hwaccel; \

if (CONFIG_##X##_HWACCEL) \

av_register_hwaccel(&ff_##x##_hwaccel); \

}

#define REGISTER_ENCODER(X, x) \

{ \

extern AVCodec ff_##x##_encoder; \

if (CONFIG_##X##_ENCODER) \

avcodec_register(&ff_##x##_encoder); \

}

#define REGISTER_DECODER(X, x) \

{ \

extern AVCodec ff_##x##_decoder; \

if (CONFIG_##X##_DECODER) \

avcodec_register(&ff_##x##_decoder); \

}

#define REGISTER_ENCDEC(X, x) REGISTER_ENCODER(X, x); REGISTER_DECODER(X, x)

#define REGISTER_PARSER(X, x) \

{ \

extern AVCodecParser ff_##x##_parser; \

if (CONFIG_##X##_PARSER) \

av_register_codec_parser(&ff_##x##_parser); \

}

void avcodec_register_all(void)

{

static int initialized;

if (initialized)

return;

initialized = 1;

/* hardware accelerators */

REGISTER_HWACCEL(H263_VAAPI, h263_vaapi);

REGISTER_HWACCEL(H263_VIDEOTOOLBOX, h263_videotoolbox);

REGISTER_HWACCEL(H264_CUVID, h264_cuvid);

.....

/* video codecs */

REGISTER_ENCODER(A64MULTI, a64multi);

REGISTER_ENCODER(A64MULTI5, a64multi5);

....

/* subtitles */

REGISTER_ENCDEC (SSA, ssa);

REGISTER_ENCDEC (ASS, ass);

....

}

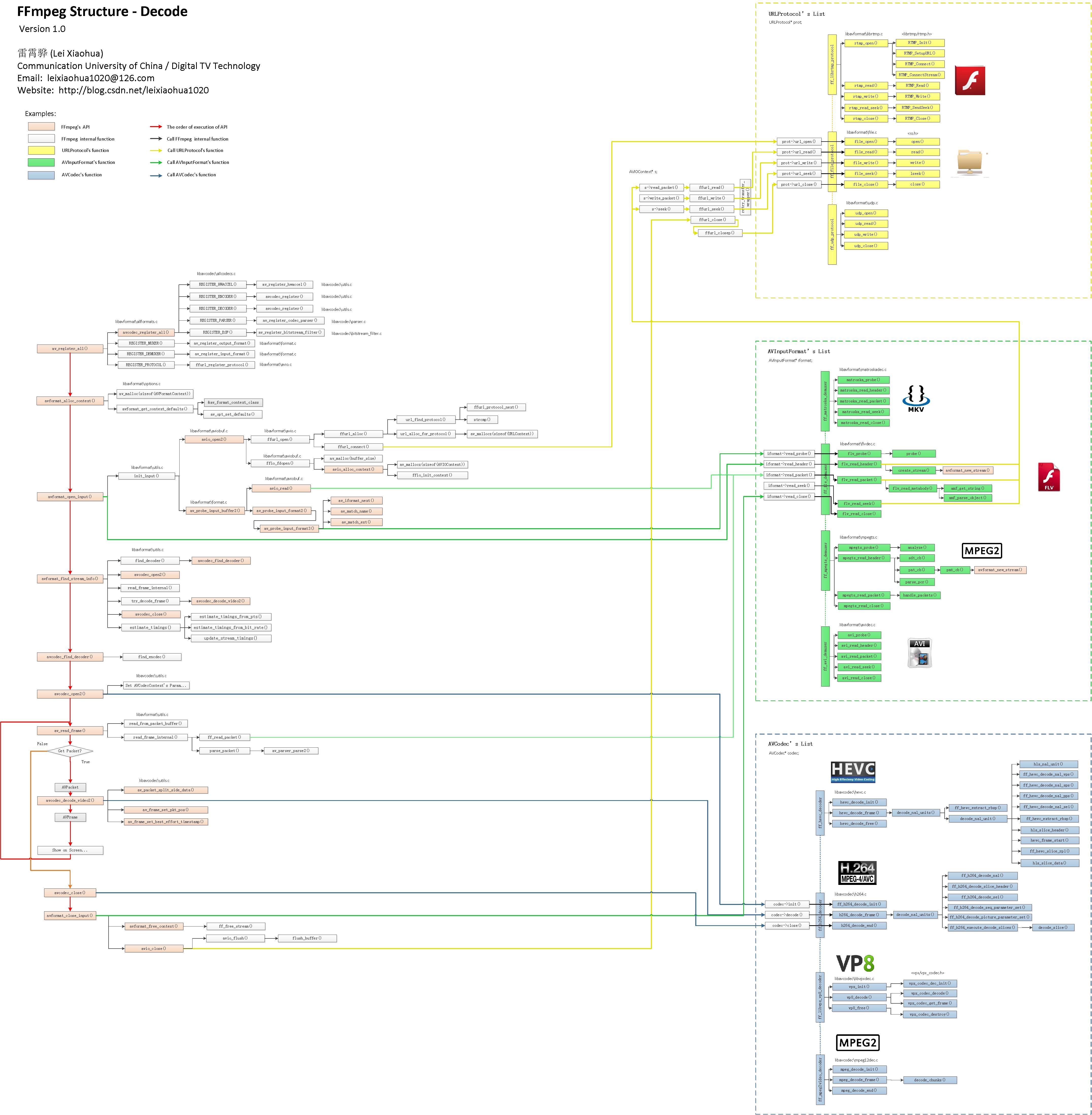

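Here is a brief explanation of the code above. Each REGISTER_* entry is a macro definition in which ## is the token-pasting operator, as in ff_##x##_muxer. For example, with REGISTER_MUXER (A64, a64) we have X = A64 and x = a64, so ff_##x##_muxer becomes ff_a64_muxer; the other macros work the same way. The code shows that av_register_all() registers an AVOutputFormat and an AVInputFormat for each supported format. It also registers the codecs through avcodec_register_all(), which covers hardware accelerators as well as audio, video, and subtitle codecs. The main types involved are AVHWAccel (hardware accelerator), AVCodec (codec), and AVCodecParser (parser), and registration goes through six APIs: av_register_output_format, av_register_input_format, av_register_hwaccel, avcodec_register, av_register_codec_parser, and av_register_protocol. The structure is shown in the figure above (the image is by the late Lei Xiaohua; many thanks for his contributions to multimedia, and a reminder to fellow developers to look after their health).

Overall, av_register_all mainly registers the muxers and demuxers, the codecs, the network protocols, the hardware accelerators, and so on. Next we look at network initialization, avformat_network_init.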
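To make the token pasting concrete, here is a minimal sketch of the same registration pattern in plain C. It does not use FFmpeg: DemoFormat, demo_register_output_format, demo_register_all, and the two CONFIG_* values are hypothetical stand-ins chosen for illustration only.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for AVOutputFormat; only the fields this sketch needs. */
typedef struct DemoFormat {
    const char *name;
    struct DemoFormat *next;
} DemoFormat;

/* Build-time switches, playing the role of CONFIG_A64_MUXER and friends. */
#define CONFIG_A64_MUXER 1
#define CONFIG_AVI_MUXER 0

/* In a real code base these objects would live in separate source files. */
DemoFormat ff_a64_muxer = { "a64", NULL };
DemoFormat ff_avi_muxer = { "avi", NULL };

static DemoFormat *first_format = NULL;

/* Append a format to the tail of a singly linked list. */
static void demo_register_output_format(DemoFormat *fmt)
{
    DemoFormat **p = &first_format;
    assert(fmt != NULL);
    while (*p)
        p = &(*p)->next;
    *p = fmt;
    fmt->next = NULL;
}

/* With x = a64, ff_##x##_muxer is pasted into the identifier ff_a64_muxer;
 * with X = A64, CONFIG_##X##_MUXER becomes CONFIG_A64_MUXER. */
#define REGISTER_MUXER(X, x)                              \
    {                                                     \
        extern DemoFormat ff_##x##_muxer;                 \
        if (CONFIG_##X##_MUXER)                           \
            demo_register_output_format(&ff_##x##_muxer); \
    }

/* Register everything once; later calls are no-ops thanks to the guard. */
void demo_register_all(void)
{
    static int initialized;
    if (initialized)
        return;
    initialized = 1;

    REGISTER_MUXER(A64, a64);
    REGISTER_MUXER(AVI, avi);
}

/* Return 1 if a format with this name was registered, 0 otherwise. */
int demo_is_registered(const char *name)
{
    for (DemoFormat *f = first_format; f; f = f->next)
        if (strcmp(f->name, name) == 0)
            return 1;
    return 0;
}
```

After demo_register_all() runs, only the formats whose CONFIG_* switch is non-zero end up in the list, which is how a trimmed-down build leaves out formats it was not configured with.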

avformat_network_init/avformat_network_deinit

int avformat_network_init(void)

{

#if CONFIG_NETWORK

int ret;

ff_network_inited_globally = 1;

if ((ret = ff_network_init()) < 0)

return ret;

ff_tls_init();

#endif

return 0;

}

int ff_network_init(void)

{

#if HAVE_WINSOCK2_H

WSADATA wsaData;

#endif

if (!ff_network_inited_globally)

av_log(NULL, AV_LOG_WARNING, "Using network protocols without global "

"network initialization. Please use "

"avformat_network_init(), this will "

"become mandatory later.\n");

#if HAVE_WINSOCK2_H

if (WSAStartup(MAKEWORD(1,1), &wsaData))

return 0;

#endif

return 1;

}

int ff_tls_init(void)

{

#if CONFIG_TLS_OPENSSL_PROTOCOL

int ret;

if ((ret = ff_openssl_init()) < 0)

return ret;

#endif

#if CONFIG_TLS_GNUTLS_PROTOCOL

ff_gnutls_init();

#endif

return 0;

}

int avformat_network_deinit(void)

{

#if CONFIG_NETWORK

ff_network_close();

ff_tls_deinit();

#endif

return 0;

}

These two APIs initialize and tear down the network layer, respectively. This includes initializing the TLS libraries used for https.

avformat_alloc_context

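avformat_alloc_context allocates the memory for an AVFormatContext and performs some initialization; the source is shown below. AVFormatContext is used for file or URL operations and for reading media information. avformat_open_input and avformat_find_stream_info fill in its fields, as described in detail later. From the AVFormatContext we can obtain the stream indexes of the video and audio streams. Later, when buffering data, audio and video packets are separated according to their stream index. For the layout of AVFormatContext, refer to the source code.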
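The idea of separating audio and video by stream index can be sketched as follows. This is an illustration under assumptions, not FFmpeg code: DemoStream, DemoFormatContext, demo_find_stream, and demo_route_packet are hypothetical, heavily simplified stand-ins for the real structures.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified stand-ins for AVMediaType, AVStream and AVFormatContext. */
enum DemoMediaType {
    DEMO_TYPE_UNKNOWN = -1,
    DEMO_TYPE_VIDEO,
    DEMO_TYPE_AUDIO,
    DEMO_TYPE_SUBTITLE
};

typedef struct DemoStream {
    enum DemoMediaType codec_type;
} DemoStream;

typedef struct DemoFormatContext {
    unsigned nb_streams;
    DemoStream *streams;
} DemoFormatContext;

/* Return the index of the first stream of the given type, or -1 if there is none. */
int demo_find_stream(const DemoFormatContext *ic, enum DemoMediaType type)
{
    assert(ic != NULL);
    for (unsigned i = 0; i < ic->nb_streams; i++)
        if (ic->streams[i].codec_type == type)
            return (int)i;
    return -1;
}

/* Route a packet by its stream index: 'v' for video, 'a' for audio, '-' for ignored. */
char demo_route_packet(int stream_index, int video_index, int audio_index)
{
    if (stream_index == video_index)
        return 'v';
    if (stream_index == audio_index)
        return 'a';
    return '-';
}
```

A player would typically look up both indexes once after the stream information is available and then push each packet it reads onto the audio or video queue according to the result.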

AVFormatContext *avformat_alloc_context(void)

{

AVFormatContext *ic;

ic = av_malloc(sizeof(AVFormatContext));

if (!ic) return ic;

avformat_get_context_defaults(ic);

ic->av_class = &av_format_context_class;

return ic;

}

static void avformat_get_context_defaults(AVFormatContext *s)

{

memset(s, 0, sizeof(AVFormatContext));

s->av_class = &av_format_context_class;

av_opt_set_defaults(s);

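}

avformat_open_input

Opens the input stream and reads the header, storing the header information in the AVFormatContext. Along the way it validates the AVFormatContext, sets the input format, and applies the options. The input can be either a local file on disk or a network resource, so opening it involves selecting the matching protocol.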

int avformat_open_input(AVFormatContext **ps, const char *filename,

AVInputFormat *fmt, AVDictionary **options)

{

// Begin AVFormatContext validation

AVFormatContext *s = *ps;

int i, ret = 0;

AVDictionary *tmp = NULL;

ID3v2ExtraMeta *id3v2_extra_meta = NULL;

if (!s && !(s = avformat_alloc_context()))

return AVERROR(ENOMEM);

if (!s->av_class) {

av_log(NULL, AV_LOG_ERROR, "Input context has not been properly allocated by avformat_alloc_context() and is not NULL either\n");

return AVERROR(EINVAL);

}

// End AVFormatContext validation

// Set the input format if fmt is not NULL

if (fmt)

s->iformat = fmt;

// Copy the options if options is not NULL

if (options)

av_dict_copy(&tmp, *options, 0);

// pb is the I/O context

if (s->pb) // must be before any goto fail

s->flags |= AVFMT_FLAG_CUSTOM_IO;

// Apply the options

if ((ret = av_opt_set_dict(s, &tmp)) < 0)

goto fail;

// Open the input and probe its format; the resulting probe score can be read back with av_format_get_probe_score().

if ((ret = init_input(s, filename, &tmp)) < 0)

goto fail;

s->probe_score = ret;

// If no protocol whitelist (http, file, rtmp, hls, ...) is set, inherit the one from the I/O context.

if (!s->protocol_whitelist && s->pb && s->pb->protocol_whitelist) {

s->protocol_whitelist = av_strdup(s->pb->protocol_whitelist);

if (!s->protocol_whitelist) {

ret = AVERROR(ENOMEM);

goto fail;

}

}

// Likewise, inherit the protocol blacklist from the I/O context if none is set.

if (!s->protocol_blacklist && s->pb && s->pb->protocol_blacklist) {

s->protocol_blacklist = av_strdup(s->pb->protocol_blacklist);

if (!s->protocol_blacklist) {

ret = AVERROR(ENOMEM);

goto fail;

}

}

// Fail if the input format is not on the format whitelist

if (s->format_whitelist && av_match_list(s->iformat->name, s->format_whitelist, ',') <= 0) {

av_log(s, AV_LOG_ERROR, "Format not on whitelist \'%s\'\n", s->format_whitelist);

ret = AVERROR(EINVAL);

goto fail;

}

avio_skip(s->pb, s->skip_initial_bytes);

// Check the filename

/* Check filename in case an image number is expected. */

if (s->iformat->flags & AVFMT_NEEDNUMBER) {

if (!av_filename_number_test(filename)) {

ret = AVERROR(EINVAL);

goto fail;

}

}

// Initialize the duration and start time to "unknown"

s->duration = s->start_time = AV_NOPTS_VALUE;

av_strlcpy(s->filename, filename ? filename : "", sizeof(s->filename));

........

fail:

ff_id3v2_free_extra_meta(&id3v2_extra_meta);

av_dict_free(&tmp);

if (s->pb && !(s->flags & AVFMT_FLAG_CUSTOM_IO))

avio_closep(&s->pb);

avformat_free_context(s);

*ps = NULL;

return ret;

}

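init_input relies mainly on two functions, avio_open2 and av_probe_input_buffer2. avio_open2 opens the resource named by filename through the matching protocol, whether that is a local file or a network URL, and produces an AVIOContext. It is skipped when the caller has already supplied a custom AVIOContext.

av_probe_input_buffer2 then reads data from that AVIOContext to probe the format of the input stream.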
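The branching in init_input is easier to follow when reduced to a pure decision function. The sketch below mirrors the order of the checks in the source that follows, but it is a simplification: DemoInitAction and demo_init_input_action are hypothetical names, and the boolean parameters stand in for state that the real function reads from the AVFormatContext.

```c
#include <assert.h>
#include <stddef.h>

typedef enum DemoInitAction {
    DEMO_NOTHING_TO_OPEN,  /* format known (or found by name) and no I/O needed */
    DEMO_PROBE_CUSTOM_IO,  /* caller supplied I/O, format unknown: probe its data */
    DEMO_USE_CUSTOM_IO,    /* caller supplied both I/O and format */
    DEMO_OPEN_ONLY,        /* open the URL; the format is already known */
    DEMO_OPEN_THEN_PROBE   /* open the URL, then probe the data */
} DemoInitAction;

/*
 * has_custom_io           : the caller set s->pb before opening
 * has_format              : s->iformat is already set
 * format_is_nofile        : that format carries AVFMT_NOFILE
 * matched_without_opening : probing by name alone found a format
 */
DemoInitAction demo_init_input_action(int has_custom_io, int has_format,
                                      int format_is_nofile,
                                      int matched_without_opening,
                                      const char *filename)
{
    assert(filename != NULL);

    if (has_format && filename[0] == '\0')
        return DEMO_NOTHING_TO_OPEN;

    if (has_custom_io)
        return has_format ? DEMO_USE_CUSTOM_IO : DEMO_PROBE_CUSTOM_IO;

    if ((has_format && format_is_nofile) ||
        (!has_format && matched_without_opening))
        return DEMO_NOTHING_TO_OPEN;

    return has_format ? DEMO_OPEN_ONLY : DEMO_OPEN_THEN_PROBE;
}
```

The most common case for a player, a plain URL with no preset format and no custom I/O, ends in DEMO_OPEN_THEN_PROBE, which corresponds to calling avio_open2 followed by av_probe_input_buffer2.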

/* open input file and probe the format if necessary */

static int init_input(AVFormatContext *s, const char *filename, AVDictionary **options)

{

int ret;

AVProbeData pd = {filename, NULL, 0};

if(s->iformat && !strlen(filename))

return 0;

// If a custom I/O context was supplied, set AVFMT_FLAG_CUSTOM_IO and, if the format is unknown, probe it from that context.

if (s->pb) {

s->flags |= AVFMT_FLAG_CUSTOM_IO;

if (!s->iformat)

return av_probe_input_buffer2(s->pb, &s->iformat, filename, s, 0, 0);

else if (s->iformat->flags & AVFMT_NOFILE)

av_log(s, AV_LOG_WARNING, "Custom AVIOContext makes no sense and "

"will be ignored with AVFMT_NOFILE format.\n");

return 0;

}

if ( (s->iformat && s->iformat->flags & AVFMT_NOFILE) ||

(!s->iformat && (s->iformat = av_probe_input_format2(&pd, 0))))

return 0;

// Otherwise open the resource with avio_open2 (local file or network URL).

if ((ret = avio_open2(&s->pb, filename, AVIO_FLAG_READ,

&s->interrupt_callback, options)) < 0)

return ret;

if (s->iformat)

return 0;

return av_probe_input_buffer2(s->pb, &s->iformat, filename, s, 0, 0);

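}

avio_open2 mainly opens the URL via ffurl_open, which includes connecting to the server (ffurl_alloc and ffurl_connect); the end result is a filled-in URLContext. URLContext is the structure that holds the protocol-level context. ffio_fdopen then wraps the URLContext in an AVIOContext. If you want to implement P2P video on demand, this is where the related parameters can be added.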
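Opening a URL starts with deciding which protocol handles it. As a rough illustration of that step only, the sketch below extracts the scheme from a URL and falls back to "file" when there is no scheme prefix. demo_url_scheme is a hypothetical helper, not an FFmpeg function, and it ignores special cases such as Windows drive letters that a real implementation has to handle.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Copy the scheme of `url` into `out` (always NUL-terminated).
 * Falls back to "file" when the string has no "scheme:" prefix. */
void demo_url_scheme(const char *url, char *out, size_t out_size)
{
    static const char scheme_chars[] =
        "abcdefghijklmnopqrstuvwxyz"
        "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
        "0123456789+-.";
    size_t len;

    assert(url != NULL && out != NULL && out_size > 0);

    /* Length of the leading run of characters that may appear in a scheme. */
    len = strspn(url, scheme_chars);
    if (len == 0 || url[len] != ':') {
        url = "file";
        len = 4;
    }
    if (len >= out_size)
        len = out_size - 1;   /* truncate rather than overflow */

    memcpy(out, url, len);
    out[len] = '\0';
}
```

With this helper, "rtmp://host/live" maps to "rtmp", while a plain path such as "/sdcard/a.mp4" maps to "file", which matches the two kinds of input the article distinguishes.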

int avio_open2(AVIOContext **s, const char *filename, int flags,

const AVIOInterruptCB *int_cb, AVDictionary **options)

{

URLContext *h;

int err;

err = ffurl_open(&h, filename, flags, int_cb, options);

if (err < 0)

return err;

err = ffio_fdopen(s, h);

if (err < 0) {

ffurl_close(h);

return err;

}

return 0;

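}

avformat_find_stream_info

avformat_find_stream_info does the following: 1. finds the matching decoders; 2. opens the decoders; 3. reads frames; 4. decodes frames; 5. closes the decoders; 6. estimates timing information.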

int avformat_find_stream_info(AVFormatContext *ic, AVDictionary **options)

{

AVStream *st;

AVPacket pkt1, *pkt;

.......

for (i = 0; i < ic->nb_streams; i++) {

const AVCodec *codec;

AVDictionary *thread_opt = NULL;

st = ic->streams[i];

// For video or subtitle streams, set the codec time base

if (st->codec->codec_type == AVMEDIA_TYPE_VIDEO ||

st->codec->codec_type == AVMEDIA_TYPE_SUBTITLE) {

/* if (!st->time_base.num)

st->time_base = */

if (!st->codec->time_base.num)

st->codec->time_base = st->time_base;

}

// only for the split stuff

if (!st->parser && !(ic->flags & AVFMT_FLAG_NOPARSE)) {

st->parser = av_parser_init(st->codec->codec_id);

if (st->parser) {

if (st->need_parsing == AVSTREAM_PARSE_HEADERS) {

st->parser->flags |= PARSER_FLAG_COMPLETE_FRAMES;

} else if (st->need_parsing == AVSTREAM_PARSE_FULL_RAW) {

st->parser->flags |= PARSER_FLAG_USE_CODEC_TS;

}

} else if (st->need_parsing) {

av_log(ic, AV_LOG_VERBOSE, "parser not found for codec "

"%s, packets or times may be invalid.\n",

avcodec_get_name(st->codec->codec_id));

}

}

// Find the decoder

codec = find_decoder(ic, st, st->codec->codec_id);

/* Force thread count to 1 since the H.264 decoder will not extract

* SPS and PPS to extradata during multi-threaded decoding. */

av_dict_set(options ? &options[i] : &thread_opt, "threads", "1", 0);

if (ic->codec_whitelist)

av_dict_set(options ? &options[i] : &thread_opt, "codec_whitelist", ic->codec_whitelist, 0);

/* Ensure that subtitle_header is properly set. */

if (st->codec->codec_type == AVMEDIA_TYPE_SUBTITLE

&& codec && !st->codec->codec) {

if (avcodec_open2(st->codec, codec, options ? &options[i] : &thread_opt) < 0)

av_log(ic, AV_LOG_WARNING,

"Failed to open codec in av_find_stream_info\n");

}

// Open the decoder if the codec parameters are still unknown

if (!has_codec_parameters(st, NULL) && st->request_probe <= 0) {

if (codec && !st->codec->codec)

if (avcodec_open2(st->codec, codec, options ? &options[i] : &thread_opt) < 0)

av_log(ic, AV_LOG_WARNING,

"Failed to open codec in av_find_stream_info\n");

}

if (!options)

av_dict_free(&thread_opt);

}

.....

/* NOTE: A new stream can be added there if no header in file

* (AVFMTCTX_NOHEADER). */

ret = read_frame_internal(ic, &pkt1);

if (ret == AVERROR(EAGAIN))

continue;

if (ret < 0) {

/* EOF or error*/

break;

}

......

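// If the frame information is still missing, try decoding a frame. This is slow, so it is avoided where possible. If AV_CODEC_CAP_CHANNEL_CONF is set, one decode is forced to make sure the decoder's channel configuration is correct.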

try_decode_frame(ic, st, pkt, (options && i < orig_nb_streams) ? &options[i] : NULL);

....

// What follows closes the decoders and estimates the timing information

}

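Here avcodec_open2 initializes the AVCodecContext from an AVCodec, and the AVCodec is obtained through find_decoder.

read_frame_internal is what actually reads the frame data; both av_read_frame and avformat_find_stream_info obtain their packets through it. try_decode_frame is used when read_frame_internal alone does not yield the frame information that is needed. If the codec sets AV_CODEC_CAP_CHANNEL_CONF, try_decode_frame is forced in order to make sure the decoder's channel configuration is correct.

The full code is very long, so only selected parts are analyzed here. Source: https://www.ffmpeg.org/doxygen/2.8/libavformat_2utils_8c_source.html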

avcodec_find_decoder

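This function looks up the decoder that matches a codec ID. The decoders were already registered at startup (in av_register_all). The code is very simple: remap_deprecated_codec_id maps a deprecated codec ID to its current equivalent, and the encoder argument says whether to look for an encoder or a decoder.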
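The lookup walks a linked list and prefers a non-experimental match, keeping the first experimental one only as a fallback. The sketch below reproduces that control flow on a hypothetical DemoCodec type; it is an illustration of the pattern rather than the library's own data structures.

```c
#include <stddef.h>

/* Hypothetical stand-in for AVCodec with only the fields the lookup needs. */
typedef struct DemoCodec {
    const char *name;
    int id;
    int is_encoder;     /* 1 = encoder, 0 = decoder */
    int experimental;   /* plays the role of AV_CODEC_CAP_EXPERIMENTAL */
    struct DemoCodec *next;
} DemoCodec;

/* Return the first matching codec, remembering the first experimental
 * match and returning it only if nothing else is found. */
const DemoCodec *demo_find_encdec(const DemoCodec *first, int id, int encoder)
{
    const DemoCodec *experimental = NULL;

    for (const DemoCodec *p = first; p; p = p->next) {
        if ((encoder ? p->is_encoder : !p->is_encoder) && p->id == id) {
            if (p->experimental && !experimental)
                experimental = p;   /* remember it and keep looking */
            else
                return p;
        }
    }
    return experimental;
}
```

The practical effect is that registration order does not let an experimental codec shadow a stable one with the same ID.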

AVCodec *avcodec_find_decoder(enum AVCodecID id)

{

return find_encdec(id, 0);

}

static AVCodec *find_encdec(enum AVCodecID id, int encoder)

{

AVCodec *p, *experimental = NULL;

p = first_avcodec;

id= remap_deprecated_codec_id(id);

while (p) {

if ((encoder ? av_codec_is_encoder(p) : av_codec_is_decoder(p)) &&

p->id == id) {

if (p->capabilities & AV_CODEC_CAP_EXPERIMENTAL && !experimental) {

experimental = p;

} else

return p;

}

p = p->next;

}

return experimental;

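}

avcodec_open2

avcodec_open2 initializes the AVCodecContext using the AVCodec, that is, the decoder found in the previous step. Because avcodec_open2 is very long, we paste the code first and then summarize it.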

int attribute_align_arg avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options)

{

int ret = 0;

AVDictionary *tmp = NULL;

const AVPixFmtDescriptor *pixdesc;

// Check whether the codec is already open

if (avcodec_is_open(avctx))

return 0;

// Begin argument checks

if ((!codec && !avctx->codec)) {

av_log(avctx, AV_LOG_ERROR, "No codec provided to avcodec_open2()\n");

return AVERROR(EINVAL);

}

if ((codec && avctx->codec && codec != avctx->codec)) {

av_log(avctx, AV_LOG_ERROR, "This AVCodecContext was allocated for %s, ""but %s passed to avcodec_open2()\n", avctx->codec->name, codec->name);

return AVERROR(EINVAL);

}

if (!codec)

codec = avctx->codec;

if (avctx->extradata_size < 0 || avctx->extradata_size >= FF_MAX_EXTRADATA_SIZE)

return AVERROR(EINVAL);

// End argument checks

// Begin parameter setup

if (options)

av_dict_copy(&tmp, *options, 0);

ret = ff_lock_avcodec(avctx, codec);

if (ret < 0)

return ret;

avctx->internal = av_mallocz(sizeof(AVCodecInternal));

if (!avctx->internal) {

ret = AVERROR(ENOMEM);

goto end;

}

avctx->internal->pool = av_mallocz(sizeof(*avctx->internal->pool));

if (!avctx->internal->pool) {

ret = AVERROR(ENOMEM);

goto free_and_end;

}

avctx->internal->to_free = av_frame_alloc();

if (!avctx->internal->to_free) {

ret = AVERROR(ENOMEM);

goto free_and_end;

}

avctx->internal->buffer_frame = av_frame_alloc();

if (!avctx->internal->buffer_frame) {

ret = AVERROR(ENOMEM);

goto free_and_end;

}

avctx->internal->buffer_pkt = av_packet_alloc();

if (!avctx->internal->buffer_pkt) {

ret = AVERROR(ENOMEM);

goto free_and_end;

}

if (codec->priv_data_size > 0) {

if (!avctx->priv_data) {

avctx->priv_data = av_mallocz(codec->priv_data_size);

if (!avctx->priv_data) {

ret = AVERROR(ENOMEM);

goto end;

}

if (codec->priv_class) {

*(const AVClass **)avctx->priv_data = codec->priv_class;

av_opt_set_defaults(avctx->priv_data);

}

}

if (codec->priv_class && (ret = av_opt_set_dict(avctx->priv_data, &tmp)) < 0)

goto free_and_end;

} else {

avctx->priv_data = NULL;

}

if ((ret = av_opt_set_dict(avctx, &tmp)) < 0)

goto free_and_end;

if (avctx->codec_whitelist && av_match_list(codec->name, avctx->codec_whitelist, ',') <= 0) {

av_log(avctx, AV_LOG_ERROR, "Codec (%s) not on whitelist \'%s\'\n", codec->name, avctx->codec_whitelist);

ret = AVERROR(EINVAL);

goto free_and_end;

}

// only call ff_set_dimensions() for non H.264/VP6F/DXV codecs so as not to overwrite previously setup dimensions

if (!(avctx->coded_width && avctx->coded_height && avctx->width && avctx->height &&

(avctx->codec_id == AV_CODEC_ID_H264 || avctx->codec_id == AV_CODEC_ID_VP6F || avctx->codec_id == AV_CODEC_ID_DXV))) {

if (avctx->coded_width && avctx->coded_height)

ret = ff_set_dimensions(avctx, avctx->coded_width, avctx->coded_height);

else if (avctx->width && avctx->height)

ret = ff_set_dimensions(avctx, avctx->width, avctx->height);

if (ret < 0)

goto free_and_end;

}

if ((avctx->coded_width || avctx->coded_height || avctx->width || avctx->height)

&& ( av_image_check_size(avctx->coded_width, avctx->coded_height, 0, avctx) < 0

|| av_image_check_size(avctx->width, avctx->height, 0, avctx) < 0)) {

av_log(avctx, AV_LOG_WARNING, "Ignoring invalid width/height values\n");

ff_set_dimensions(avctx, 0, 0);

}

if (avctx->width > 0 && avctx->height > 0) {

if (av_image_check_sar(avctx->width, avctx->height,

avctx->sample_aspect_ratio) < 0) {

av_log(avctx, AV_LOG_WARNING, "ignoring invalid SAR: %u/%u\n",

avctx->sample_aspect_ratio.num,

avctx->sample_aspect_ratio.den);

avctx->sample_aspect_ratio = (AVRational){ 0, 1 };

}

}

/* if the decoder init function was already called previously,

* free the already allocated subtitle_header before overwriting it */

if (av_codec_is_decoder(codec))

av_freep(&avctx->subtitle_header);

if (avctx->channels > FF_SANE_NB_CHANNELS) {

ret = AVERROR(EINVAL);

goto free_and_end;

}

avctx->codec = codec;

if ((avctx->codec_type == AVMEDIA_TYPE_UNKNOWN || avctx->codec_type == codec->type) &&

avctx->codec_id == AV_CODEC_ID_NONE) {

avctx->codec_type = codec->type;

avctx->codec_id = codec->id;

}

if (avctx->codec_id != codec->id || (avctx->codec_type != codec->type

&& avctx->codec_type != AVMEDIA_TYPE_ATTACHMENT)) {

av_log(avctx, AV_LOG_ERROR, "Codec type or id mismatches\n");

ret = AVERROR(EINVAL);

goto free_and_end;

}

avctx->frame_number = 0;

avctx->codec_descriptor = avcodec_descriptor_get(avctx->codec_id);

if ((avctx->codec->capabilities & AV_CODEC_CAP_EXPERIMENTAL) &&

avctx->strict_std_compliance > FF_COMPLIANCE_EXPERIMENTAL) {

const char *codec_string = av_codec_is_encoder(codec) ? "encoder" : "decoder";

AVCodec *codec2;

av_log(avctx, AV_LOG_ERROR,

"The %s '%s' is experimental but experimental codecs are not enabled, "

"add '-strict %d' if you want to use it.\n",

codec_string, codec->name, FF_COMPLIANCE_EXPERIMENTAL);

codec2 = av_codec_is_encoder(codec) ? avcodec_find_encoder(codec->id) : avcodec_find_decoder(codec->id);

if (!(codec2->capabilities & AV_CODEC_CAP_EXPERIMENTAL))

av_log(avctx, AV_LOG_ERROR, "Alternatively use the non experimental %s '%s'.\n",

codec_string, codec2->name);

ret = AVERROR_EXPERIMENTAL;

goto free_and_end;

}

if (avctx->codec_type == AVMEDIA_TYPE_AUDIO &&

(!avctx->time_base.num || !avctx->time_base.den)) {

avctx->time_base.num = 1;

avctx->time_base.den = avctx->sample_rate;

}

if (!HAVE_THREADS)

av_log(avctx, AV_LOG_WARNING, "Warning: not compiled with thread support, using thread emulation\n");

if (CONFIG_FRAME_THREAD_ENCODER && av_codec_is_encoder(avctx->codec)) {

ff_unlock_avcodec(codec); //we will instantiate a few encoders thus kick the counter to prevent false detection of a problem

ret = ff_frame_thread_encoder_init(avctx, options ? *options : NULL);

ff_lock_avcodec(avctx, codec);

if (ret < 0)

goto free_and_end;

}

if (HAVE_THREADS

&& !(avctx->internal->frame_thread_encoder && (avctx->active_thread_type&FF_THREAD_FRAME))) {

ret = ff_thread_init(avctx);

if (ret < 0) {

goto free_and_end;

}

}

if (!HAVE_THREADS && !(codec->capabilities & AV_CODEC_CAP_AUTO_THREADS))

avctx->thread_count = 1;

if (avctx->codec->max_lowres < avctx->lowres || avctx->lowres < 0) {

av_log(avctx, AV_LOG_ERROR, "The maximum value for lowres supported by the decoder is %d\n",

avctx->codec->max_lowres);

ret = AVERROR(EINVAL);

goto free_and_end;

}

#if FF_API_VISMV

if (avctx->debug_mv)

av_log(avctx, AV_LOG_WARNING, "The 'vismv' option is deprecated, "

"see the codecview filter instead.\n");

#endif

if (av_codec_is_encoder(avctx->codec)) {

int i;

#if FF_API_CODED_FRAME

FF_DISABLE_DEPRECATION_WARNINGS

avctx->coded_frame = av_frame_alloc();

if (!avctx->coded_frame) {

ret = AVERROR(ENOMEM);

goto free_and_end;

}

FF_ENABLE_DEPRECATION_WARNINGS

#endif

if (avctx->time_base.num <= 0 || avctx->time_base.den <= 0) {

av_log(avctx, AV_LOG_ERROR, "The encoder timebase is not set.\n");

ret = AVERROR(EINVAL);

goto free_and_end;

}

if (avctx->codec->sample_fmts) {

for (i = 0; avctx->codec->sample_fmts[i] != AV_SAMPLE_FMT_NONE; i++) {

if (avctx->sample_fmt == avctx->codec->sample_fmts[i])

break;

if (avctx->channels == 1 &&

av_get_planar_sample_fmt(avctx->sample_fmt) ==

av_get_planar_sample_fmt(avctx->codec->sample_fmts[i])) {

avctx->sample_fmt = avctx->codec->sample_fmts[i];

break;

}

}

if (avctx->codec->sample_fmts[i] == AV_SAMPLE_FMT_NONE) {

char buf[128];

snprintf(buf, sizeof(buf), "%d", avctx->sample_fmt);

av_log(avctx, AV_LOG_ERROR, "Specified sample format %s is invalid or not supported\n",

(char *)av_x_if_null(av_get_sample_fmt_name(avctx->sample_fmt), buf));

ret = AVERROR(EINVAL);

goto free_and_end;

}

}

if (avctx->codec->pix_fmts) {

for (i = 0; avctx->codec->pix_fmts[i] != AV_PIX_FMT_NONE; i++)

if (avctx->pix_fmt == avctx->codec->pix_fmts[i])

break;

if (avctx->codec->pix_fmts[i] == AV_PIX_FMT_NONE

&& !((avctx->codec_id == AV_CODEC_ID_MJPEG || avctx->codec_id == AV_CODEC_ID_LJPEG)

&& avctx->strict_std_compliance <= FF_COMPLIANCE_UNOFFICIAL)) {

char buf[128];

snprintf(buf, sizeof(buf), "%d", avctx->pix_fmt);

av_log(avctx, AV_LOG_ERROR, "Specified pixel format %s is invalid or not supported\n",

(char *)av_x_if_null(av_get_pix_fmt_name(avctx->pix_fmt), buf));

ret = AVERROR(EINVAL);

goto free_and_end;

}

if (avctx->codec->pix_fmts[i] == AV_PIX_FMT_YUVJ420P ||

avctx->codec->pix_fmts[i] == AV_PIX_FMT_YUVJ411P ||

avctx->codec->pix_fmts[i] == AV_PIX_FMT_YUVJ422P ||

avctx->codec->pix_fmts[i] == AV_PIX_FMT_YUVJ440P ||

avctx->codec->pix_fmts[i] == AV_PIX_FMT_YUVJ444P)

avctx->color_range = AVCOL_RANGE_JPEG;

}

if (avctx->codec->supported_samplerates) {

for (i = 0; avctx->codec->supported_samplerates[i] != 0; i++)

if (avctx->sample_rate == avctx->codec->supported_samplerates[i])

break;

if (avctx->codec->supported_samplerates[i] == 0) {

av_log(avctx, AV_LOG_ERROR, "Specified sample rate %d is not supported\n",

avctx->sample_rate);

ret = AVERROR(EINVAL);

goto free_and_end;

}

}

if (avctx->sample_rate < 0) {

av_log(avctx, AV_LOG_ERROR, "Specified sample rate %d is not supported\n",

avctx->sample_rate);

ret = AVERROR(EINVAL);

goto free_and_end;

}

if (avctx->codec->channel_layouts) {

if (!avctx->channel_layout) {

av_log(avctx, AV_LOG_WARNING, "Channel layout not specified\n");

} else {

for (i = 0; avctx->codec->channel_layouts[i] != 0; i++)

if (avctx->channel_layout == avctx->codec->channel_layouts[i])

break;

if (avctx->codec->channel_layouts[i] == 0) {

char buf[512];

av_get_channel_layout_string(buf, sizeof(buf), -1, avctx->channel_layout);

av_log(avctx, AV_LOG_ERROR, "Specified channel layout '%s' is not supported\n", buf);

ret = AVERROR(EINVAL);

goto free_and_end;

}

}

}

if (avctx->channel_layout && avctx->channels) {

int channels = av_get_channel_layout_nb_channels(avctx->channel_layout);

if (channels != avctx->channels) {

char buf[512];

av_get_channel_layout_string(buf, sizeof(buf), -1, avctx->channel_layout);

av_log(avctx, AV_LOG_ERROR,

"Channel layout '%s' with %d channels does not match number of specified channels %d\n",

buf, channels, avctx->channels);

ret = AVERROR(EINVAL);

goto free_and_end;

}

} else if (avctx->channel_layout) {

avctx->channels = av_get_channel_layout_nb_channels(avctx->channel_layout);

}

if (avctx->channels < 0) {

av_log(avctx, AV_LOG_ERROR, "Specified number of channels %d is not supported\n",

avctx->channels);

ret = AVERROR(EINVAL);

goto free_and_end;

}

if(avctx->codec_type == AVMEDIA_TYPE_VIDEO) {

pixdesc = av_pix_fmt_desc_get(avctx->pix_fmt);

if ( avctx->bits_per_raw_sample < 0

|| (avctx->bits_per_raw_sample > 8 && pixdesc->comp[0].depth <= 8)) {

av_log(avctx, AV_LOG_WARNING, "Specified bit depth %d not possible with the specified pixel formats depth %d\n",

avctx->bits_per_raw_sample, pixdesc->comp[0].depth);

avctx->bits_per_raw_sample = pixdesc->comp[0].depth;

}

if (avctx->width <= 0 || avctx->height <= 0) {

av_log(avctx, AV_LOG_ERROR, "dimensions not set\n");

ret = AVERROR(EINVAL);

goto free_and_end;

}

}

if ( (avctx->codec_type == AVMEDIA_TYPE_VIDEO || avctx->codec_type == AVMEDIA_TYPE_AUDIO)

&& avctx->bit_rate>0 && avctx->bit_rate<1000) {

av_log(avctx, AV_LOG_WARNING, "Bitrate %"PRId64" is extremely low, maybe you mean %"PRId64"k\n", (int64_t)avctx->bit_rate, (int64_t)avctx->bit_rate);

}

if (!avctx->rc_initial_buffer_occupancy)

avctx->rc_initial_buffer_occupancy = avctx->rc_buffer_size * 3 / 4;

if (avctx->ticks_per_frame && avctx->time_base.num &&

avctx->ticks_per_frame > INT_MAX / avctx->time_base.num) {

av_log(avctx, AV_LOG_ERROR,

"ticks_per_frame %d too large for the timebase %d/%d.",

avctx->ticks_per_frame,

avctx->time_base.num,

avctx->time_base.den);

goto free_and_end;

}

if (avctx->hw_frames_ctx) {

AVHWFramesContext *frames_ctx = (AVHWFramesContext*)avctx->hw_frames_ctx->data;

if (frames_ctx->format != avctx->pix_fmt) {

av_log(avctx, AV_LOG_ERROR,

"Mismatching AVCodecContext.pix_fmt and AVHWFramesContext.format\n");

ret = AVERROR(EINVAL);

goto free_and_end;

}

}

}

avctx->pts_correction_num_faulty_pts =

avctx->pts_correction_num_faulty_dts = 0;

avctx->pts_correction_last_pts =

avctx->pts_correction_last_dts = INT64_MIN;

if ( !CONFIG_GRAY && avctx->flags & AV_CODEC_FLAG_GRAY

&& avctx->codec_descriptor->type == AVMEDIA_TYPE_VIDEO)

av_log(avctx, AV_LOG_WARNING,

"gray decoding requested but not enabled at configuration time\n");

// End parameter setup

if ( avctx->codec->init && (!(avctx->active_thread_type&FF_THREAD_FRAME)

|| avctx->internal->frame_thread_encoder)) {

ret = avctx->codec->init(avctx);

if (ret < 0) {

goto free_and_end;

}

}

ret=0;

#if FF_API_AUDIOENC_DELAY

if (av_codec_is_encoder(avctx->codec))

avctx->delay = avctx->initial_padding;

#endif

if (av_codec_is_decoder(avctx->codec)) {

if (!avctx->bit_rate)

avctx->bit_rate = get_bit_rate(avctx);

/* validate channel layout from the decoder */

if (avctx->channel_layout) {

int channels = av_get_channel_layout_nb_channels(avctx->channel_layout);

if (!avctx->channels)

avctx->channels = channels;

else if (channels != avctx->channels) {

char buf[512];

av_get_channel_layout_string(buf, sizeof(buf), -1, avctx->channel_layout);

av_log(avctx, AV_LOG_WARNING,

"Channel layout '%s' with %d channels does not match specified number of channels %d: "

"ignoring specified channel layout\n",

buf, channels, avctx->channels);

avctx->channel_layout = 0;

}

}

if (avctx->channels && avctx->channels < 0 ||

avctx->channels > FF_SANE_NB_CHANNELS) {

ret = AVERROR(EINVAL);

goto free_and_end;

}

if (avctx->sub_charenc) {

if (avctx->codec_type != AVMEDIA_TYPE_SUBTITLE) {

av_log(avctx, AV_LOG_ERROR, "Character encoding is only "

"supported with subtitles codecs\n");

ret = AVERROR(EINVAL);

goto free_and_end;

} else if (avctx->codec_descriptor->props & AV_CODEC_PROP_BITMAP_SUB) {

av_log(avctx, AV_LOG_WARNING, "Codec '%s' is bitmap-based, "

"subtitles character encoding will be ignored\n",

avctx->codec_descriptor->name);

avctx->sub_charenc_mode = FF_SUB_CHARENC_MODE_DO_NOTHING;

} else {

/* input character encoding is set for a text based subtitle

* codec at this point */

if (avctx->sub_charenc_mode == FF_SUB_CHARENC_MODE_AUTOMATIC)

avctx->sub_charenc_mode = FF_SUB_CHARENC_MODE_PRE_DECODER;

if (avctx->sub_charenc_mode == FF_SUB_CHARENC_MODE_PRE_DECODER) {

#if CONFIG_ICONV

iconv_t cd = iconv_open("UTF-8", avctx->sub_charenc);

if (cd == (iconv_t)-1) {

ret = AVERROR(errno);

av_log(avctx, AV_LOG_ERROR, "Unable to open iconv context "

"with input character encoding \"%s\"\n", avctx->sub_charenc);

goto free_and_end;

}

iconv_close(cd);

#else

av_log(avctx, AV_LOG_ERROR, "Character encoding subtitles "

"conversion needs a libavcodec built with iconv support "

"for this codec\n");

ret = AVERROR(ENOSYS);

goto free_and_end;

#endif

}

}

}

#if FF_API_AVCTX_TIMEBASE

if (avctx->framerate.num > 0 && avctx->framerate.den > 0)

avctx->time_base = av_inv_q(av_mul_q(avctx->framerate, (AVRational){avctx->ticks_per_frame, 1}));

#endif

}

if (codec->priv_data_size > 0 && avctx->priv_data && codec->priv_class) {

av_assert0(*(const AVClass **)avctx->priv_data == codec->priv_class);

}

end:

ff_unlock_avcodec(codec);

if (options) {

av_dict_free(options);

*options = tmp;

}

return ret;

free_and_end:

if (avctx->codec &&

(avctx->codec->caps_internal & FF_CODEC_CAP_INIT_CLEANUP))

avctx->codec->close(avctx);

if (codec->priv_class && codec->priv_data_size)

av_opt_free(avctx->priv_data);

av_opt_free(avctx);

#if FF_API_CODED_FRAME

FF_DISABLE_DEPRECATION_WARNINGS

av_frame_free(&avctx->coded_frame);

FF_ENABLE_DEPRECATION_WARNINGS

#endif

av_dict_free(&tmp);

av_freep(&avctx->priv_data);

if (avctx->internal) {

av_packet_free(&avctx->internal->buffer_pkt);

av_frame_free(&avctx->internal->buffer_frame);

av_frame_free(&avctx->internal->to_free);

av_freep(&avctx->internal->pool);

}

av_freep(&avctx->internal);

avctx->codec = NULL;

goto end;

}

The code is very long and verbose. In summary, it does three things: 1. parameter checks; 2. parameter setup; 3. codec initialization.
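The three phases, together with the goto-based cleanup the function uses on failure, can be condensed into a small sketch. Everything here is hypothetical (DemoCodecDesc, DemoContext, demo_open, and the two init callbacks); it only illustrates the check, set up, initialize pattern and is far simpler than the real function.

```c
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct DemoCodecDesc {
    const char *name;
    const int *sample_rates;      /* 0-terminated list, NULL means "any" */
    int (*init)(void *priv);      /* codec-specific initialization */
    size_t priv_size;             /* size of the private data to allocate */
} DemoCodecDesc;

typedef struct DemoContext {
    int sample_rate;
    void *priv_data;
    const DemoCodecDesc *codec;   /* non-NULL once the context is open */
} DemoContext;

/* Example init callbacks for the usage shown below the code. */
int demo_init_ok(void *priv)   { (void)priv; return 0; }
int demo_init_fail(void *priv) { (void)priv; return -EIO; }

int demo_open(DemoContext *ctx, const DemoCodecDesc *codec)
{
    int ret = 0, i;

    /* 1. Parameter checks */
    if (!ctx || !codec)
        return -EINVAL;
    if (ctx->codec)
        return 0;                 /* already open */
    if (codec->sample_rates) {
        for (i = 0; codec->sample_rates[i] != 0; i++)
            if (codec->sample_rates[i] == ctx->sample_rate)
                break;
        if (codec->sample_rates[i] == 0)
            return -EINVAL;       /* unsupported sample rate */
    }

    /* 2. Parameter setup */
    if (codec->priv_size > 0) {
        ctx->priv_data = calloc(1, codec->priv_size);
        if (!ctx->priv_data)
            return -ENOMEM;
    }
    ctx->codec = codec;

    /* 3. Codec initialization */
    if (codec->init && (ret = codec->init(ctx->priv_data)) < 0)
        goto free_and_end;
    return 0;

free_and_end:
    /* Undo everything so the context can be reused after a failure. */
    free(ctx->priv_data);
    ctx->priv_data = NULL;
    ctx->codec = NULL;
    return ret;
}
```

Opening a context with a supported sample rate and demo_init_ok succeeds and leaves ctx->codec set; with demo_init_fail the error code is propagated and the context is left exactly as it was before the call.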

av_read_frame

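av_read_frame returns the next frame of a stream. It returns what is stored in the file and does not check whether that data forms valid frames for the decoder. It splits the stored content into frames and returns one per call. To give the decoder as much information as possible, it does not discard invalid data between valid frames.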

int av_read_frame(AVFormatContext *s, AVPacket *pkt)

{

const int genpts = s->flags & AVFMT_FLAG_GENPTS;

int eof = 0;

int ret;

AVStream *st;

if (!genpts) {

ret = s->internal->packet_buffer

? read_from_packet_buffer(&s->internal->packet_buffer,

&s->internal->packet_buffer_end, pkt)

: read_frame_internal(s, pkt);

if (ret < 0)

return ret;

goto return_packet;

}

for (;;) {

AVPacketList *pktl = s->internal->packet_buffer;

if (pktl) {

AVPacket *next_pkt = &pktl->pkt;

if (next_pkt->dts != AV_NOPTS_VALUE) {

int wrap_bits = s->streams[next_pkt->stream_index]->pts_wrap_bits;

// last dts seen for this stream. if any of packets following

// current one had no dts, we will set this to AV_NOPTS_VALUE.

int64_t last_dts = next_pkt->dts;

while (pktl && next_pkt->pts == AV_NOPTS_VALUE) {

if (pktl->pkt.stream_index == next_pkt->stream_index &&

(av_compare_mod(next_pkt->dts, pktl->pkt.dts, 2LL << (wrap_bits - 1)) < 0)) {

if (av_compare_mod(pktl->pkt.pts, pktl->pkt.dts, 2LL << (wrap_bits - 1))) {

// not B-frame

next_pkt->pts = pktl->pkt.dts;

}

if (last_dts != AV_NOPTS_VALUE) {

// Once last dts was set to AV_NOPTS_VALUE, we don't change it.

last_dts = pktl->pkt.dts;

}

}

pktl = pktl->next;

}

if (eof && next_pkt->pts == AV_NOPTS_VALUE && last_dts != AV_NOPTS_VALUE) {

// Fixing the last reference frame had none pts issue (For MXF etc).

// We only do this when

// 1. eof.

// 2. we are not able to resolve a pts value for current packet.

// 3. the packets for this stream at the end of the files had valid dts.

next_pkt->pts = last_dts + next_pkt->duration;

}

pktl = s->internal->packet_buffer;

}

/* read packet from packet buffer, if there is data */

st = s->streams[next_pkt->stream_index];

if (!(next_pkt->pts == AV_NOPTS_VALUE && st->discard < AVDISCARD_ALL &&

next_pkt->dts != AV_NOPTS_VALUE && !eof)) {

ret = read_from_packet_buffer(&s->internal->packet_buffer,

&s->internal->packet_buffer_end, pkt);

goto return_packet;

}

}

ret = read_frame_internal(s, pkt);

if (ret < 0) {

if (pktl && ret != AVERROR(EAGAIN)) {

eof = 1;

continue;

} else

return ret;

}

if (av_dup_packet(add_to_pktbuf(&s->internal->packet_buffer, pkt,

&s->internal->packet_buffer_end)) < 0)

return AVERROR(ENOMEM);

}

return_packet:

st = s->streams[pkt->stream_index];

if ((s->iformat->flags & AVFMT_GENERIC_INDEX) && pkt->flags & AV_PKT_FLAG_KEY) {

ff_reduce_index(s, st->index);

av_add_index_entry(st, pkt->pos, pkt->dts, 0, 0, AVINDEX_KEYFRAME);

}

if (is_relative(pkt->dts))

pkt->dts -= RELATIVE_TS_BASE;

if (is_relative(pkt->pts))

pkt->pts -= RELATIVE_TS_BASE;

return ret;

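}

From the source of av_read_frame we can see that it first obtains an AVPacket either from the packet buffer, via read_from_packet_buffer, or from read_frame_internal. read_from_packet_buffer returns packets that have already been buffered, while read_frame_internal reads a new packet from the input when nothing is buffered. The for loop runs only when AVFMT_FLAG_GENPTS is set: it buffers packets so that a packet with a missing pts can have it filled in from the dts of later packets.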
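The "buffer first, otherwise read from the input" choice made at the top of av_read_frame can be sketched with a small packet queue. The types and functions below (DemoPacket, DemoReader, demo_read_frame, and so on) are hypothetical, and the "source" is just a counter that hands out increasing timestamps.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct DemoPacket {
    int stream_index;
    long pts;
} DemoPacket;

typedef struct DemoPacketList {
    DemoPacket pkt;
    struct DemoPacketList *next;
} DemoPacketList;

typedef struct DemoReader {
    DemoPacketList *buffer;      /* head of the buffered packets */
    DemoPacketList *buffer_end;  /* tail of the buffered packets */
    long next_pts;               /* fake demuxer state */
    int packets_left;            /* packets the fake input can still produce */
} DemoReader;

/* Append a copy of pkt to the tail of the buffer; 0 on success, -1 on failure. */
int demo_add_to_buffer(DemoReader *r, const DemoPacket *pkt)
{
    DemoPacketList *node = malloc(sizeof(*node));
    if (!node)
        return -1;
    node->pkt = *pkt;
    node->next = NULL;
    if (r->buffer_end)
        r->buffer_end->next = node;
    else
        r->buffer = node;
    r->buffer_end = node;
    return 0;
}

/* Pop the oldest buffered packet. The buffer must not be empty. */
static int demo_read_from_buffer(DemoReader *r, DemoPacket *out)
{
    DemoPacketList *node = r->buffer;
    assert(node != NULL);
    *out = node->pkt;
    r->buffer = node->next;
    if (!r->buffer)
        r->buffer_end = NULL;
    free(node);
    return 0;
}

/* Produce the next packet from the fake input, or -1 at end of input. */
static int demo_read_from_source(DemoReader *r, DemoPacket *out)
{
    if (r->packets_left <= 0)
        return -1;
    out->stream_index = 0;
    out->pts = r->next_pts++;
    r->packets_left--;
    return 0;
}

/* Buffered packets are served first; only then is the input read. */
int demo_read_frame(DemoReader *r, DemoPacket *out)
{
    return r->buffer ? demo_read_from_buffer(r, out)
                     : demo_read_from_source(r, out);
}
```

Serving the buffer first preserves packet order: anything that was read ahead earlier is handed to the caller before new data is pulled from the input.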

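avcodec_decode_video2 and avcodec_decode_audio4

These functions decode AVPacket data into an AVFrame, mainly using AVPacket.data and AVPacket.size. They are used together with av_read_frame: each AVPacket read by av_read_frame is decoded into an AVFrame by avcodec_decode_video2.

avcodec_decode_video2 does three main things: 1. splits the data; 2. decodes the data; 3. sets the timing information.

avcodec_decode_audio4 works much like avcodec_decode_video2.

The source is fairly long, so it is not reproduced here. You can browse it online at https://www.ffmpeg.org/doxygen/2.8/libavcodec_2utils_8c_source.html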

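avcodec_close() and avformat_close_input()

These functions are normally called when playback finishes, to release resources and free memory so that nothing leaks. They are not covered in detail here; refer to the source for more.

Summary

Following the order in which the FFmpeg APIs are called, we described what each API does and briefly analyzed its source. This gives a clear picture of what each step of the decoding process does, which makes targeted debugging and modification easier during development.

I had planned to cover the encoding process after this post, but since the encoding side of stream pushing has not been covered yet, encoding will come after stream pushing. The next chapter is about configuring the nginx server and nginx-rtmp-module. I have been studying machine learning lately, so updates to this series may be slow.

Finally, here is a diagram by Lei Xiaohua for easy reference.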
