Using LeNet-5 to implement handwriting recognition.

target image

notebook.ipynb

import cv2
import numpy as np
import matplotlib.pyplot as plt

# display plots in this notebook
%matplotlib inline

# set display defaults
plt.rcParams['figure.figsize'] = (10, 10) # large images
plt.rcParams['image.interpolation'] = 'nearest' # don't interpolate: show square pixels
plt.rcParams['image.cmap'] = 'gray' # use grayscale output rather than a (potentially misleading) color heatmap

Image Processing

filename = "../images/h1.jpg"
srcImg = cv2.imread(filename)
szImg = cv2.resize(srcImg, (640, 360))
grayImg = cv2.cvtColor(szImg, cv2.COLOR_BGR2GRAY)
blurImg = cv2.GaussianBlur(grayImg, (5, 5), 0)
binImg = cv2.adaptiveThreshold(blurImg, 255,
                               cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                               cv2.THRESH_BINARY_INV, 11, 2)
plt.imshow(binImg)

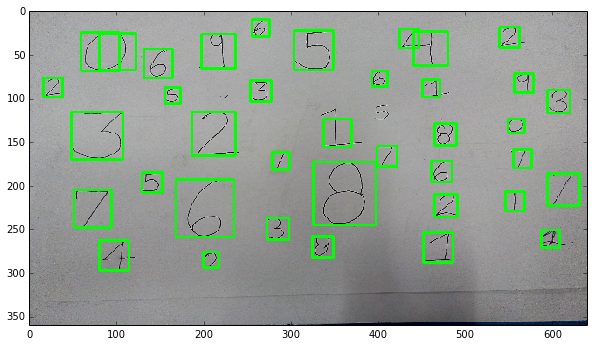
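The adaptive thresholding step above compares each pixel with a threshold derived from its own neighborhood, and `THRESH_BINARY_INV` turns dark strokes into white foreground. The sketch below illustrates that idea with NumPy only. It uses a plain box mean instead of the Gaussian-weighted window of `cv2.ADAPTIVE_THRESH_GAUSSIAN_C`, so its output will not match OpenCV exactly. The function name `adaptive_threshold_inv` and the toy image are assumptions made for illustration.

```python
import numpy as np

def adaptive_threshold_inv(gray, block_size=11, c=2, max_value=255):
    """Mean-based inverse adaptive threshold (illustrative, not OpenCV's exact kernel)."""
    k = block_size
    r = k // 2
    h, w = gray.shape
    # Replicate the border so every pixel has a full k x k neighborhood.
    padded = np.pad(gray.astype(np.float64), r, mode="edge")
    # Summed-area table with a leading row and column of zeros.
    sat = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    sat[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    # Sum of each k x k window, computed from four table lookups.
    window_sum = sat[k:k + h, k:k + w] - sat[:h, k:k + w] - sat[k:k + h, :w] + sat[:h, :w]
    local_mean = window_sum / (k * k)
    # Inverse binary rule: pixels brighter than (local mean - c) become 0, the rest max_value.
    return np.where(gray > local_mean - c, 0, max_value).astype(np.uint8)

# Toy image: light background with one dark vertical stroke.
img = np.full((40, 40), 200, dtype=np.uint8)
img[10:30, 18:21] = 50
mask = adaptive_threshold_inv(img)
```

Here `mask` is white only on the stroke, which is the property the contour search relies on.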

Find Contours

class DigitROI(object):
    def __init__(self, roi, x, y, num=0):
        self.roi = roi
        self.x = x
        self.y = y
        self.num = num

SZ = 28  # side length of the normalized digit patch
digit = []
frame = szImg.copy()
# [-2:] keeps (contours, hierarchy) whether findContours returns 2 or 3 values
contours, heirs = cv2.findContours(binImg.copy(), cv2.RETR_CCOMP, cv2.CHAIN_APPROX_SIMPLE)[-2:]
heirs = heirs[0] if heirs is not None else []
for cnt, heir in zip(contours, heirs):
    _, _, _, outer_i = heir
    if outer_i >= 0:  # the contour has a parent, i.e. it is a hole: skip it
        continue
    x, y, w, h = cv2.boundingRect(cnt)
    if not (16 <= h <= 80 and w <= 1.2 * h):
        continue
    # pad the box horizontally to make it square; integer division keeps the coordinates integral
    pad = max(h - w, 0)
    x, w = max(x - pad // 2, 0), w + pad
    cv2.rectangle(szImg, (x, y), (x + w, y + h), (0, 255, 0), 2)
    bin_roi = binImg[y:, x:][:h, :w]
    # scale and translate so the digit's centroid lands at the center of an SZ x SZ patch
    s = 1.5 * float(h) / SZ
    m = cv2.moments(bin_roi)
    c1 = np.float32([m['m10'], m['m01']]) / m['m00']
    c0 = np.float32([SZ / 2, SZ / 2])
    t = c1 - s * c0
    A = np.zeros((2, 3), np.float32)
    A[:, :2] = np.eye(2) * s
    A[:, 2] = t
    bin_norm = cv2.warpAffine(bin_roi, A, (SZ, SZ), flags=cv2.WARP_INVERSE_MAP | cv2.INTER_LINEAR)
    roi = DigitROI(bin_norm, x, y)
    digit.append(roi)
plt.imshow(szImg)
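The normalization at the end of the loop above builds a 2x3 matrix `A` that, with `WARP_INVERSE_MAP`, maps each output pixel back to a source pixel, so the center of the 28x28 patch should land on the digit's centroid. The NumPy-only sketch below recomputes the same matrix from image moments and checks that property. The helper name `normalization_matrix` and the toy ROI are assumptions for illustration. The moments are computed by hand here rather than with `cv2.moments`.

```python
import numpy as np

SZ = 28  # side of the normalized patch, as in the text

def normalization_matrix(bin_roi, sz=SZ):
    """Return the inverse-map affine matrix and the ROI centroid (x, y)."""
    h = bin_roi.shape[0]
    s = 1.5 * float(h) / sz  # one output pixel covers s source pixels
    ys, xs = np.nonzero(bin_roi)
    weights = bin_roi[ys, xs].astype(np.float64)
    m00 = weights.sum()
    # First-order moments divided by m00 give the intensity-weighted centroid.
    c1 = np.array([(xs * weights).sum(), (ys * weights).sum()]) / m00
    c0 = np.array([sz / 2, sz / 2])
    A = np.zeros((2, 3))
    A[:, :2] = np.eye(2) * s
    A[:, 2] = c1 - s * c0
    return A, c1

# Toy ROI: a white square whose centroid is at x = 24.5, y = 9.5.
roi = np.zeros((40, 40), dtype=np.uint8)
roi[5:15, 20:30] = 255
A, centroid = normalization_matrix(roi)

# Map the center of the output patch back into the source ROI.
mapped = A @ np.array([SZ / 2, SZ / 2, 1.0])
```

In this sketch `mapped` equals `centroid`, which is why every digit ends up centered in its patch regardless of where the stroke mass sits inside the bounding box.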

Visualize the ROIs extracted from the image

for i in range(min(4, len(digit))):  # show at most the first four ROIs
    plt.figure(figsize=(2, 2))
    plt.imshow(digit[i].roi)

Load net and set up input preprocessing

import caffe
caffe.set_mode_cpu()
# deploy.prototxt is used to deploy the network after training is finished (other deep learning frameworks also use it as the prototype when importing a caffemodel file)
model_def = 'mnist/deploy.prototxt'
model_weights = 'mnist/custom_net_iter_5000.caffemodel'
net = caffe.Net(model_def,      # defines the structure of the model
                model_weights,  # contains the trained weights
                caffe.TEST)     # use test mode (e.g., don't perform dropout)

- Converting OpenCV grayscale Mat to Caffe blob

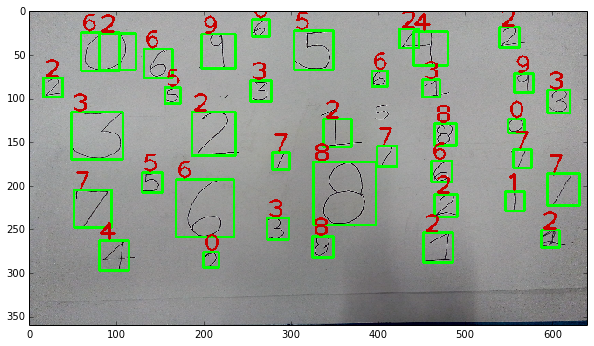
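The `transformer` object used in the loop that follows is not defined in the text, so its exact settings are unknown. As a rough illustration of what converting a grayscale patch into a Caffe-style blob involves, the NumPy-only sketch below scales a 28x28 `uint8` patch and reshapes it to the (batch, channel, height, width) layout. The helper name `to_caffe_blob` is hypothetical, and the 1/256 scale is an assumption borrowed from the Caffe MNIST LeNet example, not a value confirmed by this post.

```python
import numpy as np

def to_caffe_blob(gray_patch, scale=1.0 / 256):
    """Turn an H x W uint8 patch into a 1 x 1 x H x W float32 blob."""
    # scale is an assumed value; the network here may have been trained with another one.
    patch = np.asarray(gray_patch, dtype=np.float32) * scale
    # Add the batch and channel axes expected by an N x C x H x W input.
    return patch[np.newaxis, np.newaxis, :, :]

# Toy patch: a white vertical bar on a black background.
patch = np.zeros((28, 28), dtype=np.uint8)
patch[4:24, 12:16] = 255
blob = to_caffe_blob(patch)
```

Whatever preprocessing is used at inference time should match the one used during training, otherwise the predictions are unreliable.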

for i in range(len(digit)):
    transformed_image = transformer.preprocess('data', digit[i].roi)
    # copy the image data into the memory allocated for the net
    net.blobs['data'].data[...] = transformed_image
    output = net.forward()
    output_prob = output['loss'][0]
    digit[i].num = output_prob.argmax()
    cv2.putText(szImg, '%d' % digit[i].num, (digit[i].x, digit[i].y),
                cv2.FONT_HERSHEY_PLAIN, 2.0, (200, 0, 0), thickness=2)
plt.imshow(szImg)
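The classification loop above picks the digit with `argmax` over the network output. The sketch below shows that last step in isolation, assuming the `loss` output holds one score per digit class (0 to 9). The scores are made up for illustration and the `softmax` helper is not part of the original notebook.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    e = np.exp(shifted)
    return e / e.sum()

# Made-up scores for the ten digit classes.
scores = np.array([0.1, 0.3, 4.0, 0.2, 0.0, 0.5, 0.1, 1.2, 0.3, 0.4])
prob = softmax(scores)
digit_label = int(prob.argmax())  # index of the most probable class
```

Because softmax preserves the ordering of the scores, `argmax` gives the same label whether it is applied to raw scores or to probabilities.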

Useful websites

https://github.com/BVLC/caffe/wiki/Using-a-Trained-Network:-Deploy

https://ipython.org/ipython-doc/3/notebook/nbconvert.html
