一、背景知识

逻辑回归(LR)和广义线性模型(GLM)是统计学和机器学习中的重要工具,广泛用于解决预测和分类问题。逻辑回归专用于二分类问题,而广义线性模型则适用于更广泛的数据分布类型。

1.1 逻辑回归(LR)

逻辑回归是广义线性模型的一个特例,用于处理二分类问题,其中响应变量 Y Y Y 遵循二项分布,采用 Logit 链接函数。

特点:

- 响应变量: Y Y Y 表示二分类结果(0或1)。

- 链接函数:Logit 函数,表达式为:

log ( p 1 − p ) = β 0 + β 1 x 1 + ⋯ + β p x p \log\left(\frac{p}{1-p}\right) = \beta_0 + \beta_1 x_1 + \cdots + \beta_p x_p log(1−pp)=β0+β1x1+⋯+βpxp

其中 p p p 表示事件 Y = 1 Y=1 Y=1 的概率。

1.2 广义线性模型(GLM)

广义线性模型是一个统计框架,用于描述预测变量和响应变量之间的关系。响应变量的分布属于指数分布族。

主要组成:

- 随机分量:响应变量 Y Y Y 的概率分布,属于指数分布族。

- 系统分量:预测变量 X X X 的线性组合,表达式为 η = β 0 + β 1 x 1 + ⋯ + β p x p \eta = \beta_0 + \beta_1 x_1 + \cdots + \beta_p x_p η=β0+β1x1+⋯+βpxp。

- 链接函数:函数 g g g 将响应变量的期望 μ = E ( Y ) \mu = E(Y) μ=E(Y) 与系统分量 η \eta η 联系起来,表达式为 g ( μ ) = η g(\mu) = \eta g(μ)=η。

公式示例:

如果响应变量

Y

Y

Y 遵循泊松分布,适合的链接函数是自然对数:

log

(

μ

)

=

η

\log(\mu) = \eta

log(μ)=η

1.3 关系与区别

- 关系:逻辑回归是广义线性模型的一个特例,专门用于处理响应变量遵循二项分布的情况。

- 区别:GLM 提供了更多的灵活性,允许根据数据的具体分布选择不同的分布和链接函数。

1.4 应用

- 广义线性模型:可用于各种数据类型,例如,正态分布用于连续数据,泊松分布用于计数数据。

- 逻辑回归:广泛应用于医疗诊断、信用评分、市场营销响应预测等领域,特别适用于处理二分类问题。

二、隐语模型

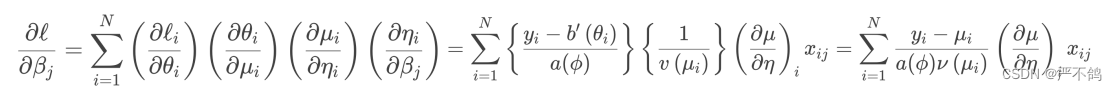

2.1 GLM模型参数估计

一阶优化器SGD

二阶优化器IRLS

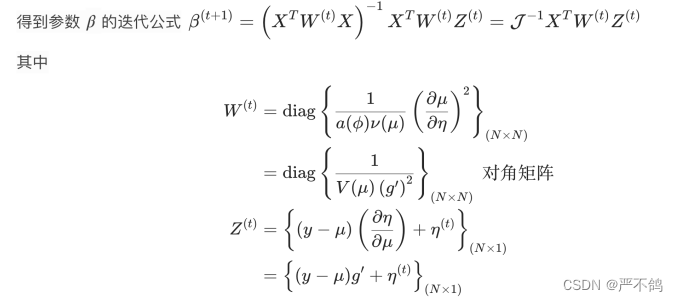

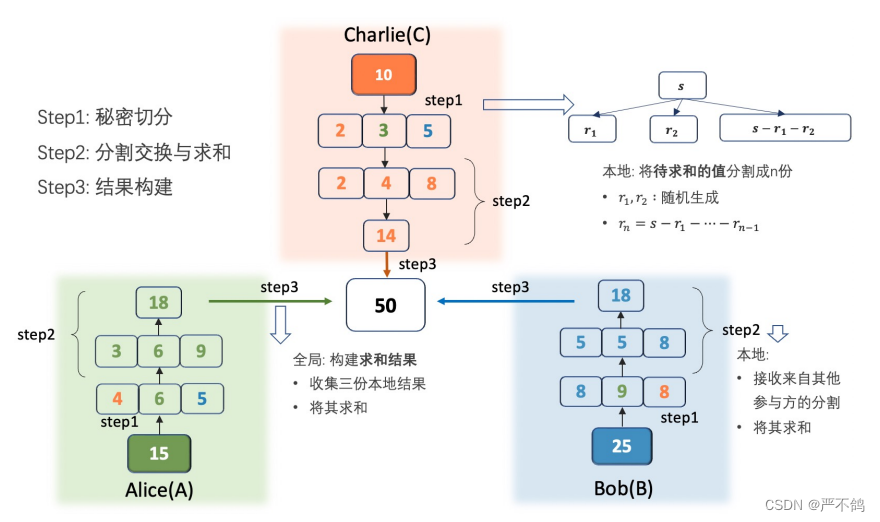

2.2 秘密分享

加法

乘法

正确性分析:

c

=

c

0

+

c

1

=

f

a

0

+

e

b

0

+

z

0

−

e

f

+

f

a

1

+

e

b

1

+

z

1

=

f

a

+

e

b

+

z

−

e

f

=

(

b

−

v

)

a

+

(

a

−

u

)

b

+

z

−

(

a

−

u

)

(

b

−

v

)

=

a

b

−

a

v

+

a

b

−

b

u

+

a

b

−

a

b

+

b

u

+

a

v

−

u

v

=

a

b

\begin{align*} c &= c_0 + c_1 \\ &= fa_0 + eb_0 + z_0 - ef + fa_1 + eb_1 + z_1 \\ &= fa + eb + z - ef \\ &= (b - v)a + (a - u)b+z-(a-u)(b-v)\\ &=ab-av+ab-bu+ab-ab+bu+av-uv\\ &=ab \end{align*}

c=c0+c1=fa0+eb0+z0−ef+fa1+eb1+z1=fa+eb+z−ef=(b−v)a+(a−u)b+z−(a−u)(b−v)=ab−av+ab−bu+ab−ab+bu+av−uv=ab

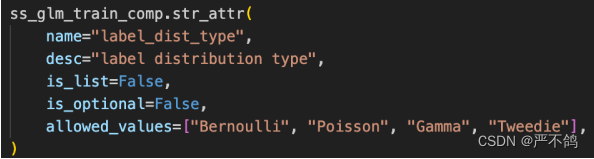

2.3 SSGLM/SSLR参数分析

1.选择分布类型

主要支持:伯努利分布,泊松分布,伽马分布,威迪分布。用于描述

Y

Y

Y的分布情况。

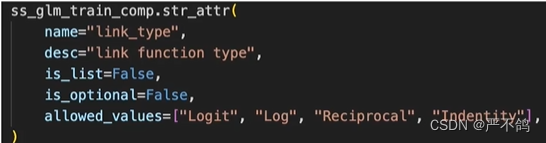

2.连接函数

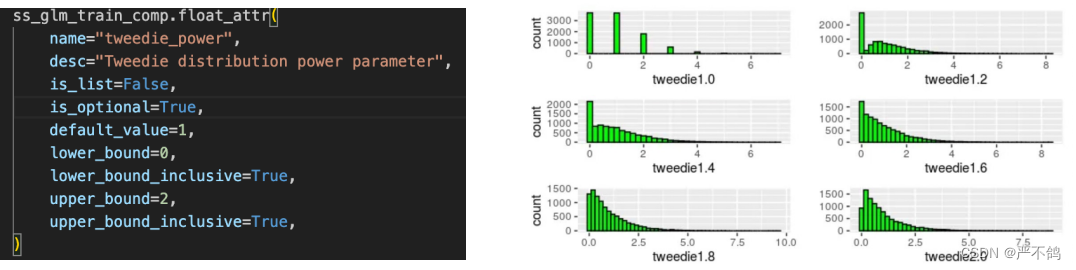

3.tweedie分布的P值

Tweedie分布是一种泊松分布和伽马分布的复合分布。当p=1,Tweedie就是Poisson分布,当p=2,Tweedie就是Gamma分布。1<p<2的时候就变成Poisson和Gamma的复合分布。

Tweedie分布是一种泊松分布和伽马分布的复合分布。当p=1,Tweedie就是Poisson分布,当p=2,Tweedie就是Gamma分布。1<p<2的时候就变成Poisson和Gamma的复合分布。

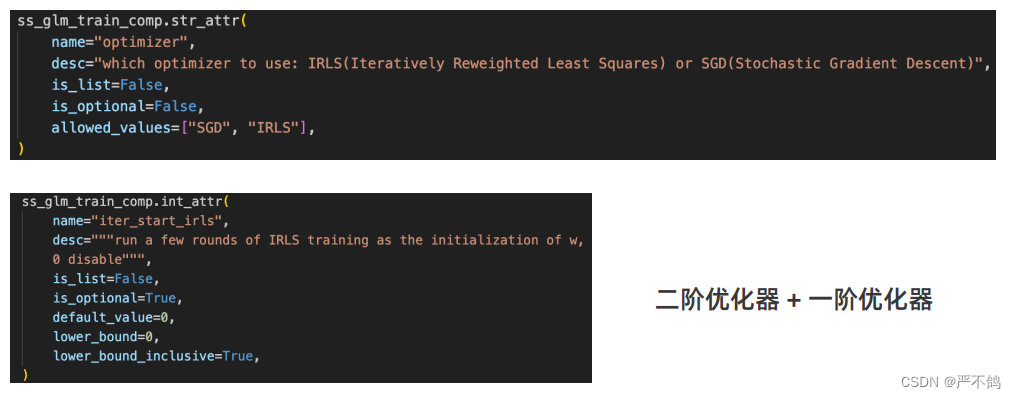

4.优化器

可以刚开始通过irls选定较好初始值,接着转SGD。

5.

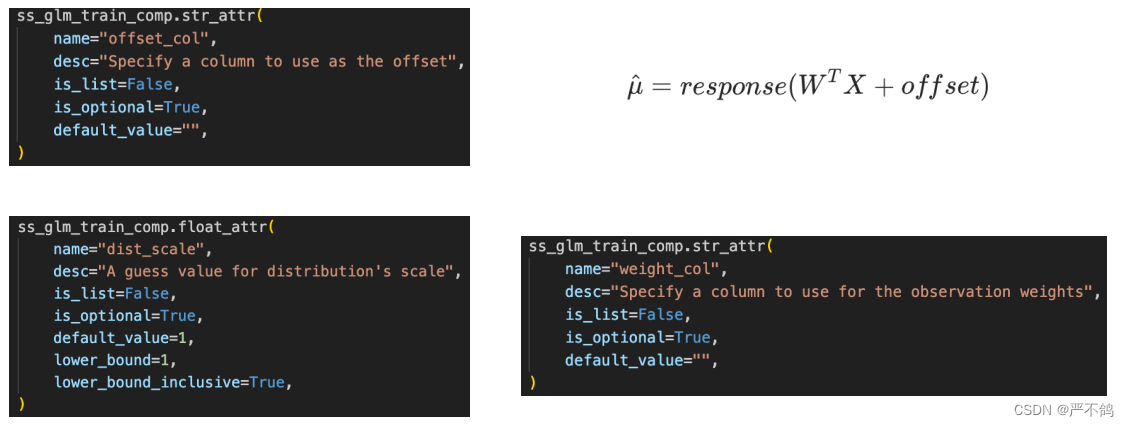

- o f f s e t _ c o l offset\_col offset_col:偏置

- d i s t _ s c a l e dist\_scale dist_scale:分布的方差

- w e i g h t _ c o l weight\_col weight_col:对训练的每一个数据进行加权

三、实践

要求:

环境

- wsl2

- ubuntu 20.04

- secretnote 1.3.0-amd64版本

定义加载mtpl2数据集,画图,评估函数

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from functools import partial

from sklearn.datasets import fetch_openml

from sklearn.metrics import (

mean_absolute_error,

mean_squared_error,

mean_tweedie_deviance,

)

def load_mtpl2(n_samples=None):

"""Fetch the French Motor Third-Party Liability Claims dataset.

Parameters

----------

n_samples: int, default=None

number of samples to select (for faster run time). Full dataset has

678013 samples.

"""

# freMTPL2freq dataset from https://www.openml.org/d/41214

df_freq = fetch_openml(data_id=41214, as_frame=True).data

df_freq["IDpol"] = df_freq["IDpol"].astype(int)

df_freq.set_index("IDpol", inplace=True)

# freMTPL2sev dataset from https://www.openml.org/d/41215

df_sev = fetch_openml(data_id=41215, as_frame=True).data

# sum ClaimAmount over identical IDs

df_sev = df_sev.groupby("IDpol").sum()

df = df_freq.join(df_sev, how="left")

df["ClaimAmount"].fillna(0, inplace=True)

# unquote string fields

for column_name in df.columns[df.dtypes.values == object]:

df[column_name] = df[column_name].str.strip("'")

return df.iloc[:n_samples]

def plot_obs_pred(

df,

feature,

weight,

observed,

predicted,

y_label=None,

title=None,

ax=None,

fill_legend=False,

):

"""Plot observed and predicted - aggregated per feature level.

Parameters

----------

df : DataFrame

input data

feature: str

a column name of df for the feature to be plotted

weight : str

column name of df with the values of weights or exposure

observed : str

a column name of df with the observed target

predicted : DataFrame

a dataframe, with the same index as df, with the predicted target

fill_legend : bool, default=False

whether to show fill_between legend

"""

# aggregate observed and predicted variables by feature level

df_ = df.loc[:, [feature, weight]].copy()

df_["observed"] = df[observed] * df[weight]

df_["predicted"] = predicted * df[weight]

df_ = (

df_.groupby([feature])[[weight, "observed", "predicted"]]

.sum()

.assign(observed=lambda x: x["observed"] / x[weight])

.assign(predicted=lambda x: x["predicted"] / x[weight])

)

ax = df_.loc[:, ["observed", "predicted"]].plot(style=".", ax=ax)

y_max = df_.loc[:, ["observed", "predicted"]].values.max() * 0.8

p2 = ax.fill_between(

df_.index,

0,

y_max * df_[weight] / df_[weight].values.max(),

color="g",

alpha=0.1,

)

if fill_legend:

ax.legend([p2], ["{} distribution".format(feature)])

ax.set(

ylabel=y_label if y_label is not None else None,

title=title if title is not None else "Train: Observed vs Predicted",

)

def score_estimator(

estimator,

X_train,

X_test,

df_train,

df_test,

target,

weights,

tweedie_powers=None,

):

"""Evaluate an estimator on train and test sets with different metrics"""

metrics = [

("D² explained", None), # Use default scorer if it exists

("mean abs. error", mean_absolute_error),

("mean squared error", mean_squared_error),

]

if tweedie_powers:

metrics += [

(

"mean Tweedie dev p={:.4f}".format(power),

partial(mean_tweedie_deviance, power=power),

)

for power in tweedie_powers

]

res = []

for subset_label, X, df in [

("train", X_train, df_train),

("test", X_test, df_test),

]:

y, _weights = df[target], df[weights]

for score_label, metric in metrics:

if isinstance(estimator, tuple) and len(estimator) == 2:

# Score the model consisting of the product of frequency and

# severity models.

est_freq, est_sev = estimator

y_pred = est_freq.predict(X) * est_sev.predict(X)

else:

y_pred = estimator.predict(X)

if metric is None:

if not hasattr(estimator, "score"):

continue

score = estimator.score(X, y, sample_weight=_weights)

else:

score = metric(y, y_pred, sample_weight=_weights)

res.append({"subset": subset_label, "metric": score_label, "score": score})

res = (

pd.DataFrame(res)

.set_index(["metric", "subset"])

.score.unstack(-1)

.round(4)

.loc[:, ["train", "test"]]

)

return res

基于sklearn的TweedieRegressor方法训练模型

from sklearn.compose import ColumnTransformer

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import (

FunctionTransformer,

KBinsDiscretizer,

OneHotEncoder,

StandardScaler,

)

df = load_mtpl2(n_samples=50000)

# Note: filter out claims with zero amount, as the severity model

# requires strictly positive target values.

df.loc[(df["ClaimAmount"] == 0) & (df["ClaimNb"] >= 1), "ClaimNb"] = 0

# Correct for unreasonable observations (that might be data error)

# and a few exceptionally large claim amounts

df["ClaimNb"] = df["ClaimNb"].clip(upper=4)

df["Exposure"] = df["Exposure"].clip(upper=1)

df["ClaimAmount"] = df["ClaimAmount"].clip(upper=200000)

log_scale_transformer = make_pipeline(

FunctionTransformer(func=np.log), StandardScaler()

)

column_trans = ColumnTransformer(

[

(

"binned_numeric",

KBinsDiscretizer(n_bins=10, subsample=int(2e5), random_state=0),

["VehAge", "DrivAge"],

),

(

"onehot_categorical",

OneHotEncoder(),

["VehBrand", "VehPower", "VehGas", "Region", "Area"],

),

("passthrough_numeric", "passthrough", ["BonusMalus"]),

("log_scaled_numeric", log_scale_transformer, ["Density"]),

],

remainder="drop",

)

X = column_trans.fit_transform(df)

# Insurances companies are interested in modeling the Pure Premium, that is

# the expected total claim amount per unit of exposure for each policyholder

# in their portfolio:

df["PurePremium"] = df["ClaimAmount"] / df["Exposure"]

# This can be indirectly approximated by a 2-step modeling: the product of the

# Frequency times the average claim amount per claim:

df["Frequency"] = df["ClaimNb"] / df["Exposure"]

df["AvgClaimAmount"] = df["ClaimAmount"] / np.fmax(df["ClaimNb"], 1)

with pd.option_context("display.max_columns", 15):

print(df[df.ClaimAmount > 0].head())

数据集分为训练集和测试集

from sklearn.model_selection import train_test_split

df_train, df_test, X_train, X_test = train_test_split(df, X, random_state=0)

开始拟合

from sklearn.linear_model import TweedieRegressor

# 创建 Tweedie 回归模型,用于预测纯保费

# power 参数指定 Tweedie 分布的指数(1.9 介于泊松分布和伽马分布之间)

# alpha 是正则化强度,solver 是求解器类型

#1.4版本之后才有solver

glm_pure_premium = TweedieRegressor(power=1.9, alpha=0.1, max_iter=10000)

# 使用训练集数据训练模型

# X_train 是特征矩阵,df_train["PurePremium"] 是目标变量(纯保费)

# sample_weight 参数指定每个样本的权重,这里使用的是曝光量

glm_pure_premium.fit(

X_train, df_train["PurePremium"], sample_weight=df_train["Exposure"]

)

# 定义不同的 Tweedie power 值,用于评估模型

tweedie_powers = [1.5, 1.7, 1.8, 1.9, 1.99, 1.999, 1.9999]

# 评估 Tweedie 回归模型在训练集和测试集上的表现

# 使用不同的评估指标,包括 Tweedie deviance

scores_glm_pure_premium = score_estimator(

glm_pure_premium,

X_train,

X_test,

df_train,

df_test,

target="PurePremium",

weights="Exposure",

tweedie_powers=tweedie_powers,

)

# 将评估结果合并为一个 DataFrame

scores = pd.concat(

[scores_glm_pure_premium],

axis=1,

sort=True,

keys=("TweedieRegressor"),

)

# 打印模型评估结果

print("Evaluation of the Product Model and the Tweedie Regressor on target PurePremium")

with pd.option_context("display.expand_frame_repr", False):

print(scores)

采用SS_GLM方法建模

n_iter = glm_pure_premium.n_iter_

import secretflow as sf

# Check the version of your SecretFlow

print('The version of SecretFlow: {}'.format(sf.__version__))

# In case you have a running secretflow runtime already.

sf.shutdown()

sf.init(['alice', 'bob'], address='local')

alice, bob = sf.PYU('alice'), sf.PYU('bob')

spu = sf.SPU(

sf.utils.testing.cluster_def(

['alice', 'bob'],

{"protocol": "REF2K", "field": "FM128", "fxp_fraction_bits": 40},

),

)

from secretflow.data import FedNdarray, PartitionWay

x, y = X_train, df_train["PurePremium"]

w = df_train["Exposure"]

def x_to_vdata(x):

x = x.todense()

v_data = FedNdarray(

partitions={

alice: alice(lambda: x[:, :15])(),

bob: bob(lambda: x[:, 15:])(),

},

partition_way=PartitionWay.VERTICAL,

)

return v_data

v_data = x_to_vdata(x)

label_data = FedNdarray(

partitions={alice: alice(lambda: y.values)()},

partition_way=PartitionWay.VERTICAL,

)

sample_weight = FedNdarray(

partitions={alice: alice(lambda: w.values)()},

partition_way=PartitionWay.VERTICAL,

)

from secretflow.device.driver import reveal

from secretflow.ml.linear.ss_glm.core import get_dist

dist = 'Tweedie' # 设定分布类型为 Tweedie

ss_glm_power = 1.9 # 设定 SS GLM 模型的 power 参数为 1.9

class DirectRevealModel:

def __init__(self, model) -> None:

self.model = model

def predict(self, X):

# 将输入数据 X 转换为分布式数据对象 vdata

vdata = x_to_vdata(X)

# 使用模型预测 vdata

y = self.model.predict(vdata)

# 对预测结果进行解密和重塑

return reveal(y).reshape((-1,))

def score(self, X, y, sample_weight=None):

y = y.values # 获取目标变量的值

y_pred = self.predict(X) # 使用模型预测 X

# 计算常数项

constant = np.mean(y)

if sample_weight is not None:

constant *= sample_weight.shape[0] / np.sum(sample_weight)

# 计算偏差(deviance)

# 在 deviance 计算中缺少的因子 2 将被抵消。

deviance = get_dist(dist, 1, ss_glm_power).deviance(y_pred, y, None)

deviance_null = get_dist(dist, 1, ss_glm_power).deviance(

np.average(y, weights=sample_weight) + np.zeros(y.shape), y, None

)

# 返回评分结果,评分公式如下

return 1 - (deviance + constant) / (deviance_null + constant)

import time

from secretflow.ml.linear.ss_glm import SSGLM

model = SSGLM(spu)

ss_glm_power = 1.9

start = time.time()

model.fit_irls(

v_data,

label_data,

None,

sample_weight,

n_iter,

'Log',

'Tweedie',

ss_glm_power,

l2_lambda=0.1,

# infeed_batch_size_limit=10000000,

# fraction_of_validation_set=0.2,

# stopping_rounds=2,

# stopping_metric='deviance',

# stopping_tolerance=0.001,

)

wrapped_model = DirectRevealModel(model)

tweedie_powers = [1.5, 1.7, 1.8, 1.9, 1.99, 1.999, 1.9999]

scores_ss_glm_pure_premium = score_estimator(

wrapped_model,

X_train,

X_test,

df_train,

df_test,

target="PurePremium",

weights="Exposure",

tweedie_powers=tweedie_powers,

)

scores = pd.concat(

[scores_glm_pure_premium, scores_ss_glm_pure_premium],

axis=1,

sort=True,

keys=("TweedieRegressor", "SSGLMRegressor"),

)

print("Evaluation of the Tweedie Regressor and SS GLM on target PurePremium")

with pd.option_context("display.expand_frame_repr", False):

print(scores)

res = []

for subset_label, x, df in [

("train", X_train, df_train),

("test", X_test, df_test),

]:

exposure = df["Exposure"].values

res.append(

{

"subset": subset_label,

"observed": df["ClaimAmount"].values.sum(),

"predicted, tweedie, power=%.2f"

% glm_pure_premium.power: np.sum(exposure * glm_pure_premium.predict(x)),

"predicted, ss glm, power=%.2f"

% ss_glm_power: np.sum(exposure * wrapped_model.predict(x)),

}

)

print(pd.DataFrame(res).set_index("subset").T)

对比分析

from sklearn.metrics import auc

def lorenz_curve(y_true, y_pred, exposure):

y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)

exposure = np.asarray(exposure)

# order samples by increasing predicted risk:

ranking = np.argsort(y_pred)

ranked_exposure = exposure[ranking]

ranked_pure_premium = y_true[ranking]

cumulated_claim_amount = np.cumsum(ranked_pure_premium * ranked_exposure)

cumulated_claim_amount /= cumulated_claim_amount[-1]

cumulated_samples = np.linspace(0, 1, len(cumulated_claim_amount))

return cumulated_samples, cumulated_claim_amount

fig, ax = plt.subplots(figsize=(8, 8))

y_pred_total_ss_glm = wrapped_model.predict(X_test).reshape((-1,))

y_pred_total = glm_pure_premium.predict(X_test)

for label, y_pred in [

("Compound Poisson Gamma", y_pred_total),

("Compound Poisson Gamma SS GLM", y_pred_total_ss_glm),

]:

ordered_samples, cum_claims = lorenz_curve(

df_test["PurePremium"], y_pred, df_test["Exposure"]

)

gini = 1 - 2 * auc(ordered_samples, cum_claims)

label += " (Gini index: {:.3f})".format(gini)

ax.plot(ordered_samples, cum_claims, linestyle="-", label=label)

# Oracle model: y_pred == y_test

ordered_samples, cum_claims = lorenz_curve(

df_test["PurePremium"], df_test["PurePremium"], df_test["Exposure"]

)

gini = 1 - 2 * auc(ordered_samples, cum_claims)

label = "Oracle (Gini index: {:.3f})".format(gini)

ax.plot(ordered_samples, cum_claims, linestyle="-.", color="gray", label=label)

# Random baseline

ax.plot([0, 1], [0, 1], linestyle="--", color="black", label="Random baseline")

ax.set(

title="Lorenz Curves",

xlabel="Fraction of policyholders\n(ordered by model from safest to riskiest)",

ylabel="Fraction of total claim amount",

)

ax.legend(loc="upper left")

plt.plot()

2149

2149

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?