An HBase index exists mainly to speed up access to table data in HBase and to avoid full table scans.

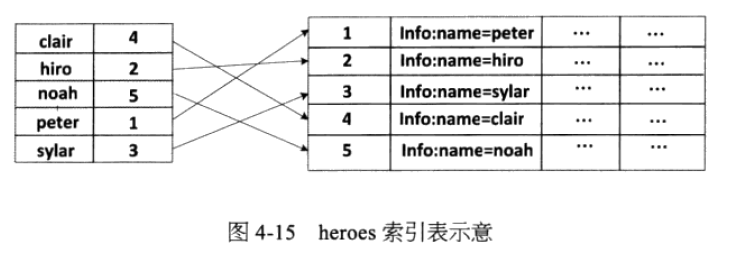

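Put simply: build a second table in which the frequently queried column's value becomes the row key and the original row key becomes the column qualifier. With that table you can quickly locate the rows that hold a given column value. That is the index.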

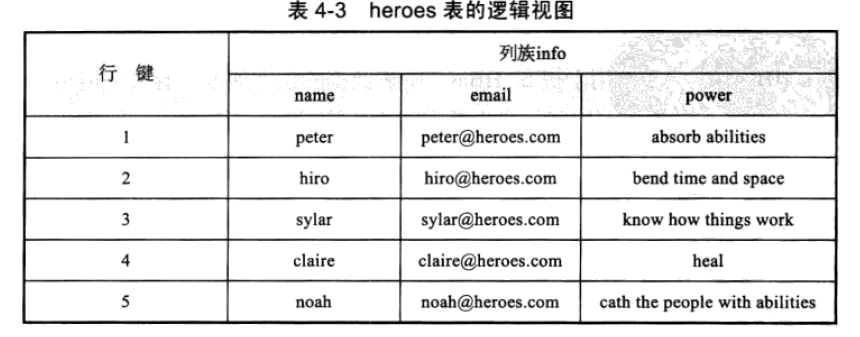

The figure above illustrates the idea.

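I wrote my own implementation, which differs a little from the figure: my columns are name, sex, and tel, but that is not the important part. Here is the process in detail: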

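1. In the TableMapper (map) phase:

We read the name and the row key of each row.

2. In the TableReducer (reduce) phase:

We use the name as the new row key and the original row key as the column, then insert the result into HBase.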
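The two phases above can be tried out without a cluster. The following is only a minimal in-memory sketch, not the HBase job itself: the class `IndexBuildSketch`, its method names, and the duplicate name in the sample data are assumptions made for illustration. A plain `Map` stands in for the base table, the map phase emits `(name, rowKey)` pairs, and the reduce phase groups them into one index row per name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for the map and reduce phases.
class IndexBuildSketch {

    // Map phase: for every base-table row, emit the pair (name, rowKey).
    static List<String[]> mapPhase(Map<String, String> rowKeyToName) {
        List<String[]> pairs = new ArrayList<>();
        for (Map.Entry<String, String> row : rowKeyToName.entrySet()) {
            pairs.add(new String[] {row.getValue(), row.getKey()});
        }
        return pairs;
    }

    // Reduce phase: group the pairs by name. Each name becomes one index row,
    // and every original row key becomes a column of that row.
    static Map<String, List<String>> reducePhase(List<String[]> pairs) {
        Map<String, List<String>> index = new TreeMap<>();
        for (String[] pair : pairs) {
            index.computeIfAbsent(pair[0], k -> new ArrayList<>()).add(pair[1]);
        }
        return index;
    }

    public static void main(String[] args) {
        Map<String, String> base = new TreeMap<>();
        base.put("0", "zhangsan0");
        base.put("1", "zhangsan1");
        base.put("2", "zhangsan0"); // assumed duplicate name, to show grouping

        Map<String, List<String>> index = reducePhase(mapPhase(base));
        System.out.println(index); // prints {zhangsan0=[0, 2], zhangsan1=[1]}
    }
}
```

The duplicate name is there on purpose: it shows why one index row may need to hold several original row keys.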

The code:

Creating the base table:

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class CreateHbaseTable {

        public static void main(String[] args) throws IOException {
            // Load the HBase configuration and point it at the ZooKeeper quorum
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.zookeeper.quorum", "192.168.61.128");

            TableName tn = TableName.valueOf("workerinfo");
            byte[] family = Bytes.toBytes("info");

            // try-with-resources closes the connection and the admin handle
            try (Connection con = ConnectionFactory.createConnection(conf);
                 Admin admin = con.getAdmin()) {

                // Describe the table: a single column family, "info"
                HTableDescriptor htd = new HTableDescriptor(tn);
                HColumnDescriptor hcd = new HColumnDescriptor("info");
                htd.addFamily(hcd);
                admin.createTable(htd); // create the table

                // Insert five sample rows with row keys "0" to "4"
                try (Table table = con.getTable(tn)) {
                    for (int i = 0; i < 5; i++) {
                        Put put = new Put(Bytes.toBytes(String.valueOf(i)));
                        put.addColumn(family, Bytes.toBytes("name"), Bytes.toBytes("zhangsan" + i))
                           .addColumn(family, Bytes.toBytes("sex"), Bytes.toBytes("man"))
                           .addColumn(family, Bytes.toBytes("tel"), Bytes.toBytes("0000" + i));
                        table.put(put);
                    }
                }
            }
        }
    }

Building the index:

    import java.io.IOException;
    import java.util.List;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.mapreduce.Job;

    public class CreateHbaseIndex2 {

        // Column family of the index table
        private static final byte[] INDEX_FAMILY = Bytes.toBytes("oldrowkey");

        public static void main(String[] args) throws Exception {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.zookeeper.quorum", "192.168.61.128");

            // Make sure the index table exists before the job writes to it
            checkTable(conf);

            Job job = Job.getInstance(conf, "HbaseIndex");
            job.setJarByClass(CreateHbaseIndex2.class);

            // Read only the column being indexed: info:name
            Scan scan = new Scan();
            scan.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"));

            TableMapReduceUtil.initTableMapperJob("workerinfo", scan, HbaseIndexMap.class,
                    ImmutableBytesWritable.class, ImmutableBytesWritable.class, job);
            TableMapReduceUtil.initTableReducerJob("HbaseIndex", HbaseIndexReduce.class, job);

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }

        public static class HbaseIndexMap
                extends TableMapper<ImmutableBytesWritable, ImmutableBytesWritable> {

            @Override
            protected void map(ImmutableBytesWritable key, Result value, Context context)
                    throws IOException, InterruptedException {
                List<Cell> cs = value.listCells();
                for (Cell cell : cs) {
                    // Emit the name as the key and the original row key as the value
                    context.write(new ImmutableBytesWritable(CellUtil.cloneValue(cell)),
                            new ImmutableBytesWritable(CellUtil.cloneRow(cell)));
                }
            }
        }

        public static class HbaseIndexReduce
                extends TableReducer<ImmutableBytesWritable, ImmutableBytesWritable, ImmutableBytesWritable> {

            @Override
            protected void reduce(ImmutableBytesWritable key, Iterable<ImmutableBytesWritable> values,
                    Context context) throws IOException, InterruptedException {
                // copyBytes() returns exactly the key's bytes rather than the whole backing array
                Put put = new Put(key.copyBytes());
                for (ImmutableBytesWritable v : values) {
                    byte[] oldRowKey = v.copyBytes();
                    // Use the original row key as the column qualifier, so that several
                    // rows sharing one name do not overwrite each other in a single cell
                    put.addColumn(INDEX_FAMILY, oldRowKey, oldRowKey);
                }
                context.write(key, put);
            }
        }

        private static void checkTable(Configuration conf) throws Exception {
            // try-with-resources closes the connection and the admin handle
            try (Connection con = ConnectionFactory.createConnection(conf);
                 Admin admin = con.getAdmin()) {
                TableName tn = TableName.valueOf("HbaseIndex");
                if (!admin.tableExists(tn)) {
                    HTableDescriptor htd = new HTableDescriptor(tn);
                    HColumnDescriptor hcd = new HColumnDescriptor(INDEX_FAMILY);
                    htd.addFamily(hcd);
                    admin.createTable(htd);
                    System.out.println("Index table did not exist; created it.");
                }
            }
        }
    }
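Once the index table exists, a query by name takes two steps: look the name up in the index to get the original row keys, then fetch those rows from the base table. The sketch below only illustrates that access pattern with in-memory maps. The class `IndexLookupSketch` and its helpers are hypothetical names, and no HBase client calls are involved. It uses the same five sample rows as the base table above and compares a full scan with an index lookup.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model: base table = rowKey -> (column -> value),
// index table = name -> original row keys.
class IndexLookupSketch {

    // Same five sample rows as the "workerinfo" table in this post.
    static Map<String, Map<String, String>> sampleBase() {
        Map<String, Map<String, String>> base = new TreeMap<>();
        for (int i = 0; i < 5; i++) {
            Map<String, String> row = new TreeMap<>();
            row.put("name", "zhangsan" + i);
            row.put("sex", "man");
            row.put("tel", "0000" + i);
            base.put(String.valueOf(i), row);
        }
        return base;
    }

    // Builds the index: the name becomes the key, the row keys become the entries.
    static Map<String, List<String>> buildIndex(Map<String, Map<String, String>> base) {
        Map<String, List<String>> index = new TreeMap<>();
        for (Map.Entry<String, Map<String, String>> e : base.entrySet()) {
            index.computeIfAbsent(e.getValue().get("name"), k -> new ArrayList<>())
                 .add(e.getKey());
        }
        return index;
    }

    // Without an index: visit every row and compare the name (a full table scan).
    static List<String> telByScan(Map<String, Map<String, String>> base, String name) {
        List<String> tels = new ArrayList<>();
        for (Map<String, String> row : base.values()) {
            if (name.equals(row.get("name"))) {
                tels.add(row.get("tel"));
            }
        }
        return tels;
    }

    // With an index: one lookup by name, then one lookup per matching row key.
    static List<String> telByIndex(Map<String, List<String>> index,
                                   Map<String, Map<String, String>> base, String name) {
        List<String> tels = new ArrayList<>();
        for (String rowKey : index.getOrDefault(name, new ArrayList<>())) {
            tels.add(base.get(rowKey).get("tel"));
        }
        return tels;
    }

    public static void main(String[] args) {
        Map<String, Map<String, String>> base = sampleBase();
        Map<String, List<String>> index = buildIndex(base);
        System.out.println(telByScan(base, "zhangsan3"));         // prints [00003]
        System.out.println(telByIndex(index, base, "zhangsan3")); // prints [00003]
    }
}
```

Both calls return the same answer. The difference is the work done: the scan touches every row, while the index path touches one index entry plus the matching rows. In real HBase the second path would presumably be a `Get` on the index table followed by `Get`s on the base table.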
