利用逻辑回归来实现一个神经元的过程

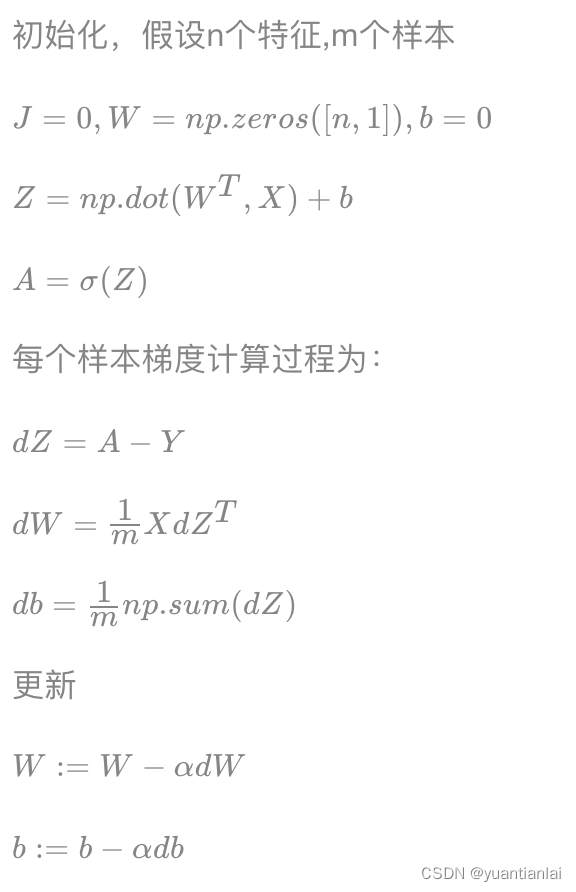

一,实现的过程逻辑如图:

二,实现逻辑和代码如下:

1,先初始化参数w和b

w初始化出样本个数的行数 m

b直接赋值 0

def basic_sigmoid(x):

"""

计算sigmoid函数

"""

s = 1 / (1 + np.exp(-x))

return s

def init_w_b(shape):

"""

初始化参数w和b

:param shape:

:return:

"""

w = np.zeros((shape, 1))

b = 0

return w, b2,梯度下降法获取出合适的w和b的值

主要公式:

dz = A - Y

dw = 1 / m * np.dot(X, dz.T)

db = 1 / m * np.sum(dz)

分别去求出每一次学习的w和b的导数

def propagate(w, b, X, Y):

# 前向传播

###开始

m = X.shape[0]

print('propagate w X 的形状: ',w.shape, X.shape)

# w(m,1) ,x(m,n)

A = basic_sigmoid(np.dot(w.T, X) + b)

# print('aaaa:',A)

print('propagate A Y 的形状: ', A.shape, Y.shape)

# 计算损失

# y (1,n)

cost = (-1 / m) * np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A))

###结束

# 反向传播

###开始

dz = A - Y

dw = 1 / m * np.dot(X, dz.T)

db = 1 / m * np.sum(dz)

###结束

grads = {

"dw": dw,

"db": db}

return grads, cost

def optimize(w, b, X, Y, num, learn_rate):

"""

优化参数,梯度下降

:param w: 权重

:param b: 偏置

:param X: 特征

:param Y: 目标

:param num: 迭代次数

:param learn_rate: 学习率

:return: 更新后的参数,损失结果

"""

global dw, db

costs = []

for i in range(num):

# 梯度更新参数

grads, cost = propagate(w, b, X, Y)

dw = grads["dw"]

db = grads["db"]

w = w - learn_rate * dw

b = b - learn_rate * db

if i % 100 == 0:

costs.append(cost)

print("损失结果 %i: %f" % (i, cost))

params = {"w": w,

"b": b}

grads = {

"dw": dw,

"db": db}

return params, grads, costs3,用训练好的w和b去预测结果

def predict(w, b, X):

"""

利用训练好的参数进行预测

:param w:

:param b:

:param X:

:return:

"""

m = X.shape[1]

Y_per = np.zeros((1, m))

print(w)

w = w.reshape(X.shape[0], 1)

print('转换后的w: ', w)

# 计算结果

A = basic_sigmoid(np.dot(w.T, X) + b)

for i in range(A.shape[1]):

if A[0, i] <= 0.5:

Y_per[0, i] = 0

else:

Y_per[0, i] = 1

return Y_per整体的步骤就是按照前面的逻辑图来实现参数w和b的求解

整体的代码逻辑就是:

def model(X_train, Y_train, X_test, Y_test, num=2000, learn_rate=0.5):

# 初始化参数

w, b = init_w_b(X_train.shape[0])

# print(w,b)

# 梯度下降

params, grads, costs = optimize(w, b, X_train, Y_train, num, learn_rate)

# 获取训练的参数

w = params['w']

b = params['b']

# 预测结果

Y_per_train = predict(w, b, X_train)

Y_per_test = predict(w, b, X_test)

print("训练集的正确率: {}".format(100 - np.mean(np.abs(Y_per_train - Y_train)) * 100))

print("测试集的正确率: {}".format(100 - np.mean(np.abs(Y_per_test - Y_test)) * 100))

print("costs: ",costs)三,训练数据

数据集可点击 数据集

def load_dataset():

"""

数据集

:return:

"""

train_dataset = h5py.File('datasets/train_catvnoncat.h5', "r")

train_set_x_orig = np.array(train_dataset["train_set_x"][:]) # your train set features

train_set_y_orig = np.array(train_dataset["train_set_y"][:]) # your train set labels

test_dataset = h5py.File('datasets/test_catvnoncat.h5', "r")

test_set_x_orig = np.array(test_dataset["test_set_x"][:]) # your test set features

test_set_y_orig = np.array(test_dataset["test_set_y"][:]) # your test set labels

classes = np.array(test_dataset["list_classes"][:]) # the list of classes

train_set_y_orig = train_set_y_orig.reshape((1, train_set_y_orig.shape[0]))

test_set_y_orig = test_set_y_orig.reshape((1, test_set_y_orig.shape[0]))

return train_set_x_orig, train_set_y_orig, test_set_x_orig, test_set_y_orig, classes

train_x, train_y, test_x, test_y, classes = load_dataset()

# print(train_x.shape,train_y.shape)

# print(test_x.shape,test_y.shape)

train_x = (train_x.reshape(train_x.shape[0], -1).T) / 255

test_x = (test_x.reshape(test_x.shape[0], -1).T) / 255

919

919

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?