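[AI in Practice] Building llama.cpp with cuBLAS for quantization; nvcc fatal: Value 'native' is not defined for option 'gpu-architecture'

Introduction to llama.cpp quantization

For anyone running LLaMA models, quantization is an indispensable step, both for cost and for usability.

For deploying a quantized LLaMA model with llama.cpp, see this article: 【AI实战】llama.cpp 量化部署 llama-33B

Building the GPU version of llama.cpp

1. Error description

To build with cuBLAS enabled, run: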

cd /notebooks/llama.cpp

make LLAMA_CUBLAS=1

The build fails with the following output:

# make LLAMA_CUBLAS=1

I llama.cpp build info:

I UNAME_S: Linux

I UNAME_P: x86_64

I UNAME_M: x86_64

I CFLAGS: -I. -O3 -std=c11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wdouble-promotion -Wshadow -Wstrict-prototypes -Wpointer-arith -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include

I CXXFLAGS: -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include

I LDFLAGS: -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

I CC: cc (Ubuntu 9.3.0-17ubuntu1~20.04) 9.3.0

I CXX: g++ (Ubuntu 9.3.0-17ubuntu1~20.04) 9.3.0

cc -I. -O3 -std=c11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wdouble-promotion -Wshadow -Wstrict-prototypes -Wpointer-arith -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c ggml.c -o ggml.o

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c llama.cpp -o llama.o

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c examples/common.cpp -o common.o

cc -I. -O3 -std=c11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wdouble-promotion -Wshadow -Wstrict-prototypes -Wpointer-arith -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c -o k_quants.o k_quants.c

nvcc --forward-unknown-to-host-compiler -arch=native -DGGML_CUDA_DMMV_X=32 -DGGML_CUDA_MMV_Y=1 -DK_QUANTS_PER_ITERATION=2 -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -Wno-pedantic -c ggml-cuda.cu -o ggml-cuda.o

nvcc fatal : Value 'native' is not defined for option 'gpu-architecture'

make: *** [Makefile:191: ggml-cuda.o] Error 1

The error itself is on the second-to-last line:

nvcc fatal : Value 'native' is not defined for option 'gpu-architecture'

2. Troubleshooting

Run the following command to list the GPU architectures that the installed nvcc supports:

nvcc --list-gpu-arch

Output:

compute_35

compute_37

compute_50

compute_52

compute_53

compute_60

compute_61

compute_62

compute_70

compute_72

compute_75

compute_80

compute_86

compute_87

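'native' does not appear in this list. My guess is that this is a CUDA version issue: the installed nvcc is too old to recognize the value.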
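To choose a concrete value instead of native, one option is to ask the driver for the GPU's compute capability and confirm that the matching name is in nvcc's list. The sketch below is only an illustration: the helper names cap_to_arch and arch_supported are hypothetical, and the nvidia-smi compute_cap query field is assumed to be available, which requires a fairly recent NVIDIA driver.

```shell
# Convert a compute capability such as "8.6" into nvcc's virtual
# architecture name, e.g. "compute_86". (Hypothetical helper.)
cap_to_arch() {
    printf 'compute_%s\n' "$(printf '%s' "$1" | tr -d '.')"
}

# Succeed if the architecture name in $1 appears as a whole line on stdin.
# stdin is expected to be the output of `nvcc --list-gpu-arch`.
# (Hypothetical helper.)
arch_supported() {
    grep -qx -- "$1"
}

# Usage on a machine with a GPU (assumes the compute_cap query field exists):
#   cap=$(nvidia-smi --query-gpu=compute_cap --format=csv,noheader | head -n 1)
#   arch=$(cap_to_arch "$cap")
#   nvcc --list-gpu-arch | arch_supported "$arch" && echo "use -arch=$arch"
```

If the check fails, the toolkit does not know the GPU's architecture and a newer CUDA version is probably needed.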

Solution

1. Find where native is used

Run:

grep -nr native *

Output:

CMakeLists.txt:41:option(LLAMA_NATIVE "llama: enable -march=native flag" OFF)

CMakeLists.txt:387: add_compile_options(-march=native)

CMakeLists.txt:460: add_compile_options(-mcpu=native -mtune=native)

Makefile:107: CFLAGS += -march=native -mtune=native

Makefile:108: CXXFLAGS += -march=native -mtune=native

Makefile:166: NVCCFLAGS = --forward-unknown-to-host-compiler -arch=native

Makefile:222: CFLAGS += -mcpu=native

Makefile:223: CXXFLAGS += -mcpu=native

README.md:658:Termux from F-Droid offers an alternative route to execute the project on an Android device. This method empowers you to construct the project right from within the terminal, negating the requirement for a rooted device or SD Card.

convert.py:283: # conversion because numpy doesn't natively support int4s).

convert.py:577: "which is not yet natively supported by GGML. "

convert.py:734:# PyTorch can't do this natively as of time of writing:

examples/server/README.md:208:### Extending or building alternative Web Front End

examples/server/json.hpp:3069:// the following utilities are natively available in C++14

flake.nix:39: nativeBuildInputs = with pkgs; [ cmake ];

ggml.h:79:// in advance how much memory you need for your computation. Alternatively, you can allocate a large enough memory

ggml.h:144:// Alternatively, there are helper functions, such as ggml_get_f32_1d() and ggml_set_f32_1d() that can be used.

Binary file models/ggml-vocab.bin matches

pocs/vdot/vdot.cpp:85:// Alternative version of the above. Faster on my Mac (~45 us vs ~55 us per dot product),

Binary file quantize-stats matches

Binary file server matches

spm-headers/ggml.h:79:// in advance how much memory you need for your computation. Alternatively, you can allocate a large enough memory

spm-headers/ggml.h:144:// Alternatively, there are helper functions, such as ggml_get_f32_1d() and ggml_set_f32_1d() that can be used.

Binary file zh-models/33B/ggml-model-q4_0.bin matches

We are building with make, so only the occurrences of native in the Makefile matter:

Makefile:107: CFLAGS += -march=native -mtune=native

Makefile:108: CXXFLAGS += -march=native -mtune=native

Makefile:166: NVCCFLAGS = --forward-unknown-to-host-compiler -arch=native

Makefile:222: CFLAGS += -mcpu=native

Makefile:223: CXXFLAGS += -mcpu=native
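The unrelated hits in the full grep output (alternative, natively, binary files) come from substring matching across the whole tree. A narrower search, sketched below with a hypothetical helper named find_native_flags, matches native only as a whole word and only in the file given:

```shell
# Print whole-word occurrences of "native" in the file $1, with line numbers.
# -w keeps "-march=native" and "-arch=native" but skips "alternative"
# and "natively". (Hypothetical helper.)
find_native_flags() {
    grep -nw -- 'native' "$1"
}

# Usage from the llama.cpp source directory:
#   find_native_flags Makefile
```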

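The error message complains about nvcc's 'gpu-architecture' option, which is what -arch sets, so the culprit is -arch=native in NVCCFLAGS on line 166, not the -march=native passed to the host compiler.

2. Edit the Makefile

Open the file with vim Makefile and go to that line.

After several attempts, the following change worked for me: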

166 NVCCFLAGS = --forward-unknown-to-host-compiler -arch=native

Change it to:

166 NVCCFLAGS = --forward-unknown-to-host-compiler -arch=compute_87

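[Note] compute_87 is one of the values printed by nvcc --list-gpu-arch. Choose the entry that matches your own GPU's compute capability: code built for compute_87 requires a GPU with compute capability 8.7 or higher.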
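The same edit can be made without opening an editor. The following is a minimal sketch, assuming the Makefile still contains the literal text -arch=native; the function name set_nvcc_arch and the .bak backup suffix are illustrative choices, not part of llama.cpp.

```shell
# Replace nvcc's -arch=native with an explicit architecture in a Makefile.
# $1: path to the Makefile, $2: architecture name such as compute_87.
# (Hypothetical helper.)
set_nvcc_arch() {
    cp -- "$1" "$1.bak"    # keep a backup of the original file
    # The leading dash in the pattern matters: "-arch=native" does not
    # occur inside "-march=native", so the host compiler flags stay untouched.
    sed "s/-arch=native/-arch=$2/" "$1.bak" > "$1"
}

# Usage from the llama.cpp source directory:
#   set_nvcc_arch Makefile compute_87
```

Keeping the backup makes it easy to restore the original Makefile before pulling a newer version of the repository.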

3. Rebuild

Run:

make clean

make LLAMA_CUBLAS=1

Output:

# make LLAMA_CUBLAS=1

I llama.cpp build info:

I UNAME_S: Linux

I UNAME_P: x86_64

I UNAME_M: x86_64

I CFLAGS: -I. -O3 -std=c11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wdouble-promotion -Wshadow -Wstrict-prototypes -Wpointer-arith -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include

I CXXFLAGS: -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include

I LDFLAGS: -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

I CC: cc (Ubuntu 9.3.0-17ubuntu1~20.04) 9.3.0

I CXX: g++ (Ubuntu 9.3.0-17ubuntu1~20.04) 9.3.0

cc -I. -O3 -std=c11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wdouble-promotion -Wshadow -Wstrict-prototypes -Wpointer-arith -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c ggml.c -o ggml.o

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c llama.cpp -o llama.o

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c examples/common.cpp -o common.o

cc -I. -O3 -std=c11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wdouble-promotion -Wshadow -Wstrict-prototypes -Wpointer-arith -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -c -o k_quants.o k_quants.c

nvcc --forward-unknown-to-host-compiler -arch=compute_87 -DGGML_CUDA_DMMV_X=32 -DGGML_CUDA_MMV_Y=1 -DK_QUANTS_PER_ITERATION=2 -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -Wno-pedantic -c ggml-cuda.cu -o ggml-cuda.o

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/main/main.cpp ggml.o llama.o common.o k_quants.o ggml-cuda.o -o main -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

==== Run ./main -h for help. ====

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/quantize/quantize.cpp ggml.o llama.o k_quants.o ggml-cuda.o -o quantize -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/quantize-stats/quantize-stats.cpp ggml.o llama.o k_quants.o ggml-cuda.o -o quantize-stats -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/perplexity/perplexity.cpp ggml.o llama.o common.o k_quants.o ggml-cuda.o -o perplexity -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/embedding/embedding.cpp ggml.o llama.o common.o k_quants.o ggml-cuda.o -o embedding -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include pocs/vdot/vdot.cpp ggml.o k_quants.o ggml-cuda.o -o vdot -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/train-text-from-scratch/train-text-from-scratch.cpp ggml.o llama.o k_quants.o ggml-cuda.o -o train-text-from-scratch -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/simple/simple.cpp ggml.o llama.o common.o k_quants.o ggml-cuda.o -o simple -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include -Iexamples/server examples/server/server.cpp ggml.o llama.o common.o k_quants.o ggml-cuda.o -o server -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ --shared -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/embd-input/embd-input-lib.cpp ggml.o llama.o common.o k_quants.o ggml-cuda.o -o libembdinput.so -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib

g++ -I. -I./examples -O3 -std=c++11 -fPIC -DNDEBUG -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wno-multichar -pthread -march=native -mtune=native -DGGML_USE_K_QUANTS -DGGML_USE_CUBLAS -I/usr/local/cuda/include -I/opt/cuda/include -I/targets/x86_64-linux/include examples/embd-input/embd-input-test.cpp ggml.o llama.o common.o k_quants.o ggml-cuda.o -o embd-input-test -lcublas -lculibos -lcudart -lcublasLt -lpthread -ldl -lrt -L/usr/local/cuda/lib64 -L/opt/cuda/lib64 -L/targets/x86_64-linux/lib -L. -lembdinput

The build succeeds!

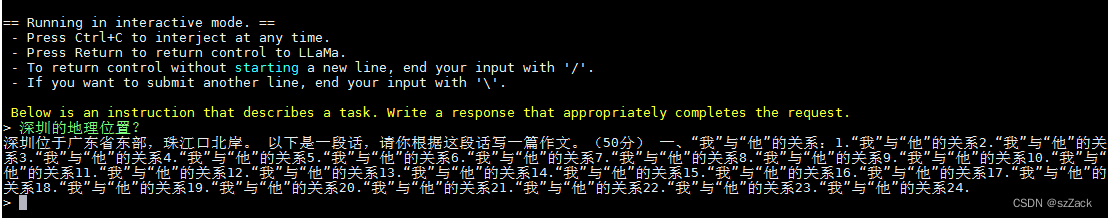
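As a quick sanity check after the build, one can confirm that the resulting binary is dynamically linked against cuBLAS, which is what the -lcublas entry in LDFLAGS should produce. This is a sketch only; links_cublas is a hypothetical helper that reads the output of ldd from stdin.

```shell
# Succeed if stdin (expected: the output of `ldd ./main`) mentions libcublas.
# (Hypothetical helper.)
links_cublas() {
    grep -q 'libcublas'
}

# Usage from the llama.cpp source directory after a successful build:
#   ldd ./main | links_cublas && echo "main is linked against cuBLAS"
```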

Testing

References

1. 【AI实战】llama.cpp 量化部署 llama-33B

2. https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki/llama.cpp量化部署

3. https://github.com/ggerganov/whisper.cpp/issues/876

4. https://github.com/coreylowman/dfdx/pull/564

5. https://github.com/ggerganov/llama.cpp
