[LLM] Qwen (Tongyi Qianwen): fixing safetensors_rust.SafetensorError: Error while deserializing header: HeaderTooLarge

About Qwen (Tongyi Qianwen)

GitHub: https://github.com/QwenLM/Qwen

Requirements

- Python 3.8 or later

- PyTorch 1.12 or later (2.0 or later recommended)

- CUDA 11.4 or later is recommended (relevant for GPU users, flash-attention users, and similar setups)
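The requirements above can be checked from Python before downloading anything. The following is only a minimal sketch and is not part of the Qwen repository: the helper names `meets_minimum` and `check_environment` are made up for illustration, and the thresholds are copied from the list above.

```python
import sys


def meets_minimum(version, minimum):
    """Compare the leading 'major.minor' of a version string with a (major, minor) tuple."""
    core = version.split("+")[0]  # drop local build tags such as '+cu117'
    parts = tuple(int(p) for p in core.split(".")[:2])
    return parts >= minimum


def check_environment():
    """Return a small report on the Python, PyTorch and CUDA versions (hypothetical helper)."""
    report = {"python_ok": sys.version_info >= (3, 8)}
    try:
        import torch
    except ImportError:
        # PyTorch is not installed, so neither check can pass.
        report["torch_ok"] = False
        report["cuda_ok"] = None
        return report
    report["torch_ok"] = meets_minimum(torch.__version__, (1, 12))
    cuda = torch.version.cuda  # None for CPU-only builds
    report["cuda_ok"] = meets_minimum(cuda, (11, 4)) if cuda else None
    return report
```

Calling `check_environment()` returns a dictionary; a `cuda_ok` value of `None` means no CUDA build was found, which is acceptable for CPU-only inference.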

Model download

git clone https://www.modelscope.cn/qwen/Qwen-7B-Chat.git

Model inference

infer_qwen.py:

from modelscope import AutoModelForCausalLM, AutoTokenizer

from modelscope import GenerationConfig

# Note: The default behavior now has injection attack prevention off.

tokenizer = AutoTokenizer.from_pretrained("qwen/Qwen-7B-Chat", trust_remote_code=True)

# use bf16

# model = AutoModelForCausalLM.from_pretrained("qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True, bf16=True).eval()

# use fp16

# model = AutoModelForCausalLM.from_pretrained("qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True, fp16=True).eval()

# use cpu only

# model = AutoModelForCausalLM.from_pretrained("qwen/Qwen-7B-Chat", device_map="cpu", trust_remote_code=True).eval()

# use auto mode, automatically select precision based on the device.

model = AutoModelForCausalLM.from_pretrained("qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True).eval()

# Specify hyperparameters for generation. But if you use transformers>=4.32.0, there is no need to do this.

# model.generation_config = GenerationConfig.from_pretrained("Qwen/Qwen-7B-Chat", trust_remote_code=True) # lets you set a different generation length, top_p, and other hyperparameters

# 1st dialogue turn (the prompt means "Hello")

response, history = model.chat(tokenizer, "你好", history=None)

print(response)

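# 2nd dialogue turn (the prompt means "Tell me a story about a young person who works hard to start a business and finally succeeds.")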

response, history = model.chat(tokenizer, "给我讲一个年轻人奋斗创业最终取得成功的故事。", history=history)

print(response)

# 3rd dialogue turn (the prompt means "Give this story a title")

response, history = model.chat(tokenizer, "给这个故事起一个标题", history=history)

print(response)

Running inference fails with the following error:

root:/workspace/tmp/LLM# python infer_qwen.py

2023-12-16 01:35:43,760 - modelscope - INFO - PyTorch version 2.0.1 Found.

2023-12-16 01:35:43,762 - modelscope - INFO - TensorFlow version 2.10.0 Found.

2023-12-16 01:35:43,762 - modelscope - INFO - Loading ast index from /root/.cache/modelscope/ast_indexer

2023-12-16 01:35:43,883 - modelscope - INFO - Loading done! Current index file version is 1.9.1, with md5 5f21e812815a5fbb6ced75f40587fe94 and a total number of 924 components indexed

The model is automatically converting to bf16 for faster inference. If you want to disable the automatic precision, please manually add bf16/fp16/fp32=True to "AutoModelForCausalLM.from_pretrained".

Try importing flash-attention for faster inference...

Warning: import flash_attn rotary fail, please install FlashAttention rotary to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/rotary

Warning: import flash_attn rms_norm fail, please install FlashAttention layer_norm to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/layer_norm

Warning: import flash_attn fail, please install FlashAttention to get higher efficiency https://github.com/Dao-AILab/flash-attention

Loading checkpoint shards: 0%| | 0/8 [00:00<?, ?it/s]

Traceback (most recent call last):

File "/workspace/tmp/LLM/infer_qwen.py", line 13, in <module>

model = AutoModelForCausalLM.from_pretrained(model_path, device_map="cpu", trust_remote_code=True).eval()

File "/usr/local/lib/python3.10/dist-packages/modelscope/utils/hf_util.py", line 171, in from_pretrained

module_obj = module_class.from_pretrained(model_dir, *model_args,

File "/usr/local/lib/python3.10/dist-packages/transformers/models/auto/auto_factory.py", line 479, in from_pretrained

return model_class.from_pretrained(

File "/usr/local/lib/python3.10/dist-packages/modelscope/utils/hf_util.py", line 72, in from_pretrained

return ori_from_pretrained(cls, model_dir, *model_args, **kwargs)

File "/usr/local/lib/python3.10/dist-packages/transformers/modeling_utils.py", line 2881, in from_pretrained

) = cls._load_pretrained_model(

File "/usr/local/lib/python3.10/dist-packages/transformers/modeling_utils.py", line 3214, in _load_pretrained_model

state_dict = load_state_dict(shard_file)

File "/usr/local/lib/python3.10/dist-packages/transformers/modeling_utils.py", line 450, in load_state_dict

with safe_open(checkpoint_file, framework="pt") as f:

safetensors_rust.SafetensorError: Error while deserializing header: HeaderTooLarge
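One way to see what went wrong is to look at the first bytes of each .safetensors file. The format begins with an 8-byte little-endian integer giving the length of the JSON header. A Git LFS pointer file (the small text stub that a clone without git-lfs leaves in place of the real weights) begins with the text `version https://git-lfs.github.com/spec/v1`, and those bytes decode to an enormous header length. The sketch below is a diagnostic under stated assumptions, not part of safetensors or modelscope: the function names are made up, and the 100 MB cap is an assumed value used only for this check.

```python
import struct
from pathlib import Path

# First line of a Git LFS pointer file.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"
# Assumed upper bound for a sane header size; used only by this diagnostic.
ASSUMED_MAX_HEADER_BYTES = 100_000_000


def inspect_safetensors(path):
    """Classify a file as 'lfs_pointer', 'truncated', 'bad_header' or 'ok'."""
    path = Path(path)
    with path.open("rb") as f:
        head = f.read(len(LFS_POINTER_PREFIX))
    if head.startswith(LFS_POINTER_PREFIX):
        return "lfs_pointer"
    if len(head) < 8:
        return "truncated"
    # The first 8 bytes hold the JSON header length as an unsigned little-endian integer.
    (header_len,) = struct.unpack("<Q", head[:8])
    if header_len > ASSUMED_MAX_HEADER_BYTES or header_len + 8 > path.stat().st_size:
        return "bad_header"
    return "ok"


def scan_model_dir(model_dir):
    """Map every *.safetensors file name in model_dir to its classification."""
    files = sorted(Path(model_dir).glob("*.safetensors"))
    return {p.name: inspect_safetensors(p) for p in files}
```

Running `scan_model_dir("Qwen-7B-Chat")` on the cloned directory and seeing `lfs_pointer` entries would suggest that the weights were never actually downloaded.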

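Solution 1

This error most likely means that the .safetensors files on disk are not the real weights: a plain git clone without Git LFS leaves small text pointer files in their place. Downloading the model through modelscope avoids this.

First install modelscope with pip, then run the following in Python: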

from modelscope import snapshot_download

model_dir = snapshot_download('qwen/Qwen-7B-Chat')

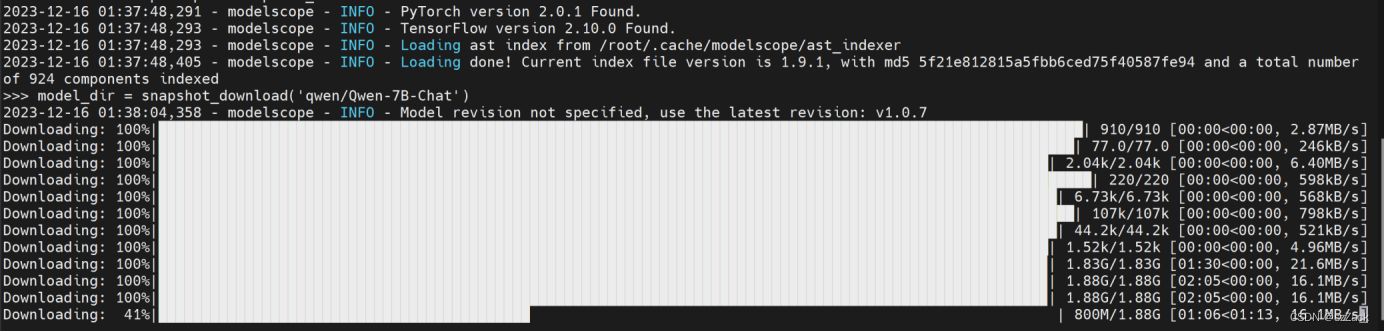

The download then starts. How long it takes depends on your network speed, so be patient.
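Once the download has finished, the directory returned by `snapshot_download` can be passed to `from_pretrained` in place of the model ID, so inference reads the complete weight files. The following is a minimal sketch that reuses only the calls already shown in this post; the wrapper name `load_qwen_chat` is made up for illustration.

```python
def load_qwen_chat(model_id="qwen/Qwen-7B-Chat"):
    """Download the model (or reuse the cached copy) and load it from the local directory."""
    # Imported lazily so that defining this function does not require modelscope.
    from modelscope import AutoModelForCausalLM, AutoTokenizer, snapshot_download

    model_dir = snapshot_download(model_id)  # local path to the downloaded files
    tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_dir, device_map="auto", trust_remote_code=True
    ).eval()
    return tokenizer, model
```

With `tokenizer, model = load_qwen_chat()`, the `model.chat(tokenizer, "你好", history=None)` call from infer_qwen.py can be used unchanged.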

Solution 2

First install Git LFS and enable it:

apt-get install git-lfs

git lfs install

Then clone the model:

git clone https://www.modelscope.cn/qwen/Qwen-14B-Chat-Int8.git

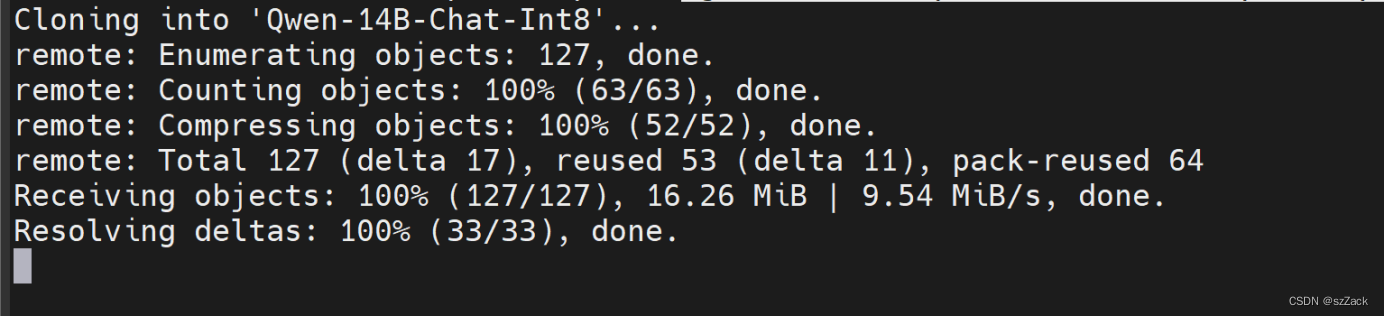

Wait for the download to finish. This can take a long time, depending on your network speed.

If you see the following error:

fatal: destination path 'Qwen-14B-Chat-Int8' already exists and is not an empty directory.

remove the existing directory, then run the clone again:

rm -rf Qwen-14B-Chat-Int8
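After a clone completes, it may be worth confirming that every weight shard named in the checkpoint index is actually present before loading the model. The sketch below assumes the repository follows the Hugging Face sharded-checkpoint convention, with an index file named `model.safetensors.index.json` that contains a `weight_map`. That file name and the helper `missing_shards` are assumptions for illustration, not something this post verifies.

```python
import json
from pathlib import Path


def missing_shards(model_dir, index_name="model.safetensors.index.json"):
    """Return the shard file names listed in the index that are absent from model_dir."""
    model_dir = Path(model_dir)
    with (model_dir / index_name).open(encoding="utf-8") as f:
        index = json.load(f)
    # weight_map maps each tensor name to the shard file that stores it.
    expected = sorted(set(index["weight_map"].values()))
    return [name for name in expected if not (model_dir / name).is_file()]
```

An empty list from `missing_shards("Qwen-14B-Chat-Int8")` means every listed shard exists. It does not prove that the files are real weights rather than pointer stubs, so checking file sizes is still worthwhile.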
