For ease of explanation, three servers are used as the example here. The host information is as follows:

| Hostname | IP |
| --- | --- |
| master | 192.168.116.31 |
| slave1 | 192.168.116.32 |
| slave2 | 192.168.116.33 |

1. Edit the hosts file to map hostnames to IP addresses

vim /etc/hosts

Add the following lines:

192.168.116.31 master

192.168.116.32 slave1

192.168.116.33 slave2
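A small Python sketch can render these entries from the host table and catch a copy-paste slip, such as two hostnames sharing one IP. The host/IP pairs come from the table at the top of this article. The script and its duplicate-IP check are illustrative additions, not part of the original procedure.

```python
"""Render /etc/hosts entries for the example cluster and sanity-check them.

Host/IP pairs are taken from the host table; the duplicate-IP check is an
illustrative addition, not part of the original procedure.
"""

CLUSTER_HOSTS = {
    "master": "192.168.116.31",
    "slave1": "192.168.116.32",
    "slave2": "192.168.116.33",
}


def render_hosts(hosts: dict[str, str]) -> str:
    """Return "IP hostname" lines, refusing to map two hostnames to one IP."""
    owner: dict[str, str] = {}
    for name, ip in hosts.items():
        if ip in owner:
            raise ValueError(f"{name} and {owner[ip]} both map to {ip}")
        owner[ip] = name
    return "".join(f"{ip} {name}\n" for name, ip in hosts.items())


if __name__ == "__main__":
    # Print the lines to append to /etc/hosts on every node.
    print(render_hosts(CLUSTER_HOSTS), end="")
```

The same output should be appended on master, slave1 and slave2 so that every node resolves every other node by name.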

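2. Configure passwordless login (see the Kerberos installation document for details)

Two passwordless-login methods are officially supported: SSH and Kerberos. Because SSH-based passwordless login conflicts with existing projects, the Kerberos method is used here.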

| Item | master | slave1 | slave2 |
| --- | --- | --- | --- |
| Configure hostname | √ | √ | √ |
| Install Kerberos | √ | √ | √ |
| Configure passwordless login | √ | √ | √ |
| Disable firewall | √ | √ | √ |
| Java jdk1.8 | √ | √ | √ |
| Zookeeper-3.5.8 | √ | √ | √ |
| Hadoop-3.1.4 | √ | √ | √ |
| Hbase-2.2.6 | √ | √ | √ |
| phoenix-5.0.0-HBase-2.0-bin | √ | | |

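3. Install ZooKeeper

3.1 Download ZooKeeper

Download ZooKeeper to your local machine, then send the package to the master host with scp. Installation and configuration are described below using master as the example. The steps are exactly the same on the other hosts.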

3.2 Extract ZooKeeper

In the server's root directory, extract zookeeper-3.5.8.tar.gz into /home:

tar -zxvf zookeeper-3.5.8.tar.gz -C /home

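3.3 Set environment variables

Add the following to /etc/profile:

export ZOOKEEPER=/home/zookeeper-3.5.8

export PATH=$PATH:$ZOOKEEPER/bin

Then run source /etc/profile to make the environment variables take effect.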

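3.4 Configure ZooKeeper

Create the data and log directories:

mkdir /home/zookeeper-3.5.8/data

mkdir /home/zookeeper-3.5.8/logs

Go to the conf directory and create zoo.cfg from the sample file:

cp zoo_sample.cfg zoo.cfg

The modified content is as follows: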

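You also need to create a file named myid in the data directory /home/zookeeper-3.5.8/data. Its content differs on each host: 0 on master, 1 on slave1 and 2 on slave2. Each value matches the x in the corresponding server.x entry.

The entries have the form server.A=B:C:D, where:

- A is a number identifying which server this is.
- B is the IP address of that server.
- C is the port the server uses to exchange messages with the cluster's leader.
- D is the port used for leader election.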
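The following Python sketch shows one way to generate the server.A=B:C:D lines and the matching myid values together, so the two cannot drift apart. It is an illustration under several assumptions, none of which come from the article:

- tickTime, initLimit and syncLimit use the zoo_sample.cfg defaults.
- 2888 and 3888 are the quorum and election ports.
- clientPort is 2181, the port referenced in core-site.xml and hbase-site.xml.

The ids follow the article's numbering (master=0, slave1=1, slave2=2). The ZooKeeper Administrator's Guide recommends ids between 1 and 255, which `first_id=1` produces.

```python
"""Sketch: generate zoo.cfg and per-host myid values for a 3-node ensemble.

Assumed (not from the article): zoo_sample.cfg timing defaults, quorum port
2888, election port 3888, clientPort 2181.
"""

HOSTS = ["master", "slave1", "slave2"]
ZK_HOME = "/home/zookeeper-3.5.8"


def assign_ids(hosts, first_id=0):
    """Map each host to its myid; A in server.A must equal this value."""
    return {host: first_id + i for i, host in enumerate(hosts)}


def render_zoo_cfg(ids, quorum_port=2888, election_port=3888, client_port=2181):
    """Build zoo.cfg text; each server.A=B:C:D line uses B=host, C/D=ports."""
    lines = [
        "tickTime=2000",
        "initLimit=10",
        "syncLimit=5",
        f"dataDir={ZK_HOME}/data",
        f"dataLogDir={ZK_HOME}/logs",
        f"clientPort={client_port}",
    ]
    for host, myid in ids.items():
        lines.append(f"server.{myid}={host}:{quorum_port}:{election_port}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    ids = assign_ids(HOSTS)
    print(render_zoo_cfg(ids), end="")
    for host, myid in ids.items():
        # Run on each host so that its myid matches its server.A entry.
        print(f"# on {host}: echo {myid} > {ZK_HOME}/data/myid")
```

The same zoo.cfg goes to every node; only the myid file differs per host.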

Use scp to send the configured ZooKeeper directory to the other nodes. Remember to change myid on each slave node to its corresponding value.

3.5 Start ZooKeeper

Run the following on every node. After step 3.3, zkServer.sh is on the PATH.

zkServer.sh start
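Once every node has been started, you can confirm the ensemble has formed by asking each server for its role with the srvr four-letter command. ZooKeeper 3.5 allows srvr by default: it is the only entry in the default 4lw.commands.whitelist. A healthy three-node ensemble reports one leader and two followers.

This Python sketch assumes clientPort 2181 and the hostnames from /etc/hosts.

```python
"""Sketch: query each ZooKeeper server's role with the "srvr" command.

Assumes clientPort 2181 and that "srvr" is whitelisted (the 3.5 default).
"""
import socket
from typing import Optional

HOSTS = ["master", "slave1", "slave2"]


def parse_mode(srvr_output: str) -> Optional[str]:
    """Return the value of the "Mode:" line (leader/follower/standalone)."""
    for line in srvr_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def query_srvr(host: str, port: int = 2181, timeout: float = 3.0) -> str:
    """Send "srvr" and read the reply until the server closes the socket."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


if __name__ == "__main__":
    for host in HOSTS:
        try:
            print(host, parse_mode(query_srvr(host)))
        except OSError as exc:
            print(host, "unreachable:", exc)
```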

4. Install Hadoop

4.1 Download the installation package, extract it to the target directory, and configure the environment variables.

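4.2 Configure Hadoop

Go into the Hadoop directory and create a few directories that the configuration files reference:

mkdir tmp

mkdir hdfs

mkdir hdfs/name

mkdir hdfs/data

The configuration files to modify are:

- core-site.xml
- hdfs-site.xml
- yarn-site.xml
- mapred-site.xml
- workers (called slaves in Hadoop 2.x)
- hadoop-env.sh
- yarn-env.sh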

Add the following line to both hadoop-env.sh and yarn-env.sh to configure the Java environment:

export JAVA_HOME=/home/jdk1.8.0_91

In core-site.xml, hdfs-site.xml, yarn-site.xml and mapred-site.xml, it is best not to use the default port numbers.

core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>4096</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop-3.1.4/tmp</value>
  </property>
  <!-- ZooKeeper addresses -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>master:2181,slave1:2181,slave2:2181</value>
  </property>
  <!-- Session timeout for Hadoop's connection to ZooKeeper -->
  <property>
    <name>ha.zookeeper.session-timeout.ms</name>
    <value>1000</value>
    <description>ms</description>
  </property>
</configuration>

hdfs-site.xml configuration:

<configuration>
  <property>
    <name>dfs.datanode.max.transfer.threads</name>
    <value>8192</value>
  </property>
  <property>
    <name>dfs.client.block.write.replace-datanode-on-failure.enable</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.client.block.write.replace-datanode-on-failure.policy</name>
    <value>NEVER</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/home/hadoop-3.1.4/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/hadoop-3.1.4/hdfs/data</value>
  </property>
  <property>
    <name>dfs.http.address</name>
    <value>master:50070</value>
  </property>
  <property>
    <name>dfs.secondary.http.address</name>
    <value>master:50090</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
</configuration>

yarn-site.xml configuration:

<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.application.classpath</name>
    <value>
      /home/hadoop-3.1.4/etc/*,
      /home/hadoop-3.1.4/etc/hadoop/*,
      /home/hadoop-3.1.4/lib/*,
      /home/hadoop-3.1.4/share/hadoop/common/*,
      /home/hadoop-3.1.4/share/hadoop/common/lib/*,
      /home/hadoop-3.1.4/share/hadoop/mapreduce/*,
      /home/hadoop-3.1.4/share/hadoop/mapreduce/lib/*,
      /home/hadoop-3.1.4/share/hadoop/hdfs/*,
      /home/hadoop-3.1.4/share/hadoop/hdfs/lib/*,
      /home/hadoop-3.1.4/share/hadoop/yarn/*,
      /home/hadoop-3.1.4/share/hadoop/yarn/lib/*,
      /home/hbase-2.2.6/lib/*
    </value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>master:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>master:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>master:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>master:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master:8088</value>
  </property>
</configuration>

mapred-site.xml configuration:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>master:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>master:19888</value>
  </property>
</configuration>
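Following the advice to avoid default ports, a Python sketch like this one can flag properties whose host:port value still ends in a stock Hadoop default. DEFAULT_PORTS covers only the YARN ResourceManager and MapReduce JobHistory defaults. The yarn-site.xml and mapred-site.xml shown above keep exactly these values. The HDFS web UI ports are left out on purpose, because their defaults changed between Hadoop 2 and 3.

```python
"""Sketch: flag *-site.xml properties that still use stock Hadoop default ports.

DEFAULT_PORTS only lists YARN ResourceManager and MapReduce JobHistory
defaults; extend it for other services as needed.
"""
import xml.etree.ElementTree as ET

DEFAULT_PORTS = {
    "yarn.resourcemanager.address": 8032,
    "yarn.resourcemanager.scheduler.address": 8030,
    "yarn.resourcemanager.resource-tracker.address": 8031,
    "yarn.resourcemanager.admin.address": 8033,
    "yarn.resourcemanager.webapp.address": 8088,
    "mapreduce.jobhistory.address": 10020,
    "mapreduce.jobhistory.webapp.address": 19888,
}


def read_properties(xml_text: str) -> dict[str, str]:
    """Parse a Hadoop <configuration> document into {name: value}."""
    props = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            props[name] = (prop.findtext("value") or "").strip()
    return props


def default_port_hits(props: dict[str, str]) -> list[str]:
    """Return property names whose "host:port" value uses the known default port."""
    hits = []
    for name, default in DEFAULT_PORTS.items():
        value = props.get(name)
        if value and value.rsplit(":", 1)[-1] == str(default):
            hits.append(name)
    return hits


if __name__ == "__main__":
    conf_dir = "/home/hadoop-3.1.4/etc/hadoop"  # assumed install location
    for fname in ("yarn-site.xml", "mapred-site.xml"):
        with open(f"{conf_dir}/{fname}") as fh:
            for name in default_port_hits(read_properties(fh.read())):
                print(f"{fname}: {name} uses a default port")
```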

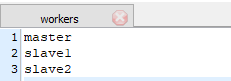

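Edit the workers file in the Hadoop configuration directory (etc/hadoop/workers; in Hadoop 2.x this file is called slaves) and add slave1 and slave2.

After modifying the configuration files, use scp to copy the hadoop directory on master to slave1 and slave2.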

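If SSH does not listen on the default port 22, add the following line to one of these files:

- Hadoop 2.x: the top of sbin/slaves.sh under the Hadoop directory.
- Hadoop 3.x: etc/hadoop/hadoop-env.sh.

`export HADOOP_SSH_OPTS="-p 33333"`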

4.3 Run Hadoop (note: the Hadoop commands are run on master only)

Add the following variables at the top of both start-dfs.sh and stop-dfs.sh:

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

Likewise, add the following at the top of both start-yarn.sh and stop-yarn.sh:

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root
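Editing four scripts by hand is easy to get wrong. The Python sketch below inserts the assignments right after each script's shebang line and skips any that are already present, so running it twice is harmless. The sbin location /home/hadoop-3.1.4/sbin is an assumption based on the commands used in this article.

```python
"""Sketch: put the *_USER variables at the top of Hadoop's start/stop scripts.

Assignments go right after the shebang; lines already present are skipped.
HADOOP_SBIN is an assumed install location.
"""
from pathlib import Path

HADOOP_SBIN = Path("/home/hadoop-3.1.4/sbin")
DFS_VARS = [
    "HDFS_DATANODE_USER=root",
    "HADOOP_SECURE_DN_USER=hdfs",
    "HDFS_NAMENODE_USER=root",
    "HDFS_SECONDARYNAMENODE_USER=root",
]
YARN_VARS = [
    "YARN_RESOURCEMANAGER_USER=root",
    "HADOOP_SECURE_DN_USER=yarn",
    "YARN_NODEMANAGER_USER=root",
]


def add_header_vars(script_text: str, assignments: list[str]) -> str:
    """Return script_text with missing assignments inserted after the shebang."""
    lines = script_text.splitlines(keepends=True)
    insert_at = 1 if lines and lines[0].startswith("#!") else 0
    if insert_at and not lines[0].endswith("\n"):
        lines[0] += "\n"  # keep the shebang on its own line
    present = {line.strip() for line in lines}
    new_lines = [f"{a}\n" for a in assignments if a not in present]
    return "".join(lines[:insert_at] + new_lines + lines[insert_at:])


def patch_script(path: Path, assignments: list[str]) -> None:
    """Rewrite one script file in place."""
    path.write_text(add_header_vars(path.read_text(), assignments))


if __name__ == "__main__":
    for name in ("start-dfs.sh", "stop-dfs.sh"):
        patch_script(HADOOP_SBIN / name, DFS_VARS)
    for name in ("start-yarn.sh", "stop-yarn.sh"):
        patch_script(HADOOP_SBIN / name, YARN_VARS)
```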

Start the NameNode. If this is the first time the NameNode is started, format it first:

/home/hadoop-3.1.4/bin/hdfs namenode -format

Start HDFS:

/home/hadoop-3.1.4/sbin/start-dfs.sh

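5. Install HBase

5.1 Download the installation package, extract it to the target directory, and configure the environment variables.

5.2 Configure HBase

The main changes are to three files in the conf directory: hbase-env.sh, hbase-site.xml and regionservers.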

hbase-env.sh

export HBASE_OPTS="$HBASE_OPTS -XX:+UseConcMarkSweepGC"

export JAVA_HOME=/home/jdk1.8.0_91

export HBASE_CLASSPATH=/home/hbase-2.2.6/lib

export HBASE_PID_DIR=/home/hbase-2.2.6/data

export HBASE_LOG_DIR=/home/hbase-2.2.6/logs

export HBASE_MANAGES_ZK=false

Create two directories, data and logs, under the HBase directory:

mkdir /home/hbase-2.2.6/data

mkdir /home/hbase-2.2.6/logs

hbase-site.xml

<configuration>
  <!-- Enable Phoenix secondary indexes in HBase -->
  <property>
    <name>hbase.regionserver.wal.codec</name>
    <value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value>
  </property>
  <property>
    <name>hbase.master.loadbalancer.class</name>
    <value>org.apache.phoenix.hbase.index.balancer.IndexLoadBalancer</value>
  </property>
  <!-- Namespace mapping -->
  <property>
    <name>phoenix.schema.isNamespaceMappingEnabled</name>
    <value>true</value>
  </property>
  <property>
    <name>phoenix.schema.mapSystemTablesToNamespace</name>
    <value>true</value>
  </property>
  <property>
    <name>phoenix.trace.frequency</name>
    <value>never</value>
  </property>
  <!--
  <property>
    <name>phoenix.trace.statsTableName</name>
    <value>phoenix</value>
  </property>
  -->
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.tmp.dir</name>
    <value>/home/hbase-2.2.6/data</value>
  </property>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>master,slave1,slave2</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/home/zookeeper-3.5.8/data</value>
    <description>property from zoo.cfg, the directory where the snapshot is stored</description>
  </property>
</configuration>
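Two things in this file need to agree with Hadoop's configuration:

- hbase.rootdir should live on the HDFS named by fs.defaultFS in core-site.xml.
- In this setup, HBase and Hadoop point at the same three-node ZooKeeper ensemble.

A Python sketch that cross-checks the two files. The file locations under /home/hadoop-3.1.4 and /home/hbase-2.2.6 are assumptions.

```python
"""Sketch: cross-check hbase-site.xml against core-site.xml.

Checks that hbase.rootdir is on the fs.defaultFS namenode and that both
files name the same ZooKeeper hosts. File paths in __main__ are assumptions.
"""
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def read_properties(xml_text: str) -> dict[str, str]:
    """Parse a Hadoop/HBase <configuration> document into {name: value}."""
    props = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            props[name] = (prop.findtext("value") or "").strip()
    return props


def zk_hosts(quorum: str) -> set[str]:
    """Reduce "host[:port],host[:port]" to a set of host names."""
    return {e.strip().split(":")[0] for e in quorum.split(",") if e.strip()}


def check_consistency(core_xml: str, hbase_xml: str) -> list[str]:
    """Return a list of problems; an empty list means both checks passed."""
    core, hbase = read_properties(core_xml), read_properties(hbase_xml)
    problems = []
    fs = urlparse(core.get("fs.defaultFS", ""))
    rootdir = urlparse(hbase.get("hbase.rootdir", ""))
    if (rootdir.scheme, rootdir.netloc) != (fs.scheme, fs.netloc):
        problems.append(
            f"hbase.rootdir {hbase.get('hbase.rootdir')!r} is not on "
            f"fs.defaultFS {core.get('fs.defaultFS')!r}"
        )
    hadoop_zk = zk_hosts(core.get("ha.zookeeper.quorum", ""))
    hbase_zk = zk_hosts(hbase.get("hbase.zookeeper.quorum", ""))
    if hadoop_zk and hadoop_zk != hbase_zk:
        problems.append(f"ZooKeeper hosts differ: {sorted(hadoop_zk)} vs {sorted(hbase_zk)}")
    return problems


if __name__ == "__main__":
    with open("/home/hadoop-3.1.4/etc/hadoop/core-site.xml") as f1, \
            open("/home/hbase-2.2.6/conf/hbase-site.xml") as f2:
        for line in check_consistency(f1.read(), f2.read()) or ["OK"]:
            print(line)
```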

Edit /home/hbase-2.2.6/conf/regionservers:

master

slave1

slave2

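When SSH does not use the default port 22, add the port option to the following scripts in the bin directory:

- hbase-cleanup.sh
- master-backup.sh
- regionservers.sh
- rolling-restart.sh
- shutdown_regionserver.rb
- zookeepers.sh

`HBASE_SSH_OPTS="-p 33333"`

Alternatively, set `export HBASE_SSH_OPTS="-p 33333"` once in conf/hbase-env.sh.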

5.3 Start HBase

/home/hbase-2.2.6/bin/start-hbase.sh

5.4 Verify that the installation succeeded

6. Install Phoenix

6.1 Download the installation package and extract it to the target directory.

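6.2 Copy the jar into HBase's lib directory

Copy phoenix-5.0.0-HBase-2.0-server.jar into /home/hbase-2.2.6/lib/ on every HBase node (master, slave1 and slave2). For example, for slave1:

scp phoenix-5.0.0-HBase-2.0-server.jar root@slave1:/home/hbase-2.2.6/lib/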
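Every HBase node needs the Phoenix server jar, so a short Python sketch can generate the scp command for each host. It assumes root access and, optionally, the non-default SSH port 33333 used in the examples above. Note that scp takes the port as -P (capital), unlike ssh's -p. By default the sketch only prints the commands.

```python
"""Sketch: copy the Phoenix server jar into HBase's lib directory on all nodes.

Assumes root SSH access; SSH_PORT=33333 mirrors the earlier examples and
should be None for the default port 22. Dry run prints commands only.
"""
import shlex
import subprocess

JAR = "phoenix-5.0.0-HBase-2.0-server.jar"
HBASE_LIB = "/home/hbase-2.2.6/lib/"
NODES = ["master", "slave1", "slave2"]
SSH_PORT = 33333


def scp_commands(jar, nodes, dest, port=None, user="root"):
    """Build one scp argv per node; scp expects the port via -P."""
    cmds = []
    for node in nodes:
        cmd = ["scp"]
        if port is not None:
            cmd += ["-P", str(port)]
        cmd += [jar, f"{user}@{node}:{dest}"]
        cmds.append(cmd)
    return cmds


def distribute(dry_run=True):
    """Print the commands, or run them and stop at the first failure."""
    for cmd in scp_commands(JAR, NODES, HBASE_LIB, SSH_PORT):
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    distribute(dry_run=True)
```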

6.3 Configure the Phoenix environment variables

export PHOENIX_HOME=/home/phoenix-5.0.0-HBase-2.0-bin/

export PATH=$PATH:$PHOENIX_HOME/bin

Modify hbase-site.xml in Phoenix's bin directory:

<configuration>
  <property>
    <name>phoenix.query.dateFormatTimeZone</name>
    <value>GMT+08:00</value>
  </property>
  <!-- Enable Phoenix secondary indexes in HBase -->
  <property>
    <name>hbase.regionserver.wal.codec</name>
    <value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value>
  </property>
  <property>
    <name>hbase.master.loadbalancer.class</name>
    <value>org.apache.phoenix.hbase.index.balancer.IndexLoadBalancer</value>
  </property>
  <!-- Namespace mapping -->
  <property>
    <name>phoenix.schema.isNamespaceMappingEnabled</name>
    <value>true</value>
  </property>
  <property>
    <name>phoenix.schema.mapSystemTablesToNamespace</name>
    <value>true</value>
  </property>
  <property>
    <name>phoenix.trace.frequency</name>
    <value>never</value>
  </property>
</configuration>

6.4 Restart HBase and ZooKeeper

6.5 Start Phoenix

/home/phoenix-5.0.0-HBase-2.0-bin/bin/sqlline.py master

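7. Issues encountered later

7.1 A Phoenix asynchronous index build job fails because of missing classes

Edit yarn-site.xml under Hadoop and add the following configuration: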

<property>
  <name>yarn.application.classpath</name>
  <value>
    /home/hadoop-3.1.4/etc/*,
    /home/hadoop-3.1.4/etc/hadoop/*,
    /home/hadoop-3.1.4/lib/*,
    /home/hadoop-3.1.4/share/hadoop/common/*,
    /home/hadoop-3.1.4/share/hadoop/common/lib/*,
    /home/hadoop-3.1.4/share/hadoop/mapreduce/*,
    /home/hadoop-3.1.4/share/hadoop/mapreduce/lib/*,
    /home/hadoop-3.1.4/share/hadoop/hdfs/*,
    /home/hadoop-3.1.4/share/hadoop/hdfs/lib/*,
    /home/hadoop-3.1.4/share/hadoop/yarn/*,
    /home/hadoop-3.1.4/share/hadoop/yarn/lib/*,
    /home/hbase-2.2.6/lib/*
  </value>
</property>
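The missing classes most likely come from the MapReduce job not seeing HBase on its classpath. Phoenix is affected too, because its server jar sits in HBase's lib directory after step 6.2. A Python sketch that derives every classpath entry from two install roots, so the Hadoop and HBase versions cannot drift apart. HADOOP_HOME and HBASE_HOME are assumptions matching the Hadoop 3.1.4 and HBase 2.2.6 used in this article.

```python
"""Sketch: build the yarn.application.classpath value from two install roots.

HADOOP_HOME / HBASE_HOME are assumed paths; the subdirectory list mirrors
the entries used in the yarn-site.xml property.
"""

HADOOP_HOME = "/home/hadoop-3.1.4"
HBASE_HOME = "/home/hbase-2.2.6"

HADOOP_SUBDIRS = [
    "etc/*", "etc/hadoop/*", "lib/*",
    "share/hadoop/common/*", "share/hadoop/common/lib/*",
    "share/hadoop/mapreduce/*", "share/hadoop/mapreduce/lib/*",
    "share/hadoop/hdfs/*", "share/hadoop/hdfs/lib/*",
    "share/hadoop/yarn/*", "share/hadoop/yarn/lib/*",
]


def yarn_classpath(hadoop_home: str, hbase_home: str) -> str:
    """Comma-join the Hadoop entries plus HBase's lib (holds the Phoenix jar)."""
    entries = [f"{hadoop_home}/{sub}" for sub in HADOOP_SUBDIRS]
    entries.append(f"{hbase_home}/lib/*")
    return ",".join(entries)


def as_property(value: str) -> str:
    """Wrap the classpath in a <property> element for yarn-site.xml."""
    return ("<property>\n  <name>yarn.application.classpath</name>\n"
            f"  <value>{value}</value>\n</property>")


if __name__ == "__main__":
    print(as_property(yarn_classpath(HADOOP_HOME, HBASE_HOME)))
```

After updating yarn-site.xml, copy it to every node before rerunning the index build.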
