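As a Java novice, I wanted to learn a bit about web scraping in Python. I'd heard that China Judgements Online (文书网, wenshu.court.gov.cn) has formidable anti-scraping defenses, so I went to give it a try.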

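Well then.

Whoa, what is this? It doesn't play fair.

First of all, the site lives up to the reputation government websites have for slow responses. With some 70 billion visits, plus bots hammering it around the clock, even normal browsing is painfully slow.

When in doubt, ask Baidu. Of the recent approaches posted online, the most common is still to reverse-engineer the POST parameters: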

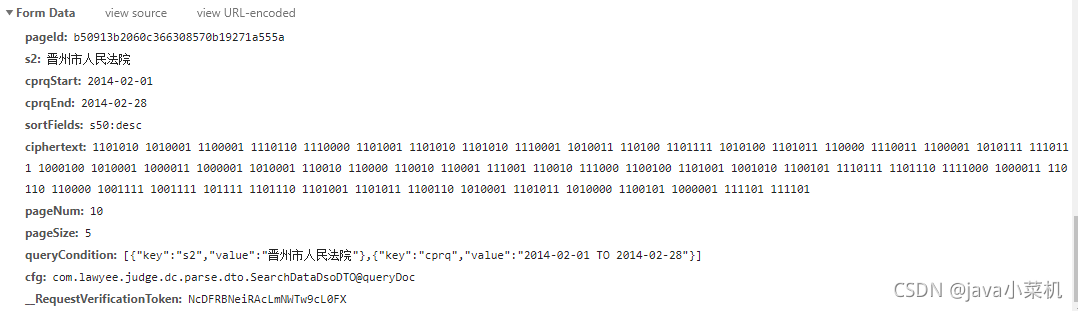

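There are three parameters: pageId, ciphertext, and __RequestVerificationToken.

I tried that too. Nobody explains how to handle the cookie parameters; everyone just says to log in and hard-code them. That never worked for me: I kept getting "无权限访问接口" (no permission to access the interface).

So I moved on and tried Web Scraper. No luck there either: the site times out badly, sometimes going a full minute without returning anything, and Web Scraper itself kept breaking. The biggest problem was that it could only fetch a single page, which made it useless, so I dropped it.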

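Now for the main event! Listen up, this is where I change tack.

Selenium: simulate a real user's actions in the browser and pull the data out with XPath. So far this has been working fairly smoothly.

The site has a 600-result limit: a query shows at most 600 results, and to get at anything beyond that you have to narrow it down with advanced search conditions.

The plan! Listen up.

1. See the court map on the home page?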

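Get the full list of courts. (What, you don't know how? Neither do I...) Some government site should list all the court names; I'm only offering the idea here, because I ran the advanced search against one specific court and then narrowed it down to a single month, which keeps each query under the 600 limit. (What if a month exceeds 600? Come on, what court uploads more than 600 documents in a month? The clerks would be worked to death. At least that's what I figure, chin-on-hand emoji.)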
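If some court does turn out to exceed 600 results in a month, one fallback would be to keep halving the date range until every piece fits. Here is a minimal sketch of that idea. It assumes a hypothetical `count_results(start, end)` callable that returns the number of hits the site reports for a date range; neither that callable nor `split_until_under_limit` is part of my script.

```python
from datetime import date, timedelta

LIMIT = 600  # the site shows at most 600 results per query


def split_until_under_limit(start, end, count_results, limit=LIMIT):
    """Return (start, end) date ranges whose result counts are all <= limit.

    count_results is a hypothetical callable: (start, end) -> int.
    A single day that still exceeds the limit is returned as-is,
    because it cannot be narrowed any further by date alone.
    """
    if start == end or count_results(start, end) <= limit:
        return [(start, end)]
    # Split the range into two halves and check each half separately.
    middle = start + (end - start) // 2
    left = split_until_under_limit(start, middle, count_results, limit)
    right = split_until_under_limit(
        middle + timedelta(days=1), end, count_results, limit
    )
    return left + right
```

In practice `count_results` would have to run a search and read the result counter, so every split costs another slow page load; that is why I would only fall back to this when a month actually hits the limit.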

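2. Next, the program drives the browser: it opens the site automatically and logs in. (After a successful login the site sometimes asks for a CAPTCHA; just type it in by hand.)

Before all this, I browsed around by hand and found essentially no data from before 2013. (What, some courts do have it? Thumbs up, feel free to go back a few more years.) (Why does this matter?) Because you need to fill a whole month into the advanced search, in the judgment date (裁判日期) field, and put the court you want in the court name field. (For more specific filters, fill them in yourself.)

After a successful login you land on the home page.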
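Since every search covers one whole month, the date pairs can be generated instead of typed out. Months differ in length, so the sketch below asks `calendar.monthrange` for the last day rather than assuming the 31st, which would give invalid dates such as April 31. The helper name `month_ranges` is made up for illustration, and the YYYY-MM-DD format is the one my script types into the date fields.

```python
import calendar


def month_ranges(first, last):
    """Yield (start, end) date strings for each month from first to last.

    first and last are (year, month) tuples, both inclusive.
    """
    year, month = first
    while (year, month) <= last:
        last_day = calendar.monthrange(year, month)[1]  # 28, 29, 30 or 31
        yield f"{year}-{month:02d}-01", f"{year}-{month:02d}-{last_day:02d}"
        # Step to the next month, rolling over into the next year after December.
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
```

For example, `list(month_ranges((2014, 3), (2014, 4)))` gives the pairs for March and April 2014, with April ending on the 30th.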

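Then just loop: open the advanced search, fill in the fields, click search (wait a few tens of seconds; it varies, but a minute at most), select all documents, click batch download, click next page (wait again, a minute at most), select all, batch download, next page, and so on until the last page has been downloaded. Then open the advanced search again with the next month and repeat the whole cycle. (I'm getting thirsty.)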
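Stripped of the browser details, the loop above is two nested loops: one over months and one over result pages. Here is a rough sketch of just that control flow. The three callables are hypothetical stand-ins for the browser actions, not functions from my script, so the structure can be read (and tested) without a browser.

```python
def crawl(date_ranges, search, download_current_page, go_to_next_page):
    """Run one search per date range and download every result page.

    All three callables are hypothetical stand-ins for browser actions:
      search(start, end)       -> True if the search returned any results
      download_current_page()  -> select all, then batch download
      go_to_next_page()        -> True if it moved on, False on the last page
    Returns the number of pages downloaded.
    """
    pages = 0
    for start, end in date_ranges:
        if not search(start, end):
            continue  # nothing for this month, move on to the next one
        while True:
            download_current_page()
            pages += 1
            if not go_to_next_page():
                break  # last page of this month is done
    return pages
```

Keeping the flow separate from the clicking also makes it easier to add things later, such as skipping months that were already downloaded.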

3. The code

You need Google Chrome and its driver (chromedriver).

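from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
import time

# Selenium 4 style: pass the driver path through a Service object
bro = webdriver.Chrome(service=Service('chromedriver.exe'))

# Open the site
bro.get('https://wenshu.court.gov.cn/')

Maximize the window. Why refresh afterwards? Because the page often fails to load completely. There are more refreshes further down; try it yourself and you'll see why.

# Maximize the window
bro.maximize_window()
time.sleep(2)
bro.refresh()

# Locate the login button
login_tag = bro.find_element(By.XPATH, '//*[@id="loginLi"]/a')

# Click it
time.sleep(2)
login_tag.click()
time.sleep(2)
bro.refresh()

# Switch to the login iframe
bro.switch_to.frame("contentIframe")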
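The fixed `time.sleep` calls are the crude part of this script: too short and the element isn't there yet, too long and you waste time on every page. A gentler option is to poll until the page is ready. Below is a minimal, browser-independent sketch; `wait_until` is a name I made up, and Selenium ships its own `WebDriverWait` (in `selenium.webdriver.support.ui`) built around the same idea.

```python
import time


def wait_until(condition, timeout=60.0, interval=1.0):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError after `timeout` seconds.
    Exceptions raised by condition() are treated as "not ready yet".
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            result = condition()
            if result:
                return result
        except Exception:
            pass  # e.g. the element is not in the page yet
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)
```

With the driver from this script it could be used along the lines of `wait_until(lambda: bro.find_element(By.ID, "AllSelect"), timeout=90)`, which returns as soon as the select-all checkbox exists instead of always sleeping for the full minute.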

I'll stop here; see the complete code below.

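4. Attention, please: the complete code is at the link below. Hehe, it only costs 5 C-coins (C币), so go grab it!

What, you don't see a link? Sigh, my company installed some security software that won't let me upload files! There go my tens of thousands in earnings!

So here it is: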

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
import calendar
import time

# Selenium 4 style: pass the driver path through a Service object
bro = webdriver.Chrome(service=Service('chromedriver.exe'))

# Open the site
bro.get('https://wenshu.court.gov.cn/')

# Maximize the window, then refresh because the page often loads incompletely
bro.maximize_window()
time.sleep(2)
bro.refresh()

# Locate and click the login button
login_tag = bro.find_element(By.XPATH, '//*[@id="loginLi"]/a')
time.sleep(2)
login_tag.click()
time.sleep(2)
bro.refresh()

# Switch to the login iframe
bro.switch_to.frame("contentIframe")

# Locate the phone number field, the password field and the login button
username_path = bro.find_element(By.XPATH, '//*[@class="phone-number-input"]')
password_path = bro.find_element(By.XPATH, '//*[@class="password"]')
login_in = bro.find_element(By.XPATH, '//*[@id="root"]/div/form/div/div[3]/span')
time.sleep(1)
username_path.send_keys("")  # your phone number
time.sleep(1)
password_path.send_keys("")  # your password

# Submit the form (assumed step: the button is located above; solve any CAPTCHA by hand)
login_in.click()
# Leave the iframe before working with the main page again (also an assumption)
bro.switch_to.default_content()

# First and last day of every month from 2014-03 through 2021-10;
# widen the bounds to cover other years
months = [(y, m) for y in range(2014, 2022) for m in range(1, 13)
          if (2014, 3) <= (y, m) <= (2021, 10)]
start_time = [f"{y}-{m:02d}-01" for y, m in months]
end_time = [f"{y}-{m:02d}-{calendar.monthrange(y, m)[1]:02d}" for y, m in months]

for index, item in enumerate(start_time):
    print(index, item)
    time.sleep(10)

    # Open the advanced search panel
    gaojisousuo = bro.find_element(By.XPATH, '//*[@class="advenced-search"]')
    gaojisousuo.click()

    # Court name
    fayuanVal = bro.find_element(By.XPATH, '//*[@id="s2"]')
    fayuanVal.clear()
    fayuanVal.send_keys("晋州市人民法院")

    # Judgment date: start and end of the month
    startTime = bro.find_element(By.XPATH, '//*[@id="cprqStart"]')
    startTime.clear()
    startTime.send_keys(item)
    endTime = bro.find_element(By.XPATH, '//*[@id="cprqEnd"]')
    endTime.clear()
    endTime.send_keys(end_time[index])

    # Run the search and give the slow site time to respond
    sousuo = bro.find_element(By.XPATH, '//*[@id="searchBtn"]')
    time.sleep(5)
    sousuo.click()
    time.sleep(60)

    # Check whether the search returned anything
    page_num_all = bro.find_element(By.XPATH, '//*[@id="_view_1545184311000"]/div[1]/div[2]/span')
    if page_num_all.text != '0':
        has_next = True
        page_num = 1
        while has_next:
            # Select all documents on the page and batch-download them
            all_select = bro.find_element(By.XPATH, '//*[@id="AllSelect"]')
            all_select.click()
            time.sleep(5)
            all_download = bro.find_element(By.XPATH, '//*[@id="_view_1545184311000"]/div[2]/div[4]/a[3]')
            all_download.click()
            time.sleep(5)

            # The last pager link is "next page"; it is disabled on the final page
            next_click = bro.find_element(By.XPATH, '//*[@id="_view_1545184311000"]/div[last()]/a[last()]')
            class_name = next_click.get_attribute('class')
            if class_name == 'disabled pageButton':
                has_next = False
            else:
                next_click.click()
                page_num += 1
                print(page_num)
                time.sleep(70)

The comments are a bit sparse, since I wrote this for fun. The approach is the one described above.

