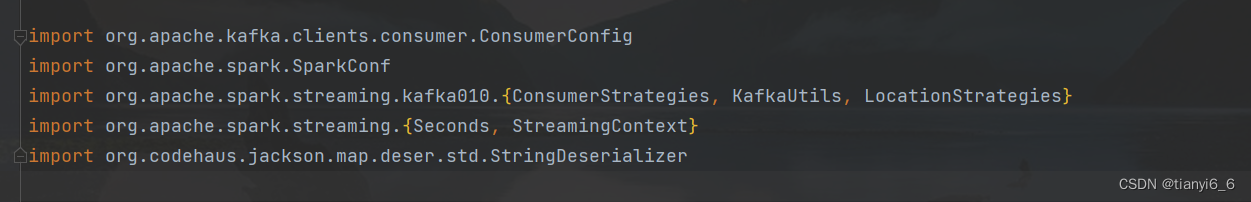

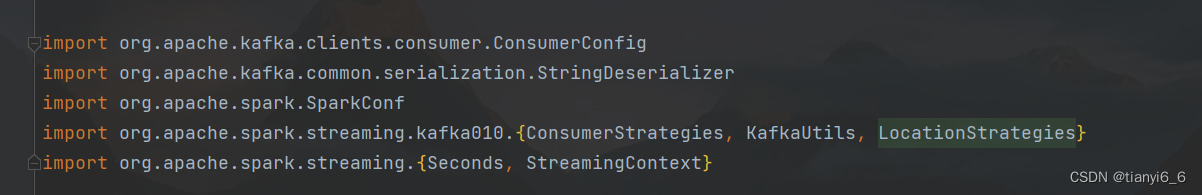

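Be careful when importing packages; it is easy to pick up a same-named class from the wrong package.

The imports before removing the wrong one:

The imports after the fix: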

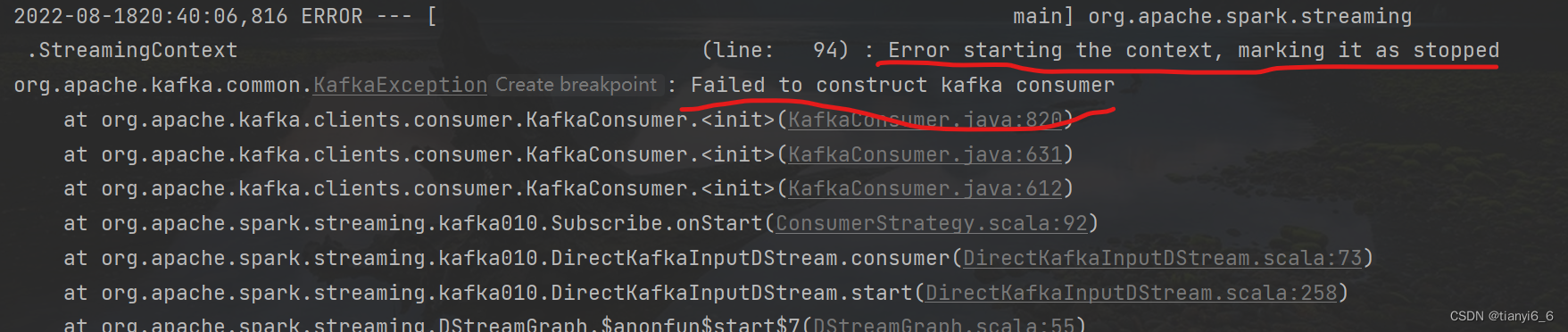

2022-08-18 20:40:06,816 ERROR --- [ main] org.apache.spark.streaming.StreamingContext (line: 94) : Error starting the context, marking it as stopped

org.apache.kafka.common.KafkaException: Failed to construct kafka consumer

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:820)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:631)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:612)

at org.apache.spark.streaming.kafka010.Subscribe.onStart(ConsumerStrategy.scala:92)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.consumer(DirectKafkaInputDStream.scala:73)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.start(DirectKafkaInputDStream.scala:258)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7(DStreamGraph.scala:55)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7$adapted(DStreamGraph.scala:55)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)

at scala.collection.parallel.ParIterableLike$Foreach.leaf(ParIterableLike.scala:974)

at scala.collection.parallel.Task.$anonfun$tryLeaf$1(Tasks.scala:53)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at scala.util.control.Breaks$$anon$1.catchBreak(Breaks.scala:67)

at scala.collection.parallel.Task.tryLeaf(Tasks.scala:56)

at scala.collection.parallel.Task.tryLeaf$(Tasks.scala:50)

at scala.collection.parallel.ParIterableLike$Foreach.tryLeaf(ParIterableLike.scala:971)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute(Tasks.scala:153)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute$(Tasks.scala:149)

at scala.collection.parallel.AdaptiveWorkStealingForkJoinTasks$WrappedTask.compute(Tasks.scala:440)

at java.util.concurrent.RecursiveAction.exec(RecursiveAction.java:189)

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1067)

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1703)

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:172)

at ... run in separate thread using org.apache.spark.util.ThreadUtils ... ()

at org.apache.spark.streaming.StreamingContext.liftedTree1$1(StreamingContext.scala:585)

at org.apache.spark.streaming.StreamingContext.start(StreamingContext.scala:577)

at com.tianyi.spark.SparkKafkaConsumer$.main(SparkKafkaConsumer.scala:35)

at com.tianyi.spark.SparkKafkaConsumer.main(SparkKafkaConsumer.scala)

Caused by: org.apache.kafka.common.KafkaException: class org.codehaus.jackson.map.deser.std.StringDeserializer is not an instance of org.apache.kafka.common.serialization.Deserializer

at org.apache.kafka.common.config.AbstractConfig.getConfiguredInstance(AbstractConfig.java:374)

at org.apache.kafka.common.config.AbstractConfig.getConfiguredInstance(AbstractConfig.java:392)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:713)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:631)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:612)

at org.apache.spark.streaming.kafka010.Subscribe.onStart(ConsumerStrategy.scala:92)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.consumer(DirectKafkaInputDStream.scala:73)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.start(DirectKafkaInputDStream.scala:258)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7(DStreamGraph.scala:55)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7$adapted(DStreamGraph.scala:55)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)

at scala.collection.parallel.ParIterableLike$Foreach.leaf(ParIterableLike.scala:974)

at scala.collection.parallel.Task.$anonfun$tryLeaf$1(Tasks.scala:53)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at scala.util.control.Breaks$$anon$1.catchBreak(Breaks.scala:67)

at scala.collection.parallel.Task.tryLeaf(Tasks.scala:56)

at scala.collection.parallel.Task.tryLeaf$(Tasks.scala:50)

at scala.collection.parallel.ParIterableLike$Foreach.tryLeaf(ParIterableLike.scala:971)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute(Tasks.scala:153)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute$(Tasks.scala:149)

at scala.collection.parallel.AdaptiveWorkStealingForkJoinTasks$WrappedTask.compute(Tasks.scala:440)

at java.util.concurrent.RecursiveAction.exec(RecursiveAction.java:189)

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1067)

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1703)

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:172)

Exception in thread "main" org.apache.kafka.common.KafkaException: Failed to construct kafka consumer

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:820)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:631)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:612)

at org.apache.spark.streaming.kafka010.Subscribe.onStart(ConsumerStrategy.scala:92)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.consumer(DirectKafkaInputDStream.scala:73)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.start(DirectKafkaInputDStream.scala:258)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7(DStreamGraph.scala:55)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7$adapted(DStreamGraph.scala:55)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)

at scala.collection.parallel.ParIterableLike$Foreach.leaf(ParIterableLike.scala:974)

at scala.collection.parallel.Task.$anonfun$tryLeaf$1(Tasks.scala:53)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at scala.util.control.Breaks$$anon$1.catchBreak(Breaks.scala:67)

at scala.collection.parallel.Task.tryLeaf(Tasks.scala:56)

at scala.collection.parallel.Task.tryLeaf$(Tasks.scala:50)

at scala.collection.parallel.ParIterableLike$Foreach.tryLeaf(ParIterableLike.scala:971)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute(Tasks.scala:153)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute$(Tasks.scala:149)

at scala.collection.parallel.AdaptiveWorkStealingForkJoinTasks$WrappedTask.compute(Tasks.scala:440)

at java.util.concurrent.RecursiveAction.exec(RecursiveAction.java:189)

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1067)

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1703)

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:172)

at ... run in separate thread using org.apache.spark.util.ThreadUtils ... ()

at org.apache.spark.streaming.StreamingContext.liftedTree1$1(StreamingContext.scala:585)

at org.apache.spark.streaming.StreamingContext.start(StreamingContext.scala:577)

at com.tianyi.spark.SparkKafkaConsumer$.main(SparkKafkaConsumer.scala:35)

at com.tianyi.spark.SparkKafkaConsumer.main(SparkKafkaConsumer.scala)

Caused by: org.apache.kafka.common.KafkaException: class org.codehaus.jackson.map.deser.std.StringDeserializer is not an instance of org.apache.kafka.common.serialization.Deserializer

at org.apache.kafka.common.config.AbstractConfig.getConfiguredInstance(AbstractConfig.java:374)

at org.apache.kafka.common.config.AbstractConfig.getConfiguredInstance(AbstractConfig.java:392)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:713)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:631)

at org.apache.kafka.clients.consumer.KafkaConsumer.<init>(KafkaConsumer.java:612)

at org.apache.spark.streaming.kafka010.Subscribe.onStart(ConsumerStrategy.scala:92)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.consumer(DirectKafkaInputDStream.scala:73)

at org.apache.spark.streaming.kafka010.DirectKafkaInputDStream.start(DirectKafkaInputDStream.scala:258)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7(DStreamGraph.scala:55)

at org.apache.spark.streaming.DStreamGraph.$anonfun$start$7$adapted(DStreamGraph.scala:55)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)

at scala.collection.parallel.ParIterableLike$Foreach.leaf(ParIterableLike.scala:974)

at scala.collection.parallel.Task.$anonfun$tryLeaf$1(Tasks.scala:53)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at scala.util.control.Breaks$$anon$1.catchBreak(Breaks.scala:67)

at scala.collection.parallel.Task.tryLeaf(Tasks.scala:56)

at scala.collection.parallel.Task.tryLeaf$(Tasks.scala:50)

at scala.collection.parallel.ParIterableLike$Foreach.tryLeaf(ParIterableLike.scala:971)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute(Tasks.scala:153)

at scala.collection.parallel.AdaptiveWorkStealingTasks$WrappedTask.compute$(Tasks.scala:149)

at scala.collection.parallel.AdaptiveWorkStealingForkJoinTasks$WrappedTask.compute(Tasks.scala:440)

at java.util.concurrent.RecursiveAction.exec(RecursiveAction.java:189)

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1067)

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1703)

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:172)

Process finished with exit code 1

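The `Caused by` line shows why: the `StringDeserializer` in use comes from `org.codehaus.jackson.map.deser.std`, not `org.apache.kafka.common.serialization`, so Kafka rejects it as a deserializer. I suggest deleting the imports and re-importing them, checking the package of each one.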
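For reference, here is a minimal sketch of what the corrected import and consumer parameters might look like. The object name `KafkaParamsSketch`, the broker address `localhost:9092` and the group id `example-group` are placeholders I made up for illustration; they are not taken from the original project. It assumes `kafka-clients` is on the classpath.

```scala
// The Kafka deserializer, NOT org.codehaus.jackson.map.deser.std.StringDeserializer
import org.apache.kafka.clients.consumer.ConsumerConfig
import org.apache.kafka.common.serialization.{Deserializer, StringDeserializer}

object KafkaParamsSketch {
  // Consumer parameters of the kind passed to a Kafka consumer strategy.
  // Broker address and group id are hypothetical placeholder values.
  val kafkaParams: Map[String, Object] = Map[String, Object](
    ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG -> "localhost:9092",
    ConsumerConfig.GROUP_ID_CONFIG -> "example-group",
    ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG -> classOf[StringDeserializer],
    ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG -> classOf[StringDeserializer]
  )

  def main(args: Array[String]): Unit = {
    // Fail fast if the imported class does not implement Kafka's Deserializer,
    // which is the condition the "is not an instance of" error complains about.
    val cls = classOf[StringDeserializer]
    require(
      classOf[Deserializer[_]].isAssignableFrom(cls),
      s"${cls.getName} does not implement org.apache.kafka.common.serialization.Deserializer"
    )
    println(s"Using deserializer: ${cls.getName}")
  }
}
```

With the Jackson class imported instead, this map would still compile, because any `Class` value fits `Object`; that is probably why the mistake only surfaces when the streaming context starts. The `require` check is just one way to catch it earlier.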
