Content: train on MNIST with TensorFlow and save the model, then restore the model and test it.

Environment: Win7 x64 / PyCharm / Python 3.5 / tensorflow-1.2.1 / dataset: MNIST

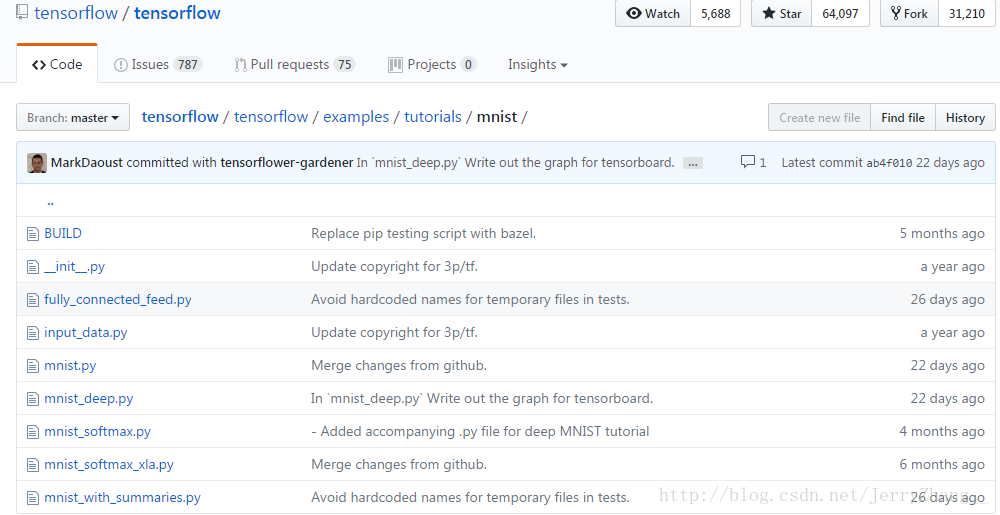

Original program: https://github.com/tensorflow/tensorflow/tree/master/tensorflow/examples/tutorials/mnist

1. Network definition

def deepnn(x):
    """deepnn builds the graph for a deep net for classifying digits.

    Args:
      x: an input tensor with the dimensions (N_examples, 784), where 784 is the
        number of pixels in a standard MNIST image.

    Returns:
      A tuple (y, keep_prob). y is a tensor of shape (N_examples, 10), with values
      equal to the logits of classifying the digit into one of 10 classes (the
      digits 0-9). keep_prob is a scalar placeholder for the probability of
      dropout.
    """
    # Reshape to use within a convolutional neural net.
    # Last dimension is for "features" - there is only one here, since images are
    # grayscale -- it would be 3 for an RGB image, 4 for RGBA, etc.
    with tf.name_scope('reshape'):
        x_image = tf.reshape(x, [-1, 28, 28, 1])  # [batch, height, width, channels]

    # First convolutional layer - maps one grayscale image to 32 feature maps.
    with tf.name_scope('conv1'):
        # Weight shape: [filter_height, filter_width, in_channels, out_channels]
        W_conv1 = weight_variable([5, 5, 1, 32])
        b_conv1 = bias_variable([32])
        h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

    # Pooling layer - downsamples by 2X.
    with tf.name_scope('pool1'):
        h_pool1 = max_pool_2x2(h_conv1)

    # Second convolutional layer -- maps 32 feature maps to 64.
    with t
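The shapes produced by the layers shown so far can be checked with plain arithmetic. The excerpt does not show the helpers `conv2d` and `max_pool_2x2`, so the sketch below assumes what the upstream tutorial uses: stride-1 convolution with `SAME` padding, and 2x2 max pooling with stride 2 and `SAME` padding. The functions `same_padding_out`, `trace_shapes` and `conv_param_count` are hypothetical names introduced only for this illustration.

```python
import math


def same_padding_out(size, stride):
    """Output length along one spatial axis under SAME padding."""
    return math.ceil(size / stride)


def trace_shapes(height=28, width=28):
    """Follow one image through reshape, conv1 and pool1 (batch axis omitted)."""
    shapes = [("x_image", (height, width, 1))]

    # conv1: 5x5 kernel, 32 output maps. Assumed stride 1 with SAME padding,
    # so the spatial size is unchanged.
    h, w = same_padding_out(height, 1), same_padding_out(width, 1)
    shapes.append(("h_conv1", (h, w, 32)))

    # pool1: assumed 2x2 window with stride 2, halving each spatial axis.
    h, w = same_padding_out(h, 2), same_padding_out(w, 2)
    shapes.append(("h_pool1", (h, w, 32)))
    return shapes


def conv_param_count(filter_h, filter_w, in_channels, out_channels):
    """Number of trainable values in one conv layer: weights plus biases."""
    return filter_h * filter_w * in_channels * out_channels + out_channels


if __name__ == "__main__":
    for name, shape in trace_shapes():
        print(name, shape)
    print("conv1 parameters:", conv_param_count(5, 5, 1, 32))
```

Under these assumptions, `h_pool1` has shape (14, 14, 32) per image, and `conv1` holds 5\*5\*1\*32 weights plus 32 biases.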

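This post shows how to train on the MNIST dataset with TensorFlow and describes how the model and its parameters are saved and restored. The network is defined with Python 3.5 and TensorFlow 1.2.1 on Windows, and the model files are saved after training. The restored model is then tested; because training ran for only a limited number of iterations, the test accuracy is low.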
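The post's own save and restore code is not part of this excerpt, so the following is only a minimal sketch of the save and restore cycle, using `tf.train.Saver` on a single variable instead of the MNIST network. The function `save_then_restore`, the variable name `w` and the checkpoint file name `model.ckpt` are hypothetical. The `tf1` alias is an assumption added so the same code also runs on newer TensorFlow releases, where the 1.x graph API lives under `tf.compat.v1`; on TensorFlow 1.2.1 it is simply `tf`.

```python
import os
import tempfile

import tensorflow as tf

# Assumption: use tf.compat.v1 when it exists (newer releases), else plain tf.
tf1 = getattr(getattr(tf, "compat", None), "v1", tf)


def save_then_restore(value, ckpt_dir):
    """Save one variable to a checkpoint, then read it back in a fresh graph."""
    ckpt_path = os.path.join(ckpt_dir, "model.ckpt")  # hypothetical file name

    # "Training" side: build a graph, initialize the variable, save it.
    with tf1.Graph().as_default():
        w = tf1.Variable(value, name="w")
        saver = tf1.train.Saver()
        with tf1.Session() as sess:
            sess.run(tf1.global_variables_initializer())
            saver.save(sess, ckpt_path)

    # "Testing" side: rebuild a graph with the same variable name and restore.
    # No initializer is run; restore() fills in the saved value.
    with tf1.Graph().as_default():
        w = tf1.Variable(0.0, name="w")
        saver = tf1.train.Saver()
        with tf1.Session() as sess:
            saver.restore(sess, ckpt_path)
            return float(sess.run(w))


if __name__ == "__main__":
    print(save_then_restore(3.5, tempfile.mkdtemp()))
```

Restoring by variable name means the graph rebuilt for testing has to define its variables under the same names as the graph that was saved, which for the network above would mean calling the same `deepnn` function before `restore`.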
