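- The brain uses the differences between the two eyes' viewpoints to perceive depth.

- Do not neglect monocular depth cues such as texture and lighting.

- The most comfortable depth range for a user wearing the VR headset is 0.75 to 3.5 meters (in Unity, 1 unit = 1 meter).

- Set the distance between the virtual cameras to the distance between the user's pupils, as reported by the OVR config tool.

- Make sure the images in each eye correspond and fuse properly; effects that appear in only one eye, or that differ too much between the eyes, look bad.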

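Basics

Binocular vision describes how we see two views of the world at once. The view from each eye is slightly different, and the brain combines the two into a single three-dimensional stereoscopic image, an experience known as stereopsis. The difference between what the left eye sees and what the right eye sees is called binocular disparity. Stereopsis occurs whether the eyes are viewing the physical world from their two viewpoints or viewing two flat images with appropriate differences between them.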

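The headset presents two images, one to each eye, generated by two virtual cameras separated by a short distance. Some terminology first: the distance between our two eyes is the interpupillary distance (IPD), and the distance between the two cameras that capture the virtual environment is the inter-camera distance (ICD). IPD varies from about 52 mm to 78 mm across people; the average (based on a survey of roughly 4,000 U.S. Army soldiers) is about 63.5 mm, which equals the headset's interaxial distance (IAD), the distance between the centers of its lenses (as of this revision of the guide).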
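
As a rough illustration of the terminology above, the two virtual cameras can be placed symmetrically about the head's center line, half the IPD to each side. This is a minimal sketch, not an SDK call: the function names are hypothetical, and the default is simply the 63.5 mm average quoted in the text.

```python
AVERAGE_IPD_M = 0.0635  # average IPD quoted in the text (63.5 mm), in meters


def stereo_camera_offsets(ipd_m: float = AVERAGE_IPD_M) -> tuple[float, float]:
    """Return (left_x, right_x): lateral camera offsets from the head center.

    Hypothetical helper: the cameras sit half the IPD to either side, so the
    resulting inter-camera distance (ICD) equals the IPD passed in.
    """
    if ipd_m <= 0:
        raise ValueError("IPD must be positive")
    half = ipd_m / 2.0
    return (-half, half)


def inter_camera_distance(offsets: tuple[float, float]) -> float:
    """ICD is the distance between the two camera positions."""
    left_x, right_x = offsets
    return right_x - left_x
```

In practice the IPD would come from the user's profile rather than from the average.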

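Monocular Depth Cues

Stereopsis is only one of the many depth cues the brain processes. Most other depth cues are monocular: they convey depth even when viewed with one eye, or when presented in a flat image viewed with both eyes. In VR, motion parallax from head movement does not require stereopsis to perceive, yet it is extremely important for conveying depth and for a comfortable experience.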

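Other important depth cues include curvilinear perspective (straight lines converge as they extend into the distance), relative scale (objects look smaller the farther away they are), occlusion (near objects block our view of more distant ones), aerial perspective (distant objects look fainter than near ones because of the refractive properties of the atmosphere), texture gradients (repeating patterns appear more densely packed as they recede), and lighting (highlights and shadows help us perceive the shape and position of objects). Current computer-generated content already uses many of these cues; we mention them because their importance is easy to overlook amid the novelty of stereoscopic 3D.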

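Comfortable Viewing Distances Inside the Headset

Two issues are central to understanding eye comfort when the eyes fixate on an object: accommodative demand and vergence demand. Accommodative demand is how much the eyes must adjust the shape of their lenses to bring a depth plane into focus (a process known as accommodation). Vergence demand is how far the eyes must rotate inward so that their lines of sight intersect at a particular depth plane. In the real world the two are so strongly correlated that we have the accommodation-convergence reflex: the degree of convergence of the eyes influences the accommodation of the lenses, and vice versa.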

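Like other stereoscopic 3D technologies (3D movies, for example), the headset decouples accommodative demand from vergence demand: accommodative demand is fixed, while vergence demand varies. The images that create the stereoscopic effect are always presented on a screen at a constant optical distance, yet the different images shown to each eye still require the eyes to rotate so that their lines of sight converge on objects at different depth planes.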

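Research has examined how far accommodative and vergence demands can differ before the viewer becomes uncomfortable. The current DK2 optics are equivalent to looking at a screen about 1.3 meters away. (Because of manufacturing tolerances and the power of the headset's lenses, this figure is only a rough approximation.) To prevent eyestrain, objects that you know the user will fixate on for an extended period (a menu, or an object of interest in the environment) should be rendered between roughly 0.75 and 3.5 meters away.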

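Obviously, a complete virtual environment requires rendering some objects outside this optimal range. As long as users do not have to fixate on those objects for long, this is of little concern. In Unity, 1 unit corresponds to approximately 1 meter in the real world, so objects of focus should be placed 0.75 to 3.5 units away.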
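
The placement rule for objects of focus can be sketched in Python. This is an illustration only: the function names are hypothetical, the 1.3 m screen distance is the approximate DK2 figure from the text, and expressing the accommodation-vergence mismatch in diopters (the reciprocal of distance in meters) is a common optometric convention rather than something this guide spells out.

```python
import math

COMFORT_NEAR_M = 0.75    # near limit of the comfortable range (from the text)
COMFORT_FAR_M = 3.5      # far limit of the comfortable range (from the text)
SCREEN_DISTANCE_M = 1.3  # approximate optical screen distance of the DK2


def is_comfortable(distance_m: float) -> bool:
    """True if an object of focus lies inside the recommended range."""
    return COMFORT_NEAR_M <= distance_m <= COMFORT_FAR_M


def clamp_to_comfort(distance_m: float) -> float:
    """Move a fixation target (e.g. a menu) to the nearest comfortable distance."""
    return min(max(distance_m, COMFORT_NEAR_M), COMFORT_FAR_M)


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.0635) -> float:
    """Angle between the two lines of sight when fixating straight ahead."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def mismatch_diopters(distance_m: float,
                      screen_m: float = SCREEN_DISTANCE_M) -> float:
    """Gap between vergence demand (object) and accommodative demand (screen).

    Assumption: both demands are expressed in diopters, i.e. 1 / meters.
    """
    return abs(1.0 / distance_m - 1.0 / screen_m)
```

Under these assumptions, both ends of the 0.75 to 3.5 m range sit roughly half a diopter from the 1.3 m screen plane (about 0.56 D at the near end and 0.48 D at the far end), and the mismatch is zero for an object rendered at the screen distance itself.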

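As research and development continue, future headsets will inevitably improve their optics and widen the range of comfortable viewing distances. However that range changes, 2.5 meters should remain comfortable, which makes it a safe, future-proof distance for fixed items the user must focus on for a long time, such as menus or GUIs.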

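Anecdotally, some headset users have remarked on how unusual it is to see every object in the world in focus while the lenses of their eyes are accommodated to the depth plane of the virtual screen. This can cause frustration or eyestrain in a minority of users, whose eyes may have difficulty focusing appropriately.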

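Some developers have found that depth-of-field effects can be both immersive and comfortable in situations where you know where the user is looking. For example, you might blur the background behind a menu the user brings up, or blur objects that lie outside the depth plane of an object held up for examination. This not only simulates how vision works in the real world, it also keeps salient objects outside the user's focus from distracting the eyes.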
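
One simple way to drive such a blur is to make its strength grow with how far an object lies from the focus plane, measured in diopters. The sketch below assumes that model; the function names and the tuning constants `px_per_diopter` and `max_radius_px` are hypothetical and would need adjusting for a real renderer.

```python
def defocus_diopters(object_distance_m: float, focus_distance_m: float) -> float:
    """Dioptric distance between an object and the plane the user is looking at."""
    if object_distance_m <= 0 or focus_distance_m <= 0:
        raise ValueError("distances must be positive")
    return abs(1.0 / object_distance_m - 1.0 / focus_distance_m)


def blur_radius_px(object_distance_m: float,
                   focus_distance_m: float,
                   px_per_diopter: float = 8.0,
                   max_radius_px: float = 12.0) -> float:
    """Blur radius that grows linearly with defocus and is capped.

    Assumed model: the radius is proportional to the dioptric defocus, so
    objects on the focus plane stay sharp and near objects blur faster than
    far ones for the same metric offset.
    """
    defocus = defocus_diopters(object_distance_m, focus_distance_m)
    return min(max_radius_px, px_per_diopter * defocus)
```

With a menu held at 2 m, for example, the menu itself gets a radius of zero, while the background behind it is blurred by an amount that levels off with distance instead of growing without bound.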

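Unfortunately, we cannot control a user who behaves in an unreasonable, abnormal, or unforeseeable way; someone in VR might stand with their eyes inches from an object and stare at it all day. We know this can lead to eyestrain, but drastic measures against this anomalous case, such as collision detection that keeps users from getting that close to objects, would only hurt the overall user experience. Your responsibility as a developer is to avoid requiring users to put themselves in circumstances known to be sub-optimal.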

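Effects of Inter-Camera Distance

Changing the inter-camera distance (ICD), the distance between the two rendering cameras, affects users in important ways. Increasing ICD produces hyperstereo, in which depth is exaggerated; decreasing it flattens depth, a state known as hypostereo. Changing ICD has two further effects. First, it changes how far the eyes must converge to look at a given object: as ICD increases, users must converge their eyes more to look at the same object, which can lead to eyestrain. Second, it can alter the user's sense of their own size inside the virtual environment. The latter is discussed further in Content Creation under User and Environment Scale.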

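Set the ICD to the user's actual IPD to achieve veridical scale and depth in the virtual environment. If you apply a scaling effect, apply it to the entire head model so that head movements accurately reflect the user's real-world perceptual experience, and apply it likewise to the distance-related guidelines.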

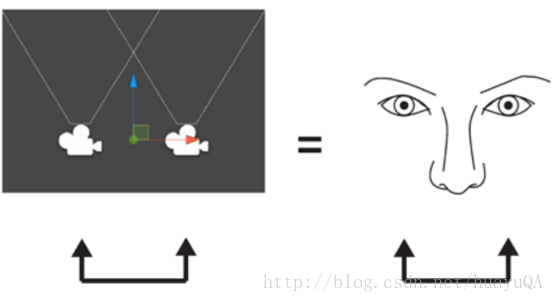

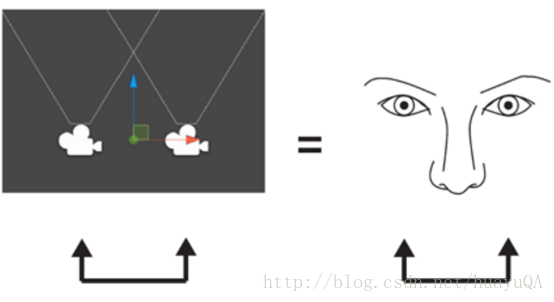

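The inter-camera distance (ICD) between the left and right scene cameras (left) must be proportional to the user's interpupillary distance (IPD; right). Any scaling factor applied to the ICD must also be applied to the entire head model and to the distance-related guidelines given throughout this guide.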
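
The relationships in this section can be sketched in Python. Everything here is illustrative: `HeadModel` and its field names are hypothetical, the neck-to-eye offsets in the usage note are placeholder values, and the convergence formula assumes an object straight ahead of the viewer.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HeadModel:
    """Hypothetical head model; field names are illustrative only."""
    icd_m: float              # distance between the two rendering cameras
    neck_to_eye_up_m: float   # vertical offset from the neck pivot to the eyes
    neck_to_eye_fwd_m: float  # forward offset from the neck pivot to the eyes


def scale_head_model(head: HeadModel, factor: float) -> HeadModel:
    """Apply one scale factor to every length in the head model, not just ICD."""
    if factor <= 0:
        raise ValueError("scale factor must be positive")
    return HeadModel(
        icd_m=head.icd_m * factor,
        neck_to_eye_up_m=head.neck_to_eye_up_m * factor,
        neck_to_eye_fwd_m=head.neck_to_eye_fwd_m * factor,
    )


def convergence_angle_deg(icd_m: float, distance_m: float) -> float:
    """Angle between the cameras' lines of sight to a point straight ahead."""
    return math.degrees(2.0 * math.atan((icd_m / 2.0) / distance_m))


def stereo_regime(icd_m: float, ipd_m: float, tolerance_m: float = 1e-6) -> str:
    """Classify the depth impression produced by a given ICD for a given IPD."""
    if icd_m > ipd_m + tolerance_m:
        return "hyperstereo"   # depth exaggerated
    if icd_m < ipd_m - tolerance_m:
        return "hypostereo"    # depth flattened
    return "veridical"
```

For instance, `scale_head_model(HeadModel(0.0635, 0.075, 0.0805), 2.0)` doubles all three lengths together, and `convergence_angle_deg` confirms that the doubled ICD requires more convergence for an object at the same distance.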

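Potential Issues with Fusing Two Images

In the real world we often face situations where each eye gets a very different viewpoint, and we generally have little trouble with it. Peeking around a corner with one eye works in VR just as it does in real life. In fact, the eyes' different viewpoints can be an advantage: say you are a special agent (in real life or in VR) trying to stay hidden in tall grass. Your eyes' different viewpoints let you look "through" the grass to monitor your surroundings as if the grass were not there. In a video game on a 2D screen, by contrast, the world behind each blade of grass is hidden from view.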

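Still, VR (like any other stereoscopic imagery) can give rise to unusual situations that annoy the user. For instance, rendering effects (such as light distortion, particle effects, or light bloom) should appear in both eyes with correct disparity. Otherwise the effect may appear to flicker or shimmer (when something appears in only one eye) or to float at the wrong depth (if disparity is off, or if a post-processing effect is not rendered at the contextual depth of the object it should affect, as with a specular shading pass). It is important that the images in the two eyes differ only by the slightly different viewing positions inherent to binocular disparity.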

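Although this is less likely to be a problem in a complex 3D environment, it can be important to make sure the user's eyes receive enough information for the brain to fuse and interpret the image properly. The lines and edges that make up a 3D scene are generally sufficient; be wary, however, of wide swaths of repeating patterns, which can cause people to fuse the two eyes' images differently than intended. Be aware too that optical illusions of depth (such as the "hollow mask illusion," in which concave surfaces appear convex) can sometimes lead to misperceptions, particularly where monocular depth cues are sparse.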

The original text follows.

- The brain uses differences between your eyes’ viewpoints to perceive depth.

- Don’t neglect monocular depth cues, such as texture and lighting.

- The most comfortable range of depths for a user to look at in the Rift is between 0.75 and 3.5 meters (1 unit in Unity = 1 meter).

- Set the distance between the virtual cameras to the distance between the user’s pupils from the OVR config tool.

- Make sure the images in each eye correspond and fuse properly; effects that appear in only one eye or differ significantly between the eyes look bad.

Basics

Binocular vision describes the way in which we see two views of the world simultaneously—the view from each eye is slightly different and our brain combines them into a single three-dimensional stereoscopic image, an experience known as stereopsis. The difference between what we see from our left eye and what we see from our right eye generates binocular disparity. Stereopsis occurs whether we are seeing our eye’s different viewpoints of the physical world, or two flat pictures with appropriate differences (disparity) between them.

The Oculus Rift presents two images, one to each eye, generated by two virtual cameras separated by a short distance. Defining some terminology is in order. The distance between our two eyes is called the interpupillary distance (IPD), and we refer to the distance between the two rendering cameras that capture the virtual environment as the inter-camera distance (ICD). Although the IPD can vary from about 52mm to 78mm, average IPD (based on data from a survey of approximately 4000 U.S. Army soldiers) is about 63.5 mm—the same as the Rift’s interaxial distance (IAD), the distance between the centers of the Rift’s lenses (as of this revision of this guide).

Monocular depth cues

Stereopsis is just one of many depth cues our brains process. Most of the other depth cues are monocular; that is, they convey depth even when they are viewed by only one eye or appear in a flat image viewed by both eyes. For VR, motion parallax due to head movement does not require stereopsis to see, but is extremely important for conveying depth and providing a comfortable experience to the user.

Other important depth cues include: curvilinear perspective (straight lines converge as they extend into the distance), relative scale (objects get smaller when they are farther away), occlusion (closer objects block our view of more distant objects), aerial perspective (distant objects appear fainter than close objects due to the refractive properties of the atmosphere), texture gradients (repeating patterns get more densely packed as they recede) and lighting (highlights and shadows help us perceive the shape and position of objects). Current-generation computer-generated content already leverages a lot of these depth cues, but we mention them because it can be easy to neglect their importance in light of the novelty of stereoscopic 3D.

Comfortable Viewing Distances Inside the Rift

Two issues are of primary importance to understanding eye comfort when the eyes are fixating on (i.e., looking at) an object: accommodative demand and vergence demand. Accommodative demand refers to how your eyes have to adjust the shape of their lenses to bring a depth plane into focus (a process known as accommodation). Vergence demand refers to the degree to which the eyes have to rotate inwards so their lines of sight intersect at a particular depth plane. In the real world, these two are strongly correlated with one another; so much so that we have what is known as the accommodation-convergence reflex: the degree of convergence of your eyes influences the accommodation of your lenses, and vice-versa.

The Rift, like any other stereoscopic 3D technology (e.g., 3D movies), creates an unusual situation that decouples accommodative and vergence demands—accommodative demand is fixed, but vergence demand can change. This is because the actual images for creating stereoscopic 3D are always presented on a screen that remains at the same distance optically, but the different images presented to each eye still require the eyes to rotate so their lines of sight converge on objects at a variety of different depth planes.

Research has looked into the degree to which the accommodative and vergence demands can differ from each other before the situation becomes uncomfortable to the viewer.[1] The current optics of the DK2 Rift are equivalent to looking at a screen approximately 1.3 meters away. (Manufacturing tolerances and the power of the Rift’s lenses mean this number is only a rough approximation.) In order to prevent eyestrain, objects that you know the user will be fixating their eyes on for an extended period of time (e.g., a menu, an object of interest in the environment) should be rendered between approximately 0.75 and 3.5 meters away.

Obviously, a complete virtual environment requires rendering some objects outside this optimally comfortable range. As long as users are not required to fixate on those objects for extended periods, they are of little concern. When programming in Unity, 1 unit will correspond to approximately 1 meter in the real world, so objects of focus should be placed 0.75 to 3.5 distance units away.

As part of our ongoing research and development, future incarnations of the Rift will inevitably improve their optics to widen the range of comfortable viewing distances. No matter how this range changes, however, 2.5 meters should be a comfortable distance, making it a safe, future-proof distance for fixed items on which users will have to focus for an extended time, like menus or GUIs.

Anecdotally, some Rift users have remarked on the unusualness of seeing all objects in the world in focus when the lenses of their eyes are accommodated to the depth plane of the virtual screen. This can potentially lead to frustration or eye strain in a minority of users, as their eyes may have difficulty focusing appropriately.

Some developers have found that depth-of-field effects can be both immersive and comfortable for situations in which you know where the user is looking. For example, you might artificially blur the background behind a menu the user brings up, or blur objects that fall outside the depth plane of an object being held up for examination. This not only simulates the natural functioning of your vision in the real world, it can prevent distracting the eyes with salient objects outside the user’s focus.

Unfortunately, we have no control over a user who chooses to behave in an unreasonable, abnormal, or unforeseeable manner; someone in VR might choose to stand with their eyes inches away from an object and stare at it all day. Although we know this can lead to eye strain, drastic measures to prevent this anomalous case, such as setting collision detection to prevent users from walking that close to objects, would only hurt overall user experience. Your responsibility as a developer, however, is to avoid requiring the user to put themselves into circumstances we know are sub-optimal.

Effects of Inter-Camera Distance

Changing inter-camera distance, the distance between the two rendering cameras, can impact users in important ways. If the inter-camera distance is increased, it creates an experience known as hyperstereo in which depth is exaggerated; if it is decreased, depth will flatten, a state known as hypostereo. Changing inter-camera distance has two further effects on the user: First, it changes the degree to which the eyes must converge to look at a given object. As you increase inter-camera distance, users have to converge their eyes more to look at the same object, and that can lead to eyestrain. Second, it can alter the user’s sense of their own size inside the virtual environment. The latter is discussed further in Content Creation under User and Environment Scale.

Set the inter-camera distance to the user’s actual IPD to achieve veridical scale and depth in the virtual environment. If applying a scaling effect, make sure it is applied to the entire head model to accurately reflect the user’s real-world perceptual experience during head movements, as well as any of our guidelines related to distance.

The inter-camera distance (ICD) between the left and right scene cameras (left) must be proportional to the user’s inter-pupillary distance (IPD; right). Any scaling factor applied to ICD must be applied to the entire head model and distance-related guidelines provided throughout this guide.

Potential Issues with Fusing Two Images

We often face situations in the real world where each eye gets a very different viewpoint, and we generally have little problem with it. Peeking around a corner with one eye works in VR just as well as it does in real life. In fact, the eyes’ different viewpoints can be beneficial: say you’re a special agent (in real life or VR) trying to stay hidden in some tall grass. Your eyes’ different viewpoints allow you to look “through” the grass to monitor your surroundings as if the grass weren’t even there in front of you. Doing the same in a video game on a 2D screen, however, leaves the world behind each blade of grass obscured from view.

Still, VR (like any other stereoscopic imagery) can give rise to some potentially unusual situations that can be annoying to the user. For instance, rendering effects (such as light distortion, particle effects, or light bloom) should appear in both eyes and with correct disparity. Failing to do so can give the effects the appearance of flickering/shimmering (when something appears only in one eye) or floating at the wrong depth (if disparity is off, or if the post-processing effect is not rendered to the contextual depth of the object it should be affecting; for example, a specular shading pass). It is important to ensure that the images between the two eyes do not differ aside from the slightly different viewing positions inherent to binocular disparity.

Although less likely to be a problem in a complex 3D environment, it can be important to ensure the user’s eyes receive enough information for the brain to know how to fuse and interpret the image properly. The lines and edges that make up a 3D scene are generally sufficient; however, be wary of wide swaths of repeating patterns, which could cause people to fuse the eyes’ images differently than intended. Be aware also that optical illusions of depth (such as the “hollow mask illusion,” where concave surfaces appear convex) can sometimes lead to misperceptions, particularly in situations where monocular depth cues are sparse.
