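Recently I dealt with a node whose datanode service had not been started for a long time. After starting it, messages like the following kept appearing in the log: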

2015-09-10 14:22:28,474 INFO datanode.DataNode (DataXceiver.java:writeBlock(598)) - Receiving BP-219392391-192.168.20.101-1404293177278:blk_1121179008_48430870 src: /192.168.20.106:44987 dest: /192.168.20.51:50010

2015-09-10 14:22:29,848 INFO datanode.DataNode (DataXceiver.java:writeBlock(598)) - Receiving BP-219392391-192.168.20.101-1404293177278:blk_1121179009_48430871 src: /192.168.20.33:51146 dest: /192.168.20.51:50010

2015-09-10 14:22:36,264 INFO DataNode.clienttrace (BlockReceiver.java:finalizeBlock(1215)) - src: /192.168.20.33:51146, dest: /192.168.20.51:50010, bytes: 268435456, op: HDFS_WRITE, cliID: DFSClient_NONMAPREDUCE_1092031102_1, offset: 0, srvID: 18c8d025-9722-42aa-9762-2b5dcc70a8c6, blockid: BP-219392391-192.168.20.101-1404293177278:blk_1121179009_48430871, duration: 6408669315

2015-09-10 14:22:36,264 INFO datanode.DataNode (BlockReceiver.java:run(1196)) - PacketResponder: BP-219392391-192.168.20.101-1404293177278:blk_1121179009_48430871, type=HAS_DOWNSTREAM_IN_PIPELINE terminating

2015-09-10 14:22:40,188 INFO DataNode.clienttrace (BlockReceiver.java:finalizeBlock(1215)) - src: /192.168.20.106:44987, dest: /192.168.20.51:50010, bytes: 268435456, op: HDFS_WRITE, cliID: DFSClient_NONMAPREDUCE_-1502082792_1, offset: 0, srvID: 18c8d025-9722-42aa-9762-2b5dcc70a8c6, blockid: BP-219392391-192.168.20.101-1404293177278:blk_1121179008_48430870, duration: 11664448051

2015-09-10 14:22:40,188 INFO datanode.DataNode (BlockReceiver.java:run(1196)) - PacketResponder: BP-219392391-192.168.20.101-1404293177278:blk_1121179008_48430870, type=HAS_DOWNSTREAM_IN_PIPELINE terminating

2015-09-10 14:23:02,883 INFO datanode.DataNode (DataXceiver.java:writeBlock(598)) - Receiving BP-219392391-192.168.20.101-1404293177278:blk_1121179028_48430890 src: /192.168.20.49:37440 dest: /192.168.20.51:50010

2015-09-10 14:23:16,756 INFO DataNode.clienttrace (BlockReceiver.java:finalizeBlock(1215)) - src: /192.168.20.49:37440, dest: /192.168.20.51:50010, bytes: 268435456, op: HDFS_WRITE, cliID: DFSClient_NONMAPREDUCE_-14894317_1, offset: 0, srvID: 18c8d025-9722-42aa-9762-2b5dcc70a8c6, blockid: BP-219392391-192.168.20.101-1404293177278:blk_1121179028_48430890, duration: 13835429062

2015-09-10 14:23:16,756 INFO datanode.DataNode (BlockReceiver.java:run(1196)) - PacketResponder: BP-219392391-192.168.20.101-1404293177278:blk_1121179028_48430890, type=HAS_DOWNSTREAM_IN_PIPELINE terminating
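To get a feel for how fast blocks are arriving, the clienttrace lines can be summarised with a little awk. This is only a rough sketch: the function name `clienttrace_throughput` is made up for illustration, and it assumes `duration` is in nanoseconds, which fits the timestamps above (the block that started at 14:22:29 and finished at 14:22:36 reports a duration of about 6.4e9).

```shell
#!/bin/sh
# Hypothetical helper: reads datanode log lines on stdin and prints, for each
# HDFS_WRITE clienttrace entry, the block id, size, elapsed time and rate.
# Assumption: "duration" is in nanoseconds.
clienttrace_throughput() {
  awk '
    /clienttrace/ && /op: HDFS_WRITE/ {
      bytes = ""; dur = ""; blk = ""
      # Each "key:" token is followed by its value in the next field.
      for (i = 1; i < NF; i++) {
        if ($i == "bytes:")    { bytes = $(i + 1); sub(/,$/, "", bytes) }
        if ($i == "blockid:")  { blk = $(i + 1); sub(/,$/, "", blk) }
        if ($i == "duration:") { dur = $(i + 1) }
      }
      if (bytes != "" && dur > 0) {
        mib  = bytes / 1048576
        secs = dur / 1e9
        printf "%s %.1f MiB %.2f s %.1f MiB/s\n", blk, mib, secs, mib / secs
      }
    }
  '
}

# Usage (DN_LOG is a placeholder for the path to the datanode log):
#   clienttrace_throughput < "$DN_LOG"
```

For the three blocks shown above, each 256 MiB, this works out to somewhere between roughly 18 and 40 MiB/s per block.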

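My guess is that block information is being updated: a lot of files have changed, so their blocks are being updated too. jps shows one process with only a PID and no name, which I suspect is the datanode.

Looking at that process more closely, it does seem to be updating.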

# jps

14452

18708 Jps

18142 NodeManager

# ps -ef|grep 14452

hdfs 14452 22731 12 14:00 ? 00:02:56 jsvc.exec -Dproc_datanode -outfile /var/log/hadoop/hdfs/jsvc.out -errfile /var/log/hadoop/hdfs/jsvc.err -pidfile /var/run/hadoop/hdfs/hadoop_secure_dn.pid -nodetach -user hdfs -cp /etc/hadoop/conf:/usr/lib/hadoop/lib/*:/usr/lib/hadoop/.//*:/usr/lib/hadoop-hdfs/./:/usr/lib/hadoop-hdfs/lib/*:/usr/lib/hadoop-hdfs/.//*:/usr/lib/hadoop-yarn/lib/*:/usr/lib/hadoop-yarn/.//*:/usr/lib/hadoop-mapreduce/lib/*:/usr/lib/hadoop-mapreduce/.//*::/usr/lib/hadoop-mapreduce/*:/usr/lib/hadoop-mapreduce/*:/usr/lib/hadoop-mapreduce/* -Xmx1024m -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop/root -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/usr/lib/hadoop -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=:/usr/lib/hadoop/lib/native/Linux-amd64-64:/usr/lib/hadoop/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop/root -Dhadoop.log.file=hadoop-hdfs-datanode-dn51.20.bjlt.log -Dhadoop.home.dir=/usr/lib/hadoop -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,RFA -Djava.library.path=:/usr/lib/hadoop/lib/native/Linux-amd64-64:/usr/lib/hadoop/lib/native:/usr/lib/hadoop/lib/native/Linux-amd64-64:/usr/lib/hadoop/lib/native/Linux-amd64-64:/usr/lib/hadoop/lib/native -Dhadoop.policy.file=hadoop-policy.xml-Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop/hdfs -Dhadoop.id.str=hdfs -jvm server -Xmx1024m -Dhadoop.security.logger=ERROR,DRFAS - Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.datanode.SecureDataNodeStarter
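Since jps gave no name for this process, one way to skip the guesswork is to look for the `-Dproc_datanode` marker that shows up in the ps output above. A minimal sketch, with a made-up helper name `find_datanode`; it assumes the datanode command line carries that marker as it does on this node.

```shell
#!/bin/sh
# Hypothetical helper: reads "PID USER ARGS..." lines on stdin and prints the
# PID and user of any process whose arguments include -Dproc_datanode.
find_datanode() {
  awk '{
    for (i = 3; i <= NF; i++) {
      if ($i == "-Dproc_datanode") { print $1, $2; break }
    }
  }'
}

# Usage (separate -o options keep the header suppressed portably):
#   ps -e -o pid= -o user= -o args= | find_datanode
```

On this node that should print the same PID that jps listed without a name.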

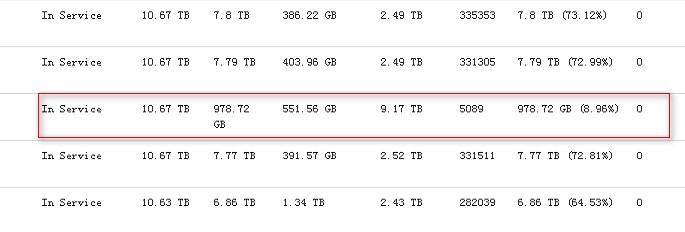

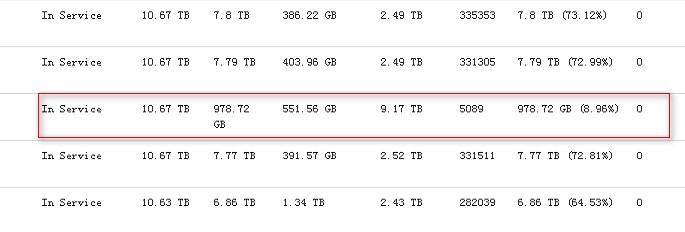

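According to the namenode, every other node holds more than 330,000 blocks, while this one has only a little over 5,000, and the number keeps growing. I will keep an eye on it for the next couple of days to see whether things go back to normal once it reaches 330,000 or so, and then come back to update this post.

Follow-up:

A few days later the sync still had not completed, so I had to take the node offline, reinstall it, and bring it back online.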
