I. Overview

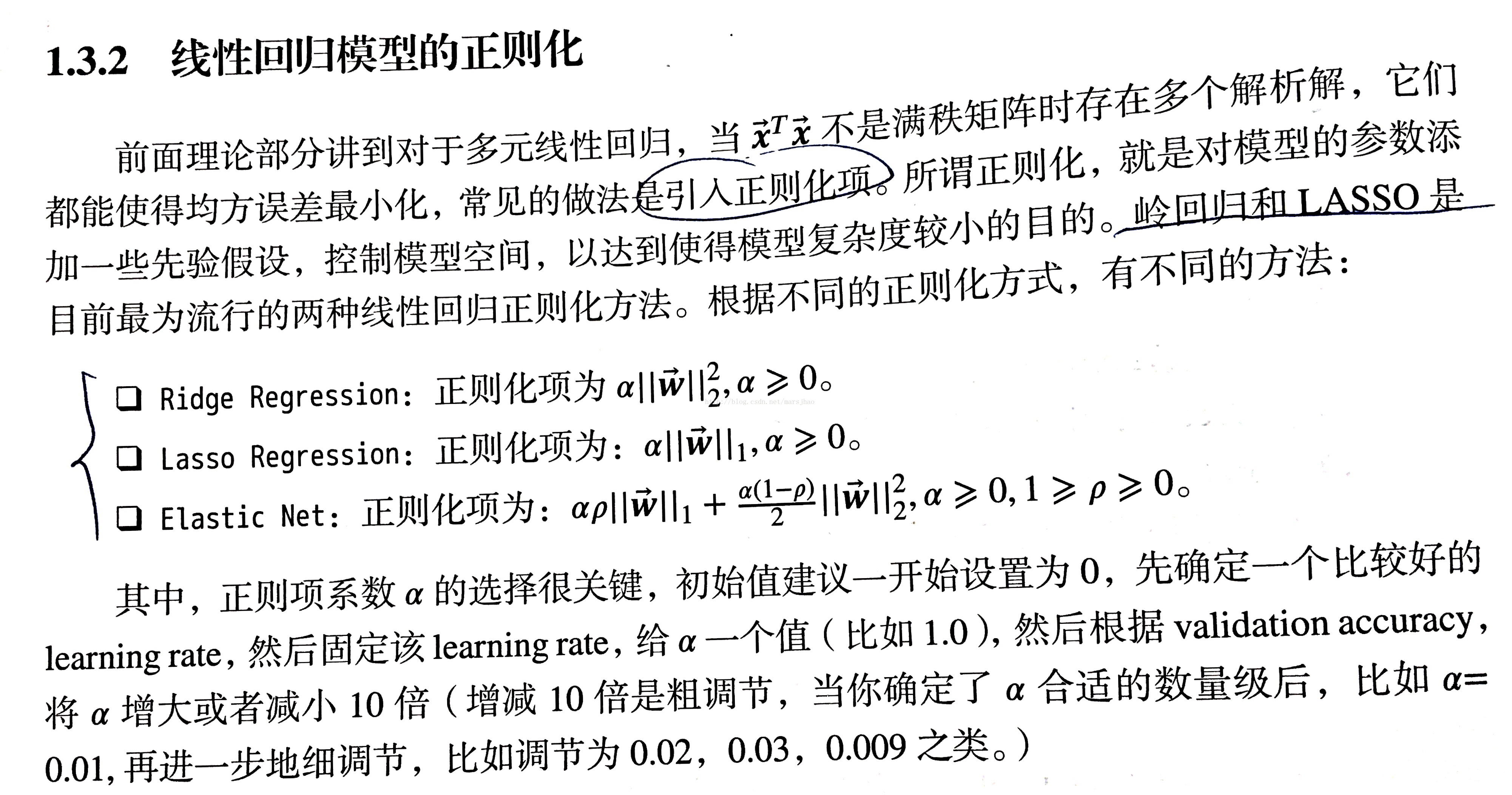

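The "linear" in a linear model refers to a linear combination of first-order features: a straight line in two-dimensional space, a plane in three-dimensional space, and a hyperplane in n-dimensional space. Common models built on this linear form include ridge regression, Lasso regression, Elastic Net, logistic regression, and linear discriminant analysis.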

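II. Algorithm Notes

1. Ordinary Linear Regression

Linear regression is a regression analysis technique, and regression analysis is essentially a function estimation problem.

Given a dataset, we define a model and its loss function, and the goal is to minimize that loss. The parameters w and b that minimize the loss can be found with gradient descent. Note that when gradient descent is used, the features should be scaled first (feature scaling). Feature scaling has two benefits: it speeds up convergence, and it can improve accuracy, because it keeps features with large numeric ranges from dominating the result.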
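The procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the author's code: the helper names `standardize` and `fit_linear_gd`, the learning rate, the iteration count, and the synthetic data are all assumptions chosen for the example.

```python
import numpy as np

def standardize(X):
    """Scale each feature to zero mean and unit variance."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    return (X - mean) / std, mean, std

def fit_linear_gd(X, y, lr=0.1, n_iters=1000):
    """Minimize the mean squared error over w and b with batch gradient descent."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_iters):
        residual = X @ w + b - y                    # prediction error per sample
        grad_w = 2.0 * (X.T @ residual) / n_samples
        grad_b = 2.0 * residual.mean()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Synthetic data (assumed for illustration): two features on very different scales
rng = np.random.default_rng(0)
X = np.column_stack([rng.uniform(0, 1, 200), rng.uniform(0, 1000, 200)])
y = 3.0 * X[:, 0] + 0.02 * X[:, 1] + 5.0 + rng.normal(0, 0.1, 200)

X_scaled, mean, std = standardize(X)
w, b = fit_linear_gd(X_scaled, y)

# Map the learned parameters back to the original feature scale
w_orig = w / std
b_orig = b - np.sum(w * mean / std)
print(w_orig, b_orig)
```

Without the scaling step, the second feature's range of roughly 0 to 1000 would force a much smaller learning rate, which is the convergence problem that feature scaling avoids.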

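2. Generalized Linear Models

Let $h(y) = w^T x + b$, where $h$ is a monotonic, differentiable link function; this gives the generalized linear model. For example, $\ln y = w^T x + b$ fits $y$ with $\exp(w^T x + b)$. The model is still linear in the parameters $w$ and $b$, but the mapping from input to output is nonlinear.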
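As a small worked example of the log-linear case, the sketch below fits $\ln y$ with ordinary least squares and then inverts the link with `exp`. The data-generating values (slope 1.5, intercept 0.5, noise level) are assumptions made up for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(0, 2, 200)
# Assumed ground truth: ln y = 1.5 * x + 0.5, plus small noise in log space
y = np.exp(1.5 * x + 0.5 + rng.normal(0, 0.05, 200))

# Ordinary least squares on the transformed target ln y
A = np.column_stack([x, np.ones_like(x)])       # augmented design matrix [x, 1]
(w, b), *_ = np.linalg.lstsq(A, np.log(y), rcond=None)

# Predictions on the original scale: y_hat = exp(w * x + b)
y_pred = np.exp(w * x + b)
print(w, b)
```

The fit itself is plain linear regression; only the target is transformed, which is what makes the overall input-to-output relationship nonlinear.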

3. Logistic Regression

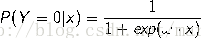

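The logistic regression model is:

$$P(Y=1 \mid x) = \frac{\exp(w^T x)}{1 + \exp(w^T x)}, \qquad P(Y=0 \mid x) = \frac{1}{1 + \exp(w^T x)}$$

Here w and x may be in augmented form, that is, the bias b is appended to w and a constant 1 is appended to x, so that $w^T x$ already includes the bias term.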

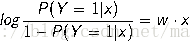

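The odds of an event are the ratio of the probability that the event occurs to the probability that it does not occur. If the event occurs with probability p, its odds are p/(1-p), and its log odds, or logit function, is:

$$\operatorname{logit}(p) = \log \frac{p}{1 - p}$$

For logistic regression, this gives

$$\log \frac{P(Y=1 \mid x)}{1 - P(Y=1 \mid x)} = w^T x$$

That is, in the logistic regression model the log odds of the output Y=1 is a linear function of the input x.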
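The linearity of the log odds is easy to check numerically. In the sketch below the weights, bias, and inputs are hypothetical values picked only for the demonstration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps a real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

w = np.array([0.8, -1.2])    # hypothetical weights
b = 0.3                      # hypothetical bias
x = np.array([[1.0, 2.0],
              [0.5, -1.0],
              [3.0, 0.0]])

z = x @ w + b                        # linear function of the input
p = sigmoid(z)                       # P(Y=1 | x)
log_odds = np.log(p / (1.0 - p))     # logit of the predicted probability

# The log odds recover the linear score w^T x + b
print(np.allclose(log_odds, z))
```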

For a given training dataset

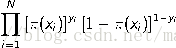

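writing $\pi(x_i) = P(Y=1 \mid x_i)$, with labels $y_i \in \{0, 1\}$ and N training samples, the likelihood function is

$$\prod_{i=1}^{N} [\pi(x_i)]^{y_i} \, [1 - \pi(x_i)]^{1 - y_i}$$

The log-likelihood function is

$$L(w) = \sum_{i=1}^{N} \left[ y_i \log \pi(x_i) + (1 - y_i) \log\bigl(1 - \pi(x_i)\bigr) \right] = \sum_{i=1}^{N} \left[ y_i \, (w^T x_i) - \log\bigl(1 + \exp(w^T x_i)\bigr) \right]$$

Maximizing L(w) yields the estimate of w, which is then substituted into the logistic regression model.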
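One simple way to carry out this maximization is gradient ascent on the log-likelihood, whose gradient is $\sum_i (y_i - \pi(x_i))\, x_i$. The sketch below is an illustration under assumptions: the function names, step size, iteration count, and the two-cluster synthetic data are not from the original post, and practical solvers (such as those in sklearn) use more sophisticated optimizers.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_likelihood(w, X_aug, y):
    """L(w) = sum_i [ y_i * (w^T x_i) - log(1 + exp(w^T x_i)) ]."""
    z = X_aug @ w
    return np.sum(y * z - np.log1p(np.exp(z)))

def fit_logistic(X, y, lr=0.1, n_iters=2000):
    """Maximize the log-likelihood with batch gradient ascent."""
    # Augmented form: append a constant 1 so the bias is part of w
    X_aug = np.column_stack([X, np.ones(len(X))])
    w = np.zeros(X_aug.shape[1])
    for _ in range(n_iters):
        grad = X_aug.T @ (y - sigmoid(X_aug @ w))   # gradient of L(w)
        w += lr * grad / len(y)                     # ascent step, averaged over samples
    return w, X_aug

# Synthetic two-class data (assumed for illustration)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1, 1, (100, 2)), rng.normal(1, 1, (100, 2))])
y = np.concatenate([np.zeros(100), np.ones(100)])

w, X_aug = fit_logistic(X, y)
accuracy = np.mean((sigmoid(X_aug @ w) > 0.5) == y)
print(w, log_likelihood(w, X_aug, y), accuracy)
```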

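4. Linear Discriminant Analysis

The idea behind linear discriminant analysis (LDA) is as follows.

During training, project the training samples onto a line so that the projections of samples from the same class are as close together as possible and the projections of samples from different classes are as far apart as possible. The line itself is what has to be learned.

During prediction, project the new sample onto the learned line and decide its class from the position of its projection.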
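For two classes, the training and prediction steps above can be sketched with Fisher's closed-form direction, $w = S_w^{-1}(\mu_1 - \mu_0)$, where $S_w$ is the within-class scatter matrix. The function names, the synthetic data, and the choice of the midpoint between the projected class means as the decision threshold (which presumes equal class priors) are assumptions made for this illustration.

```python
import numpy as np

def fit_lda_direction(X0, X1):
    """Learn the projection direction for two-class LDA."""
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: sum of the scatter of each class around its own mean
    Sw = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
    w = np.linalg.solve(Sw, mu1 - mu0)
    # Assumed decision rule: midpoint between the projected class means
    threshold = 0.5 * (w @ mu0 + w @ mu1)
    return w, threshold

def predict_lda(X, w, threshold):
    """Project samples onto w and classify by which side of the threshold they fall."""
    return (X @ w > threshold).astype(int)

# Synthetic two-class data (assumed for illustration)
rng = np.random.default_rng(0)
X0 = rng.normal(loc=[0.0, 0.0], scale=1.0, size=(100, 2))
X1 = rng.normal(loc=[3.0, 3.0], scale=1.0, size=(100, 2))

w, threshold = fit_lda_direction(X0, X1)
X = np.vstack([X0, X1])
y = np.concatenate([np.zeros(100, dtype=int), np.ones(100, dtype=int)])
accuracy = np.mean(predict_lda(X, w, threshold) == y)
print(w, threshold, accuracy)
```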

III. Sklearn Implementation and Experimental Results

import matplotlib.pyplot as plt
import numpy as np
from sklearn import datasets, linear_model
from sklearn.model_selection import train_test_split

# Load the diabetes dataset bundled with sklearn
def load_data():
    diabetes = datasets.load_diabetes()
    # Hold out 25% of the samples as the test set
    return train_test_split(diabetes.data, diabetes.target,
                            test_size=0.25, random_state=0)

# Linear regression model
def test_LinearRegression(*data):
    X_train, X_test, y_train, y_test = data
    regr = linear_model.LinearRegression()
    regr.fit(X_train, y_train)
    print('Linear regression model')
    # Weights and bias of the fitted model
    print('Coefficients:%s, intercept %.2f' % (regr.coef_, regr.intercept_))
    # Mean squared error of the predictions on the test set
    print('Mean squared error: %.2f' % np.mean((regr.predict(X_test) - y_test) ** 2))
    # R^2 score: at most 1.0, higher is better; it can be negative when the predictions are very poor
    print('Score: %.2f' % regr.score(X_test, y_test))

X_train, X_test, y_train, y_test = load_data()
test_LinearRegression(X_train, X_test, y_train, y_test)

# Ridge regression
def test_Ridge(*data):
    X_train, X_test, y_train, y_test = data
    regr = linear_model.Ridge()
    regr.fit(X_train, y_train)
    print('Ridge regression')
    print('Coefficients:%s, intercept %.2f' % (regr.coef_, regr.intercept_))
    print('Mean squared error: %.2f' % np.mean((regr.predict(X_test) - y_test) ** 2))
    print('Score: %.2f' % regr.score(X_test, y_test))

X_train, X_test, y_train, y_test = load_data()
test_Ridge(X_train, X_test, y_train, y_test)

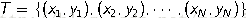

# Effect of different alpha values on ridge regression performance
def test_Ridge_alpha(*data):
    X_train, X_test, y_train, y_test = data
    print('Effect of different alpha values on ridge regression performance')
    alphas = [0.01, 0.02, 0.05, 0.1, 0.2, 0.5, 1, 2, 5, 10, 20, 50, 100, 200, 500, 1000]
    scores = []
    # Fit one model per alpha and record its test score
    for alpha in alphas:
        regr = linear_model.Ridge(alpha=alpha)
        regr.fit(X_train, y_train)
        scores.append(regr.score(X_test, y_test))
    # Plot the score against alpha on a logarithmic x axis
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    ax.plot(alphas, scores)
    ax.set_xlabel(r"$\alpha$")
    ax.set_ylabel('score')
    ax.set_xscale('log')
    ax.set_title('Ridge')
    plt.show()

X_train, X_test, y_train, y_test = load_data()
test_Ridge_alpha(X_train, X_test, y_train, y_test)

# Lasso regression
def test_Lasso(*data):
    X_train, X_test, y_train, y_test = data
    regr = linear_model.Lasso()
    regr.fit(X_train, y_train)
    print('Lasso regression')
    print('Coefficients:%s, intercept %.2f' % (regr.coef_, regr.intercept_))
    print('Mean squared error: %.2f' % np.mean((regr.predict(X_test) - y_test) ** 2))
    print('Score: %.2f' % regr.score(X_test, y_test))

X_train, X_test, y_train, y_test = load_data()
test_Lasso(X_train, X_test, y_train, y_test)

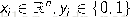

# Effect of different alpha values on Lasso regression performance
def test_Lasso_alpha(*data):
    X_train, X_test, y_train, y_test = data
    print('Effect of different alpha values on Lasso regression performance')
    alphas = [0.01, 0.02, 0.05, 0.1, 0.2, 0.5, 1, 2, 5, 10, 20, 50, 100, 200, 500, 1000]
    scores = []
    # Fit one model per alpha and record its test score
    for alpha in alphas:
        regr = linear_model.Lasso(alpha=alpha)
        regr.fit(X_train, y_train)
        scores.append(regr.score(X_test, y_test))
    # Plot the score against alpha on a logarithmic x axis
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    ax.plot(alphas, scores)
    ax.set_xlabel(r"$\alpha$")
    ax.set_ylabel('score')
    ax.set_xscale('log')
    ax.set_title('Lasso')
    plt.show()

X_train, X_test, y_train, y_test = load_data()
test_Lasso_alpha(X_train, X_test, y_train, y_test)

# ElasticNet regression
def test_ElasticNet(*data):
    X_train, X_test, y_train, y_test = data
    regr = linear_model.ElasticNet()
    regr.fit(X_train, y_train)
    print('ElasticNet regression')
    print('Coefficients:%s, intercept %.2f' % (regr.coef_, regr.intercept_))
    print('Mean squared error: %.2f' % np.mean((regr.predict(X_test) - y_test) ** 2))
    print('Score: %.2f' % regr.score(X_test, y_test))

X_train, X_test, y_train, y_test = load_data()
test_ElasticNet(X_train, X_test, y_train, y_test)

# Effect of different alpha and l1_ratio (rho) values on ElasticNet performance
def test_ElasticNet_alpha_rho(*data):
    X_train, X_test, y_train, y_test = data
    print('Effect of different alpha and rho values on ElasticNet performance')
    alphas = np.logspace(-2, 2)
    rhos = np.linspace(0.01, 1)
    scores = []
    for alpha in alphas:
        for rho in rhos:
            regr = linear_model.ElasticNet(alpha=alpha, l1_ratio=rho)
            regr.fit(X_train, y_train)
            scores.append(regr.score(X_test, y_test))
    # Visualize how alpha and rho affect performance.
    # indexing='ij' makes grid rows follow alpha, matching the loop order used to fill scores
    alphas, rhos = np.meshgrid(alphas, rhos, indexing='ij')
    scores = np.array(scores).reshape(alphas.shape)
    from matplotlib import cm
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1, projection='3d')
    surf = ax.plot_surface(alphas, rhos, scores, rstride=1, cstride=1,
                           cmap=cm.jet, linewidth=0, antialiased=False)
    fig.colorbar(surf, shrink=0.5, aspect=5)
    ax.set_xlabel(r"$\alpha$")
    ax.set_ylabel(r"$\rho$")
    ax.set_zlabel('score')
    ax.set_title('ElasticNet')
    plt.show()

X_train, X_test, y_train, y_test = load_data()
test_ElasticNet_alpha_rho(X_train, X_test, y_train, y_test)

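# Load the iris dataset bundled with sklearn
def load_data1():
    from sklearn.model_selection import train_test_split
    iris = datasets.load_iris()
    X = iris.data
    y = iris.target
    # Stratified split keeps the class proportions the same in the training and test sets
    return train_test_split(X, y, test_size=0.25, random_state=0, stratify=y)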

# Logistic regression
def test_LogisticRegression(*data):
    X_train, X_test, y_train, y_test = data
    regr = linear_model.LogisticRegression()
    regr.fit(X_train, y_train)
    print('Logistic regression')
    print('Coefficients:%s, intercept %s' % (regr.coef_, regr.intercept_))
    # Mean accuracy on the test set
    print('Score: %.2f' % regr.score(X_test, y_test))

X_train, X_test, y_train, y_test = load_data1()
test_LogisticRegression(X_train, X_test, y_train, y_test)

# Multinomial (multiclass) logistic regression
def test_LogisticRegression_multinomial(*data):
    X_train, X_test, y_train, y_test = data
    # multi_class is deprecated in recent scikit-learn releases, where the lbfgs solver
    # already fits a multinomial model for multiclass targets
    regr = linear_model.LogisticRegression(multi_class='multinomial', solver='lbfgs')
    regr.fit(X_train, y_train)
    print('Multinomial logistic regression')
    print('Coefficients:%s, intercept %s' % (regr.coef_, regr.intercept_))
    print('Score: %.2f' % regr.score(X_test, y_test))

X_train, X_test, y_train, y_test = load_data1()
test_LogisticRegression_multinomial(X_train, X_test, y_train, y_test)

Linear regression model

Coefficients:[ -43.26774487 -208.67053951 593.39797213 302.89814903 -560.27689824
 261.47657106 -8.83343952 135.93715156 703.22658427 28.34844354], intercept 153.07
Mean squared error: 3180.20
Score: 0.36

Ridge regression
Coefficients:[ 21.19927911 -60.47711393 302.87575204 179.41206395 8.90911449
 -28.8080548 -149.30722541 112.67185758 250.53760873 99.57749017], intercept 152.45
Mean squared error: 3192.33
Score: 0.36

Effect of different alpha values on ridge regression performance

Lasso regression
Coefficients:[ 0. -0. 442.67992538 0. 0. 0.
 -0. 0. 330.76014648 0. ], intercept 152.52
Mean squared error: 3583.42
Score: 0.28

Effect of different alpha values on Lasso regression performance

ElasticNet regression
Coefficients:[ 0.40560736 0. 3.76542456 2.38531508 0.58677945 0.22891647
 -2.15858149 2.33867566 3.49846121 1.98299707], intercept 151.93
Mean squared error: 4922.36
Score: 0.01

Effect of different alpha and rho values on ElasticNet performance

Logistic regression
Coefficients:[[ 0.39310895 1.35470406 -2.12308303 -0.96477916]
 [ 0.22462128 -1.34888898 0.60067997 -1.24122398]
 [-1.50918214 -1.29436177 2.14150484 2.2961458 ]], intercept [ 0.24122458 1.13775782 -1.09418724]
Score: 0.97

Multinomial logistic regression
Coefficients:[[-0.38365872 0.85501328 -2.27224244 -0.98486171]
 [ 0.34359409 -0.37367647 -0.03043553 -0.86135577]
 [ 0.04006464 -0.48133681 2.30267797 1.84621747]], intercept [ 8.79984878 2.46853199 -11.26838077]
Score: 1.00
