Displaying color and depth images: initialize, bind the stream, fetch frames.

1. Extracting color data:

#include <iostream>

#include "Windows.h"

#include "MSR_NuiApi.h"

#include "cv.h"

#include "highgui.h"

using namespace std;

int main(int argc,char * argv[])

{

IplImage *colorImage=NULL;

colorImage = cvCreateImage(cvSize(640, 480), 8, 3);

// Initialize NUI

HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_COLOR);

if( hr != S_OK )

{

cout<<"NuiInitialize failed"<<endl;

return hr;

}

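// Create the event handle

HANDLE h1 = CreateEvent( NULL, TRUE, FALSE, NULL );// signaled when the Kinect has a new frame ready to be read

HANDLE h2 = NULL;// receives the stream handle, used later to fetch frames

hr = NuiImageStreamOpen(NUI_IMAGE_TYPE_COLOR,NUI_IMAGE_RESOLUTION_640x480,0,2,h1,&h2);// open the Kinect color image stream

if( FAILED( hr ) )// check whether the stream was opened successfully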

{

cout<<"Could not open color image stream video"<<endl;

NuiShutdown();

return hr;

}

// Start reading color frames

while(1)

{

const NUI_IMAGE_FRAME * pImageFrame = NULL;

if (WaitForSingleObject(h1, INFINITE)==0)// wait until a new frame is available

{

NuiImageStreamGetNextFrame(h2, 0, &pImageFrame);// fetch the frame

NuiImageBuffer *pTexture = pImageFrame->pFrameTexture;

KINECT_LOCKED_RECT LockedRect;

pTexture->LockRect(0, &LockedRect, NULL, 0);// lock the frame data into LockedRect, which has two members: Pitch (bytes per row) and pBits (address of the first byte)

if( LockedRect.Pitch != 0 )

{

cvZero(colorImage);

for (int i=0; i<480; i++)

{

uchar* ptr = (uchar*)(colorImage->imageData+i*colorImage->widthStep);

BYTE * pBuffer = (BYTE*)(LockedRect.pBits)+i*LockedRect.Pitch;// each byte is one color channel, so BYTE is used directly

for (int j=0; j<640; j++)

{

ptr[3*j] = pBuffer[4*j];// each pixel is 4 bytes: bytes 0, 1, 2 are B, G, R; the 4th is currently unused

ptr[3*j+1] = pBuffer[4*j+1];

ptr[3*j+2] = pBuffer[4*j+2];

}

}

cvShowImage("colorImage", colorImage);// display the image

}

else

{

cout<<"Buffer length of received texture is bogus\r\n"<<endl;

}

// Release this frame so the next one can be delivered

NuiImageStreamReleaseFrame( h2, pImageFrame );

}

if (cvWaitKey(30) == 27)

break;

}

// Shut down NUI

NuiShutdown();

return 0;

}

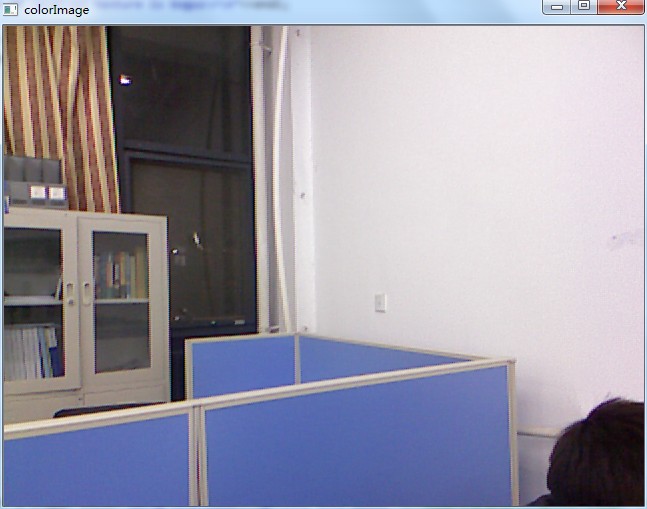

实验结果:
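The inner loop of the color example walks the locked buffer row by row using Pitch and copies three of every four bytes. Below is a minimal, SDK-free sketch of that same copy, so it can be compiled and checked without a Kinect attached. The function name `bgrxToBgr` and the use of `std::vector` are illustrative assumptions; they are not part of the Kinect SDK or OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Copies a 32-bit BGRX frame (4 bytes per pixel, rows srcPitch bytes apart)
// into a tightly packed 24-bit BGR buffer, dropping the unused fourth byte.
// Hypothetical helper for illustration only.
std::vector<std::uint8_t> bgrxToBgr(const std::uint8_t* src, int width, int height,
                                    std::size_t srcPitch)
{
    assert(src != nullptr);
    assert(width > 0 && height > 0);
    // A row must hold at least width pixels; it may be longer because of padding.
    assert(srcPitch >= static_cast<std::size_t>(width) * 4);

    std::vector<std::uint8_t> dst(static_cast<std::size_t>(width) * height * 3);
    for (int y = 0; y < height; ++y) {
        // Advance by the pitch, not by width * 4, so row padding is skipped.
        const std::uint8_t* srcRow = src + static_cast<std::size_t>(y) * srcPitch;
        std::uint8_t* dstRow = dst.data() + static_cast<std::size_t>(y) * width * 3;
        for (int x = 0; x < width; ++x) {
            dstRow[3 * x + 0] = srcRow[4 * x + 0]; // blue
            dstRow[3 * x + 1] = srcRow[4 * x + 1]; // green
            dstRow[3 * x + 2] = srcRow[4 * x + 2]; // red
        }
    }
    return dst;
}
```

In the program above, the equivalent call would pass `LockedRect.pBits`, 640, 480 and `LockedRect.Pitch`. The point of the sketch is the row stepping: rows are addressed through the pitch, which is why the original code multiplies `i` by `LockedRect.Pitch` rather than by 640*4.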

2. Extracting depth data with player IDs

#include <iostream>

#include "Windows.h"

#include "MSR_NuiApi.h"

#include "cv.h"

#include "highgui.h"

using namespace std;

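RGBQUAD Nui_ShortToQuad_Depth( USHORT s )// this function is taken from the sample code that ships with the SDK

{

USHORT RealDepth = (s & 0xfff8) >> 3;// extract the distance

USHORT Player = s & 7 ;// extract the player ID

// Each value is 16 bits: the lowest 3 bits are the ID of the tracked person, and the remaining 13 bits are the depth

BYTE l = 255 - (BYTE)(256*RealDepth/0x0fff);// the value is a distance, normalized here to 0-255. For a long time I did not understand why the divisor is 0x0fff; my best guess is in note ② of section 4, and explanations from anyone who knows are welcome.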

RGBQUAD q;

q.rgbRed = q.rgbBlue = q.rgbGreen = 0;

switch( Player )

{

case 0:

q.rgbRed = l / 2;

q.rgbBlue = l / 2;

q.rgbGreen = l / 2;

break;

case 1:

q.rgbRed = l;

break;

case 2:

q.rgbGreen = l;

break;

case 3:

q.rgbRed = l / 4;

q.rgbGreen = l;

q.rgbBlue = l;

break;

case 4:

q.rgbRed = l;

q.rgbGreen = l;

q.rgbBlue = l / 4;

break;

case 5:

q.rgbRed = l;

q.rgbGreen = l / 4;

q.rgbBlue = l;

break;

case 6:

q.rgbRed = l / 2;

q.rgbGreen = l / 2;

q.rgbBlue = l;

break;

case 7:

q.rgbRed = 255 - ( l / 2 );

q.rgbGreen = 255 - ( l / 2 );

q.rgbBlue = 255 - ( l / 2 );

}

return q;

}

int main(int argc,char * argv[])

{

IplImage *depthIndexImage=NULL;

depthIndexImage = cvCreateImage(cvSize(320, 240), 8, 3);

// Initialize NUI

HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX );

if( hr != S_OK )

{

cout<<"NuiInitialize failed"<<endl;

return hr;

}

// Open the Kinect depth stream (depth plus player index)

HANDLE h1 = CreateEvent( NULL, TRUE, FALSE, NULL );

HANDLE h2 = NULL;

hr = NuiImageStreamOpen(NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX,NUI_IMAGE_RESOLUTION_320x240,0,2,h1,&h2);// according to the documentation, when initialized with NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX the resolution can only be 320x240 or 80x60

if( FAILED( hr ) )

{

cout<<"Could not open depth image stream"<<endl;

NuiShutdown();

return hr;

}

while(1)

{

const NUI_IMAGE_FRAME * pImageFrame = NULL;

if (WaitForSingleObject(h1, INFINITE)==0)

{

NuiImageStreamGetNextFrame(h2, 0, &pImageFrame);

NuiImageBuffer *pTexture = pImageFrame->pFrameTexture;

KINECT_LOCKED_RECT LockedRect;

pTexture->LockRect(0, &LockedRect, NULL, 0);

if( LockedRect.Pitch != 0 )

{

cvZero(depthIndexImage);

for (int i=0; i<240; i++)

{

uchar* ptr = (uchar*)(depthIndexImage->imageData+i*depthIndexImage->widthStep);

BYTE * pBuffer = (BYTE *)(LockedRect.pBits)+i*LockedRect.Pitch;

USHORT * pBufferRun = (USHORT*) pBuffer;// note the cast: unlike the color data above, each depth value occupies 2 bytes, so the row is read as USHORT rather than BYTE

for (int j=0; j<320; j++)

{

RGBQUAD rgb = Nui_ShortToQuad_Depth(pBufferRun[j]);// convert the raw value to a color

ptr[3*j] = rgb.rgbBlue;

ptr[3*j+1] = rgb.rgbGreen;

ptr[3*j+2] = rgb.rgbRed;

}

}

cvShowImage("depthIndexImage", depthIndexImage);

}

else

{

cout<<"Buffer length of received texture is bogus\r\n"<<endl;

}

// Release this frame so the next one can be delivered

NuiImageStreamReleaseFrame( h2, pImageFrame );

}

if (cvWaitKey(30) == 27)

break;

}

// Shut down NUI

NuiShutdown();

return 0;

}

Result:
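As a side note on the bit layout used by Nui_ShortToQuad_Depth: each 16-bit value carries the player ID in its lowest 3 bits and the depth in the remaining 13 bits. The sketch below isolates just that unpacking step so it can be tested on its own. The struct `DepthPixel` and the function `unpackDepthAndPlayer` are hypothetical names introduced for illustration; the shift and mask are the same ones the listing uses (`s >> 3` gives the same result as `(s & 0xfff8) >> 3` for a 16-bit value).

```cpp
#include <cstdint>

// Hypothetical container for one unpacked depth sample.
struct DepthPixel {
    std::uint16_t depth;       // upper 13 bits of the raw value
    std::uint8_t  playerIndex; // lower 3 bits: 0 means no player, 1..7 is a player ID
};

// Splits a raw 16-bit sample from the depth-plus-player stream into its two fields.
constexpr DepthPixel unpackDepthAndPlayer(std::uint16_t raw)
{
    return DepthPixel{
        static_cast<std::uint16_t>(raw >> 3),
        static_cast<std::uint8_t>(raw & 0x7)
    };
}
```

For example, a raw value built as `(1000 << 3) | 2` unpacks to depth 1000 and player 2. Note that 13 bits can hold values up to 8191, which is larger than the 0x0fff divisor used in the normalization, so very large readings would wrap around when cast to BYTE in the listing.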

3. Extracting depth data without player IDs

#include <iostream>

#include "Windows.h"

#include "MSR_NuiApi.h"

#include "cv.h"

#include "highgui.h"

using namespace std;

int main(int argc,char * argv[])

{

IplImage *depthIndexImage=NULL;

depthIndexImage = cvCreateImage(cvSize(320, 240), 8, 1);// a grayscale image represents the depth here: the farther away, the darker

// Initialize NUI

HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_DEPTH);

if( hr != S_OK )

{

cout<<"NuiInitialize failed"<<endl;

return hr;

}

// Open the Kinect depth stream

HANDLE h1 = CreateEvent( NULL, TRUE, FALSE, NULL );

HANDLE h2 = NULL;

hr = NuiImageStreamOpen(NUI_IMAGE_TYPE_DEPTH,NUI_IMAGE_RESOLUTION_320x240,0,2,h1,&h2);// as noted in section 2, the documentation limits NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX to 320x240 or 80x60; the same 320x240 resolution is used here

if( FAILED( hr ) )

{

cout<<"Could not open depth image stream"<<endl;

NuiShutdown();

return hr;

}

while(1)

{

const NUI_IMAGE_FRAME * pImageFrame = NULL;

if (WaitForSingleObject(h1, INFINITE)==0)

{

NuiImageStreamGetNextFrame(h2, 0, &pImageFrame);

NuiImageBuffer *pTexture = pImageFrame->pFrameTexture;

KINECT_LOCKED_RECT LockedRect;

pTexture->LockRect(0, &LockedRect, NULL, 0);

if( LockedRect.Pitch != 0 )

{

cvZero(depthIndexImage);

for (int i=0; i<240; i++)

{

uchar* ptr = (uchar*)(depthIndexImage->imageData+i*depthIndexImage->widthStep);

BYTE * pBuffer = (BYTE *)(LockedRect.pBits)+i*LockedRect.Pitch;

USHORT * pBufferRun = (USHORT*) pBuffer;// note the cast: unlike the color data above, each depth value occupies 2 bytes, so the row is read as USHORT rather than BYTE

for (int j=0; j<320; j++)

{

ptr[j] = 255 - (BYTE)(256*pBufferRun[j]/0x0fff);// normalize the value directly

}

}

cvShowImage("depthIndexImage", depthIndexImage);

}

else

{

cout<<"Buffer length of received texture is bogus\r\n"<<endl;

}

// Release this frame so the next one can be delivered

NuiImageStreamReleaseFrame( h2, pImageFrame );

}

if (cvWaitKey(30) == 27)

break;

}

// Shut down NUI

NuiShutdown();

return 0;

}

Result:
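A remark on the normalization `255 - (BYTE)(256*d/0x0fff)` used in the listing: for a reading of exactly 0x0fff the quotient is 256, which wraps to 0 when cast to BYTE, so the farthest pixel comes out white instead of black. The following is a sketch of a clamped variant, not the SDK's own formula; `depthToGray` and `kMaxDepth` are names assumed for illustration. It also checks the hexadecimal distances quoted in section 4 at compile time.

```cpp
#include <algorithm>
#include <cstdint>

// Divisor used by the article's normalization: the largest 12-bit value.
constexpr std::uint32_t kMaxDepth = 0x0fff;

// The hexadecimal distances quoted in section 4, checked at compile time.
static_assert(kMaxDepth == 4095, "0x0fff is 4095");
static_assert(0x0DAC == 3500, "3.5 m expressed in millimetres");
static_assert(0x0F87 == 3975, "largest distance reported in the article");

// Maps a depth reading to a gray level: 0 maps to 255 (near, bright) and
// anything at or beyond kMaxDepth maps to 0 (far, dark). Clamping first
// and scaling by 255 keeps the result inside 0..255 without wrap-around.
inline std::uint8_t depthToGray(std::uint16_t depth)
{
    const std::uint32_t clamped = std::min<std::uint32_t>(depth, kMaxDepth);
    return static_cast<std::uint8_t>(255u - (255u * clamped) / kMaxDepth);
}
```

In the inner loop this would replace the right-hand side of the `ptr[j] = ...` assignment. Whether clamping or wrapping is preferable depends on how out-of-range readings should look on screen; the sketch simply makes the choice explicit.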

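4. Points to note

① NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX and NUI_INITIALIZE_FLAG_USES_DEPTH cannot both be used to create data streams at the same time; I confirmed this in my experiments. Also, the plain depth image (without player IDs) is mirrored left to right.

② The normalization divides by 0x0fff because, according to the official documentation, the Kinect's effective range is 1.2 m to 3.5 m. 3.5 m is 3500 mm, which is 0x0DAC in hexadecimal. In my own tests, the largest distance I could measure in my lab was 0x0F87, that is, 3975 mm. My guess is that the official sample simply uses the limit value 0x0FFF (4095, the largest 12-bit value) as the divisor.

③ cv.h and highgui.h are headers from OpenCV, which I use because I am familiar with it.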
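Regarding note ①, if the mirrored depth image is a problem, each row can be reversed before display. The sketch below does this on a plain single-channel buffer so that it is testable without OpenCV; `mirrorHorizontally` is a hypothetical helper, and it assumes tightly packed rows (no padding between them). With an IplImage, OpenCV's own `cvFlip` with a positive flip mode should achieve the same effect.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Mirrors a single-channel 8-bit image left to right, in place.
// Assumes rows are stored back to back, width bytes each.
void mirrorHorizontally(std::vector<std::uint8_t>& pixels, int width, int height)
{
    assert(width > 0 && height > 0);
    assert(pixels.size() == static_cast<std::size_t>(width) * height);

    for (int y = 0; y < height; ++y) {
        // Reverse the pixels of one row; other rows are untouched.
        auto rowBegin = pixels.begin() + static_cast<std::ptrdiff_t>(y) * width;
        std::reverse(rowBegin, rowBegin + width);
    }
}
```

Reversing whole bytes only works for one byte per pixel; a 3-channel image would need to swap pixels as 3-byte groups, otherwise the channel order inside each pixel gets reversed as well.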

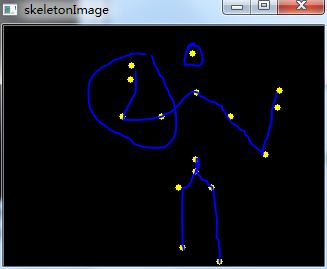

5. Extracting skeleton data:

#include <iostream>

#include "Windows.h"

#include "MSR_NuiApi.h"

#include "cv.h"

#include "highgui.h"

using namespace std;

void Nui_DrawSkeleton(NUI_SKELETON_DATA * pSkel,int whichone, IplImage *SkeletonImage)// draw the skeleton; the second parameter is unused, but may be useful if you want to track several people

{

float fx, fy;

CvPoint SkeletonPoint[NUI_SKELETON_POSITION_COUNT];

for (int i = 0; i < NUI_SKELETON_POSITION_COUNT; i++)// convert every joint position to depth image coordinates

{

NuiTransformSkeletonToDepthImageF( pSkel->SkeletonPositions[i], &fx, &fy );

SkeletonPoint[i].x = (int)(fx*320+0.5f);

SkeletonPoint[i].y = (int)(fy*240+0.5f);

}

for (int i = 0; i < NUI_SKELETON_POSITION_COUNT ; i++)

{

if (pSkel->eSkeletonPositionTrackingState[i] != NUI_SKELETON_POSITION_NOT_TRACKED)// a joint has one of three states: not tracked, tracked, or inferred from the tracked joints

{

cvCircle(SkeletonImage, SkeletonPoint[i], 3, cvScalar(0, 255, 255), -1, 8, 0);

}

}

return;

}

int main(int argc,char * argv[])

{

IplImage *skeletonImage=NULL;

skeletonImage = cvCreateImage(cvSize(320, 240), 8, 3);

// Initialize NUI

HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_SKELETON );

if( hr != S_OK )

{

cout<<"NuiInitialize failed"<<endl;

return hr;

}

// Create the event and enable skeleton tracking

HANDLE h1 = CreateEvent( NULL, TRUE, FALSE, NULL );

hr = NuiSkeletonTrackingEnable( h1, 0 );// enable skeleton tracking events

if( FAILED( hr ) )

{

cout << "NuiSkeletonTrackingEnable fail" << endl;

NuiShutdown();

return hr;

}

while(1)

{

if(WaitForSingleObject(h1, INFINITE)==0)

{

NUI_SKELETON_FRAME SkeletonFrame;// the skeleton frame

bool bFoundSkeleton = false;

if( SUCCEEDED(NuiSkeletonGetNextFrame( 0, &SkeletonFrame )) )// fetch the next skeleton frame directly from the Kinect

{

for( int i = 0 ; i < NUI_SKELETON_COUNT ; i++ )

{

if( SkeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED )// up to six people can be tracked; check whether each slot (which may be empty rather than a person) is actually tracked

{

bFoundSkeleton = true;

}

}

}

if( !bFoundSkeleton )

{

continue;

}

// Smooth the skeleton frame to reduce jitter

NuiTransformSmooth(&SkeletonFrame,NULL);

// Draw each tracked skeleton

cvZero(skeletonImage);

for( int i = 0 ; i < NUI_SKELETON_COUNT ; i++ )

{

// Show a skeleton only if it is tracked and the shoulder-center joint (near the neck) is at least inferred.

if( SkeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED &&

SkeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState[NUI_SKELETON_POSITION_SHOULDER_CENTER] != NUI_SKELETON_POSITION_NOT_TRACKED)

{

Nui_DrawSkeleton(&SkeletonFrame.SkeletonData[i], i , skeletonImage);

}

}

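cvShowImage("skeletonImage", skeletonImage);// display the skeleton image

if (cvWaitKey(30) == 27)// exit on ESC, as in the earlier examples, so that NuiShutdown() is reached

break;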

}

}

// Shut down NUI

NuiShutdown();

return 0;

}
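The drawing function scales the fx, fy values from NuiTransformSkeletonToDepthImageF by 320 and 240, which suggests they are normalized image coordinates. Below is a small sketch of that scaling step with an explicit bounds check, written without the SDK so it can be tested in isolation. `PixelPoint`, `toPixel` and `insideImage` are hypothetical names; the rounding matches the `(int)(fx*320+0.5f)` expression in the listing.

```cpp
#include <cassert>

// Hypothetical stand-in for CvPoint, to keep the sketch free of OpenCV.
struct PixelPoint {
    int x;
    int y;
};

// Scales normalized coordinates to pixel coordinates, rounding to the
// nearest pixel for non-negative inputs (the cast truncates toward zero).
inline PixelPoint toPixel(float fx, float fy, int width, int height)
{
    assert(width > 0 && height > 0);
    return PixelPoint{
        static_cast<int>(fx * width + 0.5f),
        static_cast<int>(fy * height + 0.5f)
    };
}

// Returns true when the point addresses a valid pixel of a width x height image.
inline bool insideImage(PixelPoint p, int width, int height)
{
    return p.x >= 0 && p.x < width && p.y >= 0 && p.y < height;
}
```

A joint at (0.5, 0.5) lands on pixel (160, 120) of a 320x240 image, while (1.0, 1.0) maps to (320, 240), which is one past the last valid pixel. A check like `insideImage` matters mainly when writing pixels directly rather than through a drawing call.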
