caffe源码

本文主要包含如下内容:

- caffe源码

- caffeproto

- commonhpp和commoncpp

- internal_threadhpp和internal_threadcpp

- blobhpp和blobcpp

- layerhpp和layercpp

- nethpp和netcpp

- solverhpp和solvercpp

caffe.proto

位于src/caffe/proto目录下,该文件是一个消息格式文件,后缀为proto的文件即为消息协议原型定义文件,通过使用描述性语言来定义程序中需要用到的数据格式。

Caffe中,数据存储格式为Google Protocol Buffer,简称PB。PB是一种轻便、高效的结构化数据储存格式,可以用于结构化数据串行化,很适合做数据存储或RPC数据交换格式。它可以用于通讯协议、数据存储等领域的语言无关、平台无关、可扩展的序列化结构数据格式。

message表示需要传输的参数的结构体optional是一个可选成员,即消息中可以不包含该成员,如果包含成员而未进行初始化,会赋予默认默认值。required表明是必须包含该成员,且初值必须被提供。repeated表示可以重复多次的成员,出现零次也可以出现在成员中。属于blob的: BlobProto, BlobProtoVector, Datum

- 属于layer的: FillerParameter, LayerParameter,ArgMaxParameter,TransformationParameter, LossParameter, AccuracyParameter,ConcatParameter, ContrastiveLossParameter, ConvolutionParameter,

DataParameter, DropoutParameter, DummyDataParameter, EltwiseParameter,ExpParameter, HDF5DataParameter, HDF5OutputParameter, HingeLossParameter,ImageDataParameter, InfogainLossParameter, InnerProductParameter,LRNParameter, MemoryDataParameter, MVNParameter, PoolingParameter,PowerParameter, PythonParameter, ReLUParameter, SigmoidParameter,

SliceParameter, SoftmaxParameter, TanHParameter, ThresholdParameter等 - 属于net的: NetParameter, SolverParameter, SolverState, NetState, NetStateRule,

ParamSpec

自己定义proto,生成对应的.h和.c文件

package lm;

message helloworld

{

required int32 id = 1; // ID

required string str = 2; // str

optional int32 opt = 3; // optional field

} 控制台中输入:protoc -I=. --cpp_out=. ./caffe.proto,其中,-I表示proto所在路径,--cpp_out表示生成的头文件和实现文件所在的路径

生成对应的C++类的头文件Caffe.pb.h,以及对应的实现文件Caffe.pb.cc,里面是一些关于数据结构的标准化操作:

void CopyFrom();

void MergeFrom();

void Clear();

bool IsInitialized() const;

int ByteSize() const;

bool MergePartialFromCodedStream();

void SerializeWithCachedSizes() const;

SerializeWithCachedSizesToArray() const;

int GetCachedSize();

void SharedCtor();

void SharedDtor();

void SetCachedSize() const;

proto源码,粘贴重要部分

syntax = "proto2";

package caffe;

message BlobShape { //数据块形状,指定Blob的形状或维度

repeated int64 dim = 1 [packed = true]; //数据块形状定义为NUM*Channel*Height*Wight,采用高维数据的封装

}

message BlobProto { //数据块(数据 形状 微分)

optional BlobShape shape = 7; //维度

repeated float data = 5 [packed = true]; //前向传播计算数据

repeated float diff = 6 [packed = true]; //反向传播计算数据

repeated double double_data = 8 [packed = true];

repeated double double_diff = 9 [packed = true];

// 数据4D形状,已使用BlobShape shape代替

optional int32 num = 1 [default = 0];

optional int32 channels = 2 [default = 0];

optional int32 height = 3 [default = 0];

optional int32 width = 4 [default = 0];

}

...

message Datum { //数据层

optional int32 channels = 1; //图像通道数

optional int32 height = 2; //图像的高度

optional int32 width = 3; //图像的宽度

optional bytes data = 4; //真实的图像数据,以字节存储

optional int32 label = 5; //这张图片对应的标签

repeated float float_data = 6; //float类型的数据

optional bool encoded = 7 [default = false]; //是否需要解码

}

...

message NetParameter {

optional string name = 1; //网络的名字

repeated string input = 3; //输入blob的名字

repeated BlobShape input_shape = 8;

repeated int32 input_dim = 4; //输入层blob的维度

optional bool force_backward = 5 [default = false]; //网络是否进行反向传播

optional NetState state = 6;

optional bool debug_info = 7 [default = false];

repeated LayerParameter layer = 100; // ID 100 so layers are printed last.

repeated V1LayerParameter layers = 2;

}

...

message SolverParameter {

optional string net = 24;

optional NetParameter net_param = 25;

optional string train_net = 1; //训练网络的proto file

repeated string test_net = 2; //测试网络的Proto file

optional NetParameter train_net_param = 21; // Inline train net params.

repeated NetParameter test_net_param = 22; // Inline test net params.

optional NetState train_state = 26;

repeated NetState test_state = 27;

repeated int32 test_iter = 3; //每次测试时的迭代次数

optional int32 test_interval = 4 [default = 0]; //两次测试的间隔迭代次数

optional bool test_compute_loss = 19 [default = false]; //默认不计算loss

optional bool test_initialization = 32 [default = true]; //如果为真,则在训练前运行一次测试,以确保内存足够,并打印初始损失值

optional float base_lr = 5; //基础学习率

optional int32 display = 6; //打印信息的遍历间隔,设置为0则不打印

optional int32 average_loss = 33 [default = 1]; //打印最后一个迭代的平均loss

optional int32 max_iter = 7; //最大迭代次数

optional int32 iter_size = 36 [default = 1];

optional string lr_policy = 8; //学习率调节策略

optional float gamma = 9; // The parameter to compute the learning rate.

optional float power = 10; // The parameter to compute the learning rate.

optional float momentum = 11; //动量

optional float weight_decay = 12; //权值衰减

optional string regularization_type = 29 [default = "L2"];

optional int32 stepsize = 13; //学习速率的衰减步长

repeated int32 stepvalue = 34;

optional float clip_gradients = 35 [default = -1];

optional int32 snapshot = 14 [default = 0]; // The snapshot interval

optional string snapshot_prefix = 15; // The prefix for the snapshot.

optional bool snapshot_diff = 16 [default = false];

enum SnapshotFormat {

HDF5 = 0;

BINARYPROTO = 1;

}

optional SnapshotFormat snapshot_format = 37 [default = BINARYPROTO];

enum SolverMode {

CPU = 0;

GPU = 1;

}

optional SolverMode solver_mode = 17 [default = GPU];

optional int32 device_id = 18 [default = 0];

optional int64 random_seed = 20 [default = -1];

optional string type = 40 [default = "SGD"];

optional float delta = 31 [default = 1e-8];

optional float momentum2 = 39 [default = 0.999];

optional float rms_decay = 38 [default = 0.99];

optional bool debug_info = 23 [default = false];

optional bool snapshot_after_train = 28 [default = true];

enum SolverType {

SGD = 0;

NESTEROV = 1;

ADAGRAD = 2;

RMSPROP = 3;

ADADELTA = 4;

ADAM = 5;

}

optional SolverType solver_type = 30 [default = SGD];

}

...

message LayerParameter { //层参数(名称 类型 输入 输出 阶段 损失加权系数 全局乘数)

optional string name = 1; //类名称

optional string type = 2; //类类型

repeated string bottom = 3; //输入blob名称

repeated string top = 4; //输出blob名称

// The train / test phase for computation.

optional Phase phase = 10;

repeated float loss_weight = 5; //每层输出blob在目标损失函数中的加权系数,每层默认为0或1

repeated ParamSpec param = 6; //指定学习参数

repeated BlobProto blobs = 7; //每层数值参数的blobs

repeated bool propagate_down = 11; //指定是否向每个底部反向传播。如果未指定,Caffe将自动推断每个输入是否需要反向传播来计算参数梯度。如果为某些输入设置为true,则强制对这些输入的反向传播;如果某些输入设置为false,则跳过这些输入的反向传播。

repeated NetStateRule include = 8;

repeated NetStateRule exclude = 9;

optional TransformationParameter transform_param = 100;

optional LossParameter loss_param = 101;

//层类型指定参数

optional AccuracyParameter accuracy_param = 102;

optional ArgMaxParameter argmax_param = 103;

optional BatchNormParameter batch_norm_param = 139;

optional BiasParameter bias_param = 141;

optional ConcatParameter concat_param = 104;

optional ContrastiveLossParameter contrastive_loss_param = 105;

optional ConvolutionParameter convolution_param = 106;

optional CropParameter crop_param = 144;

optional DataParameter data_param = 107;

optional DropoutParameter dropout_param = 108;

optional DummyDataParameter dummy_data_param = 109;

optional EltwiseParameter eltwise_param = 110;

optional ELUParameter elu_param = 140;

optional EmbedParameter embed_param = 137;

optional ExpParameter exp_param = 111;

optional FlattenParameter flatten_param = 135;

optional HDF5DataParameter hdf5_data_param = 112;

optional HDF5OutputParameter hdf5_output_param = 113;

optional HingeLossParameter hinge_loss_param = 114;

optional ImageDataParameter image_data_param = 115;

optional InfogainLossParameter infogain_loss_param = 116;

optional InnerProductParameter inner_product_param = 117;

optional InputParameter input_param = 143;

optional LogParameter log_param = 134;

optional LRNParameter lrn_param = 118;

optional MemoryDataParameter memory_data_param = 119;

optional MVNParameter mvn_param = 120;

optional ParameterParameter parameter_param = 145;

optional PoolingParameter pooling_param = 121;

optional PowerParameter power_param = 122;

optional PReLUParameter prelu_param = 131;

optional PythonParameter python_param = 130;

optional RecurrentParameter recurrent_param = 146;

optional ReductionParameter reduction_param = 136;

optional ReLUParameter relu_param = 123;

optional ReshapeParameter reshape_param = 133;

optional ScaleParameter scale_param = 142;

optional SigmoidParameter sigmoid_param = 124;

optional SoftmaxParameter softmax_param = 125;

optional SPPParameter spp_param = 132;

optional SliceParameter slice_param = 126;

optional TanHParameter tanh_param = 127;

optional ThresholdParameter threshold_param = 128;

optional TileParameter tile_param = 138;

optional WindowDataParameter window_data_param = 129;

}

// Data 参数

message DataParameter {

// 只支持下面两种数据格式

enum DB {

LEVELDB = 0;

LMDB = 1;

}

// 指定数据路径

optional string source = 1;

// 指定batchsize

optional uint32 batch_size = 4;

// 指定数据格式

optional DB backend = 8 [default = LEVELDB];

// 强制将图片转为三通道彩色图片

optional bool force_encoded_color = 9 [default = false];

// 预先存取多少个batch到host memory

// (每次forward完成之后再全部重新取一个batch比较浪费时间),

// increase if data access bandwidth varies).

optional uint32 prefetch = 10 [default = 4];

}common.hpp和common.cpp

命名空间的使用:google cv caffe,然后在项目中就可以随意使用.单例化caffe类,并且封装了boost和cuda随机数生成的函数,提供了统一接口

common.hpp

#ifndef CAFFE_COMMON_HPP_

#define CAFFE_COMMON_HPP_

#include <boost/shared_ptr.hpp>

#include <gflags/gflags.h>

#include <glog/logging.h>

#include <climits>

#include <cmath>

#include <fstream>

#include <iostream>

#include <map>

#include <set>

#include <sstream>

#include <string>

#include <utility>

#include <vector>

#include "caffe/util/device_alternate.hpp"

#define STRINGIFY(m) #m

#define AS_STRING(m) STRINGIFY(m)

// 使用GFLAGS_GFLAGS_H_来检测是否为2.1版本,将命名空间google更改为gflags

#ifndef GFLAGS_GFLAGS_H_

namespace gflags = google;

#endif // GFLAGS_GFLAGS_H_

// 禁止某个类通过构造函数直接初始化为另一个类(copy,copy assignment),声明在private即可完成目的

#define DISABLE_COPY_AND_ASSIGN(classname) \

private:\

classname(const classname&);\

classname& operator=(const classname&)

// 模板类实例化,用float和double规范实例化一个类

#define INSTANTIATE_CLASS(classname) \

char gInstantiationGuard##classname; \

template class classname<float>; \

template class classname<double>

// 初始化GPU的前向传播函数

#define INSTANTIATE_LAYER_GPU_FORWARD(classname) \

template void classname<float>::Forward_gpu( \

const std::vector<Blob<float>*>& bottom, \

const std::vector<Blob<float>*>& top); \

template void classname<double>::Forward_gpu( \

const std::vector<Blob<double>*>& bottom, \

const std::vector<Blob<double>*>& top);

// 初始化GPU的反向传播函数

#define INSTANTIATE_LAYER_GPU_BACKWARD(classname) \

template void classname<float>::Backward_gpu( \

const std::vector<Blob<float>*>& top, \

const std::vector<bool>& propagate_down, \ //指定是否进行反向传播

const std::vector<Blob<float>*>& bottom); \

template void classname<double>::Backward_gpu( \

const std::vector<Blob<double>*>& top, \

const std::vector<bool>& propagate_down, \

const std::vector<Blob<double>*>& bottom)

//初始化GPU的前向传播和反向传播函数,即对其进行了封装

#define INSTANTIATE_LAYER_GPU_FUNCS(classname) \

INSTANTIATE_LAYER_GPU_FORWARD(classname); \

INSTANTIATE_LAYER_GPU_BACKWARD(classname)

// 一个简单的宏来标记未实现的代码_Not Implemented Yet

#define NOT_IMPLEMENTED LOG(FATAL) << "Not Implemented Yet"

// See PR #1236

namespace cv { class Mat; }

namespace caffe {

// 使用boost的shared_ptr智能指针,而不是C++11,因为现在的cuda不支持

using boost::shared_ptr;

// Common functions and classes from std that caffe often uses.

using std::fstream;

using std::ios;

using std::isnan;

using std::isinf;

using std::iterator;

using std::make_pair;

using std::map;

using std::ostringstream;

using std::pair;

using std::set;

using std::string;

using std::stringstream;

using std::vector;

// 一个全局初始化函数,初始化gflags和glog,您应该在主函数中调用该函数。目前,它初始化了google标志和谷歌日志记录。

void GlobalInit(int* pargc, char*** pargv);

// Caffe 类

class Caffe {

public:

~Caffe();

// Get 函数利用Boost的局部线程储存功能,Get()就是Caffe类

static Caffe& Get();

enum Brew { CPU, GPU };

// random number generator 随机数生成器

class RNG {

public:

RNG(); //利用系统的熵池或者时间来初始化RNG内部的generator_

explicit RNG(unsigned int seed);

explicit RNG(const RNG&);

RNG& operator=(const RNG&);

void* generator();

private:

class Generator;

shared_ptr<Generator> generator_;

};

// Getters for boost rng, curand, and cublas handles

inline static RNG& rng_stream() {

if (!Get().random_generator_) {

Get().random_generator_.reset(new RNG());

}

return *(Get().random_generator_);

}

#ifndef CPU_ONLY

inline static cublasHandle_t cublas_handle() { return Get().cublas_handle_; }

inline static curandGenerator_t curand_generator() {

return Get().curand_generator_;

}

#endif

/*设置CPU和GPU以及训练的时候线程并行数目*/

// Returns the mode: running on CPU or GPU.

inline static Brew mode() { return Get().mode_; }

// 变量的设置器设置模式

inline static void set_mode(Brew mode) { Get().mode_ = mode; }

// 手动设定随机数生成器的种子

static void set_random_seed(const unsigned int seed);

// Sets the device. Since we have cublas and curand stuff, set device also

// requires us to reset those values.

static void SetDevice(const int device_id);

// Prints the current GPU status. 显示当前GPU的状态

static void DeviceQuery();

// Check if specified device is available

static bool CheckDevice(const int device_id);

// 设置设备id

static int FindDevice(const int start_id = 0);

// Parallel training info

inline static int solver_count() { return Get().solver_count_; }

inline static void set_solver_count(int val) { Get().solver_count_ = val; }

inline static bool root_solver() { return Get().root_solver_; }

inline static void set_root_solver(bool val) { Get().root_solver_ = val; }

protected:

#ifndef CPU_ONLY

cublasHandle_t cublas_handle_;

curandGenerator_t curand_generator_;

#endif

shared_ptr<RNG> random_generator_;

Brew mode_;

int solver_count_;

bool root_solver_;

private:

// 实现中构造函数被声明为私有方法,这样从根本上杜绝外部使用构造函数生成新的实例

Caffe();

// 同时禁用拷贝函数与赋值操作符(声明为私有但是不提供实现)避免通过拷贝函数或赋值操作生成新实例。

DISABLE_COPY_AND_ASSIGN(Caffe);

};

} // namespace caffe

#endif // CAFFE_COMMON_HPP_internal_thread.hpp和internal_thread.cpp

InternalThread类实际上就是boost库的thread的封装,对线程进行控制和使用,类的构造函数默认初始化boost::thread,析构函数直接调用线程停止函数.成员函数包括开始线程,结束线程,判断线程是否开始,要求线程结束.

internal_thread.hpp

#ifndef CAFFE_INTERNAL_THREAD_HPP_

#define CAFFE_INTERNAL_THREAD_HPP_

#include "caffe/common.hpp"

namespace boost { class thread; }

namespace caffe {

/**

* Virtual class encapsulate boost::thread for use in base class

* The child class will acquire the ability to run a single thread,

* by reimplementing the virtual function InternalThreadEntry.

* 封装了pthread函数,继承的子类可以得到一个单独的线程,主要作用是

* 在计算当前的一批数据时,在后台获取新一批的数据。

*/

class InternalThread {

public:

InternalThread() : thread_() {} //构造函数

virtual ~InternalThread(); //析构函数

/**

* Caffe's thread local state will be initialized using the current

* thread values, e.g. device id, solver index etc. The random seed

* is initialized using caffe_rng_rand.

* caffe的线程局部状态将使用当前线程值来进行初始化,例如设备id,solver的编号,随机数种子.

*/

void StartInternalThread();

/** Will not return until the internal thread has exited. */

void StopInternalThread(); //停止内部线程函数

bool is_started() const; //线程退出之前不会返回

protected:

/* Implement this method in your subclass

with the code you want your thread to run. */

virtual void InternalThreadEntry() {} //定义了一个虚函数,要求继承类中实现相关代码

/* Should be tested when running loops to exit when requested. */

bool must_stop(); //是否应该进行通过测试以退出循环,检测终结条件的退出方式

private:

void entry(int device, Caffe::Brew mode, int rand_seed, int solver_count,

bool root_solver);

shared_ptr<boost::thread> thread_;

};

} // namespace caffe

#endif // CAFFE_INTERNAL_THREAD_HPP_internal_thread.cpp

#include <boost/thread.hpp>

#include <exception>

#include "caffe/internal_thread.hpp"

#include "caffe/util/math_functions.hpp"

namespace caffe {

InternalThread::~InternalThread() { //析构函数,调用停止内部线程函数

StopInternalThread();

}

bool InternalThread::is_started() const { //测试程序是否已经开启

return thread_ && thread_->joinable();

}

bool InternalThread::must_stop() { //自动检查终结条件的退出方式

return thread_ && thread_->interruption_requested();

}

void InternalThread::StartInternalThread() { //初始化线程

CHECK(!is_started()) << "Threads should persist and not be restarted.";

int device = 0;

#ifndef CPU_ONLY

CUDA_CHECK(cudaGetDevice(&device));

#endif

Caffe::Brew mode = Caffe::mode();

int rand_seed = caffe_rng_rand();

int solver_count = Caffe::solver_count();

bool root_solver = Caffe::root_solver();

try { //重新实例化一个thread对象给thread_指针,该线程的执行是entry函数

thread_.reset(new boost::thread(&InternalThread::entry, this, device, mode,

rand_seed, solver_count, root_solver));

} catch (std::exception& e) {

LOG(FATAL) << "Thread exception: " << e.what();

}

}

void InternalThread::entry(int device, Caffe::Brew mode, int rand_seed,

int solver_count, bool root_solver) { //线程所要执行的函数

#ifndef CPU_ONLY

CUDA_CHECK(cudaSetDevice(device));

#endif

Caffe::set_mode(mode);

Caffe::set_random_seed(rand_seed);

Caffe::set_solver_count(solver_count);

Caffe::set_root_solver(root_solver);

InternalThreadEntry();

}

void InternalThread::StopInternalThread() { //停止线程

if (is_started()) { //如果线程已经开始了

thread_->interrupt(); //打断线程

try {

thread_->join(); //等待线程结束

} catch (boost::thread_interrupted&) { //如果被打断,啥也不干

} catch (std::exception& e) { //如果发生其他错误则记录到日志

LOG(FATAL) << "Thread exception: " << e.what();

}

}

}

} // namespace caffeblob.hpp和blob.cpp

Blob是四维连续数组(4-D, type = float32),如果使用(n,k,h,w)表示的话,每一维的意思分别是:

- n: numbei.输入数据量,比如mini-batch的大小.

- c: channel.通道数量.

- h,w: height,width.图像的高度和宽度.

Blob里面定义的函数:

Reshape(): 改变一个blob的大小;

ReshapeLike(): 为data和diff重新分配一块空间

Num_axes(): 返回blob的维度,要是是4维的话,返回4.

Count(): 计算得到count = num * channels * height * width

Offset(): 可以得到输入blob数据(n,k,h,w)的偏移量位置

CopyFrom(): 从source拷贝数据,copy_diff来作为标志区分是拷贝data还是diff

FromProto(): 从proto读数据进来,反序列化

ToProto(): 把blob数据保存到proto中.

blob.hpp

主要是分配内存和释放内存。class yncedMemory定义了内存分配管理和CPU与GPU之间同步的函数。

Blob会使用SyncedMem自动决定什么时候去copy data以提高运行效率,通常情况是仅当gnu或cpu修改后有copy操作。

#ifndef CAFFE_BLOB_HPP_

#define CAFFE_BLOB_HPP_

#include <algorithm>

#include <string>

#include <vector>

#include "caffe/common.hpp" //单例化caffe类,并且封装了boost和cuda随机数生成的函数,提供了统一接口

#include "caffe/proto/caffe.pb.h"

#include "caffe/syncedmem.hpp"

const int kMaxBlobAxes = 32;

namespace caffe {

/**

* @brief A wrapper around SyncedMemory holders serving as the basic

* computational unit through which Layer%s, Net%s, and Solver%s

* interact.

*

* TODO(dox): more thorough description.

*/

template <typename Dtype>

class Blob {

public:

Blob() : data_(), diff_(), count_(0), capacity_(0) {} //默认构造函数:初始化列表 {空函数体}

// 当构造函数被声明 explicit 时,编译器将不使用它作为转换操作符,禁止单参数构造函数的隐式转换

explicit Blob(const int num, const int channels, const int height,

const int width); //可以通过设置数据维度(N,C,H,W)初始化

explicit Blob(const vector<int>& shape); //也可以通过传入vector<int>直接传入维数

/// @brief Deprecated; use <code>Reshape(const vector<int>& shape)</code>.

void Reshape(const int num, const int channels, const int height,

const int width);

/*

*Blob作为一个最基础的类,其中构造函数开辟一个内存空间来存储数据, Reshape 函数在 Layer 中的

*reshape或者forward操作中来 adjust the dimensions of a top blob 。同时在改变 Blob 大小时,

*内存将会被重新分配如果内存大小不够了,并且额外的内存将不会被释放。对 input 的 blob 进行 reshape,

* 如果立⻢调用 Net::Backward 是会出错的,因为 reshape 之后,要么 Net::forward 或者 Net::Reshape

* 就会被调用来将新的 input shape 传播到高层

*/

void Reshape(const vector<int>& shape);

void Reshape(const BlobShape& shape);

void ReshapeLike(const Blob& other);

// 内联函数:通过内联函数,编译器不需要跳转到内存其他地址去执行函数调用,也不需要保留函数对调用时的现场数据,好处是可以节省调用的开销,但代码总量上升

// 输出blol的形状,用于打印blob的log

inline string shape_string() const {

ostringstream stream;

for (int i = 0; i < shape_.size(); ++i) {

stream << shape_[i] << " ";

}

stream << "(" << count_ << ")";

return stream.str();

}

// 获取shape

inline const vector<int>& shape() const { return shape_; }

// 获取index维的大小,返回某一维的尺寸,对于维数(N,C,H,W),shape(0)返回N,shape(-1)返回W。

inline int shape(int index) const {

return shape_[CanonicalAxisIndex(index)];

}

// 返回Blob维度数,对于维数(N,C,H,W),返回4

inline int num_axes() const { return shape_.size(); }

// 返回Blob维度数,对于维数(N,C,H,W),返回N×C×H×W,当前data的大小

inline int count() const { return count_; }

/*

*多个count()函数,主要为了统计Blob的容量(volume), 或者是某一片(slice),从某个axis到具体某个axis的

*shape乘机

*/

// 获取某几维数据的大小,对于维数(N,C,H,W),count(0, 3)返回N×C×H

inline int count(int start_axis, int end_axis) const {

CHECK_LE(start_axis, end_axis);

CHECK_GE(start_axis, 0);

CHECK_GE(end_axis, 0);

CHECK_LE(start_axis, num_axes());

CHECK_LE(end_axis, num_axes());

int count = 1;

for (int i = start_axis; i < end_axis; ++i) {

count *= shape(i);

}

return count;

}

// 获取某一维到结束数据的大小,对于维数(N,C,H,W),count(1)返回C×H×W

inline int count(int start_axis) const {

return count(start_axis, num_axes());

}

// 标准化索引,主要对参数索引进行标准化,以满足要求,转换坐标轴索引[-N, N]为[0, N]

inline int CanonicalAxisIndex(int axis_index) const {

CHECK_GE(axis_index, -num_axes())

<< "axis " << axis_index << " out of range for " << num_axes()

<< "-D Blob with shape " << shape_string();

CHECK_LT(axis_index, num_axes())

<< "axis " << axis_index << " out of range for " << num_axes()

<< "-D Blob with shape " << shape_string();

if (axis_index < 0) {

return axis_index + num_axes();

}

return axis_index;

}

// Blob中的4个基本变量num,channel,height,width可以直接通过shape(0)shape(1)shape(2)shape(3)来访问

/// @brief Deprecated legacy shape accessor num: use shape(0) instead.

inline int num() const { return LegacyShape(0); }

/// @brief Deprecated legacy shape accessor channels: use shape(1) instead.

inline int channels() const { return LegacyShape(1); }

/// @brief Deprecated legacy shape accessor height: use shape(2) instead.

inline int height() const { return LegacyShape(2); }

/// @brief Deprecated legacy shape accessor width: use shape(3) instead.

inline int width() const { return LegacyShape(3); }

inline int LegacyShape(int index) const {

CHECK_LE(num_axes(), 4)

<< "Cannot use legacy accessors on Blobs with > 4 axes.";

CHECK_LT(index, 4);

CHECK_GE(index, -4);

if (index >= num_axes() || index < -num_axes()) {

// Axis is out of range, but still in [0, 3] (or [-4, -1] for reverse

// indexing) -- this special case simulates the one-padding used to fill

// extraneous axes of legacy blobs.

return 1;

}

return shape(index);

}

// 计算offset物理偏移量,(n,c,h,w)的偏移量为((n*channels()+c)*height()+h)*width()+w

inline int offset(const int n, const int c = 0, const int h = 0,

const int w = 0) const {

CHECK_GE(n, 0);

CHECK_LE(n, num());

CHECK_GE(channels(), 0);

CHECK_LE(c, channels());

CHECK_GE(height(), 0);

CHECK_LE(h, height());

CHECK_GE(width(), 0);

CHECK_LE(w, width());

return ((n * channels() + c) * height() + h) * width() + w;

}

inline int offset(const vector<int>& indices) const {

CHECK_LE(indices.size(), num_axes());

int offset = 0;

for (int i = 0; i < num_axes(); ++i) {

offset *= shape(i);

if (indices.size() > i) {

CHECK_GE(indices[i], 0);

CHECK_LT(indices[i], shape(i));

offset += indices[i];

}

}

return offset;

}

/**

* @brief Copy from a source Blob.

*

* @param source the Blob to copy from

* @param copy_diff: if false, copy the data; if true, copy the diff

* @param reshape: if false, require this Blob to be pre-shaped to the shape

* of other (and die otherwise); if true, Reshape this Blob to other's

* shape if necessary

*/

// 从source拷贝数据, copy_diff来作为标志区分是拷贝data还是diff。

void CopyFrom(const Blob<Dtype>& source, bool copy_diff = false,

bool reshape = false);

/*

* 这一部分函数主要通过给定的位置访问数据,根据位置计算与数据起始的偏差offset,在通过cpu_data*指针获* 得地址

*/

// 获取某位置的data_数据

inline Dtype data_at(const int n, const int c, const int h,

const int w) const {

return cpu_data()[offset(n, c, h, w)];

}

// 获取某位置的diff_数据

inline Dtype diff_at(const int n, const int c, const int h,

const int w) const {

return cpu_diff()[offset(n, c, h, w)];

}

inline Dtype data_at(const vector<int>& index) const {

return cpu_data()[offset(index)];

}

inline Dtype diff_at(const vector<int>& index) const {

return cpu_diff()[offset(index)];

}

inline const shared_ptr<SyncedMemory>& data() const {

CHECK(data_);

return data_;

}

inline const shared_ptr<SyncedMemory>& diff() const {

CHECK(diff_);

return diff_;

}

const Dtype* cpu_data() const; // 访问数据,只读数据

void set_cpu_data(Dtype* data); // 设置data_的cpu指针,只是修改了指针

const int* gpu_shape() const;

const Dtype* gpu_data() const; // 获取data_的gpu指针

const Dtype* cpu_diff() const; // 获取diff_的cpu指针

const Dtype* gpu_diff() const; // 获取diff_的gpu指针

Dtype* mutable_cpu_data(); // mutable方式可改写数据

Dtype* mutable_gpu_data();

Dtype* mutable_cpu_diff();

Dtype* mutable_gpu_diff();

void Update(); // 更新data_的数据,减去diff_的数据,就是合并data和diff

void FromProto(const BlobProto& proto, bool reshape = true); // 从proto读数据进来,其实就是反序列化

void ToProto(BlobProto* proto, bool write_diff = false) const; // blob数据保存到proto中

// 计算L1范数

/// @brief Compute the sum of absolute values (L1 norm) of the data.

Dtype asum_data() const;

/// @brief Compute the sum of absolute values (L1 norm) of the diff.

Dtype asum_diff() const;

// 计算L2范数

/// @brief Compute the sum of squares (L2 norm squared) of the data.

Dtype sumsq_data() const;

/// @brief Compute the sum of squares (L2 norm squared) of the diff.

Dtype sumsq_diff() const;

// 正规化,进行相应的尺度变化

/// @brief Scale the blob data by a constant factor.

void scale_data(Dtype scale_factor);

/// @brief Scale the blob diff by a constant factor.

void scale_diff(Dtype scale_factor);

/**

* @brief Set the data_ shared_ptr to point to the SyncedMemory holding the

* data_ of Blob other -- useful in Layer%s which simply perform a copy

* in their Forward pass.

*

* This deallocates the SyncedMemory holding this Blob's data_, as

* shared_ptr calls its destructor when reset with the "=" operator.

*/

void ShareData(const Blob& other); // Blob& other 赋值给data_

/**

* @brief Set the diff_ shared_ptr to point to the SyncedMemory holding the

* diff_ of Blob other -- useful in Layer%s which simply perform a copy

* in their Forward pass.

*

* This deallocates the SyncedMemory holding this Blob's diff_, as

* shared_ptr calls its destructor when reset with the "=" operator.

*/

void ShareDiff(const Blob& other); //Blob& other 赋值给diff_

bool ShapeEquals(const BlobProto& other); //判断other与本blob形状是否相同

protected:

shared_ptr<SyncedMemory> data_; //存储前向传递数据

shared_ptr<SyncedMemory> diff_; //存储反向传递梯度

shared_ptr<SyncedMemory> shape_data_;

vector<int> shape_; //参数维度

int count_; //Blob存储的元素个数(shape_所有元素乘积)

int capacity_; //当前Blob的元素个数(控制动态分配)

DISABLE_COPY_AND_ASSIGN(Blob); //禁止拷贝和赋值运算

}; // class Blob

} // namespace caffe

#endif // CAFFE_BLOB_HPP_blob.cpp

#include <climits>

#include <vector>

#include "caffe/blob.hpp"

#include "caffe/common.hpp"

#include "caffe/syncedmem.hpp"

#include "caffe/util/math_functions.hpp"

namespace caffe {

// 改变Blob的维度,将num,channels,height,width传递给vector<Dtype> shape

template <typename Dtype>

void Blob<Dtype>::Reshape(const int num, const int channels, const int height,

const int width) {

vector<int> shape(4);

shape[0] = num;

shape[1] = channels;

shape[2] = height;

shape[3] = width;

Reshape(shape);

}

template <typename Dtype>

// 完成blob形状shape_的记录,大小count_的计算,合适大小capacity_存储的申请

void Blob<Dtype>::Reshape(const vector<int>& shape) {

CHECK_LE(shape.size(), kMaxBlobAxes);

count_ = 1;

shape_.resize(shape.size());

if (!shape_data_ || shape_data_->size() < shape.size() * sizeof(int)) {

shape_data_.reset(new SyncedMemory(shape.size() * sizeof(int)));

} //为shape存储大小数据重新开辟空间

int* shape_data = static_cast<int*>(shape_data_->mutable_cpu_data());

for (int i = 0; i < shape.size(); ++i) {

CHECK_GE(shape[i], 0);

CHECK_LE(shape[i], INT_MAX / count_) << "blob size exceeds INT_MAX";

count_ *= shape[i];

shape_[i] = shape[i];

shape_data[i] = shape[i];

}

if (count_ > capacity_) { //由于count_超过了当前capacity,因此需要重新分配空间

capacity_ = count_;

data_.reset(new SyncedMemory(capacity_ * sizeof(Dtype))); // 只是构造了SyncedMemory对象,并未真正分配内存和显存,真正分配是在第一次访问数据时,为data_数据重新开辟空回

diff_.reset(new SyncedMemory(capacity_ * sizeof(Dtype))); // 为diff_数据重新开辟空间

}

}

template <typename Dtype> // BlobShape 在caffe.proto 中定义,将BlobShape类型的数据 转换为vector<int>

void Blob<Dtype>::Reshape(const BlobShape& shape) {

CHECK_LE(shape.dim_size(), kMaxBlobAxes);

vector<int> shape_vec(shape.dim_size());

for (int i = 0; i < shape.dim_size(); ++i) {

shape_vec[i] = shape.dim(i);

}

Reshape(shape_vec);

}

template <typename Dtype> //用已知Blob的shape来对shape_进行reshape

void Blob<Dtype>::ReshapeLike(const Blob<Dtype>& other) {

Reshape(other.shape());

}

//Blob初始化,用num,channels,height,width初始化

template <typename Dtype>

Blob<Dtype>::Blob(const int num, const int channels, const int height,

const int width)

// capacity_ must be initialized before calling Reshape

: capacity_(0) { //在Reshape之前,需要将capacity初始化

Reshape(num, channels, height, width);

}

//用shape 初始化

template <typename Dtype>

Blob<Dtype>::Blob(const vector<int>& shape)

// capacity_ must be initialized before calling Reshape

: capacity_(0) {

Reshape(shape);

}

//返回gpu中的数据,并返回内存指针

template <typename Dtype>

const int* Blob<Dtype>::gpu_shape() const {

CHECK(shape_data_);

return (const int*)shape_data_->gpu_data();

}

// 调用SyncedMemory的数据访问函数cpu_data(),并返回内存指针

template <typename Dtype>

const Dtype* Blob<Dtype>::cpu_data() const {

CHECK(data_);

return (const Dtype*)data_->cpu_data();

}

// 设置cpu 数据

template <typename Dtype>

void Blob<Dtype>::set_cpu_data(Dtype* data) {

CHECK(data);

data_->set_cpu_data(data);

}

template <typename Dtype>

const Dtype* Blob<Dtype>::gpu_data() const {

CHECK(data_);

return (const Dtype*)data_->gpu_data();

}

//调用SyncedMemory的数据访问函数cpu_diff(),并返回内存指针

template <typename Dtype>

const Dtype* Blob<Dtype>::cpu_diff() const {

CHECK(diff_);

return (const Dtype*)diff_->cpu_data();

}

template <typename Dtype>

const Dtype* Blob<Dtype>::gpu_diff() const {

CHECK(diff_);

return (const Dtype*)diff_->gpu_data();

}

template <typename Dtype>

Dtype* Blob<Dtype>::mutable_cpu_data() {

CHECK(data_);

return static_cast<Dtype*>(data_->mutable_cpu_data());

}

template <typename Dtype>

Dtype* Blob<Dtype>::mutable_gpu_data() {

CHECK(data_);

return static_cast<Dtype*>(data_->mutable_gpu_data());

}

template <typename Dtype>

Dtype* Blob<Dtype>::mutable_cpu_diff() {

CHECK(diff_);

return static_cast<Dtype*>(diff_->mutable_cpu_data());

}

template <typename Dtype>

Dtype* Blob<Dtype>::mutable_gpu_diff() {

CHECK(diff_);

return static_cast<Dtype*>(diff_->mutable_gpu_data());

}

//当前的blob的data_指向other.data()的数据

template <typename Dtype>

void Blob<Dtype>::ShareData(const Blob& other) {

CHECK_EQ(count_, other.count());

data_ = other.data();

}

template <typename Dtype>

void Blob<Dtype>::ShareDiff(const Blob& other) {

CHECK_EQ(count_, other.count());

diff_ = other.diff();

}

// The "update" method is used for parameter blobs in a Net, which are stored

// as Blob<float> or Blob<double> -- hence we do not define it for

// Blob<int> or Blob<unsigned int>.

template <> void Blob<unsigned int>::Update() { NOT_IMPLEMENTED; }

template <> void Blob<int>::Update() { NOT_IMPLEMENTED; }

// 更新单个参数权值,updata函数用于参数blob参数的更新

template <typename Dtype>

void Blob<Dtype>::Update() {

// We will perform update based on where the data is located.

switch (data_->head()) {

case SyncedMemory::HEAD_AT_CPU: // 参数更新,新参数(data_) = 原参数(data_) - 梯度(diff_)

// perform computation on CPU

caffe_axpy<Dtype>(count_, Dtype(-1),

static_cast<const Dtype*>(diff_->cpu_data()),

static_cast<Dtype*>(data_->mutable_cpu_data()));

break;

case SyncedMemory::HEAD_AT_GPU:

case SyncedMemory::SYNCED:

#ifndef CPU_ONLY

// perform computation on GPU // 在gpu上参数更新

caffe_gpu_axpy<Dtype>(count_, Dtype(-1),

static_cast<const Dtype*>(diff_->gpu_data()),

static_cast<Dtype*>(data_->mutable_gpu_data()));

#else

NO_GPU;

#endif

break;

default:

LOG(FATAL) << "Syncedmem not initialized.";

}

}

template <> unsigned int Blob<unsigned int>::asum_data() const {

NOT_IMPLEMENTED;

return 0;

}

template <> int Blob<int>::asum_data() const {

NOT_IMPLEMENTED;

return 0;

}

template <typename Dtype>

Dtype Blob<Dtype>::asum_data() const { //计算data_ L1范式,计算所有元素绝对值之和

if (!data_) { return 0; }

switch (data_->head()) {

case SyncedMemory::HEAD_AT_CPU:

return caffe_cpu_asum(count_, cpu_data());

case SyncedMemory::HEAD_AT_GPU:

case SyncedMemory::SYNCED:

#ifndef CPU_ONLY

{

Dtype asum;

caffe_gpu_asum(count_, gpu_data(), &asum);

return asum;

}

#else

NO_GPU;

#endif

case SyncedMemory::UNINITIALIZED:

return 0;

default:

LOG(FATAL) << "Unknown SyncedMemory head state: " << data_->head();

}

return 0;

}

template <> unsigned int Blob<unsigned int>::asum_diff() const {

NOT_IMPLEMENTED;

return 0;

}

template <> int Blob<int>::asum_diff() const {

NOT_IMPLEMENTED;

return 0;

}

template <typename Dtype>

Dtype Blob<Dtype>::asum_diff() const { //计算diff_ L1范式,所有元素的元素的绝对值之和

if (!diff_) { return 0; }

switch (diff_->head()) {

case SyncedMemory::HEAD_AT_CPU:

return caffe_cpu_asum(count_, cpu_diff());

case SyncedMemory::HEAD_AT_GPU:

case SyncedMemory::SYNCED:

#ifndef CPU_ONLY

{

Dtype asum;

caffe_gpu_asum(count_, gpu_diff(), &asum);

return asum;

}

#else

NO_GPU;

#endif

case SyncedMemory::UNINITIALIZED:

return 0;

default:

LOG(FATAL) << "Unknown SyncedMemory head state: " << diff_->head();

}

return 0;

}

template <> unsigned int Blob<unsigned int>::sumsq_data() const {

NOT_IMPLEMENTED;

return 0;

}

template <> int Blob<int>::sumsq_data() const {

NOT_IMPLEMENTED;

return 0;

}

template <typename Dtype>

Dtype Blob<Dtype>::sumsq_data() const { //计算data_ L2范式,计算所有元素的平方和

Dtype sumsq;

const Dtype* data;

if (!data_) { return 0; }

switch (data_->head()) {

case SyncedMemory::HEAD_AT_CPU:

data = cpu_data();

sumsq = caffe_cpu_dot(count_, data, data);

break;

case SyncedMemory::HEAD_AT_GPU:

case SyncedMemory::SYNCED:

#ifndef CPU_ONLY

data = gpu_data();

caffe_gpu_dot(count_, data, data, &sumsq);

#else

NO_GPU;

#endif

break;

case SyncedMemory::UNINITIALIZED:

return 0;

default:

LOG(FATAL) << "Unknown SyncedMemory head state: " << data_->head();

}

return sumsq;

}

template <> unsigned int Blob<unsigned int>::sumsq_diff() const {

NOT_IMPLEMENTED;

return 0;

}

template <> int Blob<int>::sumsq_diff() const {

NOT_IMPLEMENTED;

return 0;

}

template <typename Dtype>

Dtype Blob<Dtype>::sumsq_diff() const { //计算diff_ L2范式,计算所有元素的平方和

Dtype sumsq;

const Dtype* diff;

if (!diff_) { return 0; }

switch (diff_->head()) {

case SyncedMemory::HEAD_AT_CPU:

diff = cpu_diff();

sumsq = caffe_cpu_dot(count_, diff, diff);

break;

case SyncedMemory::HEAD_AT_GPU:

case SyncedMemory::SYNCED:

#ifndef CPU_ONLY

diff = gpu_diff();

caffe_gpu_dot(count_, diff, diff, &sumsq);

break;

#else

NO_GPU;

#endif

case SyncedMemory::UNINITIALIZED:

return 0;

default:

LOG(FATAL) << "Unknown SyncedMemory head state: " << data_->head();

}

return sumsq;

}

template <> void Blob<unsigned int>::scale_data(unsigned int scale_factor) {

NOT_IMPLEMENTED;

}

template <> void Blob<int>::scale_data(int scale_factor) {

NOT_IMPLEMENTED;

}

template <typename Dtype>

void Blob<Dtype>::scale_data(Dtype scale_factor) { //对data_乘上某个因子,data乘以sacle_factor

Dtype* data;

if (!data_) { return; }

switch (data_->head()) {

case SyncedMemory::HEAD_AT_CPU:

data = mutable_cpu_data();

caffe_scal(count_, scale_factor, data);

return;

case SyncedMemory::HEAD_AT_GPU:

case SyncedMemory::SYNCED:

#ifndef CPU_ONLY

data = mutable_gpu_data();

caffe_gpu_scal(count_, scale_factor, data);

return;

#else

NO_GPU;

#endif

case SyncedMemory::UNINITIALIZED:

return;

default:

LOG(FATAL) << "Unknown SyncedMemory head state: " << data_->head();

}

}

template <> void Blob<unsigned int>::scale_diff(unsigned int scale_factor) {

NOT_IMPLEMENTED;

}

template <> void Blob<int>::scale_diff(int scale_factor) {

NOT_IMPLEMENTED;

}

template <typename Dtype>

void Blob<Dtype>::scale_diff(Dtype scale_factor) { //对diff_乘上某个因子,diff乘以sacle_factor

Dtype* diff;

if (!diff_) { return; }

switch (diff_->head()) {

case SyncedMemory::HEAD_AT_CPU:

diff = mutable_cpu_diff();

caffe_scal(count_, scale_factor, diff);

return;

case SyncedMemory::HEAD_AT_GPU:

case SyncedMemory::SYNCED:

#ifndef CPU_ONLY

diff = mutable_gpu_diff();

caffe_gpu_scal(count_, scale_factor, diff);

return;

#else

NO_GPU;

#endif

case SyncedMemory::UNINITIALIZED:

return;

default:

LOG(FATAL) << "Unknown SyncedMemory head state: " << diff_->head();

}

}

// 检查当前的blob和已知的other的shape是否相同,相同则返回ture.当然,首先将BlobProto转换为shape类型

template <typename Dtype>

bool Blob<Dtype>::ShapeEquals(const BlobProto& other) {

if (other.has_num() || other.has_channels() ||

other.has_height() || other.has_width()) {

// Using deprecated 4D Blob dimensions --

// shape is (num, channels, height, width).

// Note: we do not use the normal Blob::num(), Blob::channels(), etc.

// methods as these index from the beginning of the blob shape, where legacy

// parameter blobs were indexed from the end of the blob shape (e.g., bias

// Blob shape (1 x 1 x 1 x N), IP layer weight Blob shape (1 x 1 x M x N)).

return shape_.size() <= 4 &&

LegacyShape(-4) == other.num() &&

LegacyShape(-3) == other.channels() &&

LegacyShape(-2) == other.height() &&

LegacyShape(-1) == other.width();

}

vector<int> other_shape(other.shape().dim_size());

for (int i = 0; i < other.shape().dim_size(); ++i) {

other_shape[i] = other.shape().dim(i);

}

return shape_ == other_shape;

}

// 从source拷贝数据, copy_diff来作为标志区分是拷贝data还是diff

template <typename Dtype>

void Blob<Dtype>::CopyFrom(const Blob& source, bool copy_diff, bool reshape) {

if (source.count() != count_ || source.shape() != shape_) {

if (reshape) {

ReshapeLike(source);

} else {

LOG(FATAL) << "Trying to copy blobs of different sizes.";

}

}

switch (Caffe::mode()) {

case Caffe::GPU:

if (copy_diff) {

caffe_copy(count_, source.gpu_diff(),

static_cast<Dtype*>(diff_->mutable_gpu_data()));

} else {

caffe_copy(count_, source.gpu_data(),

static_cast<Dtype*>(data_->mutable_gpu_data()));

}

break;

case Caffe::CPU:

if (copy_diff) {

caffe_copy(count_, source.cpu_diff(),

static_cast<Dtype*>(diff_->mutable_cpu_data()));

} else {

caffe_copy(count_, source.cpu_data(),

static_cast<Dtype*>(data_->mutable_cpu_data()));

}

break;

default:

LOG(FATAL) << "Unknown caffe mode.";

}

}

//从proto读数据进来,反序列化

template <typename Dtype>

void Blob<Dtype>::FromProto(const BlobProto& proto, bool reshape) {

if (reshape) {

vector<int> shape;

if (proto.has_num() || proto.has_channels() ||

proto.has_height() || proto.has_width()) {

// Using deprecated 4D Blob dimensions --

// shape is (num, channels, height, width).

shape.resize(4);

shape[0] = proto.num();

shape[1] = proto.channels();

shape[2] = proto.height();

shape[3] = proto.width();

} else {

shape.resize(proto.shape().dim_size());

for (int i = 0; i < proto.shape().dim_size(); ++i) {

shape[i] = proto.shape().dim(i);

}

}

Reshape(shape);

} else {

CHECK(ShapeEquals(proto)) << "shape mismatch (reshape not set)";

}

// copy data

Dtype* data_vec = mutable_cpu_data();

if (proto.double_data_size() > 0) {

CHECK_EQ(count_, proto.double_data_size());

for (int i = 0; i < count_; ++i) {

data_vec[i] = proto.double_data(i);

}

} else {

CHECK_EQ(count_, proto.data_size());

for (int i = 0; i < count_; ++i) {

data_vec[i] = proto.data(i);

}

}

if (proto.double_diff_size() > 0) {

CHECK_EQ(count_, proto.double_diff_size());

Dtype* diff_vec = mutable_cpu_diff();

for (int i = 0; i < count_; ++i) {

diff_vec[i] = proto.double_diff(i);

}

} else if (proto.diff_size() > 0) {

CHECK_EQ(count_, proto.diff_size());

Dtype* diff_vec = mutable_cpu_diff();

for (int i = 0; i < count_; ++i) {

diff_vec[i] = proto.diff(i);

}

}

}

// 把blob数据保存到proto中

template <>

void Blob<double>::ToProto(BlobProto* proto, bool write_diff) const {

proto->clear_shape();

for (int i = 0; i < shape_.size(); ++i) {

proto->mutable_shape()->add_dim(shape_[i]);

}

proto->clear_double_data();

proto->clear_double_diff();

const double* data_vec = cpu_data(); //定义指针,指针指向数据

for (int i = 0; i < count_; ++i) {

proto->add_double_data(data_vec[i]);

}

if (write_diff) {

const double* diff_vec = cpu_diff();

for (int i = 0; i < count_; ++i) {

proto->add_double_diff(diff_vec[i]);

}

}

}

template <>

void Blob<float>::ToProto(BlobProto* proto, bool write_diff) const {

proto->clear_shape();

for (int i = 0; i < shape_.size(); ++i) {

proto->mutable_shape()->add_dim(shape_[i]);

}

proto->clear_data();

proto->clear_diff();

const float* data_vec = cpu_data();

for (int i = 0; i < count_; ++i) {

proto->add_data(data_vec[i]);

}

if (write_diff) {

const float* diff_vec = cpu_diff();

for (int i = 0; i < count_; ++i) {

proto->add_diff(diff_vec[i]);

}

}

}

INSTANTIATE_CLASS(Blob);

template class Blob<int>;

template class Blob<unsigned int>;

} // namespace caffelayer.hpp和layer.cpp

layer中的主要参数:

LayerParameter layer_param_;//这个是protobuf文件中存储的layer参数vector<share_ptr<Blob<Dtype>>> blobs_;//这个存储的是layer的参数vector<bool> param_propagate_down_;//这个bool表示是否计算各个blob参数的diff,即传播误差

三个主要的接口

virtual void SetUp(const vector<Blob<Dtype>*>& bottom, vector<Blob<Dtype>*>* top);inline Dtype Forward(const vector<Blob<Dtype>*>& bottom, vector<Blob<Dtype>*>* top);inline void Backward(const vector<Blob<Dtype>*>& top, const vector<bool>& propagate_down, const <Blob<Dtype>*>* bottom);SetUp函数需要根据实际的参数设置进行实现,对各种类型的参数初始化;Forward和Backwar对应前向计算和反向更新,输入统一是bottom,输出为top,其中Backward里面有个peopagate_down参数,用来表示该Layer是否反向传播参数.Caffe::mode()具体选择使用CPU或GPU操作.

互斥体 mutex

一个互斥体一次只允许一个线程访问共享区.当一个线程想要访问共享区时,首先要做的就是锁住(Lock)互斥体.如果其他的线程已经锁住了互斥体,那么,就必须先等线程解锁了互斥体,这样就保证了同一时刻只有一个线程能访问共享区域.

metux有lock和unlock方法,boost::mutex为独占互斥类.构造与析构时则分别自动调用lock和unlock方法.

基类:layer.hpp

#ifndef CAFFE_LAYER_H_

#define CAFFE_LAYER_H_

#include <algorithm>

#include <string>

#include <vector>

#include "caffe/blob.hpp"

#include "caffe/common.hpp"

#include "caffe/layer_factory.hpp"

#include "caffe/proto/caffe.pb.h"

#include "caffe/util/math_functions.hpp"

namespace boost { class mutex; }

namespace caffe {

/**

* @brief An interface for the units of computation which can be composed into a

* Net.

*

* Layer%s must implement a Forward function, in which they take their input

* (bottom) Blob%s (if any) and compute their output Blob%s (if any).

* They may also implement a Backward function, in which they compute the error

* gradients with respect to their input Blob%s, given the error gradients with

* their output Blob%s.

*/

template <typename Dtype>

class Layer {

public:

/**

* You should not implement your own constructor. Any set up code should go

* to SetUp(), where the dimensions of the bottom blobs are provided to the

* layer.

* 显示的构造函数不需要重写,任何初始工作在SetUp()中完成

*/

// 构造方法只复制层参数说明的值,如果层说明参数中提供了权值和偏置参数,也复制. 继承自Layer类的子类都会显示的调用Layer的构造函数

explicit Layer(const LayerParameter& param)

: layer_param_(param), is_shared_(false) { //layer_param_是protobiuf文件中存储的layer参数

phase_ = param.phase(); //phase定义是训练还是测试,定义在proto中

// 在layer类中被初始化,如果blobs_size() > 0

// 在prototxt文件中一般没有提供blobs参数,所以这段代码一般不执行

if (layer_param_.blobs_size() > 0) {

blobs_.resize(layer_param_.blobs_size());

for (int i = 0; i < layer_param_.blobs_size(); ++i) { //申请空间,然后将传入的layer_param中的blob拷贝

blobs_[i].reset(new Blob<Dtype>());

blobs_[i]->FromProto(layer_param_.blobs(i));

}

}

}

// 虚析构

virtual ~Layer() {}

/**

* @brief Implements common layer setup functionality.实现每个对象的setup函数

* @param bottom the preshaped input blobs.bottom层的输入数据,blob中的存储空间已申请

* @param top

* the allocated but unshaped output blobs, to be shaped by Reshape.层的输出数据,blob对象已构造但是其中的存储空间未申请,具体空间大小需根据bottom blob大小和layer_param_共同决定,具体在Reshape函数现实

* Checks that the number of bottom and top blobs is correct.

* Calls LayerSetUp to do special layer setup for individual layer types,

* followed by Reshape to set up sizes of top blobs and internal buffers.

* Sets up the loss weight multiplier blobs for any non-zero loss weights.

* This method may not be overridden.

* 1. 检查输入输出blob个数是否满足要求,每个层能处理的输入输出数据不一样

* 2. 调用LayerSetUp函数初始化特殊的层,每个Layer子类需重写这个函数完成定制的初始化

* 3. 调用Reshape函数为top blob分配合适大小的存储空间

* 4. 为每个top blob设置损失权重乘子,非LossLayer为的top blob其值为零

*

* 此方法非虚函数,不用重写,模式固定

*/

// layer 初始化设置

void SetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) { //在模型初始化时重置 layers 及其相互之间的连接 ;

InitMutex();

CheckBlobCounts(bottom, top); //检查botton和top的blob是否正确

LayerSetUp(bottom, top); //调用layerSetup对每一具体的层做进一步设置,虚方法

Reshape(bottom, top); //用reshape来设置top blobs和internal buffer

SetLossWeights(top); //设置blob对每个非零的loss和weight

}

/**

* @brief Does layer-specific setup: your layer should implement this function

* as well as Reshape.定制初始化,每个子类layer必须实现此虚函数

*

* @param bottom

* the preshaped input blobs, whose data fields store the input data for

* this layer.输入blob, 数据成员data_和diff_存储了相关数据

*

* @param top

* the allocated but unshaped output blobs.输出blob, blob对象已构造但数据成员的空间尚未申请

*

* This method should do one-time layer specific setup. This includes reading

* and processing relevent parameters from the <code>layer_param_</code>.

* Setting up the shapes of top blobs and internal buffers should be done in

* <code>Reshape</code>, which will be called before the forward pass to

* adjust the top blob sizes.

* 此方法执行一次定制化的层初始化,包括从layer_param_读入并处理相关的层权值和偏置参数,调用Reshape函数申请top blob的存储空间,由派生类重写

*/

virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {} //需要在派生类中完成

/**

* @brief Whether a layer should be shared by multiple nets during data

* parallelism. By default, all layers except for data layers should

* not be shared. data layers should be shared to ensure each worker

* solver access data sequentially during data parallelism.

* 在数据并行期间时候应该有多个网络共享一个层.默认不应该共享数据层以外的所有图层

*/

virtual inline bool ShareInParallel() const { return false; }

/** @brief Return whether this layer is actually shared by other nets.

* If ShareInParallel() is true and using more than one GPU and the

* net has TRAIN phase, then this function is expected return true.

*/

inline bool IsShared() const { return is_shared_; }

/** @brief Set whether this layer is actually shared by other nets

* If ShareInParallel() is true and using more than one GPU and the

* net has TRAIN phase, then is_shared should be set true.

*/

inline void SetShared(bool is_shared) {

CHECK(ShareInParallel() || !is_shared)

<< type() << "Layer does not support sharing.";

is_shared_ = is_shared;

}

/**

* @brief Adjust the shapes of top blobs and internal buffers to accommodate

* the shapes of the bottom blobs.根据bottom blob的形状和layer_param_计算top blob的形状并为其分配存储空间

*

* @param bottom the input blobs, with the requested input shapes

* @param top the top blobs, which should be reshaped as needed

*

* This method should reshape top blobs as needed according to the shapes

* of the bottom (input) blobs, as well as reshaping any internal buffers

* and making any other necessary adjustments so that the layer can

* accommodate the bottom blobs.

* 每个子类Layer必须重写的Reshape函数,完成top blob形状的设置并为其分配存储空间

*/

//纯虚函数,形参为<Blob<Dtype>*>容器的const引用

virtual void Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) = 0;

/**

* @brief Given the bottom blobs, compute the top blobs and the loss.计算相应的output data blob, loss.

*

* @param bottom

* the input blobs, whose data fields store the input data for this layer

* @param top

* the preshaped output blobs, whose data fields will store this layers'

* outputs

* \return The total loss from the layer.

*

* The Forward wrapper calls the relevant device wrapper function

* (Forward_cpu or Forward_gpu) to compute the top blob values given the

* bottom blobs. If the layer has any non-zero loss_weights, the wrapper

* then computes and returns the loss.

*

* Your layer should implement Forward_cpu and (optionally) Forward_gpu.

* 这两个函数非虚函数,它们内部会调用如下虚函数(Forward_cpu and (optionally) Forward_gpu)完成数据前向传递和误差反向传播,

* 根据执行环境的不同每个子类Layer必须重写CPU和GPU版本

*/

inline Dtype Forward(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top); //从 bottom 层中接收数据,进行计算后将输出送入到 top 层中;

/**

* @brief Given the top blob error gradients, compute the bottom blob error

* gradients.

*

* @param top

* the output blobs, whose diff fields store the gradient of the error

* with respect to themselves

* @param propagate_down

* a vector with equal length to bottom, with each index indicating

* whether to propagate the error gradients down to the bottom blob at

* the corresponding index

* @param bottom

* the input blobs, whose diff fields will store the gradient of the error

* with respect to themselves after Backward is run

*

* The Backward wrapper calls the relevant device wrapper function

* (Backward_cpu or Backward_gpu) to compute the bottom blob diffs given the

* top blob diffs.

*

* Your layer should implement Backward_cpu and (optionally) Backward_gpu.

* 实现反向传播,给定top blob的erroe 果然gradient计算得到bottom的error gradient.输入为 output blobs.

*/

inline void Backward(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down,

const vector<Blob<Dtype>*>& bottom);//给定相对于 top 层输出的梯度,计算其相对于输入的梯度,并传递到 bottom层。一个有参数的 layer 需要计算相对于各个参数的梯度值并存储在内部。

/**

* @brief Returns the vector of learnable parameter blobs.

* 返回可学习的参数blobs

*/

vector<shared_ptr<Blob<Dtype> > >& blobs() {

return blobs_;

}

/**

* @brief Returns the layer parameter.

* 返回层参数

*/

const LayerParameter& layer_param() const { return layer_param_; }

/**

* @brief Writes the layer parameter to a protocol buffer

* 把参数写进protocol buffer

*

*/

virtual void ToProto(LayerParameter* param, bool write_diff = false);

/**

* @brief Returns the scalar loss associated with a top blob at a given index.

*/

// 给定index返回相应的scalar loss

inline Dtype loss(const int top_index) const {

return (loss_.size() > top_index) ? loss_[top_index] : Dtype(0);

}

/**

* @brief Sets the loss associated with a top blob at a given index.

*/

inline void set_loss(const int top_index, const Dtype value) {

if (loss_.size() <= top_index) {

loss_.resize(top_index + 1, Dtype(0));

}

loss_[top_index] = value;

}

/**

* @brief Returns the layer type.

* 返回层类型

*/

virtual inline const char* type() const { return ""; }

//下面几个函数主要设置bottom或者top blob的数量状态,比较简单,通常需要layer类的派生类重写,因为不同层指定的输入输出数量不同

/**

* @brief Returns the exact number of bottom blobs required by the layer,

* or -1 if no exact number is required.

*

* This method should be overridden to return a non-negative value if your

* layer expects some exact number of bottom blobs.

*

*/

virtual inline int ExactNumBottomBlobs() const { return -1; } //返回需要bottom blob的确切数目,默认不需要确切的数目,返回-1

/**

* @brief Returns the minimum number of bottom blobs required by the layer,

* or -1 if no minimum number is required.

*

* This method should be overridden to return a non-negative value if your

* layer expects some minimum number of bottom blobs.

*/

virtual inline int MinBottomBlobs() const { return -1; } //返回需要bottom blob的最小数目,默认不需要最小的数目,返回-1

/**

* @brief Returns the maximum number of bottom blobs required by the layer,

* or -1 if no maximum number is required.

*

* This method should be overridden to return a non-negative value if your

* layer expects some maximum number of bottom blobs.

*/

virtual inline int MaxBottomBlobs() const { return -1; } //返回需要bottom blob的最大数目,默认不需要最大的数目,返回-1

/**

* @brief Returns the exact number of top blobs required by the layer,

* or -1 if no exact number is required.

*

* This method should be overridden to return a non-negative value if your

* layer expects some exact number of top blobs.

*/

virtual inline int ExactNumTopBlobs() const { return -1; }

/**

* @brief Returns the minimum number of top blobs required by the layer,

* or -1 if no minimum number is required.

*

* This method should be overridden to return a non-negative value if your

* layer expects some minimum number of top blobs.

*/

virtual inline int MinTopBlobs() const { return -1; }

/**

* @brief Returns the maximum number of top blobs required by the layer,

* or -1 if no maximum number is required.

*

* This method should be overridden to return a non-negative value if your

* layer expects some maximum number of top blobs.

*/

virtual inline int MaxTopBlobs() const { return -1; }

/**

* @brief Returns true if the layer requires an equal number of bottom and

* top blobs.

*

* This method should be overridden to return true if your layer expects an

* equal number of bottom and top blobs.

*/

virtual inline bool EqualNumBottomTopBlobs() const { return false; } //如果layer需要相同数量的底部和顶部斑点,则返回true

/**

* @brief Return whether "anonymous" top blobs are created automatically

* by the layer.

*

* If this method returns true, Net::Init will create enough "anonymous" top

* blobs to fulfill the requirement specified by ExactNumTopBlobs() or

* MinTopBlobs().

*/

virtual inline bool AutoTopBlobs() const { return false; } //匿名?

/**

* @brief Return whether to allow force_backward for a given bottom blob

* index.

*

* If AllowForceBackward(i) == false, we will ignore the force_backward

* setting and backpropagate to blob i only if it needs gradient information

* (as is done when force_backward == false).

*/

virtual inline bool AllowForceBackward(const int bottom_index) const {

return true;

} //用来设置是否强制梯度返回,因为有些层不需要梯度信息

/**

* @brief Specifies whether the layer should compute gradients w.r.t. a

* parameter at a particular index given by param_id.

*

* You can safely ignore false values and always compute gradients

* for all parameters, but possibly with wasteful computation.

*/

inline bool param_propagate_down(const int param_id) {

return (param_propagate_down_.size() > param_id) ?

param_propagate_down_[param_id] : false;

} //指定图层是否应该计算特定索引处的参数

/**

* @brief Sets whether the layer should compute gradients w.r.t. a

* parameter at a particular index given by param_id.

* 设置是否对某个学习参数blob计算梯度

*/

inline void set_param_propagate_down(const int param_id, const bool value) {

if (param_propagate_down_.size() <= param_id) {

param_propagate_down_.resize(param_id + 1, true);

}

param_propagate_down_[param_id] = value;

}

protected:

/** The protobuf that stores the layer parameters */

//protobuf文件中存储的layer参数,从protocal buffers格式的网络结构说明文件中读取

//protected类成员,构造函数中初始化

LayerParameter layer_param_;

/** The phase: TRAIN or TEST */

//层状态,参与网络的训练还是测试

Phase phase_;

/** The vector that stores the learnable parameters as a set of blobs. */

// 可学习参数层权值和偏置参数,使用向量是因为权值参数和偏置是分开保存在两个blob中的

// 在基类layer中初始化(只是在描述文件定义了的情况下)

vector<shared_ptr<Blob<Dtype> > > blobs_;

/** Vector indicating whether to compute the diff of each param blob. */

// 标志每个可学习参数blob是否需要计算反向传递的梯度值

vector<bool> param_propagate_down_;

/** The vector that indicates whether each top blob has a non-zero weight in

* the objective function.

*/

// 非LossLayer为零,LossLayer中表示每个top blob计算的loss的权重

vector<Dtype> loss_;

/** @brief Using the CPU device, compute the layer output.

* 纯虚函数,子类必须实现,使用cpu经行前向计算

*/

virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) = 0;

/**

* @brief Using the GPU device, compute the layer output.

* Fall back to Forward_cpu() if unavailable.

*/

/* void函数返回void函数

* 为什么这么设置,是为了模板的统一性

* template<class T>

* T default_value()

* {

return T();

* }

* 其中T可以为void

*/

virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

// LOG(WARNING) << "Using CPU code as backup.";

return Forward_cpu(bottom, top);

}

/**

* @brief Using the CPU device, compute the gradients for any parameters and

* for the bottom blobs if propagate_down is true.

* 纯虚函数,派生类必须实现

*/

virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down,

const vector<Blob<Dtype>*>& bottom) = 0;

/**

* @brief Using the GPU device, compute the gradients for any parameters and

* for the bottom blobs if propagate_down is true.

* Fall back to Backward_cpu() if unavailable.

*/

virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down,

const vector<Blob<Dtype>*>& bottom) {

// LOG(WARNING) << "Using CPU code as backup.";

Backward_cpu(top, propagate_down, bottom);

}

/**

* Called by the parent Layer's SetUp to check that the number of bottom

* and top Blobs provided as input match the expected numbers specified by

* the {ExactNum,Min,Max}{Bottom,Top}Blobs() functions.

*/

// 检查输出输出的blobs的个数是否在给定范围内

virtual void CheckBlobCounts(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

if (ExactNumBottomBlobs() >= 0) {

CHECK_EQ(ExactNumBottomBlobs(), bottom.size())

<< type() << " Layer takes " << ExactNumBottomBlobs()

<< " bottom blob(s) as input.";

}

if (MinBottomBlobs() >= 0) {

CHECK_LE(MinBottomBlobs(), bottom.size())

<< type() << " Layer takes at least " << MinBottomBlobs()

<< " bottom blob(s) as input.";

}

if (MaxBottomBlobs() >= 0) {

CHECK_GE(MaxBottomBlobs(), bottom.size())

<< type() << " Layer takes at most " << MaxBottomBlobs()

<< " bottom blob(s) as input.";

}

if (ExactNumTopBlobs() >= 0) {

CHECK_EQ(ExactNumTopBlobs(), top.size())

<< type() << " Layer produces " << ExactNumTopBlobs()

<< " top blob(s) as output.";

}

if (MinTopBlobs() >= 0) {

CHECK_LE(MinTopBlobs(), top.size())

<< type() << " Layer produces at least " << MinTopBlobs()

<< " top blob(s) as output.";

}

if (MaxTopBlobs() >= 0) {

CHECK_GE(MaxTopBlobs(), top.size())

<< type() << " Layer produces at most " << MaxTopBlobs()

<< " top blob(s) as output.";

}

if (EqualNumBottomTopBlobs()) {

CHECK_EQ(bottom.size(), top.size())

<< type() << " Layer produces one top blob as output for each "

<< "bottom blob input.";

}

}

/**

* Called by SetUp to initialize the weights associated with any top blobs in

* the loss function. Store non-zero loss weights in the diff blob.

*/

inline void SetLossWeights(const vector<Blob<Dtype>*>& top) { //设置blob对每个非零的loss和weight,初始化top的weights,并且存储非零的loss_weights在diff中

const int num_loss_weights = layer_param_.loss_weight_size();

if (num_loss_weights) {

CHECK_EQ(top.size(), num_loss_weights) << "loss_weight must be "

"unspecified or specified once per top blob.";

for (int top_id = 0; top_id < top.size(); ++top_id) {

const Dtype loss_weight = layer_param_.loss_weight(top_id);

if (loss_weight == Dtype(0)) { continue; }

this->set_loss(top_id, loss_weight); //设置top_loss值

const int count = top[top_id]->count();

Dtype* loss_multiplier = top[top_id]->mutable_cpu_diff();

caffe_set(count, loss_weight, loss_multiplier); //对cpu_diff()进行初始化

}

}

}

private:

/** Whether this layer is actually shared by other nets*/

bool is_shared_;

/** The mutex for sequential forward if this layer is shared

* 类型为 boost::mutex 的 mutex 全局互斥对象

*/

// 若该layer被shared,则需要这个mutex序列保持forward过程的正常运行

shared_ptr<boost::mutex> forward_mutex_;

/** Initialize forward_mutex_ */

void InitMutex();

/** Lock forward_mutex_ if this layer is shared */

void Lock();

/** Unlock forward_mutex_ if this layer is shared */

void Unlock();

DISABLE_COPY_AND_ASSIGN(Layer);

}; // class Layer

// Forward and backward wrappers. You should implement the cpu and

// gpu specific implementations instead, and should not change these

// functions.

// 前向传播和反向传播接口。 每个Layer的派生类都应该实现Forward_cpu()

template <typename Dtype>

inline Dtype Layer<Dtype>::Forward(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

// Lock during forward to ensure sequential forward

Lock();

Dtype loss = 0;

Reshape(bottom, top);

switch (Caffe::mode()) {

case Caffe::CPU:

Forward_cpu(bottom, top);

// 计算loss

if (!this->loss(top_id)) { continue; }

const int count = top[top_id]->count();

const Dtype* data = top[top_id]->cpu_data();

const Dtype* loss_weights = top[top_id]->cpu_diff();

loss += caffe_cpu_dot(count, data, loss_weights);

} for (int top_id = 0; top_id < top.size(); ++top_id) {

break;

case Caffe::GPU:

Forward_gpu(bottom, top);

#ifndef CPU_ONLY

for (int top_id = 0; top_id < top.size(); ++top_id) {

if (!this->loss(top_id)) { continue; }

const int count = top[top_id]->count();

const Dtype* data = top[top_id]->gpu_data();

const Dtype* loss_weights = top[top_id]->gpu_diff();

Dtype blob_loss = 0;

caffe_gpu_dot(count, data, loss_weights, &blob_loss);

loss += blob_loss;

}

#endif

break;

default:

LOG(FATAL) << "Unknown caffe mode.";

}

Unlock();

return loss;

}

template <typename Dtype>

inline void Layer<Dtype>::Backward(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down,

const vector<Blob<Dtype>*>& bottom) {

switch (Caffe::mode()) {

case Caffe::CPU:

Backward_cpu(top, propagate_down, bottom);

break;

case Caffe::GPU:

Backward_gpu(top, propagate_down, bottom);

break;

default:

LOG(FATAL) << "Unknown caffe mode.";

}

}

// Serialize LayerParameter to protocol buffer

//Layer的序列化函数,将layer的层说明参数layer_param_,

//层权值和偏置参数blobs_复制到LayerParameter对象,便于写到磁盘

template <typename Dtype>

void Layer<Dtype>::ToProto(LayerParameter* param, bool write_diff) {

param->Clear();

param->CopyFrom(layer_param_);

param->clear_blobs();

// 复制层权值和偏置参数blobs_

for (int i = 0; i < blobs_.size(); ++i) {

blobs_[i]->ToProto(param->add_blobs(), write_diff);

}

}

} // namespace caffe

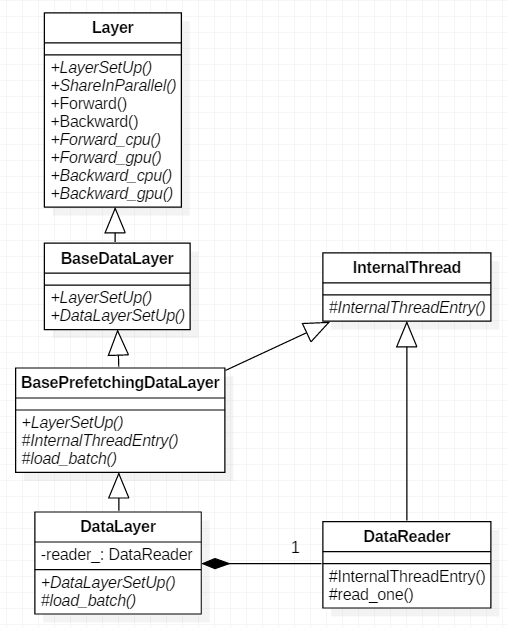

#endif // CAFFE_LAYER_H_Data_layer 派生类

- BaseDataLayer:数据层的基类,继承自通用的类Layer

- Batch:实际上就是一个data_和label_类标

- BasePrefetchingDataLayer:是预取层的基类,继承自BaseDataLayer和InternalThread,包含能够读取一批数据的能力

- DataLayer:继承BasePrefetchingDataLayer基类,使用

DataReader来进行数据共享,从而实现并行化 - DummyDataLayer:该类继承Layer基类,通过Filler产生数据

- HDF5DataLayer:从HDF5中读取,继承自Layer

- ImageDataLayer:从图像文件中读取数据,继承自BaseDataLayer

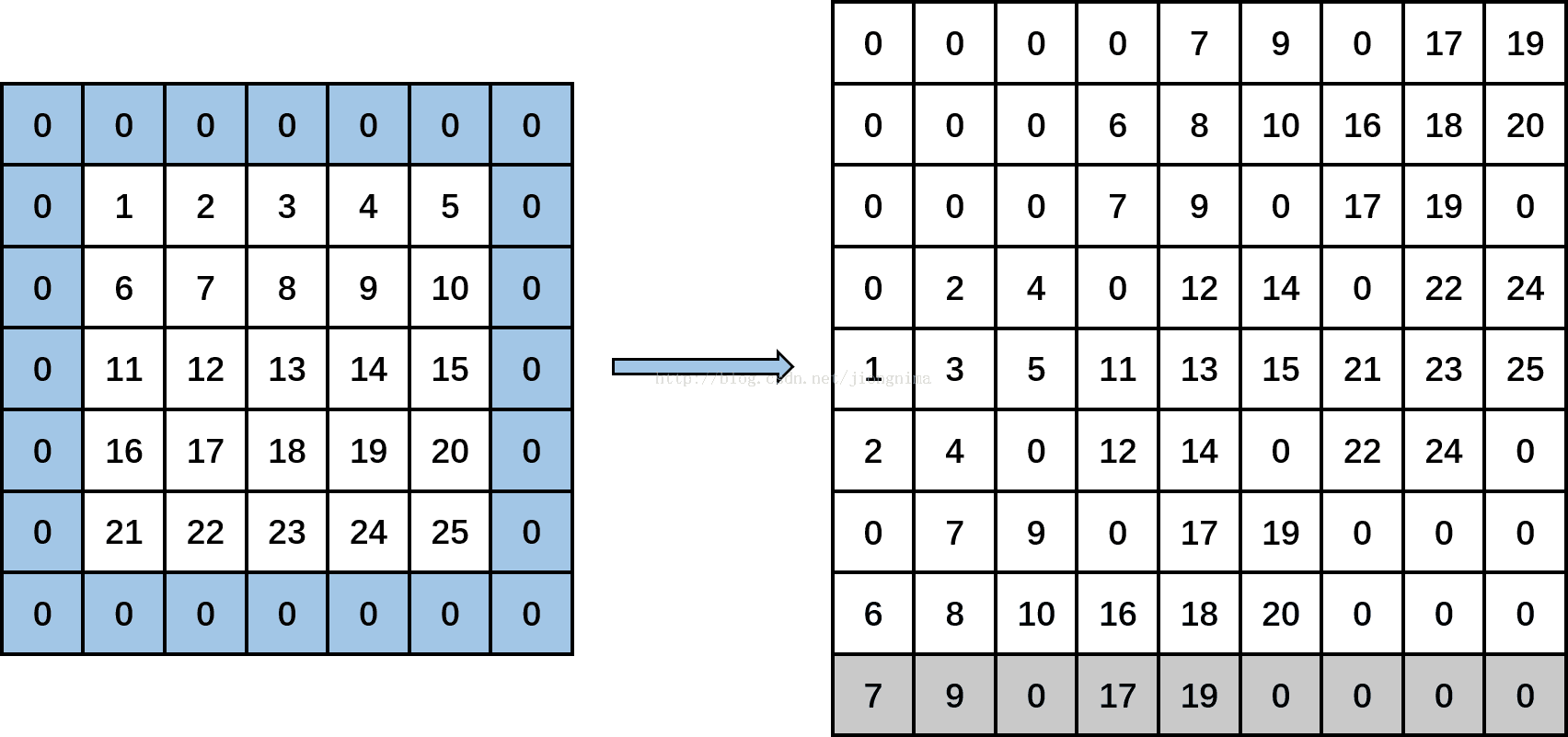

Data_transformer.hpp

定义了类DataTransformer,这个类执行一些预处理操作,比如缩放crop_size\镜像mirror\减去均值mean_value mean_file\尺度变换scale.里面有5个常用的transform函数,其中所有函数的第二个部分是想用的,都是一个目标blob.而输入根据输入的情况有所选择,可以是blob,耶可以是opencv的mat结构,或者proto中定义的datum结构.

void Transform(const Datum& datum, Blob<Dtype>* transformed_blob);

void Transform(const vector<Datum> & datum_vector, Blob<Dtype>* transformed_blob);

void Transform(const vector<cv::Mat> & mat_vector, Blob<Dtype>* transformed_blob);

void Transform(const cv::Mat& cv_img, Blob<Dtype>* transformed_blob);

void Transform(Blob<Dtype>* input_blob, Blob<Dtype>* transformed_blob);proto文件中,对应该类构造函数需要传入的一些变形参数.

message TransformationParameter {

optional float scale = 1 [default = 1];

optional bool mirror = 2 [default = false];

optional uint32 crop_size = 3 [default = 0];

optional string mean_file = 4;

repeated float mean_value = 5;

optional bool force_color = 6 [default = false];

optional bool force_gray = 7 [default = false];

} void Transform(Blob<Dtype>* input_blob, Blob<Dtype>* transformed_blob);其中一个核心函数,transfrom.

emplate<typename Dtype>

void DataTransformer<Dtype>::Transform(Blob<Dtype>* input_blob,

Blob<Dtype>* transformed_blob) {

const int crop_size = param_.crop_size(); //获得crop_size

const int input_num = input_blob->num(); //获取输入数据的四维信息

const int input_channels = input_blob->channels();

const int input_height = input_blob->height();

const int input_width = input_blob->width();

if (transformed_blob->count() == 0) {

// Initialize transformed_blob with the right shape.使用正确的形状初始化blob

if (crop_size) {

transformed_blob->Reshape(input_num, input_channels,

crop_size, crop_size);

} else {

transformed_blob->Reshape(input_num, input_channels,

input_height, input_width);

}

}

const int num = transformed_blob->num();

const int channels = transformed_blob->channels();

const int height = transformed_blob->height();

const int width = transformed_blob->width();

const int size = transformed_blob->count();

CHECK_LE(input_num, num);

CHECK_EQ(input_channels, channels);

CHECK_GE(input_height, height);

CHECK_GE(input_width, width);

const Dtype scale = param_.scale();

const bool do_mirror = param_.mirror() && Rand(2);

const bool has_mean_file = param_.has_mean_file();

const bool has_mean_values = mean_values_.size() > 0;

int h_off = 0;

int w_off = 0;

if (crop_size) {

CHECK_EQ(crop_size, height);

CHECK_EQ(crop_size, width);

// We only do random crop when we do training.

if (phase_ == TRAIN) {

h_off = Rand(input_height - crop_size + 1);

w_off = Rand(input_width - crop_size + 1);

} else {

h_off = (input_height - crop_size) / 2;

w_off = (input_width - crop_size) / 2;

}

} else {

CHECK_EQ(input_height, height);

CHECK_EQ(input_width, width);

}

// 如果我们输入的图片尺寸大于crop_size,那么图片会被裁剪。当`phase`模式为`TRAIN`时,裁剪是随机进行裁剪,而当为`TEST`模式时,其裁剪方式则只是裁剪图像的中间区域。

Dtype* input_data = input_blob->mutable_cpu_data();

if (has_mean_file) {

CHECK_EQ(input_channels, data_mean_.channels());

CHECK_EQ(input_height, data_mean_.height());

CHECK_EQ(input_width, data_mean_.width());

for (int n = 0; n < input_num; ++n) {

int offset = input_blob->offset(n);

caffe_sub(data_mean_.count(), input_data + offset,

data_mean_.cpu_data(), input_data + offset);

}

}

// 如果定义了mean_file,则在输入数据blob中减去对应的均值

if (has_mean_values) {

CHECK(mean_values_.size() == 1 || mean_values_.size() == input_channels) <<

"Specify either 1 mean_value or as many as channels: " << input_channels;

if (mean_values_.size() == 1) {

caffe_add_scalar(input_blob->count(), -(mean_values_[0]), input_data);

} else {

for (int n = 0; n < input_num; ++n) {

for (int c = 0; c < input_channels; ++c) {

int offset = input_blob->offset(n, c);

caffe_add_scalar(input_height * input_width, -(mean_values_[c]),

input_data + offset);

}

}

}

}

// 如果定义了mean_value,则在输入数据blob中减去对应的均值,对应于三个通道RGB

Dtype* transformed_data = transformed_blob->mutable_cpu_data();

for (int n = 0; n < input_num; ++n) {

int top_index_n = n * channels;

int data_index_n = n * channels;

for (int c = 0; c < channels; ++c) {

int top_index_c = (top_index_n + c) * height;

int data_index_c = (data_index_n + c) * input_height + h_off;

for (int h = 0; h < height; ++h) {

int top_index_h = (top_index_c + h) * width;

int data_index_h = (data_index_c + h) * input_width + w_off;

if (do_mirror) {

int top_index_w = top_index_h + width - 1;

for (int w = 0; w < width; ++w) {

transformed_data[top_index_w-w] = input_data[data_index_h + w];

}

} else {

for (int w = 0; w < width; ++w) {

transformed_data[top_index_h + w] = input_data[data_index_h + w];

}

}

}

}

}

// 判断是否做镜像变换,得到最终的transformed_data.由于存储的地址,因此不需要返回数据.

if (scale != Dtype(1)) {

DLOG(INFO) << "Scale: " << scale;

caffe_scal(size, scale, transformed_data);

}

}

// 判断是否做尺度变换scale,得到最终的transformed_datadata_reader.hpp和data_reader.cpp

仅仅把数据从DB中读取出来,这部分会给每一个读入的数据源创建一个独立的线程,专门负责这个数据源的读入工作.DataReader线程将读入的数据放入full中,而下面的BasePrefetchingDataLayer的线程将full中的内容取走.Caffe使用BlockingQueue作为生产者和消费者之间同步的结构.

每一次DataReader将free中已经被消费过的对象取出,填上新的数据,然后将其塞入full中.每一次BlockingQueue将full中的对象取出并消费,然后将其塞入free中.

data_reader.hpp

class::Body

#ifndef CAFFE_DATA_READER_HPP_

#define CAFFE_DATA_READER_HPP_

#include <map>

#include <string>

#include <vector>

#include "caffe/common.hpp"

#include "caffe/internal_thread.hpp"

#include "caffe/util/blocking_queue.hpp"

#include "caffe/util/db.hpp"

namespace caffe {

/**

* @brief Reads data from a source to queues available to data layers.

* A single reading thread is created per source, even if multiple solvers

* are running in parallel, e.g. for multi-GPU training. This makes sure

* databases are read sequentially, and that each solver accesses a different

* subset of the database. Data is distributed to solvers in a round-robin

* way to keep parallel training deterministic.

* 从共享的资源读取数据然后排队输入到数据层,每个资源创建单个线程,即便是使用多个 GPU

* 在并行任务中求解。这就保证对于频繁读取数据库,并且每个求解的线程使用的子数据是不同。

* 数据成功设计就是这样使在求解时数据保持一种循环地并行训练。

*/

class DataReader {

public:

explicit DataReader(const LayerParameter& param); //构造函数

~DataReader(); //析构函数

inline BlockingQueue<Datum*>& free() const {

return queue_pair_->free_;

}

inline BlockingQueue<Datum*>& full() const {

return queue_pair_->full_;

}

protected:

// Queue pairs are shared between a body and its readers

class QueuePair { //线程处理

public:

explicit QueuePair(int size);

~QueuePair();

BlockingQueue<Datum*> free_; //定义阻塞队列free_

BlockingQueue<Datum*> full_; //定义阻塞队列full_

DISABLE_COPY_AND_ASSIGN(QueuePair);

};

// A single body is created per source

class Body : public InternalThread { //继承InternalThread类

public:

explicit Body(const LayerParameter& param); //构造函数

virtual ~Body(); //析构函数

protected:

void InternalThreadEntry(); //重写了InternalThread 内部的 InternalThreadEntry 函数,此外还添加了 read_one 函数

void read_one(db::Cursor* cursor, QueuePair* qp);

const LayerParameter param_;

BlockingQueue<shared_ptr<QueuePair> > new_queue_pairs_;

friend class DataReader; //DataReader的友元

DISABLE_COPY_AND_ASSIGN(Body);

};

// A source is uniquely identified by its layer name + path, in case

// the same database is read from two different locations in the net.

// 数据的唯一标识:层名称+路径,防止不同位置读取相同的数据库

static inline string source_key(const LayerParameter& param) {

return param.name() + ":" + param.data_param().source(); //data_patam()参数.source()代表源地址

}

const shared_ptr<QueuePair> queue_pair_;

shared_ptr<Body> body_;

static map<const string, boost::weak_ptr<DataReader::Body> > bodies_;

DISABLE_COPY_AND_ASSIGN(DataReader);

};

} // namespace caffe

#endif // CAFFE_DATA_READER_HPP_data_reader.cpp