你要的答案或许都在这里:小鹏的博客目录

MachineLP的Github(欢迎follow):https://github.com/MachineLP

人脸检测:cascade cnn,mtcnn,都可以通过下面代码复现。但是下面的实现是比较low的,后面更新FCN的方法。

注意mtcnn的标签加了回归框,训练时候的输出层要作修改:(回归框的作用还是很大的)

# compute bbox reg label,其中x1,x2,y1,y2为真实的人脸坐标,x_left,x_right,y_top,y_bottom,width,height为预测的人脸坐标,

# 如果是在准备人脸和非人脸样本的时候,x_left,x_right,y_top,y_bottom,width,height就是你的滑动窗与真实人脸的IOU>0.6(根据你的定义)的滑动窗坐标。

offset_x1 = (x1 - x_left) / float(width)

offset_y1 = (y1 - y_top) / float(height)

offset_x2 = (x2 - x_right) / float(width)

offset_y2 = (y2 - y_bottom ) / float(height)

tensorflow:12-net训练

2016年9月份的代码,比较乱 >.<,仅供参考,需要的话自己阅读整理吧。

>.<,仅供参考,需要的话自己阅读整理吧。

train_net_12.py : face_AFLW文件夹下包含有人脸和非人脸两个文件夹。

import tensorflow as tf

import cv2

import os

import csv

from pandas import read_csv

import random

import numpy as np

import utils

filename = '/Users/liupeng/Desktop/anaconda/Dlib/face_AFLW'

# 该本分可以保存到txt文件中,可以节省加载时间,另外可以通过判断文件名,给人脸和非人脸加标签。

text_data = []

label = 0

for filename1 in os.listdir(filename):

#print (filename1)

label = label + 1

if (filename1[0] != '.'):

filename1 = filename + '/' + filename1

for filename2 in os.listdir(filename1):

#print (filename2)

if (filename2[0] != '.' ):

#print (filename2)

filename2 = filename1 + '/' + filename2

image = cv2.imread(filename2)

if image is None:

continue

text_data.append(filename2 + ' ' + str(label-2))

text_data = [x.split(' ') for x in text_data]

random.shuffle(text_data)

train_image = []

train_label = []

for i in range(len(text_data)):

train_image.append(text_data[i][0])

train_label.append(text_data[i][1])

#print (train_image)

print (train_label)

batch_size = 128

IMAGE_SIZE = 12

def get_next_batch(pointer):

batch_x = np.zeros([batch_size, IMAGE_SIZE, IMAGE_SIZE, 3])

batch_y = np.zeros([batch_size, 2])

# images = train_image[pointer*batch_size : (pointer+1)*batch_size]

# label = train_label[pointer*batch_size : (pointer+1)*batch_size]

for i in range(batch_size):

image = cv2.imread(train_image[i+pointer*batch_size])

image = cv2.resize(image, (IMAGE_SIZE, IMAGE_SIZE))

image = (image - 127.5)*0.0078125

'''m = image.mean()

s = image.std()

min_s = 1.0/(np.sqrt(image.shape[0]*image.shape[1]*image.shape[2]))

std = max(min_s, s)

image = (image-m)/std'''

batch_x[i,:] = image.astype('float32') #/ 255.0

# print (batch_x[i])

if train_label[i+pointer*batch_size] == '0':

batch_y[i,0] = 1

else:

batch_y[i,1] = 1

# print (train_image[i+pointer*batch_size],batch_y[i])

return batch_x, batch_y

# 网络可以加深一点。改成 3 -> 16 3*3(SAME) pooling -> 32 3*3(SAME) pooling -> 32 3*3(VALID) -> 2

def fcn_12_detect(threshold, dropout=False, activation=tf.nn.relu):

imgs = tf.placeholder(tf.float32, [None, IMAGE_SIZE, IMAGE_SIZE, 3])

labels = tf.placeholder(tf.float32, [None, 2])

keep_prob = tf.placeholder(tf.float32, name='keep_prob')

with tf.variable_scope('net_12'):

conv1,_ = utils.conv2d(x=imgs, n_output=16, k_w=3, k_h=3, d_w=1, d_h=1, name="conv1")

conv1 = activation(conv1)

pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding="SAME", name="pool1")

ip1,W1 = utils.conv2d(x=pool1, n_output=16, k_w=6, k_h=6, d_w=1, d_h=1, padding="VALID", name="ip1")

ip1 = activation(ip1)

if dropout:

ip1 = tf.nn.dropout(ip1, keep_prob)

ip2,W2 = utils.conv2d(x=ip1, n_output=2, k_w=1, k_h=1, d_w=1, d_h=1, name="ip2")

pred = tf.nn.sigmoid(utils.flatten(ip2))

target = utils.flatten(labels)

regularizer = 8e-3 * (tf.nn.l2_loss(W1)+100*tf.nn.l2_loss(W2))

loss = tf.reduce_mean(tf.div(tf.add(-tf.reduce_sum(target * tf.log(pred + 1e-9),1), -tf.reduce_sum((1-target) * tf.log(1-pred + 1e-9),1)),2)) + regularizer

cost = tf.reduce_mean(loss)

predict = pred

max_idx_p = tf.argmax(predict, 1)

max_idx_l = tf.argmax(target, 1)

correct_pred = tf.equal(max_idx_p, max_idx_l)

acc = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

thresholding_12 = tf.cast(tf.greater(pred, threshold), "float")

recall_12 = tf.reduce_sum(tf.cast(tf.logical_and(tf.equal(thresholding_12, tf.constant([1.0])), tf.equal(target, tf.constant([1.0]))), "float")) / tf.reduce_sum(target)

'''

correct_prediction = tf.equal(tf.cast(tf.greater(pred, threshold), tf.int32), tf.cast(target, tf.int32))

acc = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))'''

return {'imgs': imgs, 'labels': labels, 'keep_prob': keep_prob,

'cost': cost, 'pred': pred, 'accuracy': acc, 'features': ip1,

'recall': recall_12, 'thresholding': thresholding_12}

def train():

net_output = fcn_12_detect(0.0)

global_step = tf.Variable(0, tf.int32)

starter_learning_rate = 0.00001

learning_rate = tf.train.exponential_decay(

learning_rate=starter_learning_rate,

global_step=global_step,

decay_steps=1000,

decay_rate=1.0,

staircase=True,

name=None)

train_step = tf.train.AdamOptimizer(learning_rate).minimize(net_output['cost'], global_step=global_step)

sess = tf.Session()

saver = tf.train.Saver(tf.trainable_variables())

# import pdb; pdb.set_trace()

sess.run(tf.initialize_all_variables())

saver.restore(sess, 'model/model_net_12-123100')

for j in range(2000):

for i in range(700):

imgs, labels = get_next_batch(i)

# labels = labels.reshape((labels.shape[0]))

if i%300==0 and i!=0:

saver.save(sess, 'model/model_net_12', global_step=global_step, write_meta_graph=False)

if i%1==0:

img, label = get_next_batch(700+i%50)

cost, accuracy, recall, lr, pre = sess.run(

[net_output['cost'], net_output['accuracy'], net_output['recall'], learning_rate, net_output['pred']],

feed_dict={net_output['imgs']: img, net_output['labels']: label})

print("Step %d, cost: %f, acc: %f, recall: %f, lr: %f"%(i, cost, accuracy, recall, lr))

print (pre[0], label[0])

print (pre[1], label[1])

print (pre[2], label[2])

print (pre[3], label[3])

print (pre[4], label[4])

# print("target: ", target)

# print("pred: ", pred)

# train

sess.run(train_step, feed_dict={net_output['imgs']: imgs, net_output['labels']: labels})

sess.close()

def test():

image = cv2.imread('images/8.jpg')

image = cv2.resize(image, (IMAGE_SIZE, IMAGE_SIZE))

m = image.mean()

s = image.std()

min_s = 1.0/(np.sqrt(image.shape[0]*image.shape[1]*image.shape[2]))

std = max(min_s, s)

image = (image-m)/std

image = image.astype('float32') #/ 255

net_12 = fcn_12_detect(0.0)

saver = tf.train.Saver()

sess = tf.Session()

# saver.restore(sess, tf.train.latest_checkpoint('/Users/liupeng/Desktop/anaconda/i_code', 'checkpoint'))

sess.run(tf.initialize_all_variables())

print ('start restore model')

saver.restore(sess, 'model/model_net_12-71400')

print ('ok')

# saver.restore(sess, tf.train.latest_checkpoint('.'))

# predict = tf.argmax(tf.reshape(output, [-1, MAX_CAPTCHA, CHAR_SET_LEN]), 2)

predict = sess.run(net_12['pred'], feed_dict={net_12['imgs']: [image]})

print ("predict:", predict)

return predict

if __name__ == '__main__':

train()

# test()import tensorflow as tf

def conv2d(x, n_output,

k_h=5, k_w=5, d_h=2, d_w=2,

padding='SAME', name='conv2d', reuse=None):

"""Helper for creating a 2d convolution operation.

Parameters

----------

x : tf.Tensor

Input tensor to convolve.

n_output : int

Number of filters.

k_h : int, optional

Kernel height

k_w : int, optional

Kernel width

d_h : int, optional

Height stride

d_w : int, optional

Width stride

padding : str, optional

Padding type: "SAME" or "VALID"

name : str, optional

Variable scope

Returns

-------

op : tf.Tensor

Output of convolution

"""

with tf.variable_scope(name or 'conv2d', reuse=reuse):

W = tf.get_variable(

name='W',

shape=[k_h, k_w, x.get_shape()[-1], n_output],

initializer=tf.contrib.layers.xavier_initializer_conv2d())

conv = tf.nn.conv2d(

name='conv',

input=x,

filter=W,

strides=[1, d_h, d_w, 1],

padding=padding)

b = tf.get_variable(

name='b',

shape=[n_output],

initializer=tf.constant_initializer(0.0))

h = tf.nn.bias_add(

name='h',

value=conv,

bias=b)

return h, W

def linear(x, n_output, name=None, activation=None, reuse=None):

"""Fully connected layer.

Parameters

----------

x : tf.Tensor

Input tensor to connect

n_output : int

Number of output neurons

name : None, optional

Scope to apply

Returns

-------

h, W : tf.Tensor, tf.Tensor

Output of fully connected layer and the weight matrix

"""

if len(x.get_shape()) != 2:

x = flatten(x, reuse=reuse)

n_input = x.get_shape().as_list()[1]

with tf.variable_scope(name or "fc", reuse=reuse):

W = tf.get_variable(

name='W',

shape=[n_input, n_output],

dtype=tf.float32,

initializer=tf.tf.contrib.layers.xavier_initializer())

b = tf.get_variable(

name='b',

shape=[n_output],

dtype=tf.float32,

initializer=tf.constant_initializer(0.0))

h = tf.nn.bias_add(

name='h',

value=tf.matmul(x, W),

bias=b)

if activation:

h = activation(h)

return h, W

def flatten(x, name=None, reuse=None):

"""Flatten Tensor to 2-dimensions.

Parameters

----------

x : tf.Tensor

Input tensor to flatten.

name : None, optional

Variable scope for flatten operations

Returns

-------

flattened : tf.Tensor

Flattened tensor.

"""

with tf.variable_scope('flatten'):

dims = x.get_shape().as_list()

if len(dims) == 4:

flattened = tf.reshape(

x,

shape=[-1, dims[1] * dims[2] * dims[3]])

elif len(dims) == 2 or len(dims) == 1:

flattened = x

else:

raise ValueError('Expected n dimensions of 1, 2 or 4. Found:',

len(dims))

return flattened

def lrelu(features, leak=0.2):

"""Leaky rectifier.

Parameters

----------

features : tf.Tensor

Input to apply leaky rectifier to.

leak : float, optional

Percentage of leak.

Returns

-------

op : tf.Tensor

Resulting output of applying leaky rectifier activation.

"""

f1 = 0.5 * (1 + leak)

f2 = 0.5 * (1 - leak)

return f1 * features + f2 * abs(features)下面是滑动窗人脸检测的流程:

(1)确定最小检测人脸,对原图img缩放,缩放比例为(滑动窗大小/最小人脸大小)。

(2)缩放后的图片,构建金字塔。

(3)对金字塔的每一层,通过滑动窗获取patch,对patch归一化处理,之后给训练好的人脸检测器识别,将识别为人脸的窗口位置和概率保存。

(4)将人脸窗口映射到原图img中的人脸位置,概率不变。

(5)NMS处理重叠窗口。

(6)级联的方式提高准确率。

(7)在原图画出人脸位置。

*****调节的参数有:

# 步长

stride = 2

# 最小人脸大小

F = 40

# 构建金字塔的比例

ff = 0.8

# 概率多大时判定为人脸?

p = 0.8

# nms

overlapThresh_12 = 0.7

overlapThresh_24 = 0.7

下面不是完成代码,需要自己添加训练好的model,稍作修改就可以。

import numpy as np

import tensorflow as tf

from model import fcn_12_detect

def py_nms(dets, thresh, mode="Union"):

"""

greedily select boxes with high confidence

keep boxes overlap <= thresh

rule out overlap > thresh

:param dets: [[x1, y1, x2, y2 score]]

:param thresh: retain overlap <= thresh

:return: indexes to keep

"""

if len(dets) == 0:

return []

x1 = dets[:, 0]

y1 = dets[:, 1]

x2 = dets[:, 2]

y2 = dets[:, 3]

scores = dets[:, 4]

areas = (x2 - x1 + 1) * (y2 - y1 + 1)

order = scores.argsort()[::-1]

keep = []

while order.size > 0:

i = order[0]

keep.append(i)

xx1 = np.maximum(x1[i], x1[order[1:]])

yy1 = np.maximum(y1[i], y1[order[1:]])

xx2 = np.minimum(x2[i], x2[order[1:]])

yy2 = np.minimum(y2[i], y2[order[1:]])

w = np.maximum(0.0, xx2 - xx1 + 1)

h = np.maximum(0.0, yy2 - yy1 + 1)

inter = w * h

if mode == "Union":

ovr = inter / (areas[i] + areas[order[1:]] - inter)

elif mode == "Minimum":

ovr = inter / np.minimum(areas[i], areas[order[1:]])

inds = np.where(ovr <= thresh)[0]

order = order[inds + 1]

return dets[keep]

def image_preprocess(img):

img = (img - 127.5)*0.0078125

'''m = img.mean()

s = img.std()

min_s = 1.0/(np.sqrt(img.shape[0]*img.shape[1]*img.shape[2]))

std = max(min_s, s)

img = (img-m)/std'''

return img

def slide_window(img, window_size, stride):

# 对构建的金字塔图片,滑动窗口。

# img:图片, window_size:滑动窗的大小,stride:步长。

window_list = []

w = img.shape[1]

h = img.shape[0]

if w<=window_size+stride or h<=window_size+stride:

return None

if len(img.shape)!=3:

return None

for i in range(int((w-window_size)/stride)):

for j in range(int((h-window_size)/stride)):

box = [j*stride, i*stride, j*stride+window_size, i*stride+window_size]

window_list.append(box)

return img, np.asarray(window_list)

def pyramid(image, f, window_size):

# 构建图像的金字塔,以便进行多尺度滑动窗口

# image:输入图像,f:缩放的尺度, window_size:滑动窗大小。

w = image.shape[1]

h = image.shape[0]

img_ls = []

while( w > window_size and h > window_size):

img_ls.append(image)

w = int(w * f)

h = int(h * f)

image = cv2.resize(image, (w, h))

return img_ls

def min_face(img, F, window_size, stride):

# img:输入图像,F:最小人脸大小, window_size:滑动窗,stride:滑动窗的步长。

h, w, _ = img.shape

w_re = int(float(w)*window_size/F)

h_re = int(float(h)*window_size/F)

if w_re<=window_size+stride or h_re<=window_size+stride:

print (None)

# 调整图片大小的时候注意参数,千万不要写反了

# 根据最小人脸缩放图片

img = cv2.resize(img, (w_re, h_re))

return img

if __name__ = "__main__":

image = cv2.imread('images/1.jpg')

h,w,_ = image.shape

......

# 调参的参数

IMAGE_SIZE = 12

# 步长

stride = 2

# 最小人脸大小

F = 40

# 构建金字塔的比例

ff = 0.8

# 概率多大时判定为人脸?

p_12 = 0.8

p_24 = 0.8

# nms

overlapThresh_12 = 0.7

overlapThresh_24 = 0.3

......

# 加载 model

net_12 = fcn_12_detect()

net_12_vars = [v for v in tf.trainable_variables() if v.name.startswith('net_12')]

saver_net_12 = tf.train.Saver(net_12_vars)

sess = tf.Session()

sess.run(tf.initialize_all_variables())

saver_net_12.restore(sess, 'model/12-net/model_net_12-123200')

# net_24...

......

# 需要检测的最小人脸

image_ = min_face(image, F, IMAGE_SIZE, stride)

......

# 金字塔

pyd = pyramid(np.array(image_), ff, IMAGE_SIZE)

......

# net-12

window_after_12 = []

for i, img in enumerate(pyd):

# 滑动窗口

slide_return = slide_window(img, IMAGE_SIZE, stride)

if slide_return is None:

break

img_12 = slide_return[0]

window_net_12 = slide_return[1]

w_12 = img_12.shape[1]

h_12 = img_12.shape[0]

patch_net_12 = []

for box in window_net_12:

patch = img_12[box[0]:box[2], box[1]:box[3], :]

# 做归一化处理

patch = image_preprocess(patch)

patch_net_12.append(patch)

patch_net_12 = np.array(patch_net_12)

# 预测人脸

pred_cal_12 = sess.run(net_12['pred'], feed_dict={net_12['imgs']: patch_net_12})

window_net = window_net_12

# print (pred_cal_12)

windows = []

for i, pred in enumerate(pred_cal_12):

# 概率大于0.8的判定为人脸。

s = np.where(pred[1]>p_12)[0]

if len(s)==0:

continue

#保存窗口位置和概率。

windows.append([window_net[i][0],window_net[i][1],window_net[i][2],window_net[i][3],pred[1]])

# 按照概率值 由大到小排序

windows = np.asarray(windows)

windows = py_nms(windows, overlapThresh_12, 'Union')

window_net = windows

for box in window_net:

lt_x = int(float(box[0])*w/w_12)

lt_y = int(float(box[1])*h/h_12)

rb_x = int(float(box[2])*w/w_12)

rb_y = int(float(box[3])*h/h_12)

p_box = box[4]

window_after_12.append([lt_x, lt_y, rb_x, rb_y, p_box])

# 按照概率值 由大到小排序

# window_after_12 = np.asarray(window_after_12)

# window_net = py_nms(window_after_12, overlapThresh_12, 'Union')

window_net = window_after_12

print (window_net)

# net-24

windows_24 = []

if window_net == []:

print "windows is None!"

if window_net != []:

patch_net_24 = []

img_24 = image

for box in window_net:

patch = img_24[box[0]:box[2], box[1]:box[3], :]

patch = cv2.resize(patch, (24, 24))

# 做归一化处理

patch = image_preprocess(patch)

patch_net_24.append(patch)

# 预测人脸

pred_net_24 = sess.run(net_24['pred'], feed_dict={net_24['imgs']: patch_net_24})

print (pred_net_24)

window_net = window_net

# print (pred_net_24)

for i, pred in enumerate(pred_net_24):

s = np.where(pred[1]>p_24)[0]

if len(s)==0:

continue

windows_24.append([window_net[i][0],window_net[i][1],window_net[i][2],window_net[i][3],pred[1]])

# 按照概率值 由大到小排序

windows_24 = np.asarray(windows_24)

#window_net = nms_max(windows_24, overlapThresh=0.7)

window_net = py_nms(windows_24, overlapThresh_24, 'Union')

if window_net == []:

print "windows is None!"

if window_net != []:

print(window_net.shape)

for box in window_net:

#ImageDraw.Draw(image).rectangle((box[1], box[0], box[3], box[2]), outline = "red")

cv2.rectangle(image, (int(box[1]),int(box[0])), (int(box[3]),int(box[2])), (0, 255, 0), 2)

cv2.imwrite("images/face_img.jpg", image)

cv2.imshow("face detection", image)

cv2.waitKey(10000)

cv2.destroyAllWindows()

coord.request_stop()

coord.join(threads)

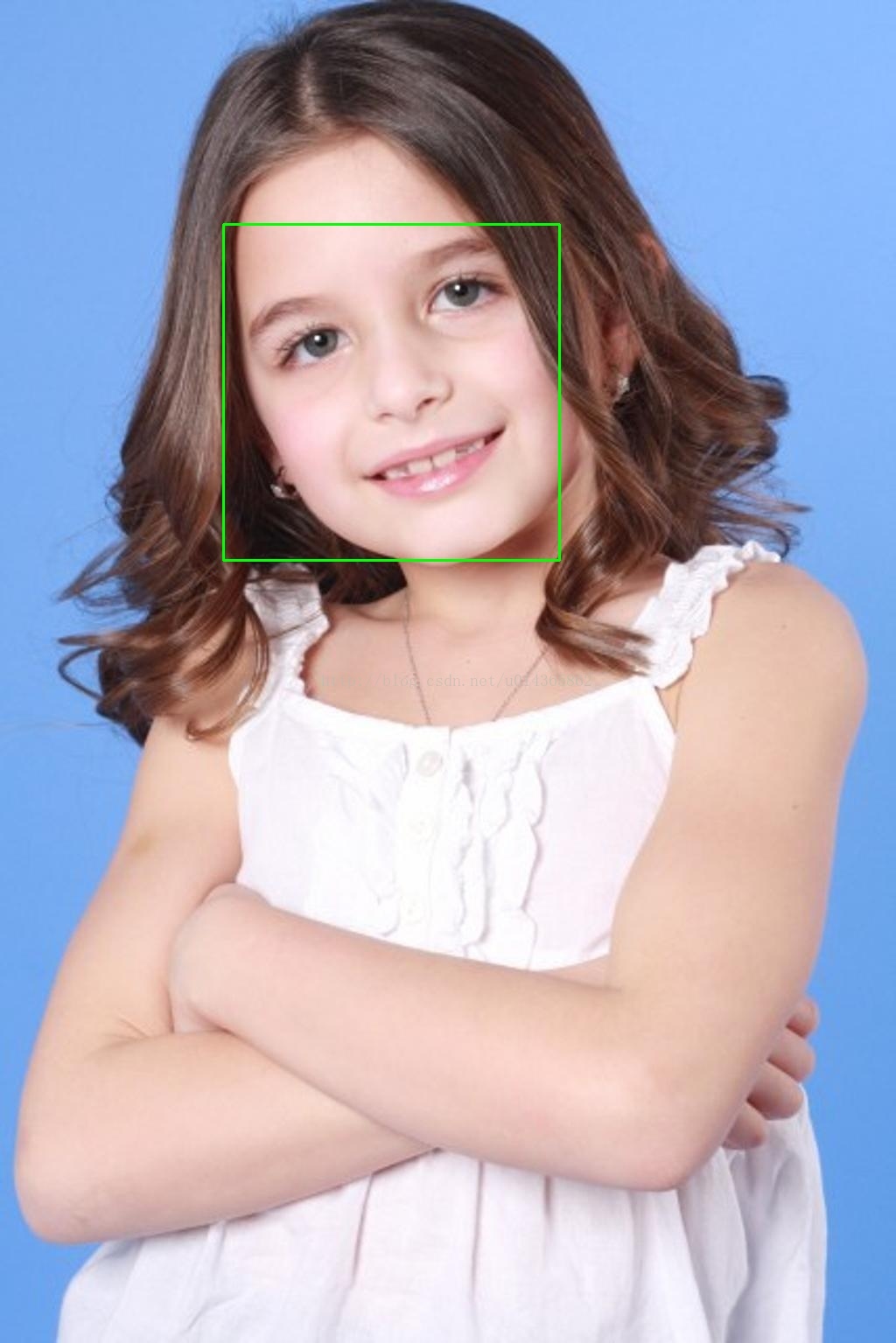

sess.close()检测结果:(下面的重叠窗口可以通过设置overlapThresh去除)

本文介绍了一种基于深度学习的人脸检测方法,包括12-net和24-net的网络结构及训练过程,并给出了从图像预处理到滑动窗口检测的具体实现细节。

本文介绍了一种基于深度学习的人脸检测方法,包括12-net和24-net的网络结构及训练过程,并给出了从图像预处理到滑动窗口检测的具体实现细节。

1333

1333

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?