Keepalived high availability

Introduction:

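Keepalived is an open-source tool that provides failover and high availability across multiple servers. It uses the VRRP protocol to provide a virtual IP (VIP) and monitors the state of servers and services. When the master server fails, Keepalived automatically moves the VIP to a backup server, so the service stays continuous and reliable.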

keepalive: a persistent (long-lived) connection, an unrelated concept

keepalived: the high-availability software discussed here

Pros and cons:

Pros:

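- High availability: Keepalived moves the VIP to a backup server to switch service over quickly, which keeps downtime short.

- Easy to use: Keepalived has a readable configuration file and command-line interface, so configuration and management are straightforward.

- Redundancy and load balancing: besides failover, Keepalived can configure Linux Virtual Server (LVS/IPVS) virtual_server entries that spread traffic across several real servers.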

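Cons:

- Single-point-of-failure risk: failover is driven only by VRRP advertisements. If they are lost while both nodes are up (for example, multicast is blocked), both nodes can claim the VIP at once (split-brain). Without a tracking script, Keepalived also does not notice when the service behind the VIP fails while keepalived itself keeps running.

- Limited monitoring: compared with dedicated monitoring tools, Keepalived's checks are basic (TCP/HTTP connection checks or user-supplied scripts), so it can only judge failures from simple state checks.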

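How it works:

Keepalived uses VRRP to provide high availability. It creates a virtual IP address (VIP) shared by a group of servers (at least two). One server is elected master and handles all requests and traffic. The others act as backups.

The master periodically sends VRRP advertisements to announce that it is the current master, and it holds the VIP. These advertisements are multicast, not broadcast. The backups receive them and wait. When a backup stops receiving them, it treats the master as failed, transitions to the MASTER state, takes over the VIP and starts serving traffic.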

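Keepalived HA pairs communicate over VRRP, so we start with VRRP:

- VRRP (Virtual Router Redundancy Protocol) was created to remove the single point of failure of static routing, that is, a single statically configured default gateway.

- VRRP uses an election mechanism to hand the routing task to one VRRP router.

- The members of the HA pair communicate over IP multicast (default multicast address 224.0.0.18).

- While running, the master sends packets and the backup receives them. When the backup stops receiving the master's packets, it starts the takeover procedure and takes over the master's resources. There can be several backups, chosen by priority, but in day-to-day Keepalived operations a single pair is the norm.

- VRRP advertisements are not encrypted; VRRPv2 only provides optional authentication. In Keepalived this is the authentication block, normally set to auth_type PASS with a plain-text password, as in this article.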
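The election rule above can be illustrated with a toy bash model. This is a sketch, not keepalived's implementation. It assumes the RFC 5798 rule: the highest priority wins, and a tie goes to the higher primary IP address. It ignores timers, the nopreempt option and the special priority 255 of an address owner. The helper names ip_to_int and vrrp_elect are made up for this example. The priorities are the ones used later in this article (100 on haproxy1, 80 on haproxy2).

```shell
#!/usr/bin/env bash
# Toy model of VRRP master election, for illustration only (not keepalived code).
# Modeled rule: highest priority wins; on a tie, the numerically higher
# primary IPv4 address wins.

# Convert a dotted-quad IPv4 address to an integer so it can be compared.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Each argument is "name:priority:ip"; prints the name of the elected master.
vrrp_elect() {
  local best_name="" best_prio=-1 best_ip=-1
  local entry name prio ip ipn
  for entry in "$@"; do
    IFS=: read -r name prio ip <<< "$entry"
    ipn=$(ip_to_int "$ip")
    if (( prio > best_prio || (prio == best_prio && ipn > best_ip) )); then
      best_name=$name best_prio=$prio best_ip=$ipn
    fi
  done
  echo "$best_name"
}

# Both nodes advertising: priority 100 beats 80.
vrrp_elect haproxy1:100:192.168.134.148 haproxy2:80:192.168.134.155   # haproxy1
# haproxy1 has stopped advertising, so only haproxy2 takes part.
vrrp_elect haproxy2:80:192.168.134.155                                # haproxy2
```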

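Workflow:

- Install and configure: install Keepalived on each server and configure it. The configuration covers the VIP, each server's role and any health-check scripts.

- VRRP communication: the servers exchange VRRP messages (multicast by default; Keepalived can also use unicast). The master sends advertisements announcing that it is the current master.

- Health checks: Keepalived periodically checks the configured services or scripts to make sure they are running.

- Failure detection: the backups keep listening for the master's advertisements. When they stop arriving, a backup treats the master as failed and takes over.

- VIP takeover: once the backup holds the VIP, it becomes the new master and serves traffic.

- Recovery: when the original master comes back, it sends VRRP advertisements again. If its priority is higher and preemption is enabled (Keepalived's default unless nopreempt is set), it reclaims the VIP and becomes master again.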
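A timer decides how quickly a backup declares the master dead. This sketch assumes Keepalived's default VRRP version 2. In that version, RFC 3768 defines Skew_Time = (256 - Priority) / 256 seconds and Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time. The hypothetical helper master_down_ms below works these numbers in milliseconds, using the advert_int and backup priority from later in this article. A backup with a higher priority gets a smaller skew. With several backups, it therefore times out first and takes over.

```shell
#!/usr/bin/env bash
# Worked example of the VRRPv2 failure-detection timer (RFC 3768):
#   Skew_Time            = (256 - Priority) / 256  seconds
#   Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time
# Computed in integer milliseconds; fractional milliseconds are truncated.
# Arguments: advert_int in whole seconds, backup priority (1-254).
master_down_ms() {
  local advert_s=$1 prio=$2
  local skew_ms=$(( (256 - prio) * 1000 / 256 ))
  echo $(( 3 * advert_s * 1000 + skew_ms ))
}

# advert_int 1 and backup priority 80, as in this article's keepalived.conf:
master_down_ms 1 80    # 3687 (3 s of missed adverts + 687 ms skew)
```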

Using Keepalived to make the HAProxy load balancers highly available

Environment:

| Server | IP address | OS version |
|---|---|---|
| haproxy1 (master) | 192.168.134.148 | CentOS 8 |
| haproxy2 (slave) | 192.168.134.155 | CentOS 8 |
| web1 | 192.168.134.151 | CentOS 8 |
| web2 | 192.168.134.154 | CentOS 8 |

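Note: the virtual IP (VIP) for this setup is 192.168.134.250. The VIP must be an unused host address in 192.168.134.0/24. 192.168.134.255 cannot be used because it is the broadcast address of that subnet, as the brd 192.168.134.255 field in the ip a output shows.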
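Whether an address can serve as a VIP comes down to simple subnet arithmetic. The host part of the address must be neither all zeros (the network address) nor all ones (the broadcast address). The hypothetical helper addr_kind below classifies an address this way and assumes a prefix between /1 and /30. In 192.168.134.0/24 the host part is the last octet, so .255 is the broadcast address.

```shell
#!/usr/bin/env bash
# Classify an IPv4 address within its subnet as "network", "broadcast" or
# "host". Only "host" addresses are usable as a VIP. Intended for /1 to /30.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Arguments: address, prefix length.
addr_kind() {
  local ip prefix hostmask hostpart
  ip=$(ip_to_int "$1")
  prefix=$2
  hostmask=$(( (1 << (32 - prefix)) - 1 ))   # e.g. 255 for a /24
  hostpart=$(( ip & hostmask ))
  if (( hostpart == 0 )); then
    echo network
  elif (( hostpart == hostmask )); then
    echo broadcast
  else
    echo host
  fi
}

addr_kind 192.168.134.255 24   # broadcast: not usable as a VIP
addr_kind 192.168.134.250 24   # host
```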

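Prerequisite for HAProxy HTTP load balancing: two real-server (RS) hosts

Prepare a test HTTP page on each backend server (web1 and web2).

# Preparation on web1

// Permanently disable the firewall and SELinux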

[root@web1 ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@web1 ~]# setenforce 0

[root@web1 ~]# vim /etc/selinux/config

SELINUX=disabled # set this line to disabled

[root@web1 ~]# reboot // reboot for the change to take effect

[root@web1 ~]# getenforce

Disabled

// Configure the yum repositories

[root@web1 ~]# rm -rf /etc/yum.repos.d/*

[root@web1 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

// Install httpd with yum, start it, and set the page content

[root@web1 ~]# yum -y install httpd

. . .

// Start httpd now and enable it at boot

[root@web1 ~]# systemctl enable --now httpd

Created symlink /etc/systemd/system/multi-user.target.wants/httpd.service → /usr/lib/systemd/system/httpd.service.

[root@web1 ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 *:80 *:*

LISTEN 0 128 [::]:22 [::]:*

[root@web1 ~]# echo "this is web1" > /var/www/html/index.html
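The ss -antl listing above is checked by eye. To script the same check, for example across several backends, a small filter like the hypothetical is_listening below can do it. It assumes the default ss -antl column layout, where the fourth field is Local Address:Port.

```shell
#!/usr/bin/env bash
# Read `ss -antl` output on stdin and succeed (exit status 0) if some LISTEN
# socket's local address (column 4) ends in ":PORT".
is_listening() {
  local port=$1
  awk -v p=":$port" '
    $1 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
    END { exit !found }
  '
}

# Typical use on web1/web2 after starting httpd:
#   ss -antl | is_listening 80 && echo "httpd is listening on port 80"
```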

# Preparation on web2

// Permanently disable the firewall and SELinux

[root@web2 ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@web2 ~]# setenforce 0

[root@web2 ~]# vim /etc/selinux/config

SELINUX=disabled # set this line to disabled

[root@web2 ~]# reboot // reboot for the change to take effect

[root@web2 ~]# getenforce

Disabled

// Configure the yum repositories

[root@web2 ~]# rm -rf /etc/yum.repos.d/*

[root@web2 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

// Install httpd with yum, start it, and set the page content

[root@web2 ~]# yum -y install httpd

. . .

// Start httpd now and enable it at boot

[root@web2 ~]# systemctl enable --now httpd

Created symlink /etc/systemd/system/multi-user.target.wants/httpd.service → /usr/lib/systemd/system/httpd.service.

[root@web2 ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 *:80 *:*

LISTEN 0 128 [::]:22 [::]:*

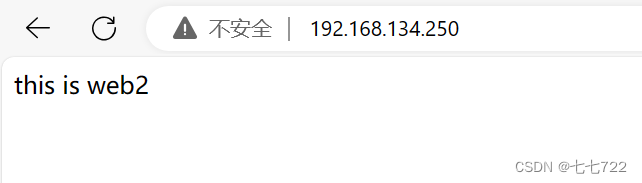

[root@web2 ~]# echo "this is web2" > /var/www/html/index.html

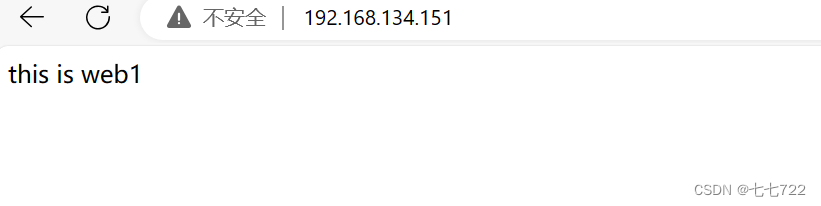

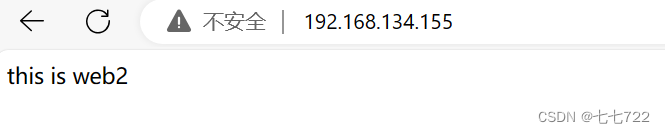

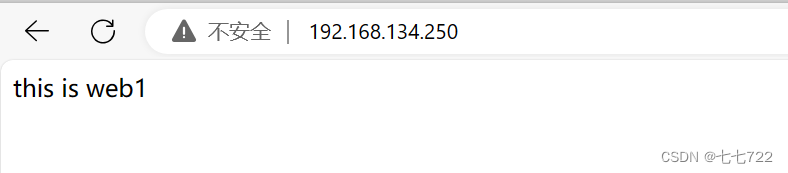

Open the web hosts' pages in a browser:

web1:

web2:

Deployment complete.

5.1. Installing keepalived

On the master (haproxy1):

// Permanently disable the firewall and SELinux

[root@haproxy1 ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@haproxy1 ~]# setenforce 0

[root@haproxy1 ~]# vim /etc/selinux/config

SELINUX=disabled

[root@haproxy1 ~]# reboot

[root@haproxy1 ~]# getenforce

Disabled

// Configure the yum repositories

[root@haproxy1 ~]# rm -rf /etc/yum.repos.d/*

[root@haproxy1 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

[root@haproxy1 ~]# yum clean all

[root@haproxy1 yum.repos.d]# yum makecache

// Install keepalived

[root@haproxy1 ~]# yum -y install epel-release vim wget gcc gcc-c++

. . .

[root@haproxy1 ~]# yum -y install keepalived

. . .

// List the files installed by the package

[root@haproxy1 ~]# rpm -ql keepalived

/etc/keepalived // configuration directory

/etc/keepalived/keepalived.conf // main configuration file

/etc/sysconfig/keepalived

/usr/bin/genhash

/usr/lib/systemd/system/keepalived.service // systemd service unit

/usr/libexec/keepalived

/usr/sbin/keepalived

. . . . .

Install keepalived on the backup (haproxy2) the same way:

// Permanently disable the firewall and SELinux

[root@haproxy2 ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@haproxy2 ~]# setenforce 0

[root@haproxy2 ~]# vim /etc/selinux/config

SELINUX=disabled

[root@haproxy2 ~]# reboot

[root@haproxy2 ~]# getenforce

Disabled

// Configure the yum repositories

[root@haproxy2 ~]# rm -rf /etc/yum.repos.d/*

[root@haproxy2 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

[root@haproxy2 ~]# yum clean all

[root@haproxy2 yum.repos.d]# yum makecache

// Install keepalived

[root@haproxy2 ~]# yum -y install epel-release vim wget gcc gcc-c++

. . .

[root@haproxy2 ~]# yum -y install keepalived

. . .

// List the files installed by the package

[root@haproxy2 ~]# rpm -ql keepalived

/etc/keepalived // configuration directory

/etc/keepalived/keepalived.conf // main configuration file

/etc/sysconfig/keepalived

/usr/bin/genhash

/usr/lib/systemd/system/keepalived.service // systemd service unit

/usr/libexec/keepalived

/usr/sbin/keepalived

. . . . .

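5.2. Installing HAProxy on both the master and the backup

Deploy HAProxy on both hosts to load-balance the two httpd servers.

Download the source package from the HAProxy website:

HAProxy - The Reliable, High Perf. TCP/HTTP Load Balancer

The steps are shown on haproxy1; repeat them identically on haproxy2.

// Install the build dependencies (zlib-devel is needed for USE_ZLIB=1) and create the haproxy user

[root@haproxy1 ~]# yum -y install make gcc pcre-devel bzip2-devel zlib-devel openssl-devel systemd-devel vim wget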

[root@haproxy1 ~]# useradd -r -M -s /sbin/nologin haproxy

// Download the HAProxy source tarball with wget

[root@haproxy1 ~]# wget https://www.haproxy.org/download/2.7/src/haproxy-2.7.10.tar.gz

--2023-10-12 14:46:05-- https://www.haproxy.org/download/2.7/src/haproxy-2.7.10.tar.gz

Resolving www.haproxy.org (www.haproxy.org)... 51.15.8.218, 2001:bc8:35ee:100::1

Connecting to www.haproxy.org (www.haproxy.org)|51.15.8.218|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4191948 (4.0M) [application/x-tar]

Saving to: ‘haproxy-2.7.10.tar.gz’

haproxy-2.7.10.tar.gz 100%[===============================================>] 4.00M 51.0KB/s in 65s

2023-10-12 14:47:12 (63.1 KB/s) - ‘haproxy-2.7.10.tar.gz’ saved [4191948/4191948]

[root@haproxy1 ~]# ls

anaconda-ks.cfg haproxy-2.7.10.tar.gz

// Extract the tarball, enter the directory and build

[root@haproxy1 ~]# tar xf haproxy-2.7.10.tar.gz

[root@haproxy1 ~]# cd haproxy-2.7.10/

[root@haproxy1 haproxy-2.7.10]# make clean

[root@haproxy1 haproxy-2.7.10]# make -j $(nproc) TARGET=linux-glibc USE_OPENSSL=1 USE_ZLIB=1 USE_PCRE=1 USE_SYSTEMD=1

// Install into the given prefix

[root@haproxy1 haproxy-2.7.10]# make install PREFIX=/usr/local/haproxy

// Put haproxy on the PATH (here by symlinking the binaries into /usr/sbin)

[root@haproxy1 haproxy-2.7.10]# ln -s /usr/local/haproxy/sbin/* /usr/sbin/

[root@haproxy1 haproxy-2.7.10]# which haproxy

/usr/sbin/haproxy

// Check the version; if it prints, the haproxy command is usable

[root@haproxy1 haproxy-2.7.10]# haproxy -v

HAProxy version 2.7.10-d796057 2023/08/09 - https://haproxy.org/

Status: stable branch - will stop receiving fixes around Q1 2024.

Known bugs: http://www.haproxy.org/bugs/bugs-2.7.10.html

Running on: Linux 4.18.0-193.el8.x86_64 #1 SMP Fri Mar 27 14:35:58 UTC 2020 x86_64

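// Set kernel parameters on each load balancer (ip_nonlocal_bind lets a process bind to an address, such as the VIP, that is not currently assigned to this host)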

[root@haproxy1 haproxy-2.7.10]# echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf

[root@haproxy1 haproxy-2.7.10]# echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

[root@haproxy1 haproxy-2.7.10]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1
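Appending with >> adds a duplicate line every time these commands are re-run. A minimal idempotent alternative is sketched below; ensure_line is a hypothetical helper. With bind 0.0.0.0:80, as in this article's haproxy.cfg, ip_nonlocal_bind is not strictly required. It matters when HAProxy binds to the VIP itself, because on the backup node the VIP is absent until failover.

```shell
#!/usr/bin/env bash
# Append LINE to FILE only if that exact line is not already present, so
# re-running the setup does not keep adding duplicate entries.
ensure_line() {
  local file=$1 line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Idempotent version of the two "echo ... >> /etc/sysctl.conf" commands above:
#   ensure_line /etc/sysctl.conf 'net.ipv4.ip_nonlocal_bind = 1'
#   ensure_line /etc/sysctl.conf 'net.ipv4.ip_forward = 1'
#   sysctl -p
```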

// Write the haproxy.service unit file

[root@haproxy1 haproxy-2.7.10]# vim /usr/lib/systemd/system/haproxy.service

[root@haproxy1 haproxy-2.7.10]# cat /usr/lib/systemd/system/haproxy.service

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q

ExecStart=/usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/run/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID

[Install]

WantedBy=multi-user.target

[root@haproxy1 haproxy-2.7.10]# systemctl daemon-reload

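// Configure logging. haproxy.cfg sends its logs to 127.0.0.1 over UDP, so rsyslog must also accept UDP syslog on port 514 (on CentOS 8, uncomment the module(load="imudp") and input(type="imudp" port="514") lines in /etc/rsyslog.conf)

[root@haproxy1 ~]# vim /etc/rsyslog.conf

[root@haproxy1 ~]# cat /etc/rsyslog.conf

# Save boot messages also to boot.log

local0.* /var/log/haproxy.log // add this line

local7.* /var/log/boot.log

// Restart the logging service

[root@haproxy1 ~]# systemctl restart rsyslog.service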

// Write the configuration file

[root@haproxy1 ~]# mkdir /etc/haproxy

[root@haproxy1 ~]# vim /etc/haproxy/haproxy.cfg

[root@haproxy1 ~]# cat /etc/haproxy/haproxy.cfg

#-------------- global settings ----------------

global

log 127.0.0.1 local0 info

#log loghost local0 info

maxconn 20480

#chroot /usr/local/haproxy

pidfile /var/run/haproxy.pid

#maxconn 4000

user haproxy

group haproxy

daemon

#---------------------------------------------------------------------

#common defaults that all the 'listen' and 'backend' sections will

#use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option dontlognull

option httpclose

option httplog

#option forwardfor

option redispatch

balance roundrobin

timeout connect 10s

timeout client 10s

timeout server 10s

timeout check 10s

maxconn 60000

retries 3

#-------------- statistics page ------------------

listen admin_stats

bind 0.0.0.0:8189

stats enable

mode http

log global

stats uri /haproxy_stats

stats realm Haproxy\ Statistics

stats auth admin:admin

#stats hide-version

stats admin if TRUE

stats refresh 30s

#--------------- web settings -----------------------

listen webcluster

bind 0.0.0.0:80

mode http

#option httpchk GET /index.html

log global

maxconn 3000

balance roundrobin

cookie SESSION_COOKIE insert indirect nocache

server web1 192.168.134.151:80 check inter 2000 fall 5

server web2 192.168.134.154:80 check inter 2000 fall 5

#server web1 192.168.179.1:80 cookie web01 check inter 2000 fall 5
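The server lines above use check inter 2000 fall 5. HAProxy checks each web server every 2000 ms and marks it DOWN after 5 consecutive failed checks. The bash sketch below is a simplified model of that rise/fall behaviour, not HAProxy's code; check_states is a hypothetical name. It assumes rise 2, HAProxy's default, because the config does not set rise.

```shell
#!/usr/bin/env bash
# Simplified model of HAProxy's documented health-check thresholds:
# a server is marked DOWN after FALL consecutive failed checks and UP again
# after RISE consecutive successful ones.
# Arguments: fall rise result... (each result is "ok" or "fail").
# Prints the server state after each check, space-separated.
check_states() {
  local fall=$1 rise=$2
  shift 2
  local state=UP fails=0 oks=0 r
  local -a out=()
  for r in "$@"; do
    if [[ $r == ok ]]; then
      fails=0
      oks=$(( oks + 1 ))
      if [[ $state == DOWN ]] && (( oks >= rise )); then state=UP; fi
    else
      oks=0
      fails=$(( fails + 1 ))
      if [[ $state == UP ]] && (( fails >= fall )); then state=DOWN; fi
    fi
    out+=("$state")
  done
  echo "${out[*]}"
}

# fall 5 from the server lines, rise 2 assumed; with inter 2000 a dead web
# server leaves the rotation after roughly 5 x 2 s = 10 s.
check_states 5 2 fail fail fail fail fail ok ok   # UP UP UP UP DOWN DOWN UP
```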

// Start haproxy now and enable it at boot

[root@haproxy1 haproxy-2.7.10]# systemctl enable --now haproxy.service

Created symlink /etc/systemd/system/multi-user.target.wants/haproxy.service → /usr/lib/systemd/system/haproxy.service.

[root@haproxy1 haproxy-2.7.10]# systemctl status haproxy.service

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; disabled; vendor preset: disabled)

Active: active (running) since Tue 2023-10-10 01:09:07 CST; 9s ago

Process: 12021 ExecStartPre=/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q (code=exited, status=0/SUCCESS)

Main PID: 12024 (haproxy)

Tasks: 3 (limit: 11294)

// Check the listening ports

[root@haproxy1 haproxy-2.7.10]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 0.0.0.0:8189 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

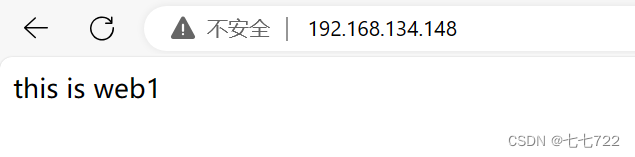

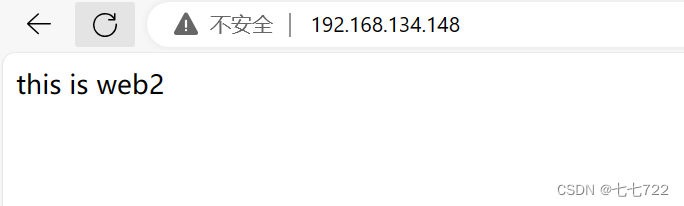

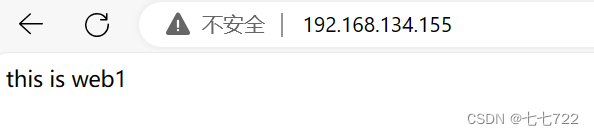

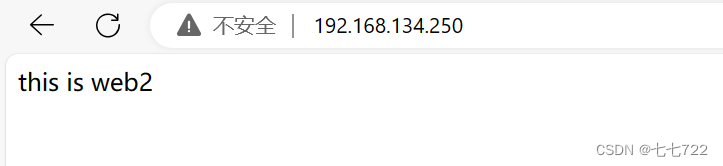

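Open each host in a browser to confirm that HAProxy answers on both haproxy1 and haproxy2.

Via 192.168.134.148:

Via 192.168.134.155:

5.3. Configuring keepalived

5.3.1. Configuring the master keepalived

Note that the real_server addresses below are the IPs of the two load balancers (haproxy1 and haproxy2).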

[root@haproxy1 ~]# vim /etc/keepalived/keepalived.conf

[root@haproxy1 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id haproxy1

}

vrrp_instance VI_1 {

state MASTER

interface ens160

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 12345678

}

virtual_ipaddress {

192.168.134.250

}

}

virtual_server 192.168.134.250 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.134.148 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.134.155 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
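With this configuration the VIP moves only when keepalived itself stops, which is why section 5.4 stops keepalived by hand. If HAProxy crashes while keepalived keeps running, the VIP stays on a node that no longer serves traffic. Keepalived's vrrp_script and track_script options can cover that case. Below is a possible check script, with comments showing how it could be wired in. Several values are assumptions: the path /etc/keepalived/check_haproxy.sh, the name chk_haproxy, interval 2 and weight -30. The weight is chosen so that a failed check drops haproxy1 from priority 100 to 70, below haproxy2's 80.

```shell
#!/usr/bin/env bash
# Hypothetical /etc/keepalived/check_haproxy.sh (name and path are assumptions).
# keepalived would run it every `interval` seconds; exit status 0 = healthy,
# non-zero = failed. It scans /proc instead of relying on pidof/pgrep.

# Succeed if a process whose command name is exactly $1 is running.
proc_running() {
  local name=$1 f comm
  for f in /proc/[0-9]*/comm; do
    { read -r comm < "$f"; } 2>/dev/null || continue   # process may have exited
    [[ $comm == "$name" ]] && return 0
  done
  return 1
}

# As a standalone script, the file would end with:
#   proc_running haproxy
#
# Possible wiring in keepalived.conf on both nodes (sketch):
#   vrrp_script chk_haproxy {
#       script "/etc/keepalived/check_haproxy.sh"
#       interval 2
#       weight -30     # MASTER 100 - 30 = 70, below BACKUP's 80
#   }
#   vrrp_instance VI_1 {
#       ...
#       track_script {
#           chk_haproxy
#       }
#   }
```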

[root@haproxy1 ~]# systemctl enable --now keepalived.service // start keepalived now and enable it at boot

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

[root@haproxy1 ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 0.0.0.0:8189 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

5.3.2. Configuring the backup keepalived

[root@haproxy2 ~]# vim /etc/keepalived/keepalived.conf

[root@haproxy2 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id haproxy2

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

virtual_router_id 80

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 12345678

}

virtual_ipaddress {

192.168.134.250

}

}

virtual_server 192.168.134.250 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.134.148 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.134.155 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@haproxy2 ~]# systemctl enable --now keepalived.service

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

[root@haproxy2 ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 0.0.0.0:8189 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

5.3.3. Checking which host holds the VIP

On the MASTER (haproxy1):

[root@haproxy1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:51:4c:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.134.148/24 brd 192.168.134.255 scope global dynamic noprefixroute ens160

valid_lft 1511sec preferred_lft 1511sec

inet 192.168.134.250/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe51:4c69/64 scope link noprefixroute

valid_lft forever preferred_lft forever

On the SLAVE (haproxy2):

[root@haproxy2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:14:ea:e9 brd ff:ff:ff:ff:ff:ff

inet 192.168.134.155/24 brd 192.168.134.255 scope global dynamic noprefixroute ens160

valid_lft 1420sec preferred_lft 1420sec

inet 192.168.134.250/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe14:eae9/64 scope link noprefixroute

valid_lft forever preferred_lft forever

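5.4. Accessing the page through the VIP

1. The VIP is currently on haproxy1, so stop the haproxy service on the slave (haproxy2) before opening the page. Any response then has to come through haproxy1.

2. Simulate the master (haproxy1) going down for an unknown reason, then start the haproxy service on the slave (haproxy2).

Before each switch, stop the haproxy service on the other host.

// Stop keepalived on the master (haproxy1)

[root@haproxy1 ~]# systemctl stop keepalived.service

[root@haproxy1 ~]# ip a // check again: the VIP is gone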

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:51:4c:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.134.148/24 brd 192.168.134.255 scope global dynamic noprefixroute ens160

valid_lft 1673sec preferred_lft 1673sec

inet6 fe80::20c:29ff:fe51:4c69/64 scope link noprefixroute

valid_lft forever preferred_lft forever

// On the slave (haproxy2), check whether the VIP has moved over

[root@haproxy2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:14:ea:e9 brd ff:ff:ff:ff:ff:ff

inet 192.168.134.155/24 brd 192.168.134.255 scope global dynamic noprefixroute ens160

valid_lft 1651sec preferred_lft 1651sec

inet 192.168.134.250/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe14:eae9/64 scope link noprefixroute

valid_lft forever preferred_lft forever
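After a switch, exactly one node should list the VIP. If both nodes list it at the same time, the VRRP advertisements are probably not reaching the peer, and the pair is in split-brain. Filtered multicast to 224.0.0.18 is one common cause. The hypothetical has_vip filter below makes the check scriptable. It reads ip a (or ip -4 addr show) output on stdin and compares only the address part of each inet line.

```shell
#!/usr/bin/env bash
# Read `ip a` / `ip -4 addr show` output on stdin and succeed if VIP is one
# of the listed IPv4 addresses (the "/prefix" part is ignored).
has_vip() {
  local vip=$1
  awk -v v="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == v) found = 1 }
    END { exit !found }
  '
}

# Run on each node, e.g.:
#   ip -4 addr show dev ens160 | has_vip 192.168.134.250 && echo "VIP is on $(hostname)"
```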

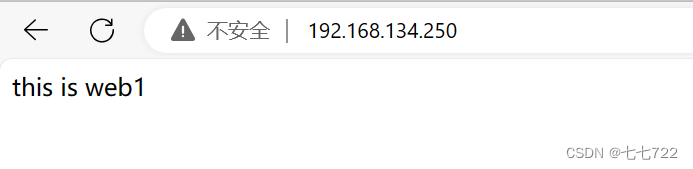

Access the page through the VIP again:

Deployment complete.
