Research on Infrared Image Non-Uniformity Correction Based on Convolutional Neural Networks


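Background: Because of manufacturing differences between detector elements, temperature drift, and similar factors, infrared images often show pixel-to-pixel non-uniformity. This degrades image quality and interferes with downstream processing and analysis. Traditional non-uniformity correction methods tend to rely on elaborate calibration procedures and tedious manual tuning, and they adapt poorly to changing conditions. An efficient, automated correction scheme is therefore of both research and practical value.

Goal: This project uses a convolutional neural network (CNN) to develop a new infrared non-uniformity correction method, the Residual-learning based Non-uniformity Correction Net (RNUC), aiming for more efficient and more accurate correction.

Technical highlights: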

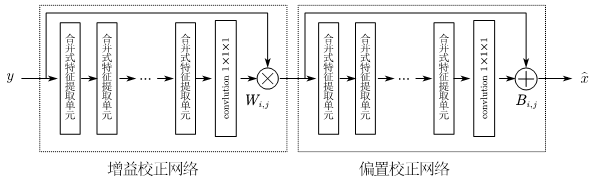

- RNUC network architecture: the network is built on residual learning and cascades two residual blocks to correct the non-uniformity in infrared images.

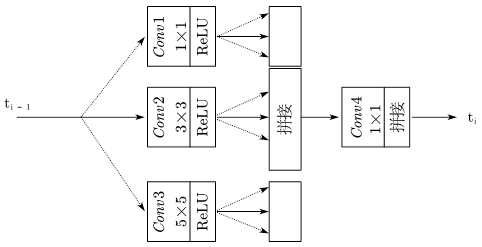

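- Merged feature extraction unit: to exploit multi-scale features, RNUC uses a purpose-built unit that runs parallel 1×1, 3×3, and 5×5 convolution branches and concatenates their outputs, so it captures both fine detail and wider context and improves correction accuracy.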

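Method overview:

- The core of RNUC is a cascade of residual blocks that learn the non-uniformity pattern of the infrared image and remove it step by step. In the code, the first block predicts an additive term that is subtracted from the input, and the second predicts a per-pixel factor that multiplies the result. Because each block only estimates a correction, the important image content is preserved while the noise is reduced.

- The merged feature extraction unit lets the network process local and wider-area features at the same time. This matters for non-uniformity correction because the artifacts appear both in small regions and across the whole image.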
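To make the cascade concrete, here is a minimal NumPy sketch. It assumes the degradation model used by `Addnoise` in the test script (a per-column gain multiplied in, then a per-pixel offset added). Given the true offset and gain, which the network never sees and has to estimate, subtracting the offset and then multiplying by the reciprocal gain recovers the clean image, mirroring the `Subtract` and `Multiply` steps in `NUCNN`. All names and sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative clean image in [0, 1]; the size is arbitrary.
clean = rng.uniform(0.2, 0.8, size=(8, 6))

# Degradation: per-column gain (stripes) and per-pixel additive offset.
gain_col = rng.normal(1.0, 0.15, size=clean.shape[1])
gain = np.tile(gain_col, (clean.shape[0], 1))
offset = rng.normal(0.0, 11.55 / 255.0, size=clean.shape)
degraded = clean * gain + offset

# Oracle two-step correction (stand-ins for the two network outputs):
# step 1 subtracts the additive term, step 2 multiplies by a correction map.
T = degraded - offset
G = 1.0 / gain
restored = T * G

max_err = float(np.max(np.abs(restored - clean)))
print("max abs error after oracle correction:", max_err)
```

Under this model the second stage is effectively asked to learn the reciprocal of the gain, which is why its output is multiplied rather than subtracted.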

Expected results:

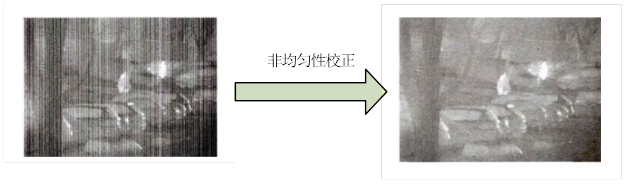

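- After processing by RNUC, the non-uniformity in the infrared image is visibly reduced and the overall image quality improves.

- Compared with traditional correction methods, the approach is more efficient and more automated: it needs less manual intervention and can be applied to a wider range of scenarios.

Application scenarios:

- The results apply wherever high-quality infrared images are needed, for example military reconnaissance, security surveillance, industrial inspection, and medical diagnosis.

- In settings that require long periods of continuous monitoring in particular, RNUC helps keep the infrared images consistent and reliable, giving more accurate data for decision-making.

Summary: RNUC, a CNN-based infrared non-uniformity correction method, combines residual learning with a new feature extraction unit to offer a novel and effective solution to infrared image non-uniformity. The project contributes on the theoretical side and also opens up new options in practice, especially in fields that need high-precision image analysis.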

The method cascades two residual blocks to form a residual-learning approach to infrared non-uniformity correction: the Residual-learning based Non-uniformity Correction Net (RNUC).


main.py

```python
# -*- coding: utf-8 -*-
"""
Created on Mar 6 18:47:14 2022

@author: LZK
"""
import glob
import os
import time

import numpy as np
import PIL.Image as Image
import tensorflow as tf
import keras
from keras.callbacks import ModelCheckpoint, LearningRateScheduler
from keras.optimizers import Adam
# multi_gpu_model is a legacy Keras utility that newer TensorFlow releases no longer ship
from keras.utils import multi_gpu_model, plot_model
# compare_psnr / compare_ssim exist only in older scikit-image releases; newer ones
# provide skimage.metrics.peak_signal_noise_ratio and structural_similarity instead
from skimage.measure import compare_psnr, compare_ssim

# NUCNN is imported from a module named `models`, so the model file must be importable under that name
from models import NUCNN
from utilis import Degrade, PSNR, load_train_data

# os.environ["CUDA_VISIBLE_DEVICES"] = "1"
```

```python
# -----------------------------------------------------------------------#
# Training data generation
# -----------------------------------------------------------------------#
def train_datagen(y_, batch_size=128):
    # y_ is the tensor of clean patches
    indices = list(range(y_.shape[0]))
    steps_per_epoch = len(indices) // batch_size - 1  # index of the last full batch
    j = 0
    while True:
        np.random.shuffle(indices)  # shuffle
        ge_batch_y = []
        ge_batch_x = []
        for i in range(batch_size):
            flip = True  # (i%2 == 0)
            sample = y_[indices[j * batch_size + i]]
            # network input = clean patch degraded with simulated non-uniformity
            sample_GO, _, _ = Degrade(sample, flip)
            ge_batch_y.append(sample)
            ge_batch_x.append(sample_GO)
        if j == steps_per_epoch:
            j = 0
            np.random.shuffle(indices)
        else:
            j += 1
        yield np.array(ge_batch_x), np.array(ge_batch_y)
```
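`Degrade` lives in `utilis` and is not listed in this post. As a rough guide only, the sketch below shows what such a function might do, assuming it follows the same gain-plus-offset model as `Addnoise` in the test code and returns the degraded patch together with the gain and offset it applied. The name `degrade_sketch`, its parameters, and its return order are hypothetical, and the real function's `flip` argument is left out because its meaning is not documented here.

```python
import numpy as np


def degrade_sketch(patch, sigma=11.55, beta=0.15, rng=None):
    """Hypothetical stand-in for utilis.Degrade (the real one is not shown).

    patch: float array of shape (H, W, 1) with values in [0, 1].
    Returns (degraded, gain, offset), each with the same shape as patch.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = patch.shape[0], patch.shape[1]
    # One gain value per column, repeated down the rows (stripe pattern).
    gain_col = rng.normal(1.0, beta, size=w)
    gain = np.tile(gain_col, (h, 1)).reshape(patch.shape)
    # Independent additive noise for every pixel.
    offset = rng.normal(0.0, sigma / 255.0, size=patch.shape)
    degraded = (patch * gain + offset).astype("float32")
    return degraded, gain, offset


patch = np.full((4, 5, 1), 0.5, dtype="float32")
noisy, gain, offset = degrade_sketch(patch, rng=np.random.default_rng(1))
print(noisy.shape, noisy.dtype)
```

Returning the gain and offset alongside the degraded patch would explain the two discarded values in `sample_GO, _, _ = Degrade(sample, flip)`, but that reading is a guess.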

```python
# -----------------------------------------------------------------------#
# Step-decay learning-rate schedule
# -----------------------------------------------------------------------#
def adam_step_decay(epoch):
    if epoch <= 25:
        lr = 1e-3
    elif epoch <= 45:
        lr = 1e-4
    else:
        lr = 1e-5
    return lr
```
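Keras's `LearningRateScheduler` calls the schedule with a zero-based epoch index, so for a 50-epoch run the thresholds in `adam_step_decay` split training unevenly. A small standalone check (the function is copied so the snippet runs on its own):

```python
from collections import Counter


def adam_step_decay(epoch):
    # Same thresholds as the training script.
    if epoch <= 25:
        return 1e-3
    elif epoch <= 45:
        return 1e-4
    return 1e-5


# Keras passes epoch indices 0..49 for a 50-epoch run.
epochs_per_lr = Counter(adam_step_decay(e) for e in range(50))
for lr, count in sorted(epochs_per_lr.items(), reverse=True):
    print(f"lr={lr:g}: {count} epochs")
```

That works out to 26 epochs at 1e-3, 20 at 1e-4, and only 4 at 1e-5.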

```python
# -----------------------------------------------------------------------#
# Parameters
# -----------------------------------------------------------------------#
batch_size = 128
train_data = './data/Train/clean_patches.npy'
epoch = 50
save_every = 1
pretrain = None
# pretrain = './checkpoints/1_plain_adam/weights-04-27.7000-0.0017.hdf5'
init_epoch = 0
multi_GPU = True
TRAIN = False
TEST = not TRAIN
realFrame = True
```

```python
# -----------------------------------------------------------------------#
# Training
# -----------------------------------------------------------------------#
if TRAIN:
    data = load_train_data(train_data)
    data = data.reshape((data.shape[0], data.shape[1], data.shape[2], 1))
    data = data.astype('float32') / 255.0

    # model selection
    if multi_GPU:
        # build the template model on the CPU; GPU replicas are created below
        with tf.device('/cpu:0'):
            model = NUCNN()
    else:
        model = NUCNN()

    with open('./export/model_summary.txt', 'w') as f:
        model.summary(print_fn=lambda x: f.write(x + '\n'))
    plot_model(model, to_file='./export/model.png')

    opt = Adam(decay=1e-6)
    # opt = SGD(momentum=0.9, decay=1e-4, nesterov=True)
    if multi_GPU:
        print('Using Multi GPUs !')
        train_model = multi_gpu_model(model, gpus=2)
    else:
        train_model = model
    train_model.compile(optimizer=opt, loss='mse', metrics=[PSNR])

    if pretrain:
        print('Load pretrained model !')
        train_model.load_weights(pretrain)

    # callbacks: checkpoint every `save_every` epochs, step-decay LR, TensorBoard logs
    filepath = "./checkpoints/weights-{epoch:02d}-{PSNR:.4f}-{loss:.4f}.hdf5"
    ckpt = ModelCheckpoint(filepath, monitor='val_loss', verbose=1, period=save_every, save_weights_only=True)
    lr = LearningRateScheduler(adam_step_decay)
    tensorboard = keras.callbacks.TensorBoard(log_dir='./logs')

    # train
    history = train_model.fit_generator(train_datagen(data, batch_size=batch_size),
                                        steps_per_epoch=len(data) // batch_size,
                                        epochs=epoch, verbose=1, callbacks=[ckpt, lr, tensorboard],
                                        initial_epoch=init_epoch)
```
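The `PSNR` metric passed to `compile` is imported from `utilis` and is not listed here. For reference, PSNR for images scaled to [0, 1] is 10·log10(1/MSE); the NumPy sketch below works under that assumption. The name `psnr_sketch` is hypothetical, and a real Keras metric would have to operate on backend tensors rather than NumPy arrays.

```python
import numpy as np


def psnr_sketch(y_true, y_pred, data_range=1.0):
    """PSNR in dB for arrays scaled to [0, data_range] (illustrative helper)."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    mse = np.mean((y_true - y_pred) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


clean = np.zeros((4, 4))
noisy = clean + 0.1  # constant error of 0.1 gives MSE = 0.01
value = psnr_sketch(clean, noisy)
print(value)  # close to 20 dB
```

Since the training loss is MSE on the same [0, 1] scale, the loss and PSNR values embedded in the checkpoint file names are two views of the same quantity.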

```python
# ----------------------------------------------------------------------#
# Testing
# ----------------------------------------------------------------------#
if TEST:
    if not realFrame:
        # ---- simulated non-uniformity on clean test images ----
        save_dir = 'results/sim'
        test_dir = 'data/Test/Set12'
        WEIGHT_PATH = './checkpoints/weights-50-28.8004-0.0013.hdf5'

        def Addnoise(image, sigma=11.55, beta=0.15):
            image = image.astype('float32')
            # additive per-pixel offset noise
            O_noise = np.random.normal(0, sigma / 255.0, image.shape)
            # multiplicative gain: one value per column, tiled down the rows
            G_col = np.random.normal(1, beta, image.shape[1])
            G_noise = np.tile(G_col, (image.shape[0], 1))
            # G_noise = G_noise + np.random.normal(0, 2/255.0, (image.shape[0],image.shape[1]))
            G_noise = np.reshape(G_noise, image.shape)
            image_G = np.multiply(image, G_noise)
            image_GO = image_G + O_noise  # degraded = clean * gain + offset
            return image_GO

        model = NUCNN()
        if multi_GPU:
            print('Using Multi GPUs !')
            model = multi_gpu_model(model, gpus=2)
        model.load_weights(WEIGHT_PATH)

        print('Start to test on {}'.format(test_dir))
        out_dir = save_dir + '/' + test_dir.split('/')[-1] + '/'
        os.makedirs(out_dir, exist_ok=True)  # also creates missing parent folders

        name = []
        psnr = []
        ssim = []
        file_list = glob.glob('{}/*.*'.format(test_dir))
        for file in file_list:
            if file[-3:] in ['bmp', 'jpg', 'png', 'BMP']:
                # read image and degrade it
                img_clean = np.array(Image.open(file), dtype='float32') / 255.0
                img_test = Addnoise(img_clean, sigma=11.55, beta=0.15).astype('float32')

                # predict
                x_test = img_test.reshape(1, img_test.shape[0], img_test.shape[1], 1)
                y_predict = model.predict(x_test)

                # numeric metrics; images are scaled to [0, 1]
                img_out = y_predict.reshape(img_clean.shape)
                img_out = np.clip(img_out, 0, 1)
                psnr_noise = compare_psnr(img_clean, img_test, data_range=1.0)
                psnr_denoised = compare_psnr(img_clean, img_out, data_range=1.0)
                ssim_noise = compare_ssim(img_clean, img_test, data_range=1.0)
                ssim_denoised = compare_ssim(img_clean, img_out, data_range=1.0)
                psnr.append(psnr_denoised)
                ssim.append(ssim_denoised)

                # save images; file name without directory or extension
                filename = os.path.splitext(os.path.basename(file))[0]
                name.append(filename)
                img_test = Image.fromarray(np.clip((img_test * 255), 0, 255).astype('uint8'))
                img_test.save(out_dir + filename + '_psnr{:.2f}.png'.format(psnr_noise))
                img_out = Image.fromarray((img_out * 255).astype('uint8'))
                img_out.save(out_dir + filename + '_psnr{:.2f}.png'.format(psnr_denoised))

        psnr_avg = sum(psnr) / len(psnr)
        ssim_avg = sum(ssim) / len(ssim)
        name.append('Average')
        psnr.append(psnr_avg)
        ssim.append(ssim_avg)
        print('Average PSNR = {0:.4f}, SSIM = {1:.4f}'.format(psnr_avg, ssim_avg))
    else:
        # ---- real infrared frames: no ground truth, so no metrics ----
        print("Test on Real Frame !")
        save_dir = 'results/real'
        multi_GPU = True
        test_dir = './data/Test/Real/'
        WEIGHT_PATH = './checkpoints/weights-50-28.8004-0.0013.hdf5'

        model = NUCNN()
        if multi_GPU:
            print('Using Multi GPUs !')
            model = multi_gpu_model(model, gpus=2)
        model.load_weights(WEIGHT_PATH)

        print('Start to test on {}'.format(test_dir))
        # test_dir ends with '/', so the last path component is empty and
        # frames are written directly under save_dir
        out_dir = save_dir + '/' + test_dir.split('/')[-1] + '/'
        os.makedirs(out_dir, exist_ok=True)

        name = []
        print('Start Test')
        for file in os.listdir(test_dir):
            # read frame (already degraded by the sensor)
            img_test = np.array(Image.open(test_dir + file), dtype='float32') / 255.0

            # predict
            x_test = img_test.reshape(1, img_test.shape[0], img_test.shape[1], 1)
            y_predict = model.predict(x_test)
            img_out = np.clip(y_predict.reshape(img_test.shape), 0, 1)

            # save under the original file name
            name.append(file)
            img_out = Image.fromarray((img_out * 255).astype('uint8'))
            img_out.save(out_dir + file)
        print('Test Over')
```

model.py

```python
# -*- coding: utf-8 -*-
"""
Created on Mar 1 18:12:02 2022

@author: LZK
"""
from keras.layers import Input, Conv2D, Activation, Subtract, Multiply, Concatenate
from keras.models import Model

# L2 = regularizers.l2(1e-6)
L2 = None
init = 'he_normal'


# -----------------------------------------------------------------------#
# Merged feature extraction unit
# -----------------------------------------------------------------------#
def Inception(inpt):
    # 1x1 branch
    x_0 = Conv2D(filters=32, kernel_size=(1, 1), strides=(1, 1), padding='same', kernel_initializer=init)(inpt)
    x_0 = Activation('relu')(x_0)
    x_0 = Conv2D(filters=32, kernel_size=(1, 1), strides=(1, 1), padding='same', kernel_initializer=init)(x_0)
    x_0 = Activation('relu')(x_0)

    # 3x3 branch
    x_1 = Conv2D(filters=32, kernel_size=(3, 3), strides=(1, 1), padding='same', kernel_initializer=init)(inpt)
    x_1 = Activation('relu')(x_1)
    x_1 = Conv2D(filters=32, kernel_size=(3, 3), strides=(1, 1), padding='same', kernel_initializer=init)(x_1)
    x_1 = Activation('relu')(x_1)

    # 5x5 branch
    x_2 = Conv2D(filters=32, kernel_size=(5, 5), strides=(1, 1), padding='same', kernel_initializer=init)(inpt)
    x_2 = Activation('relu')(x_2)
    x_2 = Conv2D(filters=32, kernel_size=(5, 5), strides=(1, 1), padding='same', kernel_initializer=init)(x_2)
    x_2 = Activation('relu')(x_2)

    # concatenate the three branches (96 channels) and fuse them with a 1x1 convolution
    x = Concatenate()([x_0, x_1, x_2])
    x = Conv2D(filters=64, kernel_size=(1, 1), strides=(1, 1), padding='same', kernel_initializer=init)(x)
    x = Activation('relu')(x)
    return x
```
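One way to see the multi-scale behavior of this unit is to compute the receptive field of each branch. For stride-1 convolutions the receptive field grows by `k - 1` per layer, so the sketch below (helper names are illustrative) gives the per-branch values and the largest field reached after stacking several units.

```python
def receptive_field(kernel_sizes):
    """Receptive field (side length in pixels) of a chain of stride-1 convolutions."""
    return 1 + sum(k - 1 for k in kernel_sizes)


# Each branch applies two convolutions with the same kernel size.
branches = {"1x1": [1, 1], "3x3": [3, 3], "5x5": [5, 5]}
branch_rf = {name: receptive_field(kernels) for name, kernels in branches.items()}
for name, rf in branch_rf.items():
    print(f"{name} branch: {rf}x{rf}")


def max_rf_after_units(n_units):
    # Largest path: take the 5x5 branch in every unit; the 1x1 fusion adds nothing.
    return receptive_field([5, 5, 1] * n_units)


print("largest field after 5 units:", max_rf_after_units(5))
```

The 1x1 branch never looks beyond a single pixel, while the 5x5 path through five stacked units covers a 41x41 neighborhood, so the concatenated features mix strictly local and much wider context.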

```python
# -----------------------------------------------------------------------#
# Cascade of two sub-networks
# -----------------------------------------------------------------------#
def NUCNN():
    inpt = Input(shape=(None, None, 1))

    # stage 1: five units estimate the additive component O
    x = inpt
    for _ in range(5):
        x = Inception(x)
    O = Conv2D(filters=1, kernel_size=(1, 1), strides=(1, 1), padding='same', kernel_initializer=init)(x)
    T = Subtract()([inpt, O])  # input with the estimated additive component removed

    # stage 2: five units estimate the multiplicative map G from T
    x = T
    for _ in range(5):
        x = Inception(x)
    G = Conv2D(filters=1, kernel_size=(1, 1), strides=(1, 1), padding='same', kernel_initializer=init)(x)
    res = Multiply()([T, G])

    model = Model(inputs=inpt, outputs=[res])
    return model
```
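As a sanity check on model size, the trainable parameter count can be worked out by hand from the layer definitions, since a `Conv2D` layer with bias has `k*k*c_in*c_out + c_out` parameters. The helpers below are illustrative and assume the default `use_bias=True`; comparing the total with the output of `model.summary()` is a quick way to confirm the architecture was built as intended.

```python
def conv_params(k, c_in, c_out):
    """Weights plus biases of a k x k convolution."""
    return k * k * c_in * c_out + c_out


def unit_params(c_in):
    """Parameters of one merged feature extraction unit with c_in input channels."""
    total = 0
    for k in (1, 3, 5):
        # Two stacked convolutions per branch, 32 filters each.
        total += conv_params(k, c_in, 32) + conv_params(k, 32, 32)
    # 1x1 fusion of the 96 concatenated channels down to 64.
    total += conv_params(1, 96, 64)
    return total


def stage_params(n_units=5):
    """One stage: first unit sees 1 channel, the rest see 64, then a 1x1 output conv."""
    return unit_params(1) + (n_units - 1) * unit_params(64) + conv_params(1, 64, 1)


total_params = 2 * stage_params()
print("first unit:", unit_params(1))
print("later units:", unit_params(64))
print("whole network:", total_params)
```

Both stages have the same shape because the second one also starts from a single-channel image, so the network totals 998,210 parameters, roughly one million.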
