- Bounded and unbounded streams

- Real-time and recorded streams

State:

- **Multiple State Primitives:** value, list, or map

- **Pluggable State Backends:** memory, RocksDB, or file system

- **Exactly-once State Consistency:** guaranteed through checkpoints

- **Very Large State:** terabytes of state, made practical by asynchronous and incremental checkpoints

- Scalable Applications

Time:

- Event-time Mode

- **Watermark Support:** Watermarks are also a flexible mechanism to trade off the latency and completeness of results.

- **Late Data Handling:** late records can be rerouted via side outputs, and previously completed results can be updated.

- **Processing-time Mode:** The processing-time mode can be suitable for certain applications with strict low-latency requirements that can tolerate approximate results.

public class WatermarkDemo {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<WaterSensor> dataSource = env.socketTextStream("hadoop102", 7777)

.map(new MapFunction<String, WaterSensor>() {

@Override

public WaterSensor map(String value) throws Exception {

String[] data = value.split(",");

return new WaterSensor(data[0], Long.parseLong(data[1]), Integer.parseInt(data[2]));

}

});

// WatermarkStrategy<WaterSensor> watermarkStrategy = WatermarkStrategy.<WaterSensor>forMonotonousTimestamps()

WatermarkStrategy<WaterSensor> watermarkStrategy = WatermarkStrategy

// Out-of-orderness bound: wait up to 3 s for out-of-order records

.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

// Timestamp assigner: extract the event time from each record

.withTimestampAssigner((SerializableTimestampAssigner<WaterSensor>) (element, recordTimestamp) -> {

System.out.println("record: " + element + ", recordTimestamp: " + recordTimestamp);

// getTs() is assumed to be in seconds; Flink expects event time in milliseconds

return element.getTs() * 1000;

});

SingleOutputStreamOperator<WaterSensor> ds = dataSource.assignTimestampsAndWatermarks(watermarkStrategy);

OutputTag<WaterSensor> lateOutputTag = new OutputTag<>("late-data", Types.POJO(WaterSensor.class));

SingleOutputStreamOperator<String> process = ds.keyBy(WaterSensor::getId)

// Tumbling window of 10 s

.window(TumblingEventTimeWindows.of(Time.seconds(10)))

// Allowed lateness of 2 s (window cleanup is postponed by 2 s; until then, every late record triggers another computation)

.allowedLateness(Time.seconds(2))

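// Records arriving after the window has been cleaned up go to the side output (late here means the record is behind by more than the out-of-orderness bound plus the allowed lateness, i.e. 3 s + 2 s)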

.sideOutputLateData(lateOutputTag)

.process(new ProcessWindowFunction<WaterSensor, String, String, TimeWindow>() {

@Override

public void process(String s, Context context, Iterable<WaterSensor> elements, Collector<String> out) throws Exception {

long start = context.window().getStart();

long end = context.window().getEnd();

long currentProcessingTime = context.currentProcessingTime();

long currentWatermark = context.currentWatermark();

String windowStart = DateFormatUtils.format(start, "yyyy-MM-dd HH:mm:ss.SSS");

String windowEnd = DateFormatUtils.format(end, "yyyy-MM-dd HH:mm:ss.SSS");

// estimateSize() is exact only when the Iterable is backed by a sized collection

long count = elements.spliterator().estimateSize();

out.collect("key=" + s + ";" + windowStart + "=>" + windowEnd + ";" + "count=" + count + ";" + "currentProcessingTime=" + currentProcessingTime + ";currentWatermark=" + currentWatermark);

}

});

process.print();

process.getSideOutput(lateOutputTag).printToErr();

env.execute();

}

}
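The interplay of the 10 s window, the 3 s out-of-orderness bound, and the 2 s allowed lateness in the demo above can be checked with plain arithmetic. The following is a minimal sketch without any Flink dependency; the class name `WatermarkTimeline` and its helper methods are hypothetical, and it assumes the watermark is derived as "highest timestamp seen, minus the bound, minus 1 ms" and that a window's last valid timestamp is its end minus 1 ms. In a running job watermarks are emitted periodically, so the exact moment an operator observes them may differ slightly.

```java
import java.time.Duration;

/** Plain-Java sketch of the watermark arithmetic; this is not Flink code. */
class WatermarkTimeline {
    static final long WINDOW_SIZE_MS = Duration.ofSeconds(10).toMillis();
    static final long OUT_OF_ORDERNESS_MS = Duration.ofSeconds(3).toMillis();
    static final long ALLOWED_LATENESS_MS = Duration.ofSeconds(2).toMillis();

    /** Assumed watermark rule: highest timestamp seen, minus the bound, minus 1 ms. */
    static long watermark(long maxTimestampSeen) {
        return maxTimestampSeen - OUT_OF_ORDERNESS_MS - 1;
    }

    /** Start of the tumbling window containing the timestamp (no offset, non-negative input). */
    static long windowStart(long timestamp) {
        return timestamp - (timestamp % WINDOW_SIZE_MS);
    }

    /** Last timestamp that still belongs to the window starting at windowStart. */
    static long windowMaxTimestamp(long windowStart) {
        return windowStart + WINDOW_SIZE_MS - 1;
    }

    /** The window fires for the first time once the watermark reaches its last timestamp. */
    static boolean windowHasFired(long windowStart, long watermark) {
        return watermark >= windowMaxTimestamp(windowStart);
    }

    /** A record goes to the side output once its window is past the allowed lateness. */
    static boolean goesToSideOutput(long recordTimestamp, long watermark) {
        long cleanupTime = windowMaxTimestamp(windowStart(recordTimestamp)) + ALLOWED_LATENESS_MS;
        return cleanupTime <= watermark;
    }

    public static void main(String[] args) {
        // Highest event timestamps (ms) seen so far; the late record under test has ts = 5000.
        long[] maxTimestamps = {9_000, 12_999, 13_000, 14_999, 15_000};
        for (long maxTs : maxTimestamps) {
            long wm = watermark(maxTs);
            System.out.printf("maxTs=%d watermark=%d windowFired=%b lateRecordToSideOutput=%b%n",
                    maxTs, wm, windowHasFired(0, wm), goesToSideOutput(5_000, wm));
        }
    }
}
```

Under these assumptions the window [0 s, 10 s) first fires when a record with timestamp 13 s arrives, and records for that window are diverted to the side output once a record with timestamp 15 s has been seen.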

Layered APIs

ProcessFunctions provide fine-grained control over time and state. A ProcessFunction can arbitrarily modify its state and register timers that will trigger a callback function in the future. Hence, ProcessFunctions can implement complex per-event business logic as required for many stateful event-driven applications.

The following example shows a KeyedProcessFunction that operates on a KeyedStream and matches START and END events. When a START event is received, the function remembers its timestamp in state and registers a timer in four hours. If an END event is received before the timer fires, the function computes the duration between END and START event, clears the state, and returns the value. Otherwise, the timer just fires and clears the state.

/**

* Matches keyed START and END events and computes the difference between

* both elements' timestamps. The first String field is the key attribute,

* the second String attribute marks START and END events.

*/

public static class StartEndDuration

extends KeyedProcessFunction<String, Tuple2<String, String>, Tuple2<String, Long>> {

private ValueState<Long> startTime;

@Override

public void open(Configuration conf) {

// obtain state handle

startTime = getRuntimeContext()

.getState(new ValueStateDescriptor<Long>("startTime", Long.class));

}

/** Called for each processed event. */

@Override

public void processElement(

Tuple2<String, String> in,

Context ctx,

Collector<Tuple2<String, Long>> out) throws Exception {

switch (in.f1) {

case "START":

// set the start time if we receive a start event.

startTime.update(ctx.timestamp());

// register a timer in four hours from the start event.

ctx.timerService()

.registerEventTimeTimer(ctx.timestamp() + 4 * 60 * 60 * 1000);

break;

case "END":

// emit the duration between start and end event

Long sTime = startTime.value();

if (sTime != null) {

out.collect(Tuple2.of(in.f0, ctx.timestamp() - sTime));

// clear the state

startTime.clear();

}

break;

default:

// do nothing

}

}

/** Called when a timer fires. */

@Override

public void onTimer(

long timestamp,

OnTimerContext ctx,

Collector<Tuple2<String, Long>> out) {

// Timeout interval exceeded. Cleaning up the state.

startTime.clear();

}

}
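To make the combination of keyed state and timers easier to follow, here is a minimal plain-Java sketch of the same START/END matching logic. It is not Flink code: the class `StartEndSimulation`, the `HashMap` standing in for `ValueState`, and the `TreeMap` standing in for the timer service are all hypothetical stand-ins, and the watermark is advanced by hand.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Plain-Java sketch of keyed state plus event-time timers; this is not Flink code. */
class StartEndSimulation {
    static final long TIMEOUT_MS = 4 * 60 * 60 * 1000L;

    // Stand-in for ValueState<Long>: one start timestamp per key.
    private final Map<String, Long> startTimeByKey = new HashMap<>();
    // Stand-in for the timer service: firing time -> keys with a timer at that time.
    private final TreeMap<Long, List<String>> timers = new TreeMap<>();
    // Emitted results, formatted as "key=durationMs".
    final List<String> output = new ArrayList<>();

    /** Mirrors processElement: remember START, emit the duration on END. */
    void onEvent(String key, String type, long timestamp) {
        if ("START".equals(type)) {
            startTimeByKey.put(key, timestamp);
            timers.computeIfAbsent(timestamp + TIMEOUT_MS, t -> new ArrayList<>()).add(key);
        } else if ("END".equals(type)) {
            Long start = startTimeByKey.remove(key);
            if (start != null) {
                output.add(key + "=" + (timestamp - start));
            }
        }
    }

    /** Mirrors onTimer: every timer at or before the watermark fires and clears the state. */
    void advanceWatermark(long watermark) {
        while (!timers.isEmpty() && timers.firstKey() <= watermark) {
            for (String key : timers.pollFirstEntry().getValue()) {
                startTimeByKey.remove(key);
            }
        }
    }

    public static void main(String[] args) {
        StartEndSimulation sim = new StartEndSimulation();
        sim.onEvent("a", "START", 0L);
        sim.onEvent("a", "END", 60_000L);             // matched within the timeout
        sim.onEvent("b", "START", 1_000L);
        sim.advanceWatermark(1_000L + TIMEOUT_MS);    // timer for "b" fires, state is cleared
        sim.onEvent("b", "END", 2_000L + TIMEOUT_MS); // too late, nothing is emitted
        System.out.println(sim.output);               // prints [a=60000]
    }
}
```

Like the function above, the sketch clears the state unconditionally when a timer fires; it only illustrates the control flow and ignores fault tolerance entirely.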

The **DataStream API** provides primitives for many common stream processing operations, such as windowing, record-at-a-time transformations, and enriching events by querying an external data store. The DataStream API is available for Java and Scala and is based on functions, such as map(), reduce(), and aggregate(). Functions can be defined by extending interfaces or as Java or Scala lambda functions.

The following example shows how to sessionize a clickstream and count the number of clicks per session.

// a stream of website clicks

DataStream<Click> clicks = ...

DataStream<Tuple2<String, Long>> result = clicks

// project clicks to userId and add a 1 for counting

.map(

// define function by implementing the MapFunction interface.

new MapFunction<Click, Tuple2<String, Long>>() {

@Override

public Tuple2<String, Long> map(Click click) {

return Tuple2.of(click.userId, 1L);

}

})

// key by userId (field f0 of the tuple); index-based keyBy(0) is deprecated

.keyBy(t -> t.f0)

// define session window with 30 minute gap

.window(EventTimeSessionWindows.withGap(Time.minutes(30L)))

// count clicks per session. Define function as lambda function.

.reduce((a, b) -> Tuple2.of(a.f0, a.f1 + b.f1));
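What "sessionizing" means can be shown without Flink. The sketch below counts clicks per session for a single user from an already sorted array of click timestamps; the class `SessionCounter` and its method are hypothetical, and treating only a pause strictly longer than the gap as a session boundary is an assumption made for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of gap-based sessionization for one user; this is not Flink code. */
class SessionCounter {

    /**
     * Returns the number of clicks in each session.
     * Timestamps must be sorted ascending; a pause longer than gapMs starts a new session.
     */
    static List<Integer> countPerSession(long[] sortedTimestampsMs, long gapMs) {
        List<Integer> counts = new ArrayList<>();
        int current = 0;
        for (int i = 0; i < sortedTimestampsMs.length; i++) {
            boolean startsNewSession =
                    i > 0 && sortedTimestampsMs[i] - sortedTimestampsMs[i - 1] > gapMs;
            if (startsNewSession) {
                counts.add(current);
                current = 0;
            }
            current++;
        }
        if (current > 0) {
            counts.add(current);
        }
        return counts;
    }

    public static void main(String[] args) {
        long minute = 60_000L;
        // Clicks at minute 0, 10, 35, 100, and 120 with a 30-minute gap.
        long[] clicks = {0, 10 * minute, 35 * minute, 100 * minute, 120 * minute};
        System.out.println(countPerSession(clicks, 30 * minute)); // prints [3, 2]
    }
}
```

The streaming version has to produce the same grouping incrementally and per key, which is what the session window assigner and the reduce function take care of.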

Flink features two relational APIs, the **Table API and SQL**. Both APIs are unified APIs for batch and stream processing, i.e., queries are executed with the same semantics on unbounded, real-time streams or bounded, recorded streams and produce the same results. The Table API and SQL leverage Apache Calcite for parsing, validation, and query optimization. They can be seamlessly integrated with the DataStream and DataSet APIs and support user-defined scalar, aggregate, and table-valued functions.

SELECT userId, COUNT(*)

FROM clicks

GROUP BY SESSION(clicktime, INTERVAL '30' MINUTE), userId

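Exactly-once guarantees when connecting Flink to Kafka

**1) Overview**

Since the goal is end-to-end exactly-once, we can again analyze it from the perspective of the three components involved: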

(1) Inside Flink

Inside Flink, the checkpoint mechanism guarantees exactly-once semantics for state and processing results.

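(2) Input side

Kafka, as the input source, persists data and allows offsets to be reset. The source task (FlinkKafkaConsumer) can therefore store the current read offset as operator state and write it into the checkpoint. When a failure occurs, the state is restored from the checkpoint and the FlinkKafkaConsumer connector resubmits the offsets to Kafka, so the data can be consumed again and the results stay consistent.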

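(3) Output side

The best way to guarantee exactly-once on the output side is, of course, two-phase commit (2PC). As Flink's natural partner, Kafka is expected to prove itself with the strongest consistency guarantee available.

In other words, writing to Kafka is really a two-stage commit: once a result has been computed, writing it to Kafka is a transactional "pre-commit"; only after the checkpoint has been saved is the transaction actually committed. If a failure occurs in between, the transaction is rolled back and the pre-committed data is discarded; after state recovery, only operations whose commit was confirmed are restored.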
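The pre-commit and commit sequence can be sketched in a few lines of plain Java. This is a deliberately simplified model, not the Kafka or Flink API: the class `TwoPhaseCommitSketch` and its method names are hypothetical, a list stands in for the Kafka transaction, and the replay of uncommitted records after recovery is done by hand.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of a transactional sink; this is not the Flink or Kafka API. */
class TwoPhaseCommitSketch {
    // Records visible to consumers that only read committed data.
    private final List<String> committed = new ArrayList<>();
    // Records written inside the currently open transaction (pre-committed, not yet visible).
    private List<String> openTransaction = new ArrayList<>();

    /** Phase 1: write the record inside the open transaction. */
    void write(String record) {
        openTransaction.add(record);
    }

    /** Phase 2: the checkpoint succeeded, so the transaction is committed. */
    void onCheckpointComplete() {
        committed.addAll(openTransaction);
        openTransaction = new ArrayList<>();
    }

    /** Failure before the checkpoint completed: the transaction is rolled back. */
    void onFailure() {
        openTransaction = new ArrayList<>();
    }

    List<String> committedRecords() {
        return List.copyOf(committed);
    }

    public static void main(String[] args) {
        TwoPhaseCommitSketch sink = new TwoPhaseCommitSketch();
        sink.write("a");
        sink.write("b");
        sink.onCheckpointComplete();  // "a" and "b" become visible
        sink.write("c");
        sink.onFailure();             // "c" was only pre-committed and is discarded
        sink.write("c");              // the source rewinds, so "c" is processed again
        sink.onCheckpointComplete();
        System.out.println(sink.committedRecords()); // prints [a, b, c]
    }
}
```

Because the discarded record is replayed from the restored source offset, it ends up in the committed output exactly once.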

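**2) Required configuration**

In a real application, achieving true end-to-end exactly-once requires some additional configuration:

(1) Checkpointing must be enabled

(2) Set the delivery guarantee of the KafkaSink to DeliveryGuarantee.EXACTLY_ONCE

(3) Configure the isolation level of the consumers that read the data from Kafka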

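The Kafka referred to here is the external system being written to. During the pre-commit phase the data has already been written and is only marked as uncommitted, while Kafka's default isolation level, isolation.level, is read_uncommitted, meaning uncommitted data can be read. An external application could then consume uncommitted data directly, which defeats the transactional guarantee. The isolation level should therefore be set to read_committed: when a consumer encounters an uncommitted message it stops consuming from that partition and resumes only once the message has been marked as committed. Naturally, this adds noticeable latency for the external application.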

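(4) Transaction timeout configuration

The transaction timeout configured in Flink's Kafka connector, transaction.timeout.ms, defaults to 1 hour, whereas the maximum transaction timeout configured on the Kafka cluster, transaction.max.timeout.ms, defaults to 15 minutes. When a checkpoint takes a long time to complete, Kafka may therefore consider the transaction timed out and discard the pre-committed data while the sink task believes it can keep waiting. If the checkpoint then succeeds and a later failure rolls the job back to that checkpoint, this data is lost for good. The former timeout should therefore be less than or equal to the latter.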
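The isolation level and the timeout rule from points (3) and (4) can be captured as plain key/value settings. The sketch below uses only `java.util.Properties`; the class `ExactlyOnceKafkaSettings` and its methods are hypothetical helpers, while the keys `isolation.level` and `transaction.timeout.ms` are the configuration names mentioned above. The broker-side limit is passed in as a number because it lives in the Kafka cluster configuration, not in the client.

```java
import java.util.Properties;

/** Sketch of the client-side settings discussed above; helper names are hypothetical. */
class ExactlyOnceKafkaSettings {

    /** Settings for the downstream consumer that must not see uncommitted records. */
    static Properties downstreamConsumerProperties() {
        Properties props = new Properties();
        props.setProperty("isolation.level", "read_committed");
        return props;
    }

    /** Settings for the transactional producer used by the sink. */
    static Properties producerProperties(long transactionTimeoutMs) {
        Properties props = new Properties();
        props.setProperty("transaction.timeout.ms", Long.toString(transactionTimeoutMs));
        return props;
    }

    /** The producer timeout must not exceed the broker's transaction.max.timeout.ms. */
    static boolean timeoutsAreCompatible(Properties producerProps, long brokerMaxTimeoutMs) {
        long producerTimeoutMs = Long.parseLong(producerProps.getProperty("transaction.timeout.ms"));
        return producerTimeoutMs <= brokerMaxTimeoutMs;
    }

    public static void main(String[] args) {
        long brokerMaxMs = 15 * 60 * 1000L;  // broker default of 15 minutes
        Properties tenMinutes = producerProperties(10 * 60 * 1000L);
        Properties oneHour = producerProperties(60 * 60 * 1000L);
        System.out.println(timeoutsAreCompatible(tenMinutes, brokerMaxMs)); // prints true
        System.out.println(timeoutsAreCompatible(oneHour, brokerMaxMs));    // prints false
        System.out.println(downstreamConsumerProperties().getProperty("isolation.level"));
    }
}
```

Running such a check at startup is one way to catch a producer timeout that the brokers would reject or cut short; raising the broker limit instead is equally valid.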

public class KafkaEOSDemo {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// The code uses HDFS, so the Hadoop dependency must be imported and the HDFS user name specified

System.setProperty("HADOOP_USER_NAME", "atguigu");

// TODO 1. Enable checkpointing in exactly-once mode

env.enableCheckpointing(5000, CheckpointingMode.EXACTLY_ONCE);

CheckpointConfig checkpointConfig = env.getCheckpointConfig();

checkpointConfig.setCheckpointStorage("hdfs://hadoop102:8020/chk");

checkpointConfig.setExternalizedCheckpointCleanup(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

// TODO 2. Read from Kafka

KafkaSource<String> kafkaSource = KafkaSource.<String>builder()

.setBootstrapServers("hadoop102:9092,hadoop103:9092,hadoop104:9092")

.setGroupId("atguigu")

.setTopics("topic_1")

.setValueOnlyDeserializer(new SimpleStringSchema())

.setStartingOffsets(OffsetsInitializer.latest())

.build();

DataStreamSource<String> kafkasource = env

.fromSource(kafkaSource, WatermarkStrategy.forBoundedOutOfOrderness(Duration.ofSeconds(3)), "kafkasource");

/**

* TODO 3. Write to Kafka

* Exactly-once writes to Kafka require all of the following conditions

* 1. Checkpointing is enabled

* 2. The sink's delivery guarantee is set to exactly-once

**自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。**

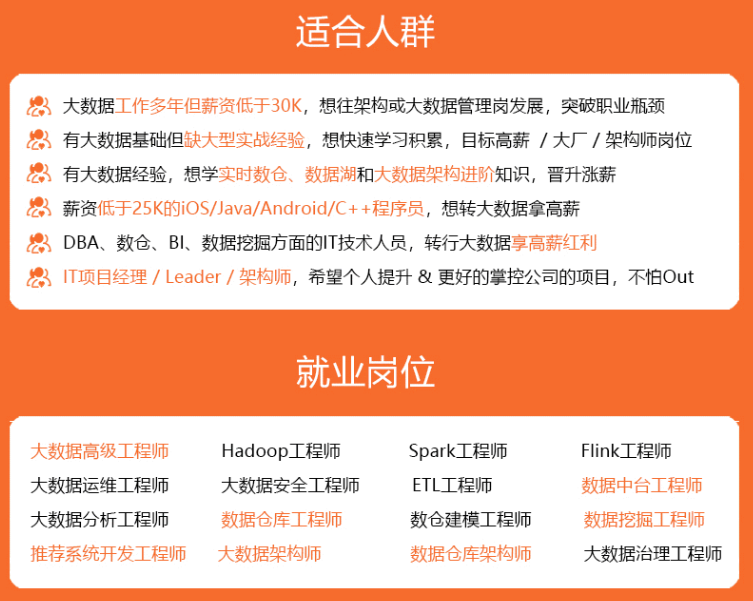

**深知大多数大数据工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!**

**因此收集整理了一份《2024年大数据全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。**

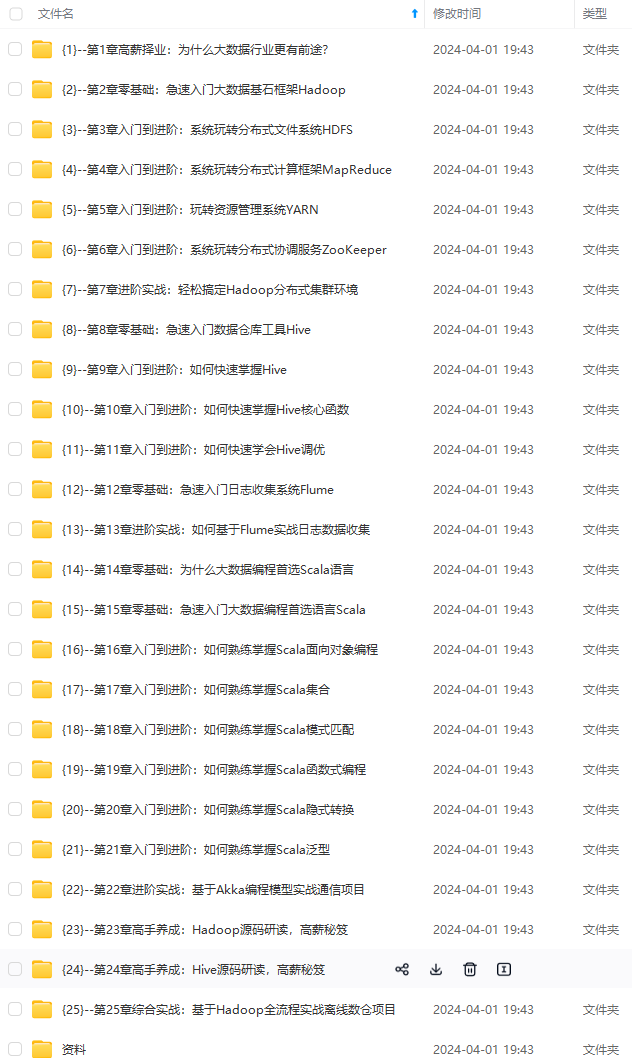

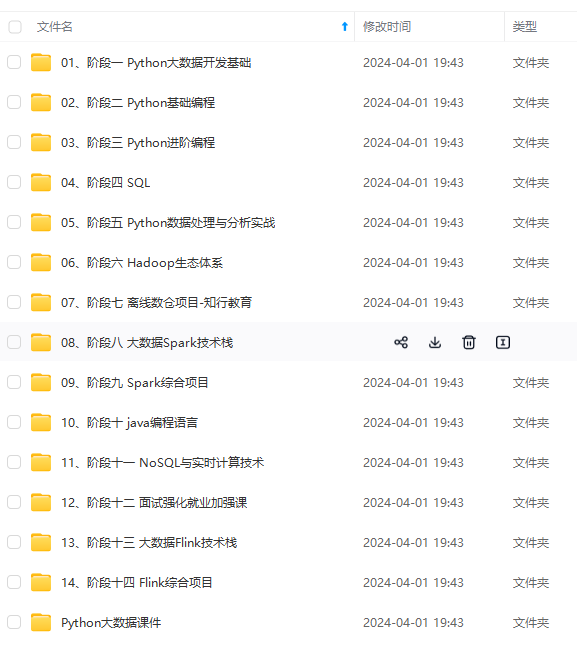

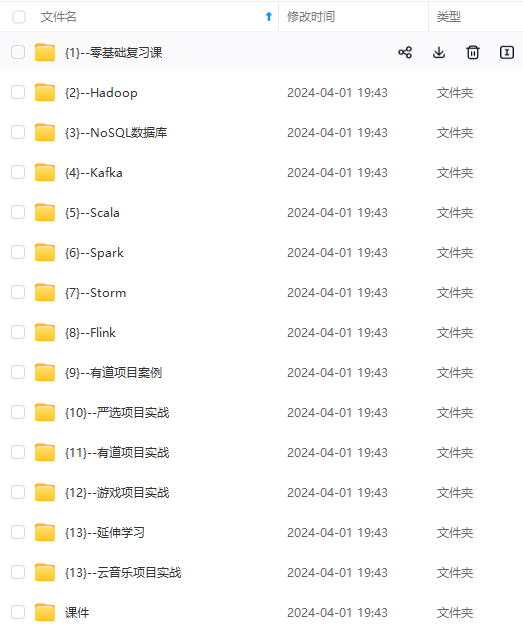

**既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上大数据开发知识点,真正体系化!**

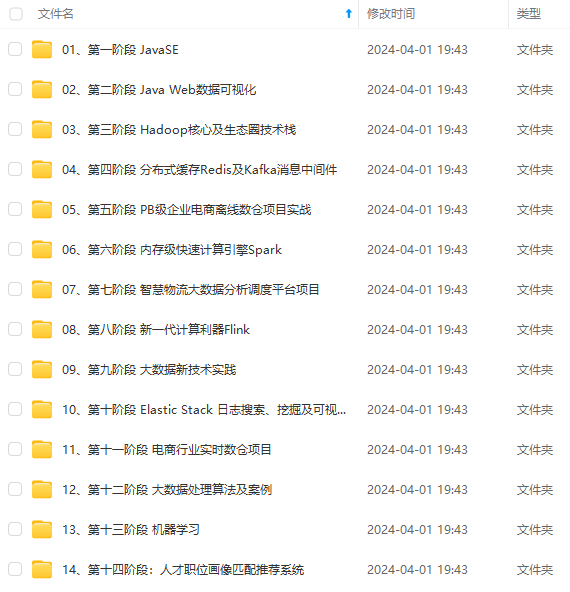

**由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新**

**如果你觉得这些内容对你有帮助,可以添加VX:vip204888 (备注大数据获取)**

**一个人可以走的很快,但一群人才能走的更远。不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎扫码加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!**

升的进阶课程,基本涵盖了95%以上大数据开发知识点,真正体系化!**

**由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新**

**如果你觉得这些内容对你有帮助,可以添加VX:vip204888 (备注大数据获取)**

[外链图片转存中...(img-NjVJ4UY6-1712962084123)]

**一个人可以走的很快,但一群人才能走的更远。不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎扫码加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!**

313

313

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?