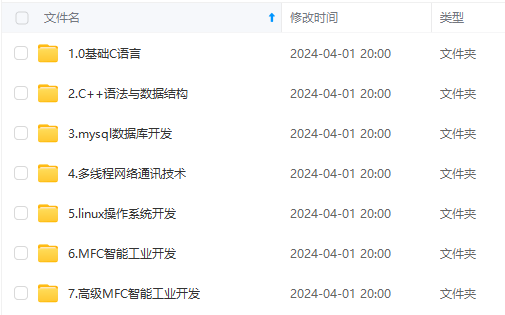

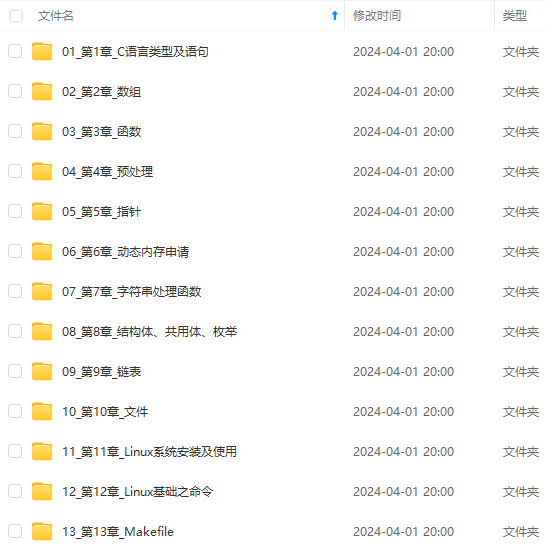

```python
import numpy as np
import pickle
from collections import OrderedDict
from common.layers import *


class DeepConvNet:
    '''Deep convolutional network (3x3 filters).

    Structure (6 conv layers + 3 pooling layers, dropout after the fully connected layers):
        conv-relu-conv-relu-pool- [16 filters]
        conv-relu-conv-relu-pool- [32 filters]
        conv-relu-conv-relu-pool- [64 filters]
        affine-relu-dropout-affine-dropout-softmax
    '''

    def __init__(self, input_dim=(1, 28, 28),
                 conv1={'filterNum': 16, 'filterSize': 3, 'pad': 1, 'stride': 1},
                 conv2={'filterNum': 16, 'filterSize': 3, 'pad': 1, 'stride': 1},
                 conv3={'filterNum': 32, 'filterSize': 3, 'pad': 1, 'stride': 1},
                 conv4={'filterNum': 32, 'filterSize': 3, 'pad': 2, 'stride': 1},
                 conv5={'filterNum': 64, 'filterSize': 3, 'pad': 1, 'stride': 1},
                 conv6={'filterNum': 64, 'filterSize': 3, 'pad': 1, 'stride': 1},
                 hiddenSize=50, outputSize=10):
        # Number of input connections per neuron for each layer that has weights
        pre_n = np.array([1*3*3, 16*3*3, 16*3*3, 32*3*3, 32*3*3, 64*3*3, 64*4*4, hiddenSize])
        # He initialization, because the activation function is ReLU
        weight_inits = np.sqrt(2.0 / pre_n)

        self.params = {}
        pre_channel_n = input_dim[0]  # channel count, updated after every conv layer
        for i, conv in enumerate([conv1, conv2, conv3, conv4, conv5, conv6]):
            self.params['W' + str(i+1)] = weight_inits[i] * np.random.randn(
                conv['filterNum'], pre_channel_n, conv['filterSize'], conv['filterSize'])
            self.params['b' + str(i+1)] = np.zeros(conv['filterNum'])
            pre_channel_n = conv['filterNum']  # update the channel count
        self.params['W7'] = weight_inits[6] * np.random.randn(64*4*4, hiddenSize)
        self.params['b7'] = np.zeros(hiddenSize)
        self.params['W8'] = weight_inits[7] * np.random.randn(hiddenSize, outputSize)
        self.params['b8'] = np.zeros(outputSize)

        # Build the layers (21 in total, counting the final SoftmaxWithLoss)
        self.layers = []
        self.layers.append(Convolution(self.params['W1'], self.params['b1'], conv1['stride'], conv1['pad']))
        self.layers.append(Relu())
        self.layers.append(Convolution(self.params['W2'], self.params['b2'], conv2['stride'], conv2['pad']))
        self.layers.append(Relu())
        self.layers.append(Pooling(pool_h=2, pool_w=2, stride=2))
        self.layers.append(Convolution(self.params['W3'], self.params['b3'], conv3['stride'], conv3['pad']))
        self.layers.append(Relu())
        self.layers.append(Convolution(self.params['W4'], self.params['b4'], conv4['stride'], conv4['pad']))
        self.layers.append(Relu())
        self.layers.append(Pooling(pool_h=2, pool_w=2, stride=2))
        self.layers.append(Convolution(self.params['W5'], self.params['b5'], conv5['stride'], conv5['pad']))
        self.layers.append(Relu())
        self.layers.append(Convolution(self.params['W6'], self.params['b6'], conv6['stride'], conv6['pad']))
        self.layers.append(Relu())
        self.layers.append(Pooling(pool_h=2, pool_w=2, stride=2))
        self.layers.append(Affine(self.params['W7'], self.params['b7']))
        self.layers.append(Relu())
        self.layers.append(Dropout(0.5))
        self.layers.append(Affine(self.params['W8'], self.params['b8']))
        self.layers.append(Dropout(0.5))
        self.last_layer = SoftmaxWithLoss()

    def predict(self, x, train_flg=False):
        for layer in self.layers:
            if isinstance(layer, Dropout):  # only Dropout layers take the training flag
                x = layer.forward(x, train_flg)
            else:
                x = layer.forward(x)
        return x

    def loss(self, x, t):
        y = self.predict(x, train_flg=True)
        return self.last_layer.forward(y, t)

    def accuracy(self, x, t, batch_size=100):
        if t.ndim != 1:
            t = np.argmax(t, axis=1)
        acc = 0.0
        # Samples beyond the last full batch are skipped
        for i in range(x.shape[0] // batch_size):
            tx = x[i*batch_size:(i+1)*batch_size]
            tt = t[i*batch_size:(i+1)*batch_size]
            y = self.predict(tx, train_flg=False)
            y = np.argmax(y, axis=1)
            acc += np.sum(y == tt)
        return acc / x.shape[0]

    def gradient(self, x, t):
        # forward
        self.loss(x, t)
        # backward
        dout = 1
        dout = self.last_layer.backward(dout)
        tmp_layers = self.layers.copy()
        tmp_layers.reverse()
        for layer in tmp_layers:
            dout = layer.backward(dout)

        # Collect gradients from the layers that hold weights and biases
        grads = {}
        for i, layer_i in enumerate((0, 2, 5, 7, 10, 12, 15, 18)):
            grads['W' + str(i+1)] = self.layers[layer_i].dW
            grads['b' + str(i+1)] = self.layers[layer_i].db
        return grads

    def save_params(self, fname='params.pkl'):
        params = {}
        for k, v in self.params.items():
            params[k] = v
        with open(fname, 'wb') as f:
            pickle.dump(params, f)

    def load_params(self, fname='params.pkl'):
        with open(fname, 'rb') as f:
            params = pickle.load(f)
        for k, v in params.items():
            self.params[k] = v
        # Point the weighted layers at the loaded parameters
        for i, layer_i in enumerate((0, 2, 5, 7, 10, 12, 15, 18)):
            self.layers[layer_i].W = self.params['W' + str(i+1)]
            self.layers[layer_i].b = self.params['b' + str(i+1)]
```
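The `64*4*4` input size of the first affine layer follows from the conv and pooling settings in the class above. As a quick check, here is a minimal sketch that traces the feature-map side length through the network using the usual output-size formula `(input + 2*pad - filter) // stride + 1`. The helper names `conv_output_size`, `pool_output_size` and `trace_feature_map_sizes` are made up for this illustration and are not part of `common.layers`.

```python
def conv_output_size(input_size, filter_size, stride=1, pad=0):
    """Side length of a conv layer's output for a square input."""
    return (input_size + 2 * pad - filter_size) // stride + 1


def pool_output_size(input_size, pool_size=2, stride=2):
    """Side length of a pooling layer's output for a square input."""
    return (input_size - pool_size) // stride + 1


def trace_feature_map_sizes(input_size=28):
    """Return (layer name, channels, side length) after each conv/pool layer."""
    # (filterNum, pad) of conv1..conv6, copied from the DeepConvNet defaults;
    # every conv uses a 3x3 filter with stride 1.
    conv_specs = [(16, 1), (16, 1), (32, 1), (32, 2), (64, 1), (64, 1)]
    size = input_size
    trace = []
    for i, (filter_num, pad) in enumerate(conv_specs, start=1):
        size = conv_output_size(size, filter_size=3, stride=1, pad=pad)
        trace.append((f"conv{i}", filter_num, size))
        if i % 2 == 0:  # a 2x2 pooling layer follows every second conv layer
            size = pool_output_size(size)
            trace.append((f"pool{i // 2}", filter_num, size))
    return trace


for name, channels, size in trace_feature_map_sizes():
    print(f"{name}: {channels} x {size} x {size}")
```

With a 28x28 input, the side length goes 28, 28, 14, 14, 16, 8, 8, 8, 4. Note that `conv4` uses `pad=2`, which grows the map from 14 to 16 so that the last pooling layer ends on a clean 4x4 map with 64 channels, i.e. 64*4*4 = 1024 inputs to the affine layer.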

The code below trains the network, checks its accuracy, and saves the learned parameters:

```python
import numpy as np
from dataset.mnist import load_mnist
from deepconv import DeepConvNet
from common.trainer import Trainer

# Load the MNIST dataset (keep the 1x28x28 image shape for the conv layers)
(x_train, t_train), (x_test, t_test) = load_mnist(flatten=False)

# Train the deep CNN
network = DeepConvNet()
trainer = Trainer(network, x_train, t_train, x_test, t_test,
                  epochs=20, mini_batch_size=100,
                  optimizer='Adam', optimizer_param={'lr': 0.001},
                  evaluate_sample_num_per_epoch=1000)
trainer.train()

# Save the learned weights and biases so they can be reloaded later
network.save_params('DeepCNN_Params.pkl')
print('Parameters saved!')
```

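Training with the code above took roughly 6 to 7 hours on my fairly ordinary computer. Next, we load the pkl file that holds the saved weights and biases, see what accuracy this deep CNN reaches, and look at 20 digit images that were not recognized correctly to see what they have in common.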
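Picking out the misrecognized digits comes down to comparing the argmax of the network's output scores with the labels. Here is a minimal NumPy-only sketch of that step, assuming `scores` has the shape that `network.predict` returns for a batch (one row of class scores per image). The helper name `find_misclassified` and the toy arrays are made up for illustration.

```python
import numpy as np


def find_misclassified(scores, labels, max_count=20):
    """Return up to max_count tuples (index, true label, predicted label)."""
    predictions = np.argmax(scores, axis=1)
    if labels.ndim != 1:  # accept one-hot labels as well
        labels = np.argmax(labels, axis=1)
    wrong = np.flatnonzero(predictions != labels)[:max_count]
    return [(int(i), int(labels[i]), int(predictions[i])) for i in wrong]


# Toy data: 4 samples, 3 classes (stand-ins for real network output)
scores = np.array([[0.1, 0.9, 0.0],
                   [0.8, 0.1, 0.1],
                   [0.2, 0.3, 0.5],
                   [0.6, 0.3, 0.1]])
labels = np.array([1, 2, 2, 0])
print(find_misclassified(scores, labels))  # [(1, 2, 0)]
```

The returned indices can then be used to pull the matching images out of `x_test` for plotting.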
