
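```c
#include <stdint.h>

void Munge32( void *data, uint32_t size ) {
    uint32_t *data32 = (uint32_t*) data;
    uint32_t *data32End = data32 + (size >> 2); /* Divide size by 4. */
    uint8_t *data8 = (uint8_t*) data32End;
    uint8_t *data8End = data8 + (size & 0x00000003); /* Strip upper 30 bits. */

    while( data32 != data32End ) {
        *data32 = -*data32; /* increment split out: `*p++ = -*p` is undefined in C */
        data32++;
    }
    while( data8 != data8End ) {
        *data8 = -*data8;
        data8++;
    }
}
```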

This function processes an aligned buffer in 43,043 microseconds and an unaligned buffer in 55,775 microseconds, respectively. Thus, on this test machine, accessing unaligned memory four bytes at a time is *slower* than accessing aligned memory two bytes at a time:

**Figure 8. Single- versus double- versus quad-byte access**

Now for the horror story: processing the buffer eight bytes at a time.

**Listing 4. Munging data eight bytes at a time**

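```c
#include <stdint.h>

void Munge64( void *data, uint32_t size ) {
    double *data64 = (double*) data;
    double *data64End = data64 + (size >> 3); /* Divide size by 8. */
    uint8_t *data8 = (uint8_t*) data64End;
    uint8_t *data8End = data8 + (size & 0x00000007); /* Strip upper 29 bits. */

    /* Negate eight bytes at a time. The increment is split out because
       `*p++ = -*p` is undefined behavior in C. */
    while( data64 != data64End ) {
        *data64 = -*data64;
        data64++;
    }

    /* Handle the 0-7 leftover bytes one at a time. */
    while( data8 != data8End ) {
        *data8 = -*data8;
        data8++;
    }
}
```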

`Munge64` processes an aligned buffer in 39,085 microseconds, about 10% faster than processing the buffer four bytes at a time. However, processing an unaligned buffer takes an amazing 1,841,155 microseconds, roughly 47 times as long as aligned access: an outstanding 4,610% performance penalty!

What happened? Because modern PowerPC processors lack hardware support for unaligned floating-point access, the processor throws an exception *for each unaligned access.* The operating system catches this exception and performs the alignment in software. Here's a chart illustrating the penalty, and when it occurs:

**Figure 9. Multiple-byte access comparison**

The penalties for one-, two- and four-byte unaligned access are dwarfed by the horrendous unaligned eight-byte penalty. Maybe this chart, removing the top (and thus the tremendous gulf between the two numbers), will be clearer:

**Figure 10. Multiple-byte access comparison #2**

There's another subtle insight hidden in this data. Compare eight-byte access speeds on four-byte boundaries:

**Figure 11. Multiple-byte access comparison #3**

Notice that accessing memory eight bytes at a time on four- and twelve-byte boundaries *is slower* than reading the same memory four or even two bytes at a time. While PowerPCs have hardware support for four-byte-aligned eight-byte doubles, you still pay a performance penalty if you rely on that support. Granted, it's nowhere near the 4,610% penalty, but it's certainly noticeable. Moral of the story: accessing memory in large chunks can be slower than accessing it in small chunks if that access is not aligned.

Atomicity

All modern processors offer atomic instructions. These special instructions are crucial for synchronizing two or more concurrent tasks. As the name implies, atomic instructions must be *indivisible* -- that's why they're so handy for synchronization: they can't be preempted.

It turns out that in order for atomic instructions to perform correctly, the addresses you pass them must be at least four-byte aligned. This is because of a subtle interaction between atomic instructions and virtual memory.

If an address is unaligned, accessing it requires at least two memory accesses. But what happens if the desired data spans two pages of virtual memory? The first page could be resident while the second is not. The access would then raise a page fault in the middle of the instruction, running the virtual memory manager's swap-in code and destroying the atomicity of the instruction. To keep things simple and correct, both the 68K and PowerPC require that atomically manipulated addresses always be at least four-byte aligned.

Unfortunately, the PowerPC does not throw an exception when atomically storing to an unaligned address. Instead, the store simply always fails. This is bad because most atomic functions are written to retry after a failed store, on the assumption that they were preempted. Together, these two behaviors mean your program will go into an infinite loop if you attempt to atomically store to an unaligned address. Oops.

Altivec

Altivec is all about speed. Unaligned memory access slows down the processor and costs precious transistors. Thus, the Altivec engineers took a page from the MIPS playbook and simply don't support unaligned memory access. Because Altivec works with sixteen-byte chunks at a time, all addresses passed to Altivec must be sixteen-byte aligned. What's scary is what happens if your address is not aligned.

Altivec won't throw an exception to warn you about the unaligned address. Instead, Altivec simply ignores the lower four bits of the address and charges ahead, *operating on the wrong address*. This means your program may silently corrupt memory or return incorrect results if you don't explicitly make sure all your data is aligned.

There is an advantage to Altivec's bit-stripping ways. Because you don't need to explicitly truncate (align-down) an address, this behavior can save you an instruction or two when handing addresses to the processor.

This is not to say Altivec can't process unaligned memory. You can find detailed instructions on how to do so in the *Altivec Programming Environments Manual*. It requires more work, but because memory is so slow compared to the processor, the overhead for such shenanigans is surprisingly low.

Structure alignment

Examine the following structure:

**Listing 5. An innocent structure**

```c
typedef struct {
    char a;
    long b;
    char c;
} Struct;
```

What is the size of this structure in bytes? Many programmers will answer "6 bytes." It makes sense: one byte for `a`, four bytes for `b` (assuming a four-byte `long`) and another byte for `c`. 1 + 4 + 1 equals 6. Here's how it would lay out in memory:

| Field Type | Field Name | Field Offset | Field Size | Field End |
| --- | --- | --- | --- | --- |
| `char` | `a` | 0 | 1 | 1 |
| `long` | `b` | 1 | 4 | 5 |
| `char` | `c` | 5 | 1 | 6 |
| Total Size in Bytes: | | | | 6 |

However, if you were to ask your compiler for `sizeof( Struct )`, chances are the answer you'd get back would be greater than six, perhaps eight or even twenty-four. There are two reasons for this: backwards compatibility and efficiency.

First, backwards compatibility. Remember the 68000 was a processor with two-byte memory access granularity, and would throw an exception upon encountering an odd address. If you were to read from or write to field `b`, you'd attempt to access an odd address. If a debugger weren't installed, the old Mac OS would throw up a System Error dialog box with one button: Restart. Yikes!

So, instead of laying out your fields just the way you wrote them, the compiler *padded* the structure so that `b` and `c` would reside at even addresses:

| Field Type | Field Name | Field Offset | Field Size | Field End |
| --- | --- | --- | --- | --- |
| `char` | `a` | 0 | 1 | 1 |
| *padding* | | 1 | 1 | 2 |
| `long` | `b` | 2 | 4 | 6 |
| `char` | `c` | 6 | 1 | 7 |
| *padding* | | 7 | 1 | 8 |
| Total Size in Bytes: | | | | 8 |

Padding is the act of adding otherwise unused space to a structure to make fields line up in a desired way. Now, when the 68020 came out with built-in hardware support for unaligned memory access, this padding was unnecessary. However, it didn't hurt anything, and it even helped a little in performance.

The second reason is efficiency. Nowadays, on PowerPC machines, two-byte alignment is nice, but four-byte or eight-byte is better. You probably don't care anymore that the original 68000 choked on unaligned structures, but you probably care about potential 4,610% performance penalties, which can happen if a `double` field doesn't sit aligned in a structure of your devising.

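Worked code examples and their memory layouts

The key to understanding memory alignment is to draw the layout! The examples below illustrate this.

If the English above is hard to follow, the article at http://www.cppblog.com/snailcong/archive/2009/03/16/76705.html explains the same ideas directly:

Let's start with a program that introduces the topic: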

```cpp
// Environment: VC6 + Windows SP2
// Program 1
#include <iostream>

using namespace std;

struct st1
{
    char a ;
    int b ;
    short c ;
};

struct st2
{
    short c ;
    char a ;
    int b ;
};

int main()
{
    cout<<"sizeof(st1) is "<<sizeof(st1)<<endl;
    cout<<"sizeof(st2) is "<<sizeof(st2)<<endl;
    return 0 ;
}
```

The program prints:

```
sizeof(st1) is 12
sizeof(st2) is 8
```

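Here is the puzzle: these two structures contain the same members, so why do they report different sizes?

The main goal of this article is to explain why.

The answer is memory alignment: its effects are what make the results differ.

For most programmers, memory alignment is essentially transparent; it is the compiler's job. The compiler places each data unit of the program at a suitable position, which is why structures with the same members declared in a different order can have different sizes.

So why does the compiler align memory at all? Taken at face value, sizeof(st1) and sizeof(st2) in Program 1 should both be 7: 4 (int) + 2 (short) + 1 (char) = 7. After alignment, however, the structures actually take up more space.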

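Before explaining what alignment is for, let's first look at the alignment rules:

1. The first member of a structure is placed at offset 0. Every subsequent member's offset must be a multiple of min(the value specified by #pragma pack(), the member's own size).

2. Once every member has been aligned, the structure (or union) itself must also be aligned, to the smaller of the value specified by #pragma pack and the size of the structure's (or union's) largest member; that is, its total size is rounded up to a multiple of that value.

#pragma pack(n) sets the alignment to n bytes. VC6 defaults to 8-byte alignment.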

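Using Program 1 to illustrate the rules:

st1: the char takes one byte and starts at offset 0. The int takes 4 bytes, and min(#pragma pack value, member size) = min(8, 4) = 4 (VC6 defaults to 8-byte alignment), so the int is 4-byte aligned and its starting offset must be a multiple of 4. Its offset is therefore 4, and the compiler inserts 3 extra bytes after the char that hold no data. The short takes 2 bytes and is 2-byte aligned; its starting offset, 8, is already a multiple of 2, so no extra bytes are needed. That completes rule 1 (member alignment), and memory now looks like this:

```
oxxx|oooo|oo
0123 4567 89    (address)
```

(x marks a padding byte)

That is 10 bytes so far. Next, the structure itself must be aligned, using the smaller of the #pragma pack value and the size of the structure's largest member. The largest member of st1 is the int, at 4 bytes, while the default #pragma pack value is 8, so the structure is 4-byte aligned: its total size must be a multiple of 4. Adding 2 more padding bytes brings the total to 12. Memory now looks like this:

```
oxxx|oooo|ooxx
0123 4567 89ab    (address)
```

Alignment is now complete: st1 occupies 12 bytes instead of 7.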

st2 is aligned the same way as st1; working it out is left to the reader.

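Now let's look at another example (http://www.cppblog.com/cc/archive/2006/08/01/10765.html).

Memory alignment

In our programs, data structures, variables and so on all occupy memory, and many systems require memory to be aligned when it is allocated. The benefit is faster memory access.

Again, let's start with a simple program:

Program 1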

```cpp
#include <iostream>
using namespace std;

struct X1
{
    int i;   // 4 bytes
    char c1; // 1 byte
    char c2; // 1 byte
};

struct X2
{
    char c1; // 1 byte
    int i;   // 4 bytes
    char c2; // 1 byte
};

struct X3
{
    char c1; // 1 byte
    char c2; // 1 byte
    int i;   // 4 bytes
};

int main()
{
    cout<<"long "<<sizeof(long)<<"\n";
    cout<<"float "<<sizeof(float)<<"\n";
    cout<<"int "<<sizeof(int)<<"\n";
    cout<<"char "<<sizeof(char)<<"\n";

    X1 x1;
    X2 x2;
    X3 x3;
    cout<<"size of x1 "<<sizeof(x1)<<"\n";
    cout<<"size of x2 "<<sizeof(x2)<<"\n";
    cout<<"size of x3 "<<sizeof(x3)<<"\n";
    return 0;
}
```

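This program is simple: it defines three structures, X1, X2 and X3, which differ only in the order of their members. It also prints the sizes of several basic types and of the three structures.

On the author's platform (where `long` is 4 bytes), the program prints:

```
long 4
float 4
int 4
char 1
size of x1 8
size of x2 12
size of x3 8
```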

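The first four lines hold no surprises, but the last three show that the three structures occupy different amounts of memory. The cause is the order of their members. Why does that happen?

That is where memory alignment comes in.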

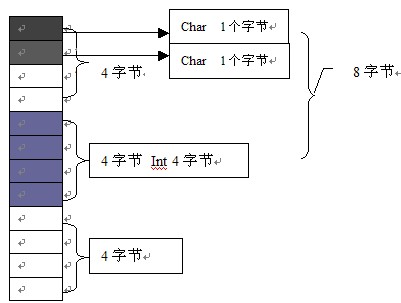

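Memory is a contiguous block. We can represent it with the diagram below, using 4 bytes as one alignment unit:

Figure 1

Let's look at how the three structures are laid out in memory: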

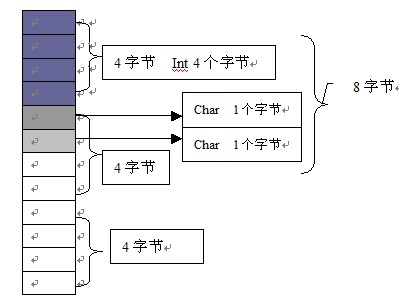

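First, X1, as shown in the figure:

The first member of X1 is an int, which occupies 4 bytes, so it fills the first four cells. The second member is a char, which occupies only one byte, so it takes the first cell of the second 4-byte block. The third member is also a char, also one byte, so it goes in the second cell of the second block. Together the two chars still fit within one block, so that is how the three members sit in memory. Because memory is aligned in blocks, the result is 8 rather than 6: the last two cells count as used.

Next, X2, as shown in the figure:

The first member of X2 is a char, which occupies one byte, so it goes in the first cell of the first block. The second member is an int, which occupies 4 bytes. The first block has one cell used and three left, which cannot hold the int; because of alignment, the int has to go into the second block. The third member, another char, is handled like the first and starts a new block. Because memory is aligned in blocks, X2 occupies not 8 cells but 12.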

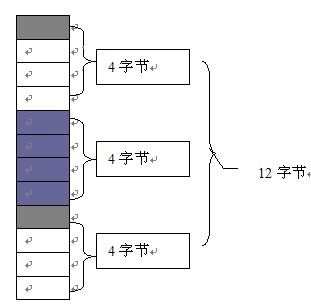

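Finally, X3, as shown in the figure:

The explanation for X3 is similar to that for X1, except that the two one-byte members come first. After the previous two cases, this one should be easy to follow.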


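Many people know that memory alignment is the cause, but few explain the basic principles behind it. The author quoted above does exactly that.

III. Possible consequences of not understanding memory alignment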

* Your software may hit performance-killing unaligned memory access exceptions, which invoke *very* expensive alignment exception handlers.

* Your application may attempt to atomically store to an unaligned address, causing your application to lock up.

* Your application may attempt to pass an unaligned address to Altivec, resulting in Altivec reading from and/or writing to the wrong part of memory, silently corrupting data or yielding incorrect results.

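IV. Planning for memory alignment

1. Why memory is aligned

Most references give these reasons:

(1) Platform (portability) reasons: not every hardware platform can access any data at any address. Some platforms can fetch certain types of data only at certain addresses and raise a hardware exception otherwise.

(2) Performance reasons: data structures (especially the stack) should be aligned on natural boundaries wherever possible, because an unaligned access may require the processor to make two memory accesses, whereas an aligned access needs only one.

2. Alignment rules

Each compiler on a given platform has its own default "alignment factor" (also called the alignment modulus). Programmers can change it with the preprocessor directive #pragma pack(n), where n = 1, 2, 4, 8 or 16 is the alignment factor to use.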
