既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上C C++开发知识点,真正体系化!

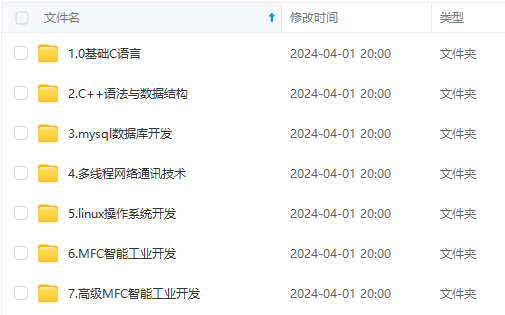

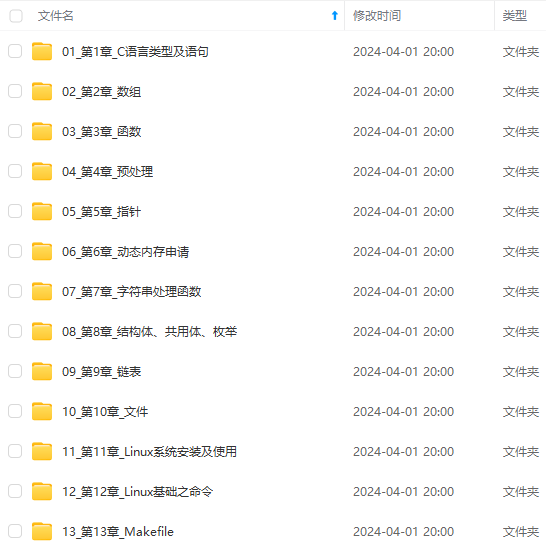

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

FILE* fpfile;

char* filename;

int CMBCamera::StartCamera(int &width, int &height, std::string strCameraVPid)

{

int ret = 0;

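// Dump YUV frames to a file for testing

QDateTime localTime = QDateTime::currentDateTime(); // current system time

QString currentTime = localTime.toString("yyyyMMddhhmmss"); // format as a timestamp

char* LocalTime = qstringToChar(currentTime); // QString -> char*

// Size the buffer from its parts (strlen needs <cstring>) instead of a fixed 50 bytes

filename = new char[strlen(SAVEPICTURE) + strlen(LocalTime) + strlen(PICTURETAIL) + 1];

strcpy(filename, SAVEPICTURE); // build the name: prefix + timestamp + suffix

strcat(filename, LocalTime);

strcat(filename, PICTURETAIL);

fpfile = fopen(filename, "wb+"); // open for binary read/write, truncating any existing file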

if(fpfile == NULL)

{

qDebug()<< "create yuv file failure ";

return 0;

}

qDebug() << "StartCamera Start! width = " << width << " height = " << height << " strCameraVPid = " << strCameraVPid.c_str();

m_CurrentWidth = width;

m_CurrentHeight = height;

m_strCameraVPid = GetVPID(strCameraVPid);

bStart = true;

ret = open_device();

if(ret == 0)

{

qDebug() << "StartCamera Fail -> open_device Fail!";

return 0;

}

printSolution(fd);

ret = init_device();

if(ret == 0)

{

qDebug() << "StartCamera Fail -> init_device Fail!";

return 0;

}

start_capturing();

sleep(1);

initFrameCallBackFun(); // start the thread that reads frames

// Report back the resolution the camera actually uses

if(m_CurrentWidth != width || m_CurrentHeight != height)

{

width = m_CurrentWidth;

height = m_CurrentHeight;

}

m_pCacheThread = new Poco::Thread();

m_pCacheRa = new Poco::RunnableAdapter<CMBCamera>(*this,&CMBCamera::CacheVideoFrame); // thread that processes cached frames

m_pCacheThread->start(*m_pCacheRa);

qDebug() << "StartCamera Success! m_CurrentWidth = " << m_CurrentWidth << " m_CurrentHeight = " << m_CurrentHeight;

return 1;

}
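StartCamera builds the dump file name from a prefix, a timestamp, and a suffix using raw `char` buffers. Below is a minimal sketch of the same idea with `std::string` and `std::strftime`, which avoids sizing the buffer by hand. `makeDumpFileName` and its parameters are hypothetical names, not part of CMBCamera; the prefix and suffix stand in for `SAVEPICTURE` and `PICTURETAIL`, whose values the listing does not show.

```cpp
#include <ctime>
#include <string>

// Hypothetical helper: prefix + 14-digit timestamp + suffix.
std::string makeDumpFileName(const std::string& prefix,
                             const std::tm& when,
                             const std::string& suffix)
{
    char stamp[32] = {0};
    // %Y%m%d%H%M%S corresponds to the Qt pattern "yyyyMMddhhmmss" used above.
    if (std::strftime(stamp, sizeof(stamp), "%Y%m%d%H%M%S", &when) == 0)
        return prefix + suffix; // formatting failed; fall back to no timestamp
    return prefix + stamp + suffix;
}
```

The returned string can be passed to `fopen` through `c_str()`, and it needs no matching `delete[]` in StopCamera.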

int CMBCamera::StopCamera()

{

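// Close the YUV test dump file

delete []filename;

filename = NULL;

// fpfile is NULL if StartCamera failed to create the file; fclose(NULL) is undefined

if (fpfile != NULL)

{

fclose(fpfile);

fpfile = NULL;

}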

bStart = false;

StopReadThread();

stop_capturing();

uninit_device();

close_device();

this->fd = -1;

free(setfps);

setfps = NULL;

//free(CaptureVideoBuffer);

sleep(1);

qDebug() << "StopCamera Success!";

return 0;

}

void CMBCamera::close_device(void)

{

g_vcV4l2Pix.clear();

if (-1 == close(fd))

qDebug() << "close";

fd = -1;

qDebug() << "close_device() ok\n";

}

void CMBCamera::uninit_device(void)

{

unsigned int i;

switch (io)

{

case IO_METHOD_READ:

free(buffers[0].start);

break;

case IO_METHOD_MMAP:

for (i = 0; i < n_buffers; ++i)

if (-1 == munmap(buffers[i].start, buffers[i].length))

qDebug() << "munmap";

break;

case IO_METHOD_USERPTR:

for (i = 0; i < n_buffers; ++i)

free(buffers[i].start);

break;

}

free(buffers);

buffers = NULL;

qDebug() << "uninit_device() ok\n";

//MBLogInfo("uninit_device() ok");

}

void CMBCamera::stop_capturing(void)

{

enum v4l2_buf_type type;

switch (io)

{

case IO_METHOD_READ:

break;

case IO_METHOD_MMAP:

case IO_METHOD_USERPTR:

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (-1 == xioctl(fd, VIDIOC_STREAMOFF, &type))

qDebug() << "VIDIOC_STREAMOFF";

break;

}

qDebug() << "stop_capturing() ok\n";

//MBLogInfo("stop_capturing() ok");

}

bool CMBCamera::StopReadThread()

{

qDebug() << "StopReadThread()\n";

//CMediaEngineUtils::MBSleepThread(100);

QThread::msleep(100);

// C_CONFIG::CConfig::SleepMS(100);

//MBLogInfo("enter");

if (m_ThreadRead)

{

//MBLogInfo("Release Thread[%p].", m_ThreadRead);

m_ThreadRead->join();

delete (m_ThreadRead);

m_ThreadRead = NULL;

}

//MBLogInfo("leave");

return true;

}

void CMBCamera::initFrameCallBackFun()

{

StartReadThread();

}

bool CMBCamera::StartReadThread()

{

qDebug() << "StartReadThread()";

if (NULL == m_ThreadRead)

{

m_ThreadRead = new Poco::Thread();

qDebug() << "Create m_Thread=" << m_ThreadRead;

}

m_ThreadRead->start(*this);

//bInit = true;

qDebug() << "bReadThreadStarted leave.";

return true;

}

void CMBCamera::run()

{

ThreadWrapperThreadFunc(this);

printf("run() end!!\n");

}

void* CMBCamera::ThreadWrapperThreadFunc(void* me)

{

CMBCamera* reporter = static_cast<CMBCamera*>(me);

if (NULL != reporter)

reporter->FrameThreadFunc();

else

qDebug() << "me = " << me ;

return NULL;

}

void CMBCamera::FrameThreadFunc()

{

void* buf = NULL;

lock_dev.lock();

for (;;)

{

// printf("FrameThreadFunc() bStart:%d\n", bStart);

fd_set fds;

struct timeval tv;

int r;

if (fd <= 0 || !bStart)

{

break;

// usleep(100);

// continue;

}

FD_ZERO(&fds);

FD_SET(fd, &fds);

tv.tv_sec = 1;

tv.tv_usec = 0;

if (fd <= 0 || !bStart)

{

break;

}

r = select(fd + 1, &fds, NULL, NULL, &tv);

if (-1 == r)

{

if (EINTR == errno)

continue;

// Any other select() error ends the loop instead of falling through to read_frame()

qDebug() << "select error:" << strerror(errno);

break;

}

if (0 == r)

{

// Timeout: no frame arrived within 1 second; log it and wait again

fprintf(stderr, "select timeout\n");

continue;

}

if (fd <= 0 || !bStart)

{

break;

}

read_frame();

}

qDebug() << "FrameThreadFunc() return";

//MBLogInfo("FrameThreadFunc() return");

// StopReadThread();

lock_dev.unlock();

return;

}

int CMBCamera::read_frame(void)

{

//printf("read_frame()\n");

struct v4l2_buffer buf;

unsigned int i;

switch (io)

{

case IO_METHOD_READ:

if (-1 == read(fd, buffers[0].start, buffers[0].length))

{

switch (errno)

{

case EAGAIN:

return 0;

case EIO:

default:

qDebug() << "read";

return 0;

}

}

if (fd <= 0 || !bStart)

break;

printf("--------222-----\r\n");

//videoFrameCB(buffers[0].start, buffers[0].length, NULL, NULL);

m_cacheBufMutex.lock();

m_cacheBuf.assign((const unsigned char*)buffers[0].start, buffers[0].length);

m_cacheBufMutex.unlock();

m_cacheEvent.set();

// process_image(buffers[0].start, buffers[0].length);

break;

case IO_METHOD_MMAP:

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

if (-1 == xioctl(fd, VIDIOC_DQBUF, &buf)) // dequeue a filled buffer

{

switch (errno)

{

case EAGAIN:

return 0;

case EIO:

default:

// After a failed VIDIOC_DQBUF, buf.index is not valid, so never fall through

qDebug() << "VIDIOC_DQBUF failed, fd:" << fd << "isStart:" << bStart << "errno:" << errno;

return 0;

}

}

if (fd <= 0 || !bStart)

break;

assert(buf.index < n_buffers);

// printf("---------3333----\r\n");

//videoFrameCB(buffers[buf.index].start, buffers[buf.index].length, NULL,NULL);

m_cacheBufMutex.lock();

m_cacheBuf.assign((const unsigned char *)(buffers[buf.index].start), buffers[buf.index].length);

m_cacheBufMutex.unlock();

m_cacheEvent.set();

// process_image(buffers[buf.index].start, buffers[buf.index].length);

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf)) // hand the buffer back to the driver queue

{

qDebug() << "VIDIOC_QBUF";

return 0;

}

break;

case IO_METHOD_USERPTR:

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_USERPTR;

if (-1 == xioctl(fd, VIDIOC_DQBUF, &buf))

{

switch (errno)

{

case EAGAIN:

return 0;

case EIO:

default:

qDebug() << "VIDIOC_DQBUF";

return 0;

}

}

for (i = 0; i < n_buffers; ++i)

if (buf.m.userptr == (unsigned long)buffers[i].start &&

buf.length == buffers[i].length)

break;

if (fd <= 0 || !bStart)

break;

assert(i < n_buffers);

printf("----------hhjjj=======\r\n");

//videoFrameCB((void*)buf.m.userptr, 0, NULL, NULL);

// process_image((void*)buf.m.userptr, 0);

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))

{

qDebug() << "VIDIOC_QBUF";

return 0;

}

break;

}

return 1;

}

int CMBCamera::open_device(void)

{

qDebug() << "CMBCamera::open_device Start!";

bool bFind = false;

int nCameraId = 0;

for (int i = 0; i < 10; i++)

{

if (isVaildCamera(i, m_strCameraVPid) == 0)

{

bFind = true;

nCameraId = i;

break;

}

}

if(!bFind)

{

qDebug() << "CMBCamera::open_device isVaildCamera Fail!";

return 0;

}

// nCameraId = SmartSeeMediaConfigManager::GetInstance()->mVideoCameraId;

char szDevName[256] = {0};

sprintf(szDevName, "/dev/video%d", nCameraId);

qDebug() << "CMBCamera::open_device" << szDevName;

struct stat st;

dev_name = szDevName;//"/dev/video0";

if (-1 == stat(szDevName, &st))

{

fprintf(stderr, "Cannot identify %s: %d, %s\n", szDevName, errno,strerror(errno));

qDebug() << "open_device() stat Fail!";

return 0;

//exit(EXIT_FAILURE);

}

if (!S_ISCHR(st.st_mode))

{

fprintf(stderr, "%s is no device\n", szDevName);

qDebug() << "open_device() S_ISCHR Fail!";

return 0;

//exit(EXIT_FAILURE);

}

fd = open(szDevName, O_RDWR | O_NONBLOCK, 0);

if (-1 == fd)

{

fprintf(stderr, "Cannot open '%s': %d, %s\n", szDevName, errno,

strerror(errno));

qDebug() << "open_device() open Fail!";

return 0;

//exit(EXIT_FAILURE);

}

qDebug() << "open_device() ok";

return 1;

}

void CMBCamera::printSolution(int fd)

{ // enumerate the pixel formats and resolutions the camera supports

enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

struct v4l2_fmtdesc fmt_1;

struct v4l2_frmsizeenum frmsize;

structFromatRecord formatRecord;

// struct v4l2_pix_format pixFormat;

// ClearVideoInParamsList();

qDebug() << "printSolution Camera Support param";

CLEAR(fmt_1); // zero the struct, including its reserved fields

fmt_1.index = 0;

fmt_1.type = type;

while (ioctl(fd, VIDIOC_ENUM_FMT, &fmt_1) >= 0)

{

CLEAR(frmsize);

frmsize.pixel_format = fmt_1.pixelformat;

frmsize.index = 0;

while (ioctl(fd, VIDIOC_ENUM_FRAMESIZES, &frmsize) >= 0)

{

// Record each supported width/height for this pixel format

//V4L2_PIX_FMT_YUYV 1448695129

//V4L2_PIX_FMT_YYUV 1448434009

//V4L2_PIX_FMT_MJPEG 1196444237

formatRecord.pixfromat = fmt_1.pixelformat;

//formatRecord.fps = getfps.parm.capture.timeperframe.denominator;

if (frmsize.type == V4L2_FRMSIZE_TYPE_DISCRETE)

{

formatRecord.width = frmsize.discrete.width;

formatRecord.height = frmsize.discrete.height;

g_vcV4l2Pix.push_back(formatRecord);

qDebug() << "1.V4L2_FRMSIZE_TYPE_DISCRETE line:" << __LINE__ << " : " << formatRecord.pixfromat << "- " << frmsize.discrete.width << "X" << frmsize.discrete.height;

}

else

{

// Stepwise and continuous sizes are reported through the stepwise member of the union,

// so reading frmsize.discrete here would yield the wrong values; record the maximum size

formatRecord.width = frmsize.stepwise.max_width;

formatRecord.height = frmsize.stepwise.max_height;

g_vcV4l2Pix.push_back(formatRecord);

qDebug() << "2.V4L2_FRMSIZE_TYPE_STEPWISE line:" << __LINE__ << " : " << formatRecord.pixfromat << "- " << frmsize.stepwise.max_width << "X" << frmsize.stepwise.max_height;

}

frmsize.index++;

}

fmt_1.index++;

}

}
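printSolution logs each pixel format as a raw integer (for example 1448695129 for YUYV, as the comments in the function note). Such a code is four ASCII characters packed into 32 bits, lowest byte first, so it can be decoded for more readable logs. A minimal sketch, where `fourccToString` is a hypothetical helper that is not part of the original class:

```cpp
#include <cstdint>
#include <string>

// Hypothetical helper: turn a V4L2 pixel format code into its four-character name.
std::string fourccToString(std::uint32_t fourcc)
{
    std::string name(4, ' ');
    for (int i = 0; i < 4; ++i)
        name[i] = static_cast<char>((fourcc >> (8 * i)) & 0xFF); // byte i, lowest first
    return name;
}
```

For instance, `fourccToString(fmt_1.pixelformat)` could replace the integer in the `qDebug()` output, after converting the result with `c_str()`.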

int CMBCamera::xioctl(int fd, int request, void* arg)

{

int r;

do

r = ioctl(fd, request, arg);

while (-1 == r && EINTR == errno);

return r;

}
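xioctl repeats the `ioctl` call for as long as it fails with `EINTR`, that is, while a signal interrupts the system call before it completes. The same retry pattern can be written once for any call that reports failure as -1 plus `errno`. A minimal sketch, where `retryOnEintr` is a hypothetical name and not part of the original class:

```cpp
#include <cerrno>

// Hypothetical generic form of xioctl: call fn() again while it fails with EINTR.
template <typename Fn>
int retryOnEintr(Fn fn)
{
    int r;
    do {
        r = fn();
    } while (r == -1 && errno == EINTR);
    return r; // the first result that is not an EINTR failure
}
```

A call such as `retryOnEintr([&] { return ioctl(fd, request, arg); })` would then behave like xioctl, and the wrapper could also cover `select` or `read`.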

int CMBCamera::init_device()

{

struct v4l2_capability cap;

struct v4l2_cropcap cropcap;

struct v4l2_crop crop;

struct v4l2_format fmt;

unsigned int min;

int i_pixret = -1;

if (-1 == xioctl(fd, VIDIOC_QUERYCAP, &cap))

{

if (EINVAL == errno)

{

fprintf(stderr, "%s is no V4L2 device\n", dev_name);

// exit(EXIT_FAILURE);

qDebug() << "no V4L2 device!";

return 0;

}

else

{

// cap is not filled in when the ioctl fails, so do not continue

qDebug() << "VIDIOC_QUERYCAP failed, errno:" << errno;

return 0;

}

}

if (!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE))

{

fprintf(stderr, "%s is no video capture device\n", dev_name);

qDebug() << "no video capture device";

//exit(EXIT_FAILURE);

return 0;

}

switch (io)

{

case IO_METHOD_READ:

if (!(cap.capabilities & V4L2_CAP_READWRITE))

{

fprintf(stderr, "%s does not support read i/o\n", dev_name);

qDebug() << "not support read i/o";

return 0;

}

break;

case IO_METHOD_MMAP:

case IO_METHOD_USERPTR:

if (!(cap.capabilities & V4L2_CAP_STREAMING))

{

fprintf(stderr, "%s does not support streaming i/o\n", dev_name);

qDebug() << "not support streaming i/o";

return 0;

}

break;

}

CLEAR(cropcap);

cropcap.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (0 == xioctl(fd, VIDIOC_CROPCAP, &cropcap))

{

CLEAR(crop);

crop.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

crop.c = cropcap.defrect; // reset to the default cropping rectangle

if (-1 == xioctl(fd, VIDIOC_S_CROP, &crop))

{

// Cropping may be unsupported (EINVAL); errors are deliberately ignored

}

}

CLEAR(fmt);

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

// int w = WWIDTH;

// int h = HHEIGHT;

// getSolution(params, w, h);

// setCurrentVideo(m_bDisplayBG, w, h, m_bRestartEncode);

// fmt.fmt.pix.width = w;

// fmt.fmt.pix.height = h;

// //fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

// fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_MJPEG;

// m_iCameraFormat = fmt.fmt.pix.pixelformat;

// Set the capture width, height, and pixel format

// fmt.fmt.pix.width = g_vcV4l2Pix[0].width;

// fmt.fmt.pix.height = g_vcV4l2Pix[0].height;

// Pick the supported resolution closest to the requested one

i_pixret = GetCurrentCamera();

if(i_pixret == 1)

{

fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_MJPEG;

}

else if(i_pixret == 0)

{

fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

}

else {

qDebug() << "GetCurrentCamera error!";

return 0;

}

fmt.fmt.pix.width = m_CurrentWidth;

fmt.fmt.pix.height = m_CurrentHeight;

//fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

//fmt.fmt.pix.pixelformat = g_vcV4l2Pix[0].pixfromat;

//fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_MJPEG;

m_iCameraFormat = fmt.fmt.pix.pixelformat;

fmt.fmt.pix.field = V4L2_FIELD_INTERLACED;

if (-1 == xioctl(fd, VIDIOC_S_FMT, &fmt))

qDebug() << "VIDIOC_S_FMT";

// Set the frame rate

setfps = (struct v4l2_streamparm*)calloc(1, sizeof(struct v4l2_streamparm));

if(set_camera_streamparm(fd, setfps, 30) != 0)

{

qDebug() << "set_camera_streamparm 30FPS fail!";

}

min = fmt.fmt.pix.width * 2;

if (fmt.fmt.pix.bytesperline < min)

fmt.fmt.pix.bytesperline = min;

min = fmt.fmt.pix.bytesperline * fmt.fmt.pix.height;

if (fmt.fmt.pix.sizeimage < min)

fmt.fmt.pix.sizeimage = min;

struct v4l2_streamparm getfps;

CLEAR(getfps);

getfps.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

int ret = ioctl(fd, VIDIOC_G_PARM, &getfps);

if(ret)

{

qDebug() << "VIDIOC_G_PARM to get fps failed : " << ret;

}

else

{

qDebug() << "VIDIOC_G_PARM to get fps succeed : FPS = " << getfps.parm.capture.timeperframe.denominator;

}

switch (io)

{

case IO_METHOD_READ:

init_read(fmt.fmt.pix.sizeimage);

break;

case IO_METHOD_MMAP:

init_mmap();

break;

case IO_METHOD_USERPTR:

init_userp(fmt.fmt.pix.sizeimage);

break;

}

qDebug() << "init_device() ok\n";

return 1;

}

// Find the camera-supported resolution closest to the requested width and height.

// Returns 1 for MJPEG, 0 for YUYV, -1 if neither format is available.

int CMBCamera::GetCurrentCamera()

{

// Start both minima at INT_MAX (<climits>) so the first entry of each format always wins.

// Seeding them from g_vcV4l2Pix[0] could leave the index pointing at an entry of the

// other format, and it reads out of bounds when the list is empty.

int iminMJPEG = INT_MAX;

int iminYUY2 = INT_MAX;

int temp;

int iIndexMJPEG = 0;

int iIndexYUY2 = 0;

bool bIsMJPEG = false;

bool bIsYUY2 = false;

for(int i = 0; i < (int)g_vcV4l2Pix.size(); i++)

{

if(g_vcV4l2Pix[i].pixfromat == V4L2_PIX_FMT_MJPEG)

{

bIsMJPEG = true;

temp = (std::abs((int)g_vcV4l2Pix[i].width - m_CurrentWidth) + std::abs((int)g_vcV4l2Pix[i].height - m_CurrentHeight));

if(iminMJPEG > temp)

{

iminMJPEG = temp;

iIndexMJPEG = i;

}

}

else if(g_vcV4l2Pix[i].pixfromat == V4L2_PIX_FMT_YUYV)

{

bIsYUY2 = true;

temp = (std::abs((int)g_vcV4l2Pix[i].width - m_CurrentWidth) + std::abs((int)g_vcV4l2Pix[i].height - m_CurrentHeight));

if(iminYUY2 > temp)

{

iminYUY2 = temp;

iIndexYUY2 = i;

}

}

}

if(bIsMJPEG)

{

m_CurrentWidth = g_vcV4l2Pix[iIndexMJPEG].width;

m_CurrentHeight = g_vcV4l2Pix[iIndexMJPEG].height;

return 1;

}

else if (bIsYUY2)

{

m_CurrentWidth = g_vcV4l2Pix[iIndexYUY2].width;

m_CurrentHeight = g_vcV4l2Pix[iIndexYUY2].height;

return 0;

}

return -1;

}
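GetCurrentCamera picks, for each pixel format, the entry whose width and height differ least from the requested size, measured as |Δwidth| + |Δheight|. The selection step can be isolated into a function that has no class state and is easy to test. A minimal sketch, where `FormatRecord` and `closestResolution` are hypothetical names standing in for the article's `structFromatRecord` and the loop above:

```cpp
#include <cstddef>
#include <cstdlib>
#include <vector>

// Hypothetical stand-in for the article's structFromatRecord.
struct FormatRecord {
    unsigned int width;
    unsigned int height;
    unsigned int pixelFormat;
};

// Returns the index of the entry with the given pixel format whose size is
// closest (by |dw| + |dh|) to the requested one, or -1 if no entry matches.
int closestResolution(const std::vector<FormatRecord>& formats,
                      unsigned int pixelFormat, int wantWidth, int wantHeight)
{
    int best = -1;
    long bestDistance = 0;
    for (std::size_t i = 0; i < formats.size(); ++i) {
        if (formats[i].pixelFormat != pixelFormat)
            continue; // only compare entries of the requested format
        long distance = std::labs(static_cast<long>(formats[i].width) - wantWidth)
                      + std::labs(static_cast<long>(formats[i].height) - wantHeight);
        if (best == -1 || distance < bestDistance) {
            best = static_cast<int>(i);
            bestDistance = distance;
        }
    }
    return best;
}
```

Calling it once with `V4L2_PIX_FMT_MJPEG` and, if that returns -1, once with `V4L2_PIX_FMT_YUYV` would reproduce the MJPEG-first preference of GetCurrentCamera.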

void CMBCamera::start_capturing(void)

{

unsigned int i;

enum v4l2_buf_type type;

switch (io)

{

case IO_METHOD_READ:

break;

case IO_METHOD_MMAP:

for (i = 0; i < n_buffers; ++i)

{

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = i;

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))

qDebug() << "VIDIOC_QBUF";

}

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (-1 == xioctl(fd, VIDIOC_STREAMON, &type))

qDebug() << "VIDIOC_STREAMON";

break;

case IO_METHOD_USERPTR:

for (i = 0; i < n_buffers; ++i)

{

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_USERPTR;

buf.m.userptr = (unsigned long)buffers[i].start;

buf.length = buffers[i].length;

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))

qDebug() << "VIDIOC_QBUF";

}

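type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

// The listing is cut off here; the lines below mirror the MMAP branch above

if (-1 == xioctl(fd, VIDIOC_STREAMON, &type))

qDebug() << "VIDIOC_STREAMON";

break;

}

}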
