Node plan

| IP address | Hostname | Role |
| --- | --- | --- |
| 192.168.220.10 | master | master node |
| 192.168.220.20 | node1 | node |
| 192.168.220.30 | node2 | node |

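Part 1: Basic environment configuration

Every node needs the environment configuration below.

Configure the yum repository (master, node1, node2)

[root@master ~]# tar -zxvf K8S.tar.gz

Check the kernel version and upgrade if it is too old (master, node1, node2)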

[root@master ~]# uname -r

3.10.0-327.el7.x86_64
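The kernel check can also be scripted. The sketch below compares the running kernel against a minimum using GNU `sort -V`. The function name `kernel_at_least` and the threshold `3.10` are illustrative assumptions; the article does not state which kernel version counts as too old.

```shell
# kernel_at_least CURRENT MINIMUM
# Succeeds when CURRENT is the same as or newer than MINIMUM.
# Relies on version sort (sort -V) from GNU coreutils.
kernel_at_least() {
    local current="$1" minimum="$2"
    # The older version sorts first; if that is MINIMUM, CURRENT is new enough.
    [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n 1)" = "$minimum" ]
}

# The threshold below is a placeholder, not a documented requirement.
if kernel_at_least "$(uname -r)" "3.10"; then
    echo "kernel is new enough"
else
    echo "kernel is older than the chosen minimum; consider 'yum upgrade -y'"
fi
```

Version sort matters here because a plain string comparison would rank 3.9 above 3.10.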

[root@master ~]# yum upgrade -y

Configure host name mapping (master, node1, node2)

[root@master ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.220.10 master

192.168.220.20 node1

192.168.220.30 node2

Disable the firewall, SELinux, and swap (master, node1, node2)

[root@master ~]# systemctl stop firewalld;systemctl disable firewalld

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

[root@master ~]# setenforce 0

[root@master ~]# vi /etc/selinux/config

SELINUX=disabled
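When the same change has to be made on three nodes, editing the file with `sed` avoids opening `vi` each time. This is a minimal sketch; the helper name `set_selinux_disabled` is made up for illustration, and the demonstration runs on a scratch file rather than on /etc/selinux/config.

```shell
# set_selinux_disabled FILE
# Rewrites the SELINUX= line in FILE (normally /etc/selinux/config) to "disabled".
set_selinux_disabled() {
    local file="$1"
    # Only the SELINUX= line is touched; SELINUXTYPE= and comments are left alone.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Demonstration on a scratch copy, so the sketch can be tried without root.
demo="$(mktemp)"
printf '# sample\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
set_selinux_disabled "$demo"
cat "$demo"
```

On a real node the call would be `set_selinux_disabled /etc/selinux/config`. The file only takes effect after a reboot, which is why `setenforce 0` is run as well.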

[root@master ~]# swapoff -a

[root@master ~]# sed -i '/swap/s/^/#/' /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

Configure time synchronization (master, node1, node2)

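[root@master ~]# yum install -y chrony

On the master node, edit /etc/chrony.conf: comment out the default NTP servers, point the server line at the master itself with a local stratum so that it can serve time from its own clock, and allow the other nodes to synchronize from it. Pay attention to which node each command runs on.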

[root@master ~]# sed -i 's/^server/#&/' /etc/chrony.conf

[root@master ~]# vi /etc/chrony.conf

local stratum 10

server master iburst

allow all

On the master node, restart the chronyd service, enable it at boot, and turn on network time synchronization.

[root@master ~]# systemctl enable chronyd;systemctl restart chronyd

[root@master ~]# timedatectl set-ntp true

On node1 and node2, edit /etc/chrony.conf to use the master node on the internal network as the upstream NTP server, then restart the service and enable it at boot.

[root@node1 ~]# sed -i 's/^server/#&/' /etc/chrony.conf

[root@node1 ~]# echo server 192.168.220.10 iburst >> /etc/chrony.conf

[root@node1 ~]# systemctl enable chronyd;systemctl restart chronyd

[root@node2 ~]# sed -i 's/^server/#&/' /etc/chrony.conf

[root@node2 ~]# echo server 192.168.220.10 iburst >> /etc/chrony.conf

[root@node2 ~]# systemctl enable chronyd;systemctl restart chronyd

Run chronyc sources on every node. A line beginning with ^* in the output means synchronization succeeded.
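The `^*` check can be automated instead of read by eye. A minimal sketch follows; the function name `chrony_synced` is hypothetical, and the sample text mirrors the output format shown in this article.

```shell
# chrony_synced: reads `chronyc sources` output on stdin and succeeds
# if at least one line starts with "^*" (the currently selected source).
chrony_synced() {
    grep -q '^\^\*'
}

# Sample text in the same shape as the chronyc output in this article.
sample='210 Number of sources = 1
MS Name/IP address Stratum Poll Reach LastRx Last sample
^* master 10 7 377 578 -120ns[-4319ns] +/- 8856ns'

if printf '%s\n' "$sample" | chrony_synced; then
    echo "synchronized"
else
    echo "not synchronized yet"
fi
```

On a node the function would be fed live output: `chronyc sources | chrony_synced`.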

[root@master ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* master 10 7 377 578 -120ns[-4319ns] +/- 8856ns

[root@node1 ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* master 11 6 37 2 -798ns[ -509us] +/- 19ms

[root@node2 ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* master 11 6 17 54 -2430ns[ -17us] +/- 17ms

Configure IP forwarding: on all nodes, create /etc/sysctl.d/K8S.conf with the following content.

[root@master ~]# vi /etc/sysctl.d/K8S.conf

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl -p /etc/sysctl.d/K8S.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
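Since the file is created by hand on three nodes, a quick check that each key is present and set to 1 can catch typos. The sketch below parses a sysctl-style file with `awk`. The helper name `sysctl_conf_enables` is an assumption for illustration, and the demonstration writes a scratch file instead of reading /etc/sysctl.d/K8S.conf.

```shell
# sysctl_conf_enables FILE KEY
# Succeeds if FILE contains "KEY=1" (spaces around "=" are tolerated).
sysctl_conf_enables() {
    local file="$1" key="$2"
    awk -F= -v key="$key" '
        { k = $1; v = $2; gsub(/[ \t]/, "", k); gsub(/[ \t]/, "", v) }
        k == key && v == "1" { found = 1 }
        END { exit found ? 0 : 1 }
    ' "$file"
}

# Scratch file with the three settings used in this article.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
net.ipv4.ip_forward=1
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
EOF

for key in net.ipv4.ip_forward \
           net.bridge.bridge-nf-call-ip6tables \
           net.bridge.bridge-nf-call-iptables; do
    if sysctl_conf_enables "$conf" "$key"; then
        echo "$key: ok"
    else
        echo "$key: missing or not 1"
    fi
done
```

The two net.bridge keys only exist once the br_netfilter module is loaded, which is why `modprobe br_netfilter` runs before `sysctl -p`.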

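Configure IPVS (all nodes). IPVS is part of the mainline kernel, so enabling it for kube-proxy only requires loading the kernel modules listed below.

Put the modprobe commands in a script so that the modules can be loaded again after a reboot, then use lsmod with grep to confirm that they are loaded.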

[root@master ~]# vi /etc/sysconfig/modules/ipvs.modules

#! /bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

[root@master ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules

[root@master ~]# bash /etc/sysconfig/modules/ipvs.modules ;lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 19149 0

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 143411 2 ip_vs,nf_conntrack_ipv4

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

Install the ipset and ipvsadm packages on all nodes

[root@master ~]# yum install -y ipset ipvsadm

Install Docker. This walkthrough uses Docker 18.09. Install Docker on all nodes, start it, and enable it at boot.

[root@master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@master ~]# yum install -y docker-ce-18.09.6 docker-ce-cli-18.09.6 containerd.io

[root@master ~]# mkdir -p /etc/docker

[root@master ~]# vi /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}
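The same file can be written without an editor, which helps when repeating the step on every node. This is a sketch under the assumption that no other Docker daemon options are needed; the function name `write_docker_daemon_json` is made up, and the demonstration targets a temporary directory rather than /etc/docker.

```shell
# write_docker_daemon_json DIR
# Creates DIR/daemon.json with the systemd cgroup driver setting from this article.
# Note: this overwrites any existing daemon.json in DIR.
write_docker_daemon_json() {
    local dir="$1"
    mkdir -p "$dir"
    cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demonstration in a scratch directory, so nothing under /etc is modified.
demo_dir="$(mktemp -d)"
write_docker_daemon_json "$demo_dir"
cat "$demo_dir/daemon.json"
```

On a node the call would be `write_docker_daemon_json /etc/docker`, followed by the daemon-reload and restart commands.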

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker

[root@master ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master ~]# docker info |grep Cgroup

Cgroup Driver: systemd

Part 2: Install the kubernetes cluster

Install the kubernetes tools on all nodes and start kubelet

[root@master ~]# yum install -y kubelet-1.14.1 kubeadm-1.14.1 kubectl-1.14.1

[root@master ~]# systemctl enable kubelet;systemctl start kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

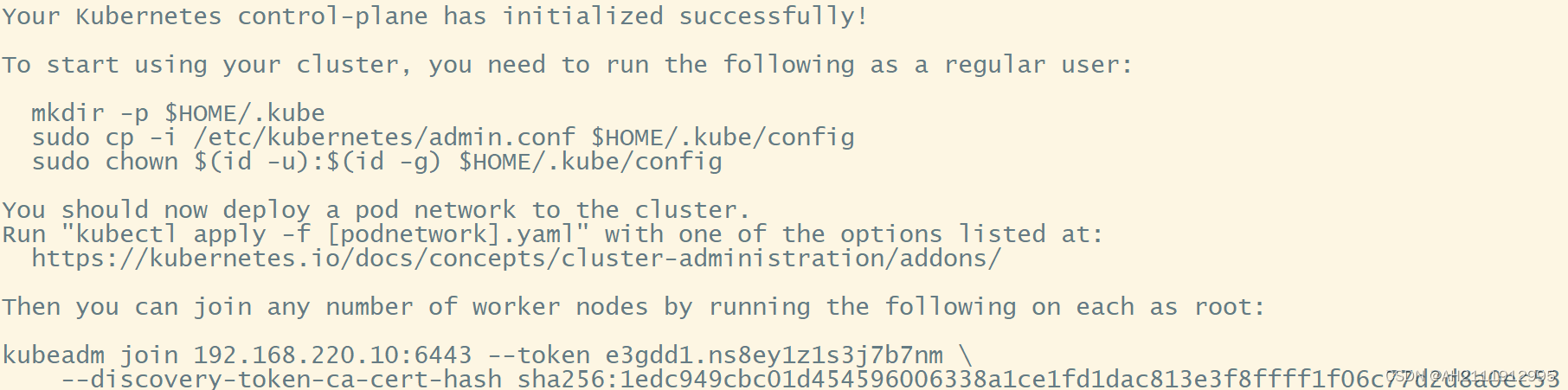

Initialize the kubernetes cluster on the master node

[root@master ~]# ./kubernetes_base.sh

[root@master ~]# kubeadm init --kubernetes-version="v1.14.1" --apiserver-advertise-address=192.168.220.10 --pod-network-cidr=10.16.0.0/16 --image-repository=registry.aliyuncs.com/google_containers

The command prints the initialization result.

kubectl looks for a file named config in the .kube directory under the home directory of the user running it. Configure kubectl as follows:

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster status

[root@master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

Configure the kubernetes network by adding flannel

[root@master ~]# kubectl apply -f yaml/kube-flannel.yaml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

Join node1 and node2 to the cluster

[root@node1 ~]# ./kubernetes_base.sh

[root@node1 ~]# kubeadm join 192.168.220.10:6443 --token e3gdd1.ns8ey1z1s3j7b7nm \

> --discovery-token-ca-cert-hash sha256:1edc949cbc01d454596006338a1ce1fd1dac813e3f8ffff1f06c77d2d8a0ec53

[root@node2 ~]# ./kubernetes_base.sh

[root@node2 ~]# kubeadm join 192.168.220.10:6443 --token e3gdd1.ns8ey1z1s3j7b7nm \

> --discovery-token-ca-cert-hash sha256:1edc949cbc01d454596006338a1ce1fd1dac813e3f8ffff1f06c77d2d8a0ec53

On the master node, check the status of each node

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 8m47s v1.14.1

node1 Ready <none> 76s v1.14.1

node2 Ready <none> 75s v1.14.1

Install the dashboard with kubectl create

[root@master ~]# kubectl create -f yaml/kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

Create the administrator account

[root@master ~]# kubectl create -f yaml/dashboard-adminuser.yaml

serviceaccount/kubernetes-dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

Check the status of all pods

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-8686dcc4fd-n4rqq 1/1 Running 0 12m

coredns-8686dcc4fd-zfzwt 1/1 Running 0 12m

etcd-master 1/1 Running 0 11m

kube-apiserver-master 1/1 Running 0 11m

kube-controller-manager-master 1/1 Running 0 11m

kube-flannel-ds-amd64-cd4wr 1/1 Running 0 11m

kube-flannel-ds-amd64-pqtqq 1/1 Running 0 5m8s

kube-flannel-ds-amd64-vz4hb 1/1 Running 0 5m9s

kube-proxy-2srjf 1/1 Running 0 12m

kube-proxy-8pfz2 1/1 Running 0 5m9s

kube-proxy-sps84 1/1 Running 0 5m8s

kube-scheduler-master 1/1 Running 0 11m

kubernetes-dashboard-5f7b999d65-lkwmj 1/1 Running 0 100s

Look up the dashboard port

[root@master ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 13m

kubernetes-dashboard NodePort 10.98.126.163 <none> 443:30000/TCP 2m52s

The output shows that kubernetes-dashboard is exposed externally on port 30000. Enter https://192.168.220.10:30000 in Firefox to open the kubernetes dashboard.
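Reading the port from the PORT(S) column can be scripted. The sketch below pulls the NodePort out of `kubectl get svc` text with `awk`; the function name `nodeport_of` is hypothetical, and the sample text is copied from the output in this article.

```shell
# nodeport_of SERVICE
# Reads `kubectl get svc` output on stdin and prints the NodePort of SERVICE.
# Only the first port mapping of a service is considered.
nodeport_of() {
    awk -v svc="$1" '$1 == svc && $2 == "NodePort" {
        # PORT(S) looks like 443:30000/TCP; keep what sits between ":" and "/".
        split($5, a, ":"); split(a[2], b, "/"); print b[1]
    }'
}

sample='NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 13m
kubernetes-dashboard NodePort 10.98.126.163 <none> 443:30000/TCP 2m52s'

printf '%s\n' "$sample" | nodeport_of kubernetes-dashboard
```

On a live cluster, `kubectl get svc kubernetes-dashboard -n kube-system -o jsonpath='{.spec.ports[0].nodePort}'` returns the same value without any text parsing.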

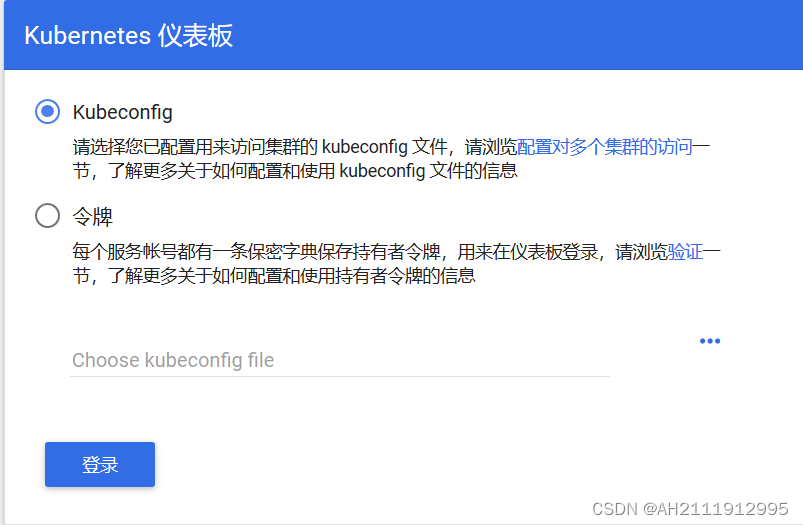

Click Advanced, then click "Accept the Risk and Continue" to reach the kubernetes dashboard login page.

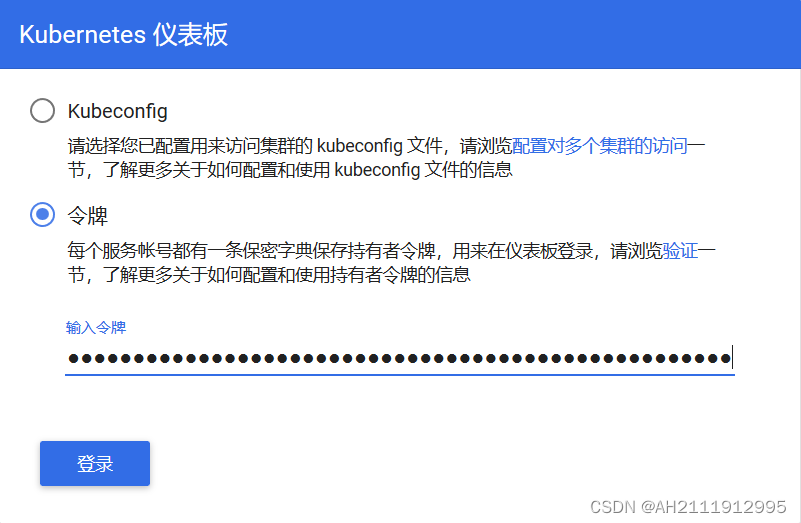

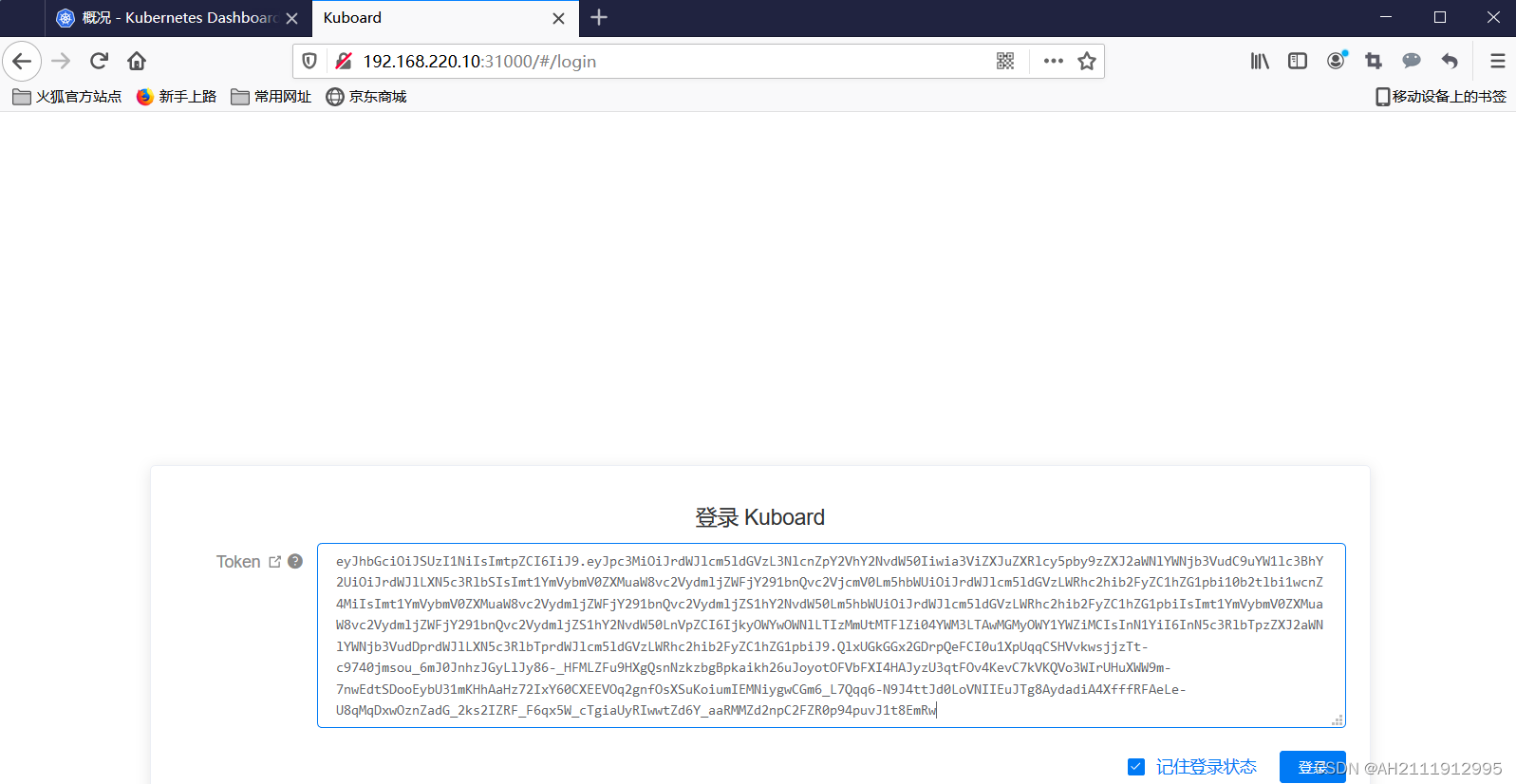

Logging in to the kubernetes dashboard requires a token. Retrieve it with the following command:

[root@master ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret|grep kubernetes-dashboard-admin-token|awk '{print $1}')

Name: kubernetes-dashboard-admin-token-prvx2

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard-admin

kubernetes.io/service-account.uid: 929f09ce-232e-11ef-8ac7-000c29f5afb0

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi1wcnZ4MiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjkyOWYwOWNlLTIzMmUtMTFlZi04YWM3LTAwMGMyOWY1YWZiMCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.QlxUGkGGx2GDrpQeFCI0u1XpUqqCSHVvkwsjjzTt-c9740jmsou_6mJ0JnhzJGyLlJy86-_HFMLZFu9HXgQsnNzkzbgBpkaikh26uJoyotOFVbFXI4HAJyzU3qtFOv4KevC7kVKQVo3WIrUHuXWW9m-7nwEdtSDooEybU31mKHhAaHz72IxY60CXEEVOq2gnfOsXSuKoiumIEMNiygwCGm6_L7Qqq6-N9J4ttJd0LoVNIIEuJTg8AydadiA4XfffRFAeLe-U8qMqDxwOznZadG_2ks2IZRF_F6qx5W_cTgiaUyRIwwtZd6Y_aaRMMZd2npC2FZR0p94puvJ1t8EmRw

ca.crt: 1025 bytes
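Only the token value is needed for the login form, so it can be convenient to filter it out of the describe output. A minimal sketch, with a hypothetical helper `token_from_describe` and a placeholder token in the sample text:

```shell
# token_from_describe: reads `kubectl describe secret` output on stdin
# and prints only the value that follows "token:".
token_from_describe() {
    awk '$1 == "token:" { print $2 }'
}

# Sample in the shape of the describe output; the token is a placeholder.
sample='Name: kubernetes-dashboard-admin-token-prvx2
Type: kubernetes.io/service-account-token
namespace: 11 bytes
token: PLACEHOLDER.TOKEN.VALUE
ca.crt: 1025 bytes'

printf '%s\n' "$sample" | token_from_describe
```

On the master node, the describe command from this section would be piped into `token_from_describe` in place of the sample text.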

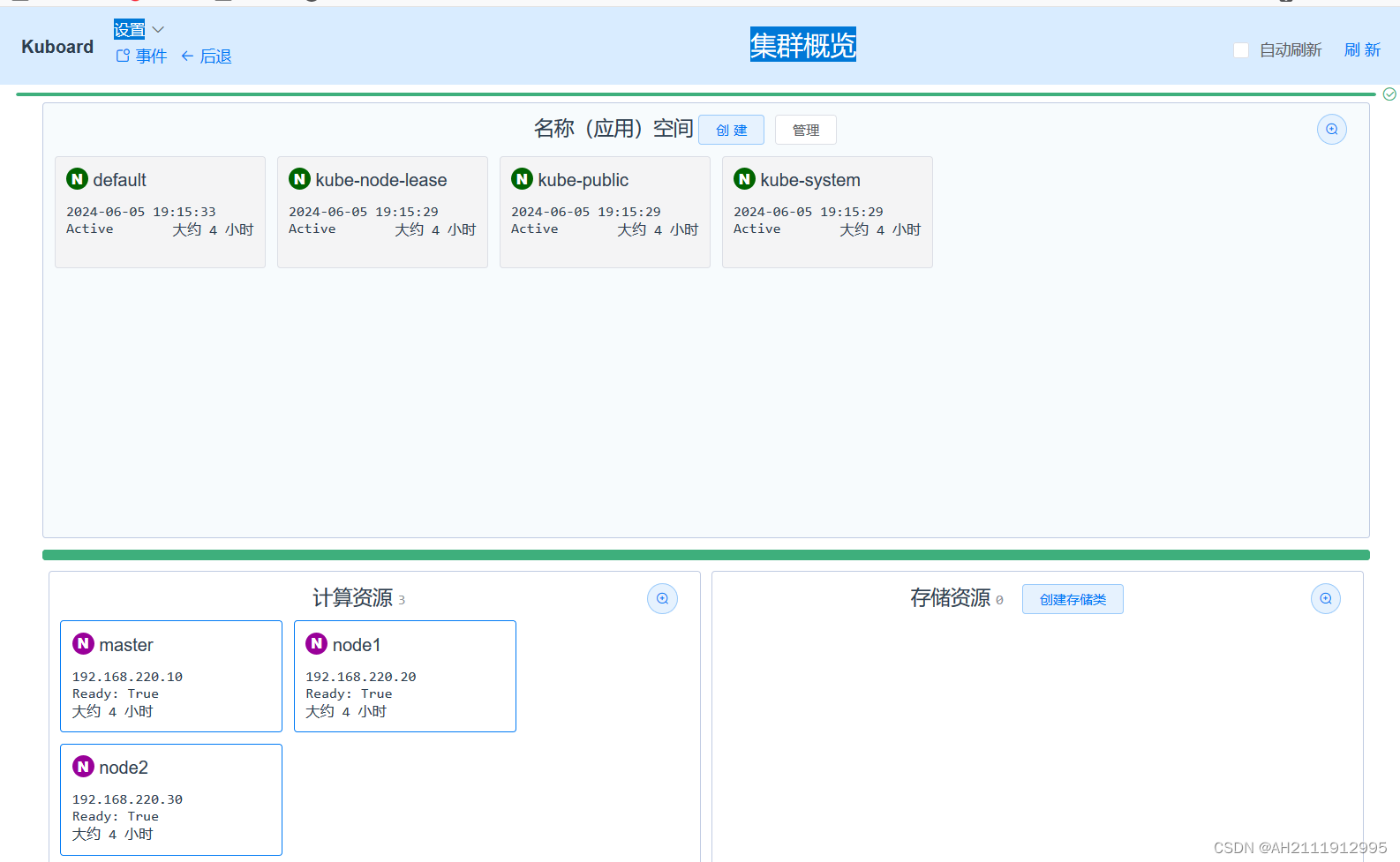

Configure kuboard

[root@master ~]# kubectl create -f yaml/kuboard.yaml

deployment.apps/kuboard created

service/kuboard created

serviceaccount/kuboard-user created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-user created

serviceaccount/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer-node created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer-pvp created

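ingress.extensions/kuboard created

Enter http://192.168.220.10:31000 in a browser to open the kuboard login page (31000 is the NodePort that the ipvsadm output later in this article shows forwarding to port 80).

Enter the token in the token field to open the kuboard console.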

Part 3: Configure the kubernetes cluster

Enable IPVS. On the master node, edit config.conf in the kube-system/kube-proxy ConfigMap and set mode: "ipvs".

[root@master ~]# kubectl edit cm kube-proxy -n kube-system

ipvs:

excludeCIDRs: null

minSyncPeriod: 0s

scheduler: ""

syncPeriod: 30s

kind: KubeProxyConfiguration

metricsBindAddress: 127.0.0.1:10249

mode: "ipvs" # change this line

nodePortAddresses: null

oomScoreAdj: -999

portRange: ""

resourceContainer: /kube-proxy

udpIdleTimeout: 250ms

Restart kube-proxy

[root@master ~]# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

pod "kube-proxy-2srjf" deleted

pod "kube-proxy-8pfz2" deleted

pod "kube-proxy-sps84" deleted

Because the kube-proxy configuration was changed through the ConfigMap, nodes added later will use IPVS mode directly. Check the log:

[root@master ~]# kubectl logs kube-proxy-l8n8v -n kube-system

I0605 11:51:06.365930 1 server_others.go:177] Using ipvs Proxier.

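# ipvs is in use

# The suffix after kube-proxy- differs from cluster to cluster, so look up your own pod name; the book uses kube-proxy-9zv8x

Test IPVS. Use the ipvsadm command to check whether the services created earlier are now served through LVS.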

[root@master ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.0.1:30000 rr

-> 10.16.1.2:8443 Masq 1 0 0

TCP 192.168.220.10:30000 rr

-> 10.16.1.2:8443 Masq 1 0 0

TCP 192.168.220.10:31000 rr

-> 10.16.2.2:80 Masq 1 1 0

TCP 10.16.0.0:30000 rr

-> 10.16.1.2:8443 Masq 1 0 0

TCP 10.16.0.0:31000 rr

-> 10.16.2.2:80 Masq 1 0 0

TCP 10.16.0.1:30000 rr

-> 10.16.1.2:8443 Masq 1 0 0

TCP 10.16.0.1:31000 rr

-> 10.16.2.2:80 Masq 1 0 0

TCP 10.96.0.1:443 rr

-> 192.168.220.10:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.16.0.2:53 Masq 1 0 0

-> 10.16.0.4:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.16.0.2:9153 Masq 1 0 0

-> 10.16.0.4:9153 Masq 1 0 0

TCP 10.97.79.80:80 rr

-> 10.16.2.2:80 Masq 1 0 0

TCP 10.98.126.163:443 rr

-> 10.16.1.2:8443 Masq 1 0 0

TCP 127.0.0.1:30000 rr

-> 10.16.1.2:8443 Masq 1 0 0

TCP 127.0.0.1:31000 rr

-> 10.16.2.2:80 Masq 1 0 0

TCP 172.17.0.1:31000 rr

-> 10.16.2.2:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.16.0.2:53 Masq 1 0 0

-> 10.16.0.4:53 Masq 1 0 0

Scheduling on the master node. By default kubernetes does not schedule pods onto the master node. Check the default Taints field of the master node:

[root@master ~]# kubectl describe node master

Name: master

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=master

kubernetes.io/os=linux

node-role.kubernetes.io/master=

Annotations: flannel.alpha.coreos.com/backend-data: {"VtepMAC":"9a:b5:22:92:3c:cb"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 192.168.220.10

kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Wed, 05 Jun 2024 07:15:29 -0400

Taints: node-role.kubernetes.io/master:NoSchedule # the effect is NoSchedule

Unschedulable: false

......

To use the K8S master as a regular node as well, remove the taint:

[root@master ~]# kubectl taint node master node-role.kubernetes.io/master-

node/master untainted
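To confirm whether the master still carries the NoSchedule taint, the Taints line of the describe output can be tested in a script. The sketch below uses a hypothetical helper `has_master_noschedule` and two sample lines; the re-taint command in the final comment is an assumed counterpart of the removal command, not something taken from this article.

```shell
# has_master_noschedule: reads `kubectl describe node` output on stdin and
# succeeds if the Taints line still carries the master NoSchedule taint.
has_master_noschedule() {
    grep -q '^Taints:.*node-role\.kubernetes\.io/master:NoSchedule'
}

# Sample lines in the shape printed by `kubectl describe node master`.
before='Taints: node-role.kubernetes.io/master:NoSchedule'
after='Taints: <none>'

for line in "$before" "$after"; do
    if printf '%s\n' "$line" | has_master_noschedule; then
        echo "master is excluded from scheduling"
    else
        echo "master accepts regular pods"
    fi
done

# To put the taint back later (assumption, verify on your own cluster):
#   kubectl taint node master node-role.kubernetes.io/master=:NoSchedule
```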
