import numpy as np

from emo_utils import *

import emoji

import matplotlib.pyplot as plt

%matplotlib inline

X_train, Y_train = read_csv('data/train_emoji.csv')

X_test, Y_test = read_csv('data/tesss.csv')

maxLen = len(max(X_train, key=len).split())

print(maxLen)

10


index = 1

print(X_train[index], label_to_emoji(Y_train[index]))

I am proud of your achievements ?

Y_oh_train = convert_to_one_hot(Y_train, C = 5)

Y_oh_test = convert_to_one_hot(Y_test, C = 5)

index = 50

print(Y_train[index], "is converted into one hot", Y_oh_train[index])

0 is converted into one hot [1. 0. 0. 0. 0.]
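The one-hot conversion above relies on `convert_to_one_hot` from `emo_utils`, whose source is not shown in this post. The following is a minimal sketch of an equivalent helper, assuming it simply indexes rows of an identity matrix; the name `one_hot_sketch` is hypothetical and not part of `emo_utils`.

```python
import numpy as np

def one_hot_sketch(labels, num_classes):
    """Hypothetical stand-in for convert_to_one_hot: row i is the one-hot vector of labels[i]."""
    labels = np.asarray(labels).reshape(-1)
    # Indexing the identity matrix with the labels picks one row per label.
    return np.eye(num_classes)[labels]

example = one_hot_sketch([0, 3, 4], num_classes=5)
print(example)
```

Each row has a single 1 in the column given by its label, which is the format `categorical_crossentropy` expects later on.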

word_to_index, index_to_word, word_to_vec_map = read_glove_vecs('data/glove.6B.50d.txt')

word = "cucumber"

index = 289846

print("the index of", word, "in the vocabulary is", word_to_index[word])

print("the", str(index) + "th word in the vocabulary is", index_to_word[index])

the index of cucumber in the vocabulary is 113317
the 289846th word in the vocabulary is potatos

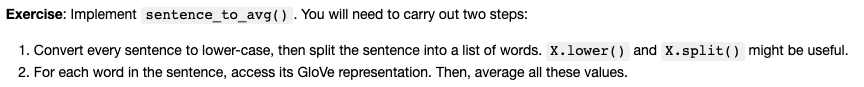

# GRADED FUNCTION: sentence_to_avg

def sentence_to_avg(sentence, word_to_vec_map):
    """
    Converts a sentence (string) into a list of words (strings). Extracts the GloVe representation of each word
    and averages its value into a single vector encoding the meaning of the sentence.

    Arguments:
    sentence -- string, one training example from X
    word_to_vec_map -- dictionary mapping every word in a vocabulary into its 50-dimensional vector representation

    Returns:
    avg -- average vector encoding information about the sentence, numpy-array of shape (50,)
    """

    ### START CODE HERE ###
    # Step 1: Split sentence into list of lower case words (≈ 1 line)
    words = sentence.lower().split()

    # Initialize the average word vector, should have the same shape as your word vectors.
    avg = np.zeros(word_to_vec_map[words[0]].shape)

    # Step 2: average the word vectors. You can loop over the words in the list "words".
    for w in words:
        avg += word_to_vec_map[w]
    avg = avg / float(len(words))
    ### END CODE HERE ###

    return avg

avg = sentence_to_avg("Morrocan couscous is my favorite dish", word_to_vec_map)

print("avg = ", avg)

avg = [-0.008005 0.56370833 -0.50427333 0.258865 0.55131103 0.03104983 -0.21013718 0.16893933 -0.09590267 0.141784 -0.15708967 0.18525867 0.6495785 0.38371117 0.21102167 0.11301667 0.02613967 0.26037767 0.05820667 -0.01578167 -0.12078833 -0.02471267 0.4128455 0.5152061 0.38756167 -0.898661 -0.535145 0.33501167 0.68806933 -0.2156265 1.797155 0.10476933 -0.36775333 0.750785 0.10282583 0.348925 -0.27262833 0.66768 -0.10706167 -0.283635 0.59580117 0.28747333 -0.3366635 0.23393817 0.34349183 0.178405 0.1166155 -0.076433 0.1445417 0.09808667]
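Note that `sentence_to_avg` as written raises a `KeyError` when a word is missing from `word_to_vec_map`, and an `IndexError` on an empty sentence. The sketch below shows one possible defensive variant on toy data. The function name `safe_sentence_to_avg`, the `dim` parameter, and the 3-dimensional vectors are all invented for illustration. Unlike the graded function, it averages over known words only.

```python
import numpy as np

def safe_sentence_to_avg(sentence, word_to_vec_map, dim):
    """Average the vectors of known words; return a zero vector if none are known."""
    words = sentence.lower().split()
    vectors = [word_to_vec_map[w] for w in words if w in word_to_vec_map]
    if not vectors:
        return np.zeros(dim)
    return np.mean(vectors, axis=0)

# Toy 3-dimensional "embeddings", invented purely for illustration.
toy_map = {
    "good": np.array([1.0, 0.0, 2.0]),
    "job": np.array([3.0, 2.0, 0.0]),
}

avg_known = safe_sentence_to_avg("Good job", toy_map, dim=3)
avg_mixed = safe_sentence_to_avg("good zzz job", toy_map, dim=3)  # "zzz" is skipped
avg_empty = safe_sentence_to_avg("", toy_map, dim=3)
print(avg_known, avg_mixed, avg_empty)
```

Whether to skip unknown words or to count them as zero vectors is a design choice; skipping keeps the average on the same scale regardless of how many words are out of vocabulary.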

# GRADED FUNCTION: model

def model(X, Y, word_to_vec_map, learning_rate = 0.01, num_iterations = 400):
    """
    Model to train word vector representations in numpy.

    Arguments:
    X -- input data, numpy array of sentences as strings, of shape (m, 1)
    Y -- labels, numpy array of integers between 0 and 4, numpy-array of shape (m, 1)
    word_to_vec_map -- dictionary mapping every word in a vocabulary into its 50-dimensional vector representation
    learning_rate -- learning_rate for the stochastic gradient descent algorithm
    num_iterations -- number of iterations

    Returns:
    pred -- vector of predictions, numpy-array of shape (m, 1)
    W -- weight matrix of the softmax layer, of shape (n_y, n_h)
    b -- bias of the softmax layer, of shape (n_y,)
    """

    np.random.seed(1)

    # Define number of training examples
    m = Y.shape[0]                          # number of training examples
    n_y = 5                                 # number of classes
    n_h = 50                                # dimensions of the GloVe vectors

    # Initialize parameters using Xavier initialization
    W = np.random.randn(n_y, n_h) / np.sqrt(n_h)
    b = np.zeros((n_y,))

    # Convert Y to Y_onehot with n_y classes
    Y_oh = convert_to_one_hot(Y, C = n_y)

    # Optimization loop
    for t in range(num_iterations):         # Loop over the number of iterations
        for i in range(m):                  # Loop over the training examples

            ### START CODE HERE ### (≈ 4 lines of code)
            # Average the word vectors of the words from the i'th training example
            avg = sentence_to_avg(X[i], word_to_vec_map)

            # Forward propagate the avg through the softmax layer
            z = np.dot(W, avg) + b
            a = softmax(z)

            # Compute cost using the i'th training label's one hot representation and "a" (the output of the softmax)
            cost = -np.sum(Y_oh[i, :] * np.log(a))
            ### END CODE HERE ###

            # Compute gradients
            dz = a - Y_oh[i]
            dW = np.dot(dz.reshape(n_y, 1), avg.reshape(1, n_h))
            db = dz

            # Update parameters with Stochastic Gradient Descent
            W = W - learning_rate * dW
            b = b - learning_rate * db

        if t % 100 == 0:
            print("Epoch: " + str(t) + " --- cost = " + str(cost))
            pred = predict(X, Y, W, b, word_to_vec_map)

    return pred, W, b

print(X_train.shape)

print(Y_train.shape)

print(np.eye(5)[Y_train.reshape(-1)].shape)

print(X_train[0])

print(type(X_train))

Y = np.asarray([5,0,0,5, 4, 4, 4, 6, 6, 4, 1, 1, 5, 6, 6, 3, 6, 3, 4, 4])

print(Y.shape)

X = np.asarray(['I am going to the bar tonight', 'I love you', 'miss you my dear',

'Lets go party and drinks','Congrats on the new job','Congratulations',

'I am so happy for you', 'Why are you feeling bad', 'What is wrong with you',

'You totally deserve this prize', 'Let us go play football',

'Are you down for football this afternoon', 'Work hard play harder',

'It is suprising how people can be dumb sometimes',

'I am very disappointed','It is the best day in my life',

'I think I will end up alone','My life is so boring','Good job',

'Great so awesome'])

print(X.shape)

print(np.eye(5)[Y_train.reshape(-1)].shape)

print(type(X_train))

(132,)
(132,)
(132, 5)
never talk to me again
<class 'numpy.ndarray'>
(20,)
(20,)
(132, 5)
<class 'numpy.ndarray'>

pred, W, b = model(X_train, Y_train, word_to_vec_map)

print(pred)

print("Training set:")

pred_train = predict(X_train, Y_train, W, b, word_to_vec_map)

print('Test set:')

pred_test = predict(X_test, Y_test, W, b, word_to_vec_map)

Training set:
Accuracy: 0.9772727272727273
Test set:
Accuracy: 0.8571428571428571
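The training loop in `model` uses `dz = a - Y_oh[i]` as the gradient of the cross-entropy cost with respect to `z`. As a sanity check, the sketch below compares that closed form against a central finite-difference estimate on random data. The helper names `softmax_sketch` and `cross_entropy` are hypothetical; the `softmax` used in the notebook comes from `emo_utils` and is assumed to compute the same quantity.

```python
import numpy as np

def softmax_sketch(z):
    """Numerically stable softmax for a 1-D vector."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def cross_entropy(z, y_onehot):
    """Cost of a single example, same form as in the training loop."""
    return -np.sum(y_onehot * np.log(softmax_sketch(z)))

rng = np.random.default_rng(0)
z = rng.normal(size=5)        # random pre-activations for 5 classes
y = np.eye(5)[2]              # one-hot label for class 2

# Closed-form gradient, same form as dz = a - Y_oh[i].
analytic = softmax_sketch(z) - y

# Central finite differences, one coordinate at a time.
eps = 1e-6
numeric = np.zeros_like(z)
for k in range(z.size):
    step = np.zeros_like(z)
    step[k] = eps
    numeric[k] = (cross_entropy(z + step, y) - cross_entropy(z - step, y)) / (2 * eps)

max_diff = np.max(np.abs(analytic - numeric))
print(max_diff)
```

A very small `max_diff` indicates the two gradients agree, which supports the update rule used for `W` and `b`.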

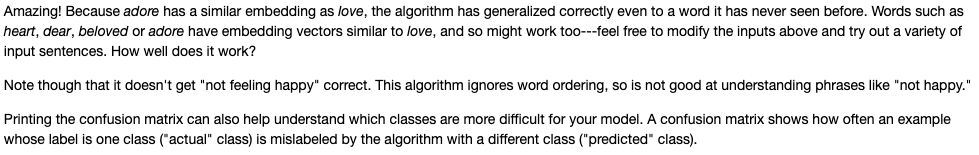

X_my_sentences = np.array(["i adore you", "i love you", "funny lol", "lets play with a ball", "food is ready", "not feeling happy"])

Y_my_labels = np.array([[0], [0], [2], [1], [4],[3]])

pred = predict(X_my_sentences, Y_my_labels , W, b, word_to_vec_map)

print_predictions(X_my_sentences, pred)

Accuracy: 0.8333333333333334

i adore you ❤️
i love you ❤️
funny lol ?
lets play with a ball ⚾
food is ready ?
not feeling happy ?

print(Y_test.shape)

print(' '+ label_to_emoji(0)+ ' ' + label_to_emoji(1) + ' ' + label_to_emoji(2)+ ' ' + label_to_emoji(3)+' ' + label_to_emoji(4))

print(pd.crosstab(Y_test, pred_test.reshape(56,), rownames=['Actual'], colnames=['Predicted'], margins=True))

plot_confusion_matrix(Y_test, pred_test)

import numpy as np

np.random.seed(0)

from keras.models import Model

from keras.layers import Dense, Input, Dropout, LSTM, Activation

from keras.layers.embeddings import Embedding

from keras.preprocessing import sequence

from keras.initializers import glorot_uniform

np.random.seed(1)

# GRADED FUNCTION: sentences_to_indices

def sentences_to_indices(X, word_to_index, max_len):
    """
    Converts an array of sentences (strings) into an array of indices corresponding to words in the sentences.
    The output shape should be such that it can be given to `Embedding()` (described in Figure 4).

    Arguments:
    X -- array of sentences (strings), of shape (m, 1)
    word_to_index -- a dictionary mapping each word to its index
    max_len -- maximum number of words in a sentence. You can assume every sentence in X is no longer than this.

    Returns:
    X_indices -- array of indices corresponding to words in the sentences from X, of shape (m, max_len)
    """

    m = X.shape[0]                                   # number of training examples

    ### START CODE HERE ###
    # Initialize X_indices as a numpy matrix of zeros and the correct shape (≈ 1 line)
    X_indices = np.zeros((m, max_len))

    for i in range(m):                               # loop over training examples

        # Convert the ith training sentence to lower case and split it into words. You should get a list of words.
        sentence_words = X[i].lower().split()

        # Initialize j to 0
        j = 0

        # Loop over the words of sentence_words
        for w in sentence_words:
            # Set the (i,j)th entry of X_indices to the index of the correct word.
            X_indices[i, j] = word_to_index[w]
            # Increment j to j + 1
            j = j + 1

    ### END CODE HERE ###

    return X_indices

X1 = np.array(["funny lol", "lets play baseball", "food is ready for you"])

X1_indices = sentences_to_indices(X1,word_to_index, max_len = 5)

print("X1 =", X1)

print("X1_indices =", X1_indices)

X1 = ['funny lol' 'lets play baseball' 'food is ready for you']
X1_indices = [[155345. 225122.      0.      0.      0.]
 [220930. 286375.  69714.      0.      0.]
 [151204. 192973. 302254. 151349. 394475.]]
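The function above assumes every sentence fits in `max_len` words and that every word is in `word_to_index`; otherwise it raises an `IndexError` or a `KeyError`. The sketch below shows a more forgiving variant on a toy vocabulary. The function name, the `unknown_index` parameter, and the toy indices are all assumptions made for illustration.

```python
import numpy as np

def sentences_to_indices_sketch(sentences, word_to_index, max_len, unknown_index=0):
    """Zero-padded index matrix; truncates long sentences and maps unknown words to unknown_index."""
    X_indices = np.zeros((len(sentences), max_len), dtype=np.int32)
    for i, sentence in enumerate(sentences):
        # Keep at most max_len words so the column index never overflows.
        words = sentence.lower().split()[:max_len]
        for j, w in enumerate(words):
            X_indices[i, j] = word_to_index.get(w, unknown_index)
    return X_indices

# Toy vocabulary with invented indices (the real GloVe indices are much larger).
toy_index = {"funny": 1, "lol": 2, "lets": 3, "play": 4, "baseball": 5}

toy_indices = sentences_to_indices_sketch(
    ["funny lol", "Lets play baseball now please"], toy_index, max_len=3
)
print(toy_indices)
```

Mapping unknown words to 0 makes them indistinguishable from padding; a dedicated out-of-vocabulary index would be a reasonable alternative if the embedding matrix reserves a row for it.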

# GRADED FUNCTION: pretrained_embedding_layer

def pretrained_embedding_layer(word_to_vec_map, word_to_index):
    """
    Creates a Keras Embedding() layer and loads in pre-trained GloVe 50-dimensional vectors.

    Arguments:
    word_to_vec_map -- dictionary mapping words to their GloVe vector representation.
    word_to_index -- dictionary mapping from words to their indices in the vocabulary (400,001 words)

    Returns:
    embedding_layer -- pretrained layer Keras instance
    """

    vocab_len = len(word_to_index) + 1                  # adding 1 to fit Keras embedding (requirement)
    emb_dim = word_to_vec_map["cucumber"].shape[0]      # define dimensionality of your GloVe word vectors (= 50)

    ### START CODE HERE ###
    # Initialize the embedding matrix as a numpy array of zeros of shape (vocab_len, dimensions of word vectors = emb_dim)
    emb_matrix = np.zeros((vocab_len, emb_dim))

    # Set each row "index" of the embedding matrix to be the word vector representation of the "index"th word of the vocabulary
    for word, index in word_to_index.items():
        emb_matrix[index, :] = word_to_vec_map[word]

    # Define the Keras embedding layer with the correct input/output sizes and keep it frozen: use Embedding(...) with trainable=False.
    embedding_layer = Embedding(vocab_len, emb_dim, trainable=False)
    ### END CODE HERE ###

    # Build the embedding layer, it is required before setting the weights of the embedding layer. Do not modify the "None".
    embedding_layer.build((None,))

    # Set the weights of the embedding layer to the embedding matrix. Your layer is now pretrained.
    embedding_layer.set_weights([emb_matrix])

    return embedding_layer

embedding_layer = pretrained_embedding_layer(word_to_vec_map, word_to_index)

print("weights[0][1][3] =", embedding_layer.get_weights()[0][1][3])

weights[0][1][3] = -0.3403
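To make the role of `emb_matrix` concrete, here is a small NumPy-only sketch of what an embedding lookup computes. It assumes word indices start at 1 so that row 0 stays all zeros and can serve as padding, which is consistent with the zero-padded index arrays used in this post. The toy vocabulary and 2-dimensional vectors are invented for illustration, and no Keras call is involved.

```python
import numpy as np

# Toy vocabulary and vectors, invented for illustration only.
toy_word_to_index = {"a": 1, "b": 2, "c": 3}
toy_word_to_vec = {
    "a": np.array([0.1, 0.2]),
    "b": np.array([0.3, 0.4]),
    "c": np.array([0.5, 0.6]),
}

vocab_len = len(toy_word_to_index) + 1   # extra row 0, left as zeros for padding
emb_dim = 2
emb_matrix = np.zeros((vocab_len, emb_dim))
for word, idx in toy_word_to_index.items():
    emb_matrix[idx, :] = toy_word_to_vec[word]

# One sentence "b c", padded with 0 to length 3.
padded_sentence = np.array([[2, 3, 0]])

# Row lookup: each index is replaced by the corresponding row of emb_matrix.
embedded = emb_matrix[padded_sentence]
print(embedded.shape)
```

The result has shape (batch, max_len, emb_dim), which is the kind of tensor the first LSTM layer receives from the embedding layer.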

# GRADED FUNCTION: Emojify_V2

def Emojify_V2(input_shape, word_to_vec_map, word_to_index):
    """
    Function creating the Emojify-v2 model's graph.

    Arguments:
    input_shape -- shape of the input, usually (max_len,)
    word_to_vec_map -- dictionary mapping every word in a vocabulary into its 50-dimensional vector representation
    word_to_index -- dictionary mapping from words to their indices in the vocabulary (400,001 words)

    Returns:
    model -- a model instance in Keras
    """

    ### START CODE HERE ###
    # Define sentence_indices as the input of the graph, it should be of shape input_shape and dtype 'int32' (as it contains indices).
    sentence_indices = Input(shape=input_shape, dtype='int32')

    # Create the embedding layer pretrained with GloVe Vectors (≈1 line)
    embedding_layer = pretrained_embedding_layer(word_to_vec_map, word_to_index)

    # Propagate sentence_indices through your embedding layer, you get back the embeddings
    embeddings = embedding_layer(sentence_indices)

    # Propagate the embeddings through an LSTM layer with 128-dimensional hidden state
    # Be careful, the returned output should be a batch of sequences.
    X = LSTM(128, return_sequences=True)(embeddings)
    # Add dropout with a probability of 0.5
    X = Dropout(0.5)(X)
    # Propagate X through another LSTM layer with 128-dimensional hidden state
    # Be careful, the returned output should be a single hidden state, not a batch of sequences.
    X = LSTM(128)(X)
    # Add dropout with a probability of 0.5
    X = Dropout(0.5)(X)
    # Propagate X through a Dense layer with 5 units to get back a batch of 5-dimensional vectors.
    X = Dense(5)(X)
    # Add a softmax activation
    X = Activation(activation='softmax')(X)

    # Create Model instance which converts sentence_indices into X.
    model = Model(inputs=[sentence_indices], outputs=X)
    ### END CODE HERE ###

    return model

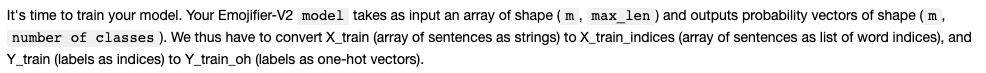

model = Emojify_V2((maxLen,), word_to_vec_map, word_to_index)

model.summary()

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

X_train_indices = sentences_to_indices(X_train, word_to_index, maxLen)

Y_train_oh = convert_to_one_hot(Y_train, C = 5)

print(X_train_indices.shape)

(132, 10)

model.fit(X_train_indices, Y_train_oh, epochs = 70, batch_size = 32, shuffle=True)

X_test_indices = sentences_to_indices(X_test, word_to_index, max_len = maxLen)

Y_test_oh = convert_to_one_hot(Y_test, C = 5)

loss, acc = model.evaluate(X_test_indices, Y_test_oh)

print()

print("Test accuracy = ", acc)

56/56 [==============================] - 1s 17ms/step

Test accuracy = 0.839285705770765

# Change the sentence below to see your prediction. Make sure all the words are in the Glove embeddings.

x_test = np.array(['not feeling happy'])

X_test_indices = sentences_to_indices(x_test, word_to_index, maxLen)

print(x_test[0] +' '+ label_to_emoji(np.argmax(model.predict(X_test_indices))))

not feeling happy ?
