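DL Study Notes T8

[TF NOTE] T8 Cat vs. Dog Recognition

Preface

- 🍨 This post is a study-log entry for the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊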

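I. Setting Up the GPU

import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    # Allocate GPU memory on demand instead of reserving it all up front
    tf.config.experimental.set_memory_growth(gpus[0], True)
    # Make only the first GPU visible to TensorFlow
    tf.config.set_visible_devices(gpus[0], "GPU")

# Print the detected GPUs to confirm that one is available
print(gpus)

Output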

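II. Steps

1. Importing the Data

# Import the data
import matplotlib.pyplot as plt

# Use the SimHei font so that Chinese characters render in plots
plt.rcParams['font.sans-serif'] = ['SimHei']
# Keep the minus sign rendering correctly with that font
plt.rcParams['axes.unicode_minus'] = False

import os, PIL, pathlib
import warnings

warnings.filterwarnings('ignore')

data_dir = "D:/BaiduNetdiskDownload/T8"
data_dir = pathlib.Path(data_dir)

# Count every file that sits one level below the class folders
image_count = len(list(data_dir.glob('*/*')))
print("Total number of images:", image_count)

Output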

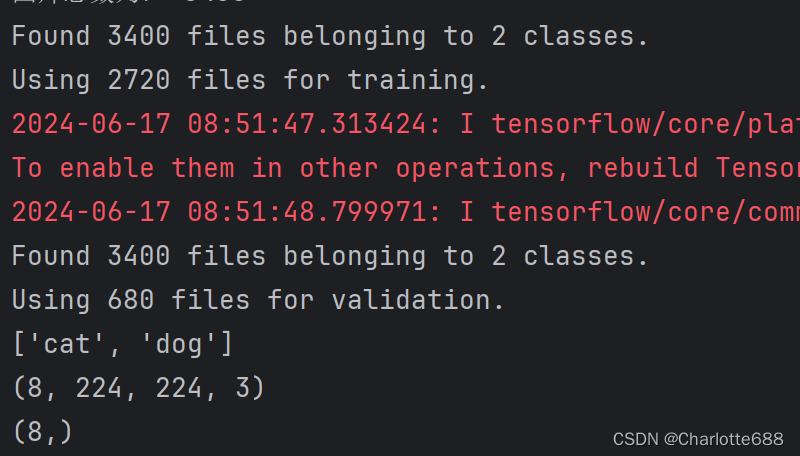

2. Loading the Data

# Load the data
batch_size = 8
img_height = 224
img_width = 224

# Training subset: 80% of the images
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

# Validation subset: the remaining 20%; the seed must match the one above
val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

# Class names are taken from the sub-folder names
class_names = train_ds.class_names
print(class_names)

# Inspect the shape of a single batch
for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break

AUTOTUNE = tf.data.AUTOTUNE

def preprocess_image(image, label):
    # Scale pixel values from [0, 255] to [0, 1]
    return (image / 255.0, label)

Output
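To make the effect of validation_split=0.2 and batch_size=8 concrete, the arithmetic can be sketched in plain Python. This is only a sketch under an assumption: that the validation subset receives int(validation_split * n) files and the training subset receives the rest, which is my understanding of how Keras splits and is not shown in this post. The helper name split_counts and the image count of 1000 are hypothetical and chosen purely for illustration.

```python
import math

def split_counts(num_images, validation_split, batch_size):
    """Return (train_images, val_images, train_batches, val_batches).

    Assumption: the validation subset gets int(validation_split * num_images)
    files and the training subset gets the remainder.
    """
    val_images = int(validation_split * num_images)
    train_images = num_images - val_images
    # The last batch of each subset may be smaller than batch_size
    train_batches = math.ceil(train_images / batch_size)
    val_batches = math.ceil(val_images / batch_size)
    return train_images, val_images, train_batches, val_batches

# Hypothetical dataset size, not the real count from this post
print(split_counts(1000, 0.2, 8))  # (800, 200, 100, 25)
```

The batch counts are what len(train_ds) and len(val_ds) would report, and they set the length of the progress bars used later during training.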

3. Normalization

# Normalize
train_ds = train_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)
val_ds = val_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)

# Cache the datasets, shuffle the training set, and prefetch batches
train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)
val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

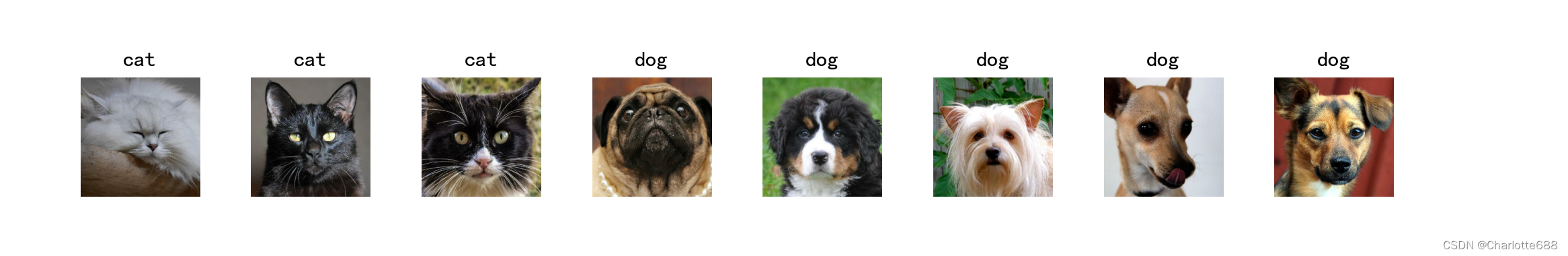
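The scaling done by preprocess_image can be checked in isolation with NumPy. The sketch below is not the author's pipeline; scale_to_unit and the tiny 2x2 image are hypothetical stand-ins that only show how dividing by 255.0 maps pixel values from [0, 255] into [0, 1].

```python
import numpy as np

def scale_to_unit(image):
    """Map pixel values in [0, 255] to float32 values in [0, 1]."""
    return np.asarray(image, dtype=np.float32) / 255.0

# A hypothetical 2x2 RGB image, used only for illustration
image = np.array([[[0, 128, 255], [64, 64, 64]],
                  [[255, 255, 255], [0, 0, 0]]], dtype=np.uint8)

scaled = scale_to_unit(image)
print(scaled.dtype, scaled.min(), scaled.max())  # float32 0.0 1.0
```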

4. Visualizing the Data

# Visualize the data
plt.figure(figsize=(15, 10))

for images, labels in train_ds.take(1):
    # One batch holds batch_size = 8 images
    for i in range(8):
        ax = plt.subplot(5, 8, i + 1)
        plt.imshow(images[i])
        plt.title(class_names[labels[i]])
        plt.axis("off")

Output

5. Building the Model

VGG Model

I believe the bug is in the line model = VGG16(1000, (img_width, img_height, 3)): the 1000 is nb_classes, but this task has only two classes, so I changed it to model = VGG16(2, (img_width, img_height, 3)).

from tensorflow.keras import layers, models, Input
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dense, Flatten, Dropout

def VGG16(nb_classes, input_shape):
    input_tensor = Input(shape=input_shape)
    # block1
    x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv1')(input_tensor)
    x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv2')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)
    # block2
    x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv1')(x)
    x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv2')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)
    # block3
    x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv1')(x)
    x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv2')(x)
    x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv3')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block3_pool')(x)
    # block4
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv1')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv2')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv3')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block4_pool')(x)
    # block5
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv1')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv2')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv3')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block5_pool')(x)
    # Fully connected layers
    x = Flatten()(x)
    x = Dense(4096, activation='relu', name='fc1')(x)
    x = Dense(4096, activation='relu', name='fc2')(x)
    # One output unit per class
    output_tensor = Dense(nb_classes, activation='softmax', name='predictions')(x)

    model = Model(input_tensor, output_tensor)
    return model

# Two classes: cat and dog
model = VGG16(2, (img_width, img_height, 3))
model.summary()

Model summary output

Model: "model"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_1 (InputLayer)        [(None, 224, 224, 3)]     0
 block1_conv1 (Conv2D)       (None, 224, 224, 64)      1792
 block1_conv2 (Conv2D)       (None, 224, 224, 64)      36928
 block1_pool (MaxPooling2D)  (None, 112, 112, 64)      0
 block2_conv1 (Conv2D)       (None, 112, 112, 128)     73856
 block2_conv2 (Conv2D)       (None, 112, 112, 128)     147584
 block2_pool (MaxPooling2D)  (None, 56, 56, 128)       0
 block3_conv1 (Conv2D)       (None, 56, 56, 256)       295168
 block3_conv2 (Conv2D)       (None, 56, 56, 256)       590080
 block3_conv3 (Conv2D)       (None, 56, 56, 256)       590080
 block3_pool (MaxPooling2D)  (None, 28, 28, 256)       0
 block4_conv1 (Conv2D)       (None, 28, 28, 512)       1180160
 block4_conv2 (Conv2D)       (None, 28, 28, 512)       2359808
 block4_conv3 (Conv2D)       (None, 28, 28, 512)       2359808
 block4_pool (MaxPooling2D)  (None, 14, 14, 512)       0
 block5_conv1 (Conv2D)       (None, 14, 14, 512)       2359808
 block5_conv2 (Conv2D)       (None, 14, 14, 512)       2359808
 block5_conv3 (Conv2D)       (None, 14, 14, 512)       2359808
 block5_pool (MaxPooling2D)  (None, 7, 7, 512)         0
 flatten (Flatten)           (None, 25088)             0
 fc1 (Dense)                 (None, 4096)              102764544
 fc2 (Dense)                 (None, 4096)              16781312
 predictions (Dense)         (None, 2)                 8194
=================================================================
Total params: 134,268,738
Trainable params: 134,268,738
Non-trainable params: 0
_________________________________________________________________
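The parameter counts in the summary can be reproduced by hand, which is a quick way to confirm that the network really ends in a two-unit layer. The following is a small sketch in plain Python rather than anything from the original post; conv_params, dense_params and vgg16_param_count are hypothetical helper names, and the block layout is copied from the VGG16 function above.

```python
def conv_params(in_channels, out_channels, kernel=3):
    """Weights plus one bias per filter for a kernel x kernel convolution."""
    return (kernel * kernel * in_channels + 1) * out_channels

def dense_params(in_units, out_units):
    """Weights plus one bias per output unit."""
    return (in_units + 1) * out_units

def vgg16_param_count(nb_classes, input_size=224):
    # (number of conv layers, filters) for each of the five blocks
    blocks = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
    total, channels, size = 0, 3, input_size
    for num_convs, filters in blocks:
        for _ in range(num_convs):
            total += conv_params(channels, filters)
            channels = filters
        size //= 2  # each 2x2 max-pool halves height and width
    flat = size * size * channels  # 7 * 7 * 512 = 25088 for a 224x224 input
    total += dense_params(flat, 4096)        # fc1
    total += dense_params(4096, 4096)        # fc2
    total += dense_params(4096, nb_classes)  # predictions
    return total

print(vgg16_param_count(2))     # 134268738
print(vgg16_param_count(1000))  # 138357544
```

Only the final layer depends on nb_classes: it holds 4097 * 2 = 8194 parameters for two classes, against 4,097,000 for 1000 classes.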

6. Compiling the Model

# Compile the model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
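The loss name 'sparse_categorical_crossentropy' means the labels are plain integers (0 or 1 here) rather than one-hot vectors, and the loss for a sample is the negative log of the probability the softmax assigns to the true class. Below is a minimal pure-Python sketch of that formula for a single sample. It is not the Keras implementation, and the probabilities are made-up values for illustration.

```python
import math

def sparse_categorical_crossentropy(label, probabilities):
    """Loss for one sample: minus the log of the probability of the true class."""
    return -math.log(probabilities[label])

# Hypothetical softmax outputs for a two-class model
confident_right = sparse_categorical_crossentropy(1, [0.1, 0.9])
confident_wrong = sparse_categorical_crossentropy(0, [0.1, 0.9])

print(round(confident_right, 4))  # 0.1054
print(round(confident_wrong, 4))  # 2.3026
```

A confident correct prediction costs little, while the same confidence on the wrong class is penalized heavily.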

7. Training the Model

This time the model is trained with model.train_on_batch instead of model.fit.

# Train the model
from tqdm import tqdm
import tensorflow.keras.backend as K

epochs = 10
lr = 1e-4

# Metrics recorded once per epoch
history_train_loss = []
history_train_accuracy = []
history_val_loss = []
history_val_accuracy = []

for epoch in range(epochs):
    train_total = len(train_ds)
    val_total = len(val_ds)

    with tqdm(total=train_total, desc=f'Epoch {epoch + 1}/{epochs}', mininterval=1, ncols=100) as pbar:
        # Decay the learning rate by 8% each epoch and push it into the optimizer
        lr = lr * 0.92
        K.set_value(model.optimizer.lr, lr)

        for image, label in train_ds:
            # train_on_batch returns [loss, accuracy] for this batch
            history = model.train_on_batch(image, label)
            train_loss = history[0]
            train_accuracy = history[1]

            pbar.set_postfix({"loss": "%.4f" % train_loss,
                              "accuracy": "%.4f" % train_accuracy,
                              "lr": K.get_value(model.optimizer.lr)})
            pbar.update(1)

        # Keep the values returned for the final batch of this epoch
        history_train_loss.append(train_loss)
        history_train_accuracy.append(train_accuracy)

    print("Validation started")

    with tqdm(total=val_total, desc=f'Epoch {epoch + 1}/{epochs}', mininterval=0.3, ncols=100) as pbar:
        for image, label in val_ds:
            # test_on_batch evaluates without updating the weights
            history = model.test_on_batch(image, label)
            val_loss = history[0]
            val_accuracy = history[1]

            pbar.set_postfix({"loss": "%.4f" % val_loss,
                              "accuracy": "%.4f" % val_accuracy})
            pbar.update(1)

        history_val_loss.append(val_loss)
        history_val_accuracy.append(val_accuracy)

    print("Validation finished")
    print("Validation loss: %.4f" % val_loss)
    print("Validation accuracy: %.4f" % val_accuracy)
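The loop above stores whatever the final batch of each epoch returned. If train_on_batch and test_on_batch report per-batch values (which is the case when metrics are reset on every call), a single batch of 8 images can make the curves noisy. One possible alternative, sketched here in plain Python, is to average over all batches of the epoch, weighted by batch size. EpochAverage and the sample numbers are hypothetical and not part of the original code.

```python
class EpochAverage:
    """Running mean of a per-batch metric, weighted by batch size."""

    def __init__(self):
        self.total = 0.0
        self.count = 0

    def update(self, value, batch_size):
        self.total += value * batch_size
        self.count += batch_size

    def result(self):
        return self.total / self.count if self.count else 0.0

# Hypothetical per-batch (loss, batch_size) pairs; the last batch is smaller
batches = [(0.70, 8), (0.50, 8), (0.30, 4)]

avg = EpochAverage()
for loss, size in batches:
    avg.update(loss, size)

print(round(avg.result(), 4))  # 0.54
```

In the training loop, one such accumulator per metric could be updated after every batch and its result() appended to the history list at the end of the epoch.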

8. Evaluating the Model

# Evaluate the model
epochs_range = range(epochs)

plt.figure(figsize=(12, 4))

# Left panel: accuracy
plt.subplot(1, 2, 1)
plt.plot(epochs_range, history_train_accuracy, label='Training Accuracy')
plt.plot(epochs_range, history_val_accuracy, label='Validation Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

# Right panel: loss
plt.subplot(1, 2, 2)
plt.plot(epochs_range, history_train_loss, label='Training Loss')
plt.plot(epochs_range, history_val_loss, label='Validation Loss')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')

plt.show()

Output

The metrics from this run came out especially well; my other runs were much worse.

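9. Prediction

# Predict
import numpy as np

plt.figure(figsize=(18, 3))
plt.suptitle("Prediction Results")

for images, labels in val_ds.take(1):
    for i in range(8):
        ax = plt.subplot(1, 8, i + 1)
        plt.imshow(images[i].numpy())

        # Add a batch dimension so that the single image has shape (1, 224, 224, 3)
        img_array = tf.expand_dims(images[i], 0)

        predictions = model.predict(img_array)
        # The class with the highest probability is the prediction
        plt.title(class_names[np.argmax(predictions)])
        plt.axis("off")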
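The step from softmax probabilities to a class name is an argmax followed by a lookup in class_names. The sketch below shows the same idea applied to a whole batch at once with NumPy. The class order and the probabilities are made up for illustration, since the real class_names output is not reproduced in this post.

```python
import numpy as np

# Hypothetical class order and softmax outputs for a batch of three images
class_names = ["cat", "dog"]
predictions = np.array([[0.92, 0.08],
                        [0.35, 0.65],
                        [0.51, 0.49]])

# argmax along axis 1 picks the most probable class for each row
predicted_ids = np.argmax(predictions, axis=1)
predicted_names = [class_names[i] for i in predicted_ids]

print(predicted_names)  # ['cat', 'dog', 'cat']
```

Predicting the whole batch in one call and taking the argmax per row would avoid running the model separately for every image.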

Summary

This exercise trained the model with model.train_on_batch(), which is more versatile and flexible than model.fit(), and used tqdm to display a progress bar.
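One concrete instance of that flexibility is the per-epoch learning-rate decay in the training loop, where lr is multiplied by 0.92 before each epoch. The resulting schedule can be tabulated without TensorFlow. This is only an illustrative sketch; decayed_learning_rates is a hypothetical helper, and the numbers follow from the initial value 1e-4 and the factor 0.92 used in this post.

```python
def decayed_learning_rates(initial_lr, decay, epochs):
    """Learning rate used in each epoch when the decay is applied before training."""
    rates = []
    lr = initial_lr
    for _ in range(epochs):
        lr = lr * decay  # same update as `lr = lr*0.92` in the training loop
        rates.append(lr)
    return rates

rates = decayed_learning_rates(1e-4, 0.92, 10)
print(f"{rates[0]:.3e}")   # 9.200e-05
print(f"{rates[-1]:.3e}")  # 4.344e-05
```

Because the decay is applied before the first batch, the first epoch already trains at 9.2e-5 rather than 1e-4, and by the tenth epoch the rate has fallen to a little under half of its starting value.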
