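Sentiment analysis assignment: this task covers sentiment recognition in natural language processing (also called opinion analysis), i.e., classifying text as positive or negative. The dataset contains more than 60,000 online shopping reviews from ten categories: books, tablets, mobile phones, fruit, shampoo, water heaters, Mengniu (a dairy brand), clothing, computers, and hotels.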

1. Data Loading and Preprocessing

import re

import jieba
import pandas as pd
import torch
from sklearn.model_selection import train_test_split
from torch import nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence, pad_sequence
from torch.utils.data import DataLoader, Dataset
from torch.utils.tensorboard import SummaryWriter
from tqdm.notebook import tqdm  # tqdm is a progress-bar library

(1) Loading the data

path = "online_shopping_10_cats.csv"
df = pd.read_csv(path)
df.head()

(2) Selecting and preparing the columns

df = df[["review", "label"]]
df.head()

(3) Removing duplicates

print(df.shape)
df = df.drop_duplicates()  # drop_duplicates returns a new DataFrame, so assign it back
print(df.shape)

(4) Data cleaning

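# Remove ASCII digits/letters and a few category- or platform-specific words:
# 作者 (author), 当当网 (Dangdang), 京东 (JD), 洗发水 (shampoo), 蒙牛 (Mengniu),
# 衣服 (clothes), 酒店 (hotel), 房间 (room)
info = re.compile("[0-9a-zA-Z]|作者|当当网|京东|洗发水|蒙牛|衣服|酒店|房间")
df["review"] = df["review"].apply(lambda x: info.sub("", str(x)))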

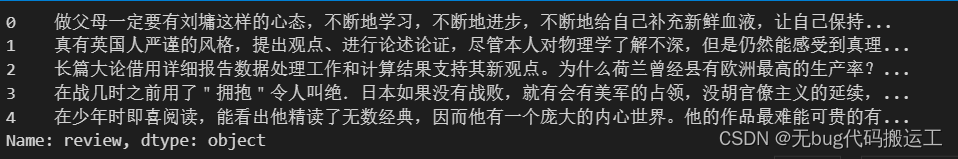

df["review"].head()运行如图:
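To see what the cleaning pattern does, here is a small standalone check that uses only Python's `re` module on made-up review strings (the helper name `clean` is hypothetical). It also shows a side effect of `str(x)`: a missing review (NaN) becomes the string `"nan"`, whose letters the pattern strips, leaving an empty review.

```python
import re

# Same pattern as the cleaning step above
info = re.compile("[0-9a-zA-Z]|作者|当当网|京东|洗发水|蒙牛|衣服|酒店|房间")

def clean(text):
    """Hypothetical helper: convert to str, then delete every match of `info`."""
    return info.sub("", str(text))

print(clean("京东买的iPhone 13,质量不错"))  # → 买的 ,质量不错
print(repr(clean(float("nan"))))            # → '' (NaN becomes "nan", then all letters go)
```

Reviews that end up empty, whether from NaN or from text made only of letters and digits, become zero-length sequences after segmentation, and those need special handling later in the pipeline.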

(5) Word segmentation

df["words"] = df["review"].apply(jieba.lcut)
df.head()

(6) Building the vocabulary
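The vocabulary code below flattens all tokenized reviews, deduplicates and sorts the words, and numbers them from 1 so that index 0 stays free for `<unk>`. The same idea in a few lines of plain Python, on a made-up toy corpus standing in for `df["words"]`:

```python
# Toy tokenized reviews standing in for df["words"] (made-up data)
tokenized = [["质量", "很", "好"], ["很", "差"], ["物流", "很", "快"]]

# Flatten, deduplicate, and sort so ids are deterministic across runs
vocab = sorted({w for sentence in tokenized for w in sentence})

# Ids start at 1; id 0 is reserved for the unknown-word token
word2idx = {w: i + 1 for i, w in enumerate(vocab)}
word2idx["<unk>"] = 0
idx2word = {i: w for w, i in word2idx.items()}

print(len(vocab), len(word2idx))  # → 6 7
```

Because index 0 is taken by `<unk>`, an embedding table over this vocabulary needs `len(vocab) + 1` rows, which is where `num_embeddings=len(words) + 1` comes from later.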

words = []
for sentence in df["words"].values:
    for word in sentence:
        words.append(word)
len(words)

words = sorted(set(words))  # deduplicate and sort the vocabulary
word2idx = {w: i + 1 for i, w in enumerate(words)}
idx2word = {i + 1: w for i, w in enumerate(words)}
word2idx['<unk>'] = 0
idx2word[0] = '<unk>'

(7) Converting Chinese words to numeric indices
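The loop below looks words up with `word2idx[word]`. That cannot fail here because the vocabulary was built from these same reviews, but new text may contain words the vocabulary has never seen. A lookup that falls back to the `<unk>` index avoids a `KeyError`. A minimal sketch with a toy vocabulary (the `encode` helper is hypothetical):

```python
# Toy vocabulary with <unk> at index 0, as in the article
word2idx = {"<unk>": 0, "好": 1, "很": 2, "质量": 3}

def encode(tokens, vocab):
    """Map tokens to ids; unseen tokens fall back to the <unk> id."""
    unk = vocab["<unk>"]
    return [vocab.get(t, unk) for t in tokens]

print(encode(["质量", "很", "好"], word2idx))  # → [3, 2, 1]
print(encode(["物流", "很", "慢"], word2idx))  # → [0, 2, 0]
```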

data = []
for sentence in df['words']:
    words_to_idx = [word2idx[word] for word in sentence]
    # dtype=torch.long keeps even empty reviews integer-typed (torch.tensor([]) would be float)
    data.append(torch.tensor(words_to_idx, dtype=torch.long))
label = torch.from_numpy(df['label'].values)
len(data), len(label)

(8) Splitting into training and validation sets
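`train_test_split(data, label, test_size=0.2)` shuffles the samples and holds out 20% of them for validation; without a `random_state` argument the split changes on every run. The sketch below shows the same idea with only the standard library and a fixed seed. `split_indices` is a hypothetical helper, and scikit-learn's actual shuffling differs.

```python
import math
import random

def split_indices(n, test_size=0.2, seed=42):
    """Hypothetical helper: return (train_idx, val_idx) for n samples."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # seeded RNG gives the same split on every run
    n_val = math.ceil(n * test_size)  # size of the held-out part
    return idx[n_val:], idx[:n_val]

train_idx, val_idx = split_indices(10)
print(len(train_idx), len(val_idx))  # → 8 2
```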

x_train, x_val, y_train, y_val = train_test_split(data, label, test_size=0.2)

(9) Setting up the Dataset and DataLoader

The following function handles variable-length sequences; to use it, pass collate_fn=mycollate_fn to DataLoader.
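What `mycollate_fn` does to a batch can be traced without PyTorch. The pure-Python sketch below (`collate_sketch` is a hypothetical name, and plain lists stand in for tensors) sorts the batch longest-first, right-pads with 0, and keeps the true lengths. The descending order matters because `pack_padded_sequence` expects it by default (`enforce_sorted=True`). Padding with 0, which is also the `<unk>` index, is harmless because packed padding positions never reach the LSTM.

```python
def collate_sketch(batch, pad_value=0):
    """Mirror of mycollate_fn on lists: sort by length (longest first),
    right-pad every sequence to the longest one, and keep the true lengths."""
    batch = sorted(batch, key=lambda item: len(item[0]), reverse=True)
    lengths = [len(seq) for seq, _ in batch]
    max_len = lengths[0] if lengths else 0
    padded = [list(seq) + [pad_value] * (max_len - len(seq)) for seq, _ in batch]
    labels = [lab for _, lab in batch]
    return padded, labels, lengths

batch = [([5, 2], 1), ([7, 3, 9, 4], 0), ([8], 1)]
padded, labels, lengths = collate_sketch(batch)
print(padded)   # → [[7, 3, 9, 4], [5, 2, 0, 0], [8, 0, 0, 0]]
print(labels)   # → [0, 1, 1]
print(lengths)  # → [4, 2, 1]
```

A review that became empty during cleaning would appear as a length of 0 at the end of `lengths`. `pack_padded_sequence` rejects zero lengths, which is what the `batch_seq_len[-1] <= 0` check in the training and validation loops guards against.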

def mycollate_fn(data):
    # pack_padded_sequence expects the batch sorted by length, longest first
    data.sort(key=lambda x: len(x[0]), reverse=True)
    data_length = [len(sq[0]) for sq in data]
    input_data = [item[0] for item in data]
    label_data = [item[1] for item in data]
    # Right-pad with 0 so every sequence in the batch has the same length
    input_data = pad_sequence(input_data, batch_first=True, padding_value=0)
    label_data = torch.tensor(label_data)
    return input_data, label_data, data_length

class mDataSet(Dataset):
    def __init__(self, data, label):
        self.data = data
        self.label = label

    def __getitem__(self, idx: int):
        return self.data[idx], self.label[idx]

    def __len__(self):
        return len(self.data)

train_dataset = mDataSet(x_train, y_train)
train_loader = DataLoader(train_dataset, batch_size=16, shuffle=True, num_workers=0, collate_fn=mycollate_fn)
val_dataset = mDataSet(x_val, y_val)
# Shuffling is unnecessary for evaluation
val_loader = DataLoader(val_dataset, batch_size=16, shuffle=False, num_workers=0, collate_fn=mycollate_fn)

2. Building the Model

(1) Defining the model
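The model below packs the padded batch, runs the LSTM, unpacks the output, and then keeps, for each review, the output at its last real timestep (`get_last_output`). A quick check, assuming PyTorch and using random inputs with small made-up sizes, that for a packed, unidirectional LSTM this last-timestep output equals the final hidden state `h_n[-1]` of the top layer:

```python
import torch
from torch import nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

torch.manual_seed(0)
emb_dim, hidden_size, num_layers = 4, 6, 2
# batch_first stays at its default (False), as in Model; packed input carries its own layout
lstm = nn.LSTM(emb_dim, hidden_size, num_layers)

lengths = [5, 3, 1]                                   # true lengths, longest first
x = torch.randn(len(lengths), max(lengths), emb_dim)  # (batch, time, features)
for i, n in enumerate(lengths):
    x[i, n:] = 0.0                                    # zero out the padding positions

packed = pack_padded_sequence(x, lengths, batch_first=True)
out_packed, (h_n, c_n) = lstm(packed)
out, out_lengths = pad_packed_sequence(out_packed, batch_first=True)

# Output at each sequence's last real timestep, the same selection get_last_output makes
idx = torch.tensor(lengths) - 1
last = out[torch.arange(len(lengths)), idx]

print(torch.allclose(last, h_n[-1], atol=1e-6))  # → True
```

Since `fc1`, `fc2` and `fc3` act on each timestep independently, applying them to `h_n[-1]` alone would give the same logits in eval mode while skipping the work on all other timesteps. That is an alternative design, not what the model below does.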

class Model(nn.Module):
    def __init__(self, num_embeddings, embedding_dim, hidden_dim, num_layers):
        super(Model, self).__init__()
        self.hidden_dim = hidden_dim
        self.num_layers = num_layers
        # nn.Embedding initializes word vectors randomly; args: vocabulary size, embedding dimension
        self.embeddings = nn.Embedding(num_embeddings, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim, self.hidden_dim, num_layers)
        self.dropout = nn.Dropout(0.5)
        self.fc1 = nn.Linear(self.hidden_dim, 256)
        self.fc2 = nn.Linear(256, 32)
        self.fc3 = nn.Linear(32, 2)

    def forward(self, input, batch_seq_len):
        batch_size, seq_len = input.size()
        embeds = self.embeddings(input)
        # Pack the padded batch so the LSTM skips the padding positions
        embeds = pack_padded_sequence(embeds, batch_seq_len, batch_first=True)
        # Zero initial hidden and cell states, one per LSTM layer
        h_0 = torch.zeros(self.num_layers, batch_size, self.hidden_dim, device=input.device)
        c_0 = torch.zeros(self.num_layers, batch_size, self.hidden_dim, device=input.device)
        output, hidden = self.lstm(embeds, (h_0, c_0))
        output, _ = pad_packed_sequence(output, batch_first=True)
        output = self.dropout(torch.tanh(self.fc1(output)))
        output = torch.tanh(self.fc2(output))
        output = self.fc3(output)
        last_outputs = self.get_last_output(output, batch_seq_len)
        return last_outputs, hidden

    def get_last_output(self, output, batch_seq_len):
        # For each sequence, take the output at its last real timestep (index = length - 1)
        idx = torch.as_tensor(batch_seq_len, device=output.device) - 1
        return output[torch.arange(output.size(0), device=output.device), idx]

(2) Initializing the model
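The model is instantiated below with `embedding_dim=50`, `hidden_dim=100` and `num_layers=3`. As a back-of-the-envelope check on the size of the recurrent part: each `nn.LSTM` layer has `weight_ih` of shape (4h, input), `weight_hh` of shape (4h, h) and two bias vectors of length 4h, and every layer after the first takes the h-dimensional output of the layer below as its input.

```python
def lstm_param_count(input_size, hidden_size, num_layers):
    """Parameter count of a unidirectional torch.nn.LSTM with bias=True and no projection."""
    total = 0
    for layer in range(num_layers):
        layer_input = input_size if layer == 0 else hidden_size
        weights = 4 * hidden_size * layer_input + 4 * hidden_size * hidden_size  # weight_ih + weight_hh
        biases = 2 * 4 * hidden_size                                           # bias_ih + bias_hh
        total += weights + biases
    return total

print(lstm_param_count(50, 100, 3))  # → 222400
```

The embedding table adds another `(len(words) + 1) * 50` parameters, which depends on the vocabulary size.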

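# Instantiate the model (+1 for the <unk> index 0)
model = Model(num_embeddings=len(words) + 1, embedding_dim=50, hidden_dim=100, num_layers=3)
# Define the optimizer
optimizer = torch.optim.SGD(model.parameters(), lr=0.008)
# Learning-rate scheduling (optional)
# Define the loss function
criterion = nn.CrossEntropyLoss()

(3) Accuracy metric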
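The accuracy helper below returns, for each k, the percentage of samples whose true label is among the k highest scores, and `AvgrageMeter` averages those batch percentages weighted by batch size, so its running average equals correct / total over every sample seen so far. A standard-library sketch with made-up two-class scores (`topk_accuracy` and `RunningMean` are hypothetical names) illustrates both points. It also shows that with only two classes, top-2 accuracy is always 100%, so only top-1 is informative.

```python
def topk_accuracy(scores, labels, k=1):
    """Percentage of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return 100.0 * hits / len(labels)

class RunningMean:
    """Sample-weighted running mean, the same idea as AvgrageMeter."""
    def __init__(self):
        self.sum, self.cnt = 0.0, 0

    def update(self, val, n=1):
        self.sum += val * n
        self.cnt += n

    @property
    def avg(self):
        return self.sum / self.cnt

# Two batches of two-class scores (made-up numbers): 2/3 correct, then 1/1 correct
batches = [
    ([[0.2, 1.5], [2.0, -1.0], [0.3, 0.1]], [1, 0, 1]),
    ([[1.0, 0.0]], [0]),
]
meter = RunningMean()
for scores, labels in batches:
    meter.update(topk_accuracy(scores, labels, k=1), n=len(labels))

print(meter.avg)  # 3 correct out of 4 samples overall
print(topk_accuracy(*batches[0], k=2))  # → 100.0 (top-2 of two classes always hits)
```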

class AvgrageMeter(object):
    """Keeps a running, sample-weighted average."""

    def __init__(self):
        self.reset()

    def reset(self):
        self.avg = 0
        self.sum = 0
        self.cnt = 0

    def update(self, val, n=1):
        self.sum += val * n
        self.cnt += n
        self.avg = self.sum / self.cnt

def accuracy(output, label, topk=(1,)):
    maxk = max(topk)
    batch_size = label.size(0)
    # Indices of the top-k scores for each sample
    _, pred = output.topk(maxk, 1, True, True)
    pred = pred.t()  # transpose to shape (maxk, batch_size)
    # view(1, -1) turns the labels into a single row; expand_as copies that row
    # until it matches pred's shape (the number of columns must already agree)
    correct = pred.eq(label.view(1, -1).expand_as(pred))  # True/False matrix of correct predictions
    rtn = []
    for k in topk:
        correct_k = correct[:k].reshape(-1).float().sum(0)  # flatten the first k rows and count the hits
        rtn.append(correct_k.mul_(100.0 / batch_size))  # in-place multiply: hits / total * 100 = percentage
    return rtn

(4) Training

def train(epoch, epochs, train_loader, device, model, criterion, optimizer, tensorboard_path, k):
    model.train()
    top1 = AvgrageMeter()
    model = model.to(device)
    train_loss = 0.0
    loop = tqdm(train_loader, desc=f"Epoch {epoch + 1}/{epochs}")
    writer = SummaryWriter(tensorboard_path)  # one writer per call; it creates the directory if needed
    for i, data in enumerate(loop, 0):  # 0 is the starting index (the default)
        inputs, labels, batch_seq_len = data[0].to(device), data[1].to(device), data[2]
        # Skip batches that contain an empty review (pack_padded_sequence rejects length 0)
        if batch_seq_len[-1] <= 0:
            continue
        # Clear the gradients from the previous batch
        optimizer.zero_grad()
        outputs, _ = model(inputs, batch_seq_len)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        prec1 = accuracy(outputs, labels, topk=(1,))[0]
        n = inputs.size(0)
        top1.update(prec1.item(), n)
        train_loss += loss.item()
        postfix = {'train_loss': '%.6f' % (train_loss / (i + 1)), 'train_acc': '%.6f' % top1.avg}
        loop.set_postfix(log=postfix)
        # Log TensorBoard curves every 3 batches
        if i % 3 == 0:
            k = k + 1
            writer.add_scalar('Train/Loss', loss.item(), k)
            writer.add_scalar('Train/Accuracy', top1.avg, k)
    writer.close()
    return k

# Let torch decide whether to use a GPU; a GPU environment is recommended because it is much faster
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
epochs = 12
k = 0
for epoch in range(epochs):
    k = train(epoch, epochs, train_loader, device, model, criterion, optimizer, "runs/train", k)
# Sample output: 'train_loss': '0.381668', 'train_acc': '84.044568'

def validate(epoch, validate_loader, device, model, criterion, tensorboard_path, k):
    val_acc = 0.0
    model = model.to(device)
    model.eval()
    writer = SummaryWriter(tensorboard_path)
    with torch.no_grad():  # no gradient tracking during evaluation
        val_top1 = AvgrageMeter()
        validate_loader = tqdm(validate_loader)
        validate_loss = 0.0
        for i, data in enumerate(validate_loader, 0):  # 0 is the starting index (the default)
            inputs, labels, batch_seq_len = data[0].to(device), data[1].to(device), data[2]
            # Skip batches that contain an empty review
            if batch_seq_len[-1] <= 0:
                continue
            outputs, _ = model(inputs, batch_seq_len)
            loss = criterion(outputs, labels)
            prec1 = accuracy(outputs, labels, topk=(1,))[0]
            n = inputs.size(0)
            val_top1.update(prec1.item(), n)
            validate_loss += loss.item()
            postfix = {'validate_loss': '%.6f' % (validate_loss / (i + 1)), 'validate_acc': '%.6f' % val_top1.avg}
            validate_loader.set_postfix(log=postfix)
            # Log TensorBoard curves every 5 batches
            if i % 5 == 0:
                k = k + 1
                writer.add_scalar('Validate/Loss', loss.item(), k)
                writer.add_scalar('Validate/Accuracy', val_top1.avg, k)
        val_acc = val_top1.avg
    writer.close()
    print("val_acc: " + str(val_acc) + "%")
    return val_acc

k = 0
validate(epoch, val_loader, device, model, criterion, "runs/val", k)
# val_acc: 84.74806356060708%

# Test the model on a single review
# ("The product's appearance is defective; I keep getting transferred around and nothing gets resolved")
string = '商品外观有问题转来转去 解决不了'
tokens = jieba.lcut(info.sub("", string))  # same cleaning and segmentation as the training data
# Words not in the vocabulary map to the <unk> index 0 instead of raising KeyError
wtoid = [word2idx.get(word, 0) for word in tokens]
model = model.to(device)
model.eval()
batch_seq_len = [len(wtoid)]
inputs = torch.tensor(wtoid, dtype=torch.long).reshape(1, -1).to(device)
with torch.no_grad():
    outputs, _ = model(inputs, batch_seq_len)
# Class 1 is positive, class 0 is negative
if outputs[0][0] < outputs[0][1]:
    print("positive")
else:
    print("negative")
