该系列仅在原课程基础上部分知识点添加个人学习笔记,或相关推导补充等。如有错误,还请批评指教。在学习了 Andrew Ng 课程的基础上,为了更方便的查阅复习,将其整理成文字。因本人一直在学习英语,所以该系列以英文为主,同时也建议读者以英文为主,中文辅助,以便后期进阶时,为学习相关领域的学术论文做铺垫。- ZJ

转载请注明作者和出处:ZJ 微信公众号-「SelfImprovementLab」

知乎:https://zhuanlan.zhihu.com/c_147249273

CSDN:http://blog.csdn.net/junjun_zhao/article/details/79082732

1.14 Gradient Checking implementation notes (关于梯度检验实现的注记)

(字幕来源:网易云课堂)

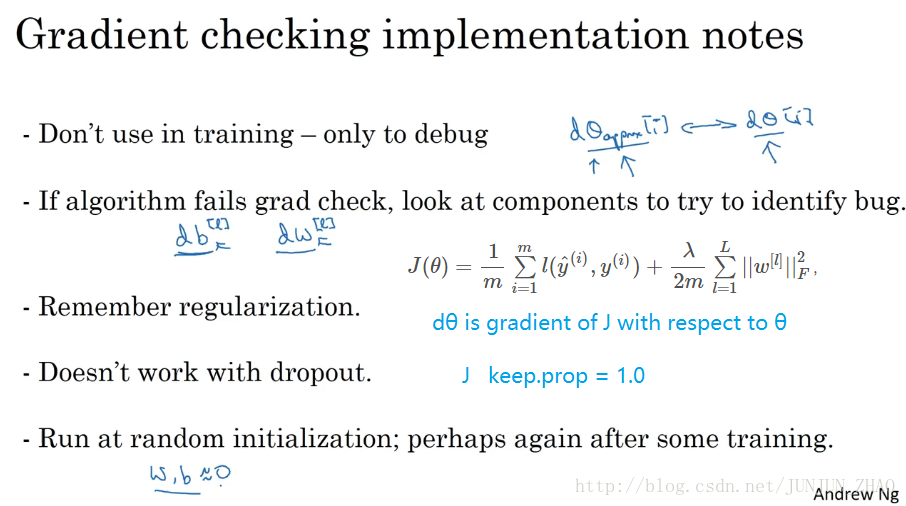

In the last video you learned about gradient checking.In this video, I want to share with you some practical tips or some notes on how to actually go about implementing this for your neural network.First, don’t use grad check in training, only to debug.So what I mean is that, computing d theta approx i, for all the values of i,this is a very slow computation.So to implement gradient descent, you’d use backprop to compute d theta andjust use backprop to compute the derivative.And it’s only when you’re debugging that you would compute this to make sure it’s close to d theta.But once you’ve done that, then you would turn off the grad check, and don’t run this during every iteration of gradient descent,because that’s just much too slow.Second, if an algorithm fails grad check, look at the components,look at the individual components, and try to identify the bug.So what I mean by that is if d theta approx is very far from d theta,what I would do is look at the different values of i to see which are the values ofd theta approx that are really very different than the values of d theta.

上节课我们讲了梯度检验,这节课我想分享一些关于,如何在神经网络实施梯度检验的实用技巧和注意事项,首先不要在训练中使用梯度检验,它只用于调试,我的意思是计算所有 i 值的 dθ[i]approx ,是一个非常慢长的计算过程,为了实施梯度下降 你必须使用 backprop 来计算 dθ,并使用 backprop 来计算导数,只有调试的时候 你才会计算它,来确认数值是否接近 dθ,完成后 你会关闭梯度检验,梯度检验的每一个迭代过程都不执行它,因为它太慢了,第二点 如果算法的梯度检验失败,要检查所有项,检查每一项并试着找出 bug,也就是说 如果 dθ[i]approx 与 dθ 的值相差很大,我们要做的就是查找不同的 i 值 看看是哪个导致, dθ[i]approx 与 dθ 的值相差这么多。

So for example,if you find that the values of theta or d theta, they’re very far off,all correspond to dbl for some layer or for some layers,but the components for dw are quite close, right?Remember, different components of theta correspond to different components of b and w.When you find this is the case, then maybe you find that the bug is in how you’re computing db, the derivative with respect to parameters b.And similarly, vice versa, if you find that the values that are very far,the values from d theta approx that are very far from d theta,you find all those components came from dw or from dw in a certain layer,then that might help you hone in on the location of the bug.This doesn’t always let you identify the bug right away, but sometimes it helps you give you some guesses about where to track down the bug.

举个例子,如果你发现 相对于某些层或某层的 db[l],θ 或 dθ 的值相差很大,但是 dw[l] 的各项非常接近,注意 θ 的各项与 b 和 w 的各项都是一一对应的,这时你可能会发现,在计算参数 b 的导数 db 的过程中存在 bug,反过来也一样 如果你发现它们的值相差很大, dθapprox 的值与 dθ 相差很大,你会发现所有这些项都来自 dw 或某层的 dw,可能帮你定位 bug 的位置,虽然未必能够帮你准确定位 bug 的位置,但它可以帮你估测需要在哪些地方追踪 bug。

Next, when doing grad check,remember your regularization term if you’re using regularization.So if your cost function is J of theta equals 1 over m sum of your losses and then plus this regularization term, and sum of the l of wl squared,then this is the definition of J.And you should have that d theta is gradient of J with respect to theta,including this regularization term.So just remember to include that term.Next, grad check doesn’t work with dropout,because in every iteration, dropout is randomly eliminating different subsets of the hidden units.There isn’t an easy to compute cost function J that dropout is doing gradient descent on.It turns out that dropout can be viewed as optimizing some cost function J, but it’s cost function J defined by summing over all exponentially large subsets of nodes, they could eliminate in any iteration.So the cost function J is very difficult to compute,and you’re just sampling the cost function,every time you eliminate different random subsets in those, we use dropout.So it’s difficult to use grad check to double check your computation with dropouts.So what I usually do is implement grad check without dropout.So if you want, you can set keep-prob and dropout to be equal to 1.0.And then turn on dropout and hope that my implementation of dropout was correct.

第三点 在实施梯度检验时,如果使用正则化,请注意正则项,如果代价函数 J 等于, J(θ)=1m∑i=1ml(y^(i),y(i))+λ2m∑l=1L||w[l]||2F ,这就是代价函数 J 的定义,dθ 等于与 θ 相关的 J 函数的梯度,包括这个正则项,记住一定要包括这个正则项,第四点 梯度检验不能与 dropout 同时使用,因为每次迭代过程中 dropout 会随机消除隐层单元的不同子集,难以计算 dropout 在梯度下降上的代价函数 J,因此 dropout 可作为优化代价函数J的一种方法,但是代价函数 J 被定义为对所有指数极大的节点子集求和,而在任何迭代过程中 这些节点都有可能被消除,所以很难计算代价函数 J,你只是对成本函数做抽样,用 dropout 每次随机消除不同的子集,所以很难用梯度检验来双重检验 dropout 的计算,所以我一般不同时使用梯度检验和 dropout,如果你想这样做 可以把 dropout 中的 keep.prob 设置为1,然后打开 dropout 并寄希望于 dropout 的实施是正确的。

There are some other things you could do, like fix the pattern of nodes dropped and verify that grad check for that pattern of [INAUDIBLE] is correct,but in practice I don’t usually do that.So my recommendation is turn off dropout, use grad check to double check that your algorithm is at least correct without dropout,and then turn on dropout.Finally, this is a subtlety.It is not impossible, rarely happens, but it’s not impossible that your implementation of gradient descent is correct when w and b are close to 0,so at random initialization,But that as you run gradient descent and w and b become bigger,maybe your implementation of backprop is correct only when w and b is close to 0,but it gets more inaccurate when w and b become large.So one thing you could do, I don’t do this very often,but one thing you could do is run grad check at random initializationand then train the network for a whileso that w and b have some time to wander away from 0,from your small random initial values.And then run grad check again after you’ve trained for some number of iterations.

你还可以做点别的 比如修改节点丢失的模式,确认梯度检验是正确的,实际上 我一般不这么做,我建议关闭 dropout 用梯度检验进行双重检查,在没有 dropout 的情况下 你的算法至少是正确的,然后打开 dropout,最后一点 也是比较微妙的一点,现实中几乎不会出现这种情况,当 W 和 b 接近 0 时 梯度下降的实施是正确的,在随机初始化过程中…,但是在运行梯度下降时 W 和 b 变得更大,可能只有在 W 和 b 接近 0 时 backprop 的实施才是正确的,但是当 W 和 b 变大时 它会变得越来越不准确,你需要做一件事 我不经常这么做,就是在随机初始化过程中运行梯度检验。然后再训练网络,W 和 b 会有一段时间远离 0,如果随机初始化值比较小,反复训练网络之后 再重新运行梯度检验。

So that’s it for gradient checking.And congratulations for coming to the end of this week’s materials.In this week, you’ve learned about how to set up your train, dev, and test sets,how to analyze bias and variance and what things to do if you have high bias versus high variance versus maybe high bias and high variance.You also saw how to apply different forms of regularization, like L2 regularizationand dropout on your neural network.So some tricks for speeding up the training of your neural network.And then finally, gradient checking.So I think you’ve seen a lot in this week and you get to exercise a lot of these ideas in this week’s programming exercise.So best of luck with that, andI look forward to seeing you in the week two materials.

这就是梯度检验,恭喜大家 这是本周的最后一课了,回顾这一周 我们讲了如何配置训练集 验证集和测试集,如何分析偏差和方差,如何处理高偏差或高方差,以及高偏差和高方差并存的问题,如何在神经网络中应用不同形式的正则化 如 L2 正则化和 dropout,,还有加快神经网络训练速度的技巧,最后是梯度检验,这一周我们学习了很多内容,你可以在本周的编码作业中多多练习这些概念,祝你好运,期待下周再见。

重点总结:

实现梯度检验 Notes

- 不要在训练过程中使用梯度检验,只在 debug 的时候使用,使用完毕关闭梯度检验的功能;

- 如果算法的梯度检验出现了错误,要检查每一项,找出错误,也就是说要找出哪个 dθ[i]approx 与 dθ 的值相差比较大;

- 不要忘记了正则化项;

- 梯度检验不能与 dropout 同时使用。因为每次迭代的过程中,dropout 会随机消除隐层单元的不同神经元,这时是难以计算 dropout 在梯度下降上的代价函数 J <script type="math/tex" id="MathJax-Element-8">J</script>;

- 在随机初始化的时候运行梯度检验,或许在训练几次后再进行。

参考文献:

[1]. 大树先生.吴恩达Coursera深度学习课程 DeepLearning.ai 提炼笔记(2-1)– 深度学习的实践方面

PS: 欢迎扫码关注公众号:「SelfImprovementLab」!专注「深度学习」,「机器学习」,「人工智能」。以及 「早起」,「阅读」,「运动」,「英语 」「其他」不定期建群 打卡互助活动。

404

404

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?