Python Basics with Numpy

1 - Building basic functions with numpy

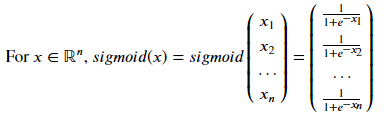

1.1 - Sigmoid function, np.exp()

△1: $\text{sigmoid}(x) = \frac{1}{1+e^{-x}}$, applied to a real number

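import numpy as np  # the function calls np.exp, so numpy must be imported

def basic_sigmoid(x):
    # 1 / e^x equals e^(-x); np.exp also accepts a list and works element-wise
    s = 1.0 / (1 + 1 / np.exp(x))
    return s

basic_sigmoid(3)  # out1

x = [1, 2, 3]
basic_sigmoid(x)  # out2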

out1: 0.9525741268224334

out2: array([0.73105858, 0.88079708, 0.95257413])
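basic_sigmoid accepted the list only because it is built on np.exp. The standard library's math.exp, by contrast, takes a single real number. A minimal sketch of the contrast (math_sigmoid is a hypothetical name used only here):

```python
import math

import numpy as np


def math_sigmoid(x):
    """Sigmoid built on math.exp: valid only for a single real number."""
    return 1 / (1 + math.exp(-x))


print(math_sigmoid(3))  # a plain Python float, about 0.9526

try:
    math.exp([1, 2, 3])  # math.exp has no notion of lists or arrays
except TypeError as err:
    print("math.exp rejects a list:", err)

# np.exp maps the exponential over every element instead
print(np.exp([1, 2, 3]))
```

This is the practical reason the rest of the walkthrough uses np.exp: the same function then works unchanged on scalars, vectors, and matrices.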

△2: $\text{sigmoid}(x) = \frac{1}{1+e^{-x}}$ applied to a row vector: np.exp and arithmetic operators act element-wise on numpy arrays

import numpy as np

def basic_sigmoid(x):
    s = 1.0 / (1 + 1 / np.exp(x))
    return s

# example of np.exp: the exponential is applied to every element
x = np.array([1, 2, 3])
print(np.exp(x))  # out1

# example of a vector operation: the scalar 3 is added to every element
x = np.array([1, 2, 3])
print(x + 3)  # out2

out1: [ 2.71828183 7.3890561 20.08553692]

out2: [4 5 6]
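One way to see that np.exp is element-wise is to compare it with a Python loop over math.exp, and to contrast array arithmetic with list arithmetic. A short sketch:

```python
import math

import numpy as np

x = np.array([1, 2, 3])

# np.exp applies the exponential to every element in one call ...
vectorized = np.exp(x)
# ... which matches calling math.exp on each element in a Python loop
looped = np.array([math.exp(v) for v in x])
print(np.allclose(vectorized, looped))  # True

# With an array, "+ 3" is broadcast to every element ...
print(x + 3)  # [4 5 6]
# ... while on a plain Python list, "* 2" means repetition, not arithmetic
print([1, 2, 3] * 2)  # [1, 2, 3, 1, 2, 3]
```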

△3: Implement the sigmoid function using numpy

(x can now be a real number, a vector, or a matrix.)

import numpy as np

def sigmoid(x):
    # np.exp works element-wise, so x may be a scalar, a vector, or a matrix
    s = 1 / (1 + np.exp(-x))
    return s

x = np.array([1, 2, 3])
sigmoid(x)

out: array([0.73105858, 0.88079708, 0.95257413])
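Because np.exp is applied element-wise, the same sigmoid also handles a scalar and a matrix, and the output keeps the input's shape. The sketch below also uses the identity $\sigma(-x) = 1 - \sigma(x)$ as a quick sanity check; the matrix m is made up for illustration.

```python
import numpy as np


def sigmoid(x):
    """Element-wise sigmoid for a scalar or a numpy array of any shape."""
    return 1 / (1 + np.exp(-x))


print(sigmoid(0))  # 0.5

m = np.array([[0, 1],
              [-1, 2]])
s = sigmoid(m)
print(s.shape)  # (2, 2): the shape of the input is preserved

# sigma(-x) = 1 - sigma(x) holds element by element
print(np.allclose(sigmoid(-m), 1 - s))  # True
```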

1.2 - Sigmoid gradient

△1: $\text{sigmoid\_derivative}(x) = \sigma'(x) = \sigma(x)\,(1 - \sigma(x))$

Writing $s = \sigma(x)$, compute $\sigma'(x) = s\,(1 - s)$:

def sigmoid_derivative(x):
    s = 1 / (1 + np.exp(-x))  # s = sigmoid(x)
    ds = s * (1 - s)          # the derivative expressed through s
    return ds

x = np.array([1, 2, 3])
print("sigmoid_derivative(x) = " + str(sigmoid_derivative(x)))

out: sigmoid_derivative(x) = [0.19661193 0.10499359 0.04517666]
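A common way to check a hand-derived gradient such as $s(1-s)$ is to compare it with a central finite difference, $\frac{\sigma(x+\varepsilon)-\sigma(x-\varepsilon)}{2\varepsilon}$. A minimal sketch, where the step eps = 1e-5 is an arbitrary choice:

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1 - s)


x = np.array([1.0, 2.0, 3.0])
eps = 1e-5  # small step; the exact value is an arbitrary choice

# Central difference approximation of the derivative at each point
numeric = (sigmoid(x + eps) - sigmoid(x - eps)) / (2 * eps)
print(np.allclose(numeric, sigmoid_derivative(x)))  # True

# The derivative peaks at x = 0, where s = 0.5 and s * (1 - s) = 0.25
print(sigmoid_derivative(0.0))  # 0.25
```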

1.3 - Reshaping arrays

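△: X.reshape(...) returns the elements of X arranged in a different shape (the total number of elements must stay the same). For example, an array v of shape (a, b, c) can be turned into one of shape (a*b, c) with:

v = v.reshape((v.shape[0] * v.shape[1], v.shape[2]))

# v.shape[0] = a; v.shape[1] = b; v.shape[2] = c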
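A small sketch of the (a, b, c) → (a*b, c) reshape on a concrete array, using np.arange so the element order is easy to follow; passing -1 lets numpy infer one dimension from the total size:

```python
import numpy as np

v = np.arange(24).reshape((2, 3, 4))  # a = 2, b = 3, c = 4

w = v.reshape((v.shape[0] * v.shape[1], v.shape[2]))
print(w.shape)  # (6, 4)

# -1 asks numpy to work out that dimension (24 / 4 = 6)
print(v.reshape(-1, v.shape[2]).shape)  # (6, 4)

# reshape only changes the shape: the elements keep their row-major order
print(np.array_equal(w.ravel(), v.ravel()))  # True
print(w[1])  # [4 5 6 7]
```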

def image2vector(image):
    """Flatten an array of shape (length, height, depth) into a column vector of shape (length*height*depth, 1)."""
    v = image.reshape((image.shape[0] * image.shape[1] * image.shape[2], 1))
    return v

# a 3 x 3 x 2 array standing in for an image
image = np.array([[[0.67826139, 0.29380381],
                   [0.90714982, 0.52835647],
                   [0.4215251, 0.45017551]],
                  [[0.92814219, 0.96677647],
                   [0.85304703, 0.52351845],
                   [0.19981397, 0.27417313]],
                  [[0.60659855, 0.00533165],
                   [0.10820313, 0.49978937],
                   [0.34144279, 0.94630077]]])

print("image2vector(image) = " + str(image2vector(image)))

out:
[[ 0.67826139]
 [ 0.29380381]
 [ 0.90714982]
 [ 0.52835647]
 [ 0.4215251 ]
 [ 0.45017551]
 [ 0.92814219]
 [ 0.96677647]
 [ 0.85304703]
 [ 0.52351845]
 [ 0.19981397]
 [ 0.27417313]
 [ 0.60659855]
 [ 0.00533165]
 [ 0.10820313]
 [ 0.49978937]
 [ 0.34144279]
 [ 0.94630077]]
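image2vector is equivalent to image.reshape(-1, 1), and reshaping back recovers the original array. When many images are stored together, the same idea flattens each one into a column. The sketch below assumes a batch laid out as (m, height, width, channels); that layout is a common convention, not something the text above specifies.

```python
import numpy as np


def image2vector(image):
    """Flatten a (length, height, depth) array into a (length*height*depth, 1) column."""
    return image.reshape((image.shape[0] * image.shape[1] * image.shape[2], 1))


image = np.random.rand(3, 3, 2)
v = image2vector(image)
print(v.shape)  # (18, 1)
print(np.array_equal(v, image.reshape(-1, 1)))  # True
print(np.array_equal(v.reshape(image.shape), image))  # True: reshaping back is lossless

# Assumed layout: a batch of m = 5 images, each 3 x 3 x 2
batch = np.random.rand(5, 3, 3, 2)
flat = batch.reshape(batch.shape[0], -1).T  # one flattened image per column
print(flat.shape)  # (18, 5)
print(np.array_equal(flat[:, 0:1], image2vector(batch[0])))  # True
```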

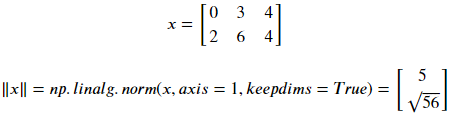

1.4 - Normalizing rows

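△: Normalize each row of x to unit length by dividing it by its norm, $\frac{x}{\lVert x \rVert}$, where $\lVert x \rVert$ holds the Euclidean norm of each row (np.linalg.norm(x, axis=1, keepdims=True)).

For example, if $x = \begin{bmatrix} 0 & 3 & 4 \\ 1 & 6 & 4 \end{bmatrix}$,

then $\lVert x \rVert = \begin{bmatrix} 5 \\ \sqrt{53} \end{bmatrix}$ and $\frac{x}{\lVert x \rVert} = \begin{bmatrix} 0 & \frac{3}{5} & \frac{4}{5} \\ \frac{1}{\sqrt{53}} & \frac{6}{\sqrt{53}} & \frac{4}{\sqrt{53}} \end{bmatrix}$.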

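def normalizeRows(x):
    # Euclidean norm of each row, kept as a (rows, 1) column so it broadcasts across the columns
    x_norm = np.linalg.norm(x, axis=1, keepdims=True)
    # divide every row by its own norm
    x = x / x_norm
    return x

x = np.array([
    [0, 3, 4],
    [1, 6, 4]])

print("normalizeRows(x) = " + str(normalizeRows(x)))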

out:

normalizeRows(x) = [[0. 0.6 0.8 ]
 [0.13736056 0.82416338 0.54944226]]
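keepdims=True matters here: it keeps the row norms as a (2, 1) column, which broadcasts across the three columns of x. Without it the norms have shape (2,), and dividing a (2, 3) array by them fails. A short check on the same x:

```python
import numpy as np

x = np.array([[0, 3, 4],
              [1, 6, 4]])

norms = np.linalg.norm(x, axis=1, keepdims=True)
print(norms.shape)  # (2, 1): one norm per row, kept as a column

unit = x / norms  # (2, 3) / (2, 1): each row is divided by its own norm
print(np.allclose(np.linalg.norm(unit, axis=1), 1.0))  # True: every row has length 1

flat_norms = np.linalg.norm(x, axis=1)  # shape (2,) without keepdims
try:
    x / flat_norms  # trailing dimensions 3 and 2 are incompatible
except ValueError:
    print("shapes (2, 3) and (2,) cannot be broadcast together")
```

Note that a row of all zeros has norm 0, so dividing by it would produce nan for that row.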

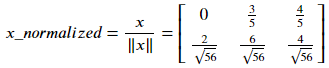

1.5 - Broadcasting and the softmax function

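△: Implement a softmax function using numpy. For an (m, n) matrix x, softmax is applied to each row: $\text{softmax}(x)_{ij} = \frac{e^{x_{ij}}}{\sum_{k} e^{x_{ik}}}$, so every row of the result is non-negative and sums to 1. Dividing the (m, n) matrix of exponentials by the (m, 1) column of row sums is an example of broadcasting.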

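def softmax(x):
    x_exp = np.exp(x)                             # element-wise exponentials, shape (m, n)
    x_sum = np.sum(x_exp, axis=1, keepdims=True)  # row sums, shape (m, 1)
    s = x_exp / x_sum                             # broadcasting divides each row by its sum
    return s

x = np.array([
    [9, 2, 5, 0, 0],
    [7, 5, 0, 0, 0]])

print("softmax(x) = " + str(softmax(x)))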

out:

softmax(x) = [[9.80897665e-01 8.94462891e-04 1.79657674e-02 1.21052389e-04
  1.21052389e-04]
 [8.78679856e-01 1.18916387e-01 8.01252314e-04 8.01252314e-04
  8.01252314e-04]]
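np.exp overflows for large inputs (np.exp(1000) is inf), which turns the softmax above into nan. A common remedy, not part of the code above, subtracts each row's maximum before exponentiating; the constant cancels in the ratio, so the result is mathematically unchanged. A minimal sketch (stable_softmax is a hypothetical name):

```python
import numpy as np


def stable_softmax(x):
    """Row-wise softmax that subtracts each row's max to keep np.exp from overflowing."""
    shifted = x - np.max(x, axis=1, keepdims=True)  # largest entry of each row becomes 0
    x_exp = np.exp(shifted)
    return x_exp / np.sum(x_exp, axis=1, keepdims=True)


x = np.array([[9, 2, 5, 0, 0],
              [7, 5, 0, 0, 0]])
s = stable_softmax(x)
print(np.allclose(s.sum(axis=1), 1.0))  # True: each row is a probability distribution

big = np.array([[1000.0, 1000.0]])
print(stable_softmax(big))  # [[0.5 0.5]]
```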

2 - Vectorization

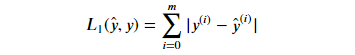

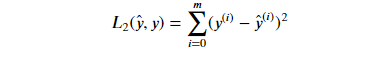

2.1 - Implement the L1 and L2 loss functions

△1: Implement the numpy vectorized version of the L1 loss, $L_1(\hat{y}, y) = \sum_{i=0}^{m-1} \lvert y^{(i)} - \hat{y}^{(i)} \rvert$

import numpy as np

def L1(yhat, y):
    # sum of the absolute differences over all m examples
    loss = np.sum(np.abs(y - yhat))
    return loss

yhat = np.array([.9, 0.2, 0.1, .4, .9])
y = np.array([1, 0, 0, 1, 1])

print("L1 = " + str(L1(yhat, y)))

out: L1 = 1.1

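△2: Implement the numpy vectorized version of the L2 loss, $L_2(\hat{y}, y) = \sum_{i=0}^{m-1} (y^{(i)} - \hat{y}^{(i)})^2$

def L2(yhat, y):
    # sum of the squared differences over all m examples
    loss = np.sum((y - yhat) * (y - yhat))
    return loss

yhat = np.array([.9, 0.2, 0.1, .4, .9])
y = np.array([1, 0, 0, 1, 1])

print("L2 = " + str(L2(yhat, y)))

out: L2 = 0.43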
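The sum of squares in L2 is also the inner product of the difference vector with itself, so np.dot gives the same value. A brief check on the same data:

```python
import numpy as np

yhat = np.array([.9, 0.2, 0.1, .4, .9])
y = np.array([1, 0, 0, 1, 1])
diff = y - yhat

l1 = np.sum(np.abs(diff))     # L1: sum of absolute differences
l2_sum = np.sum(diff * diff)  # L2 written as a sum of squares
l2_dot = np.dot(diff, diff)   # L2 written as an inner product
print(np.isclose(l2_sum, l2_dot))  # True
print(round(float(l1), 2), round(float(l2_dot), 2))  # 1.1 0.43
```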

2.2 - The difference between for loops and vectorization

△1: For loops

import time
import numpy as np

x1 = [9, 2, 5, 0, 0, 7, 5, 0, 0, 0, 9, 2, 5, 0, 0]
x2 = [9, 2, 2, 9, 0, 9, 2, 5, 0, 0, 9, 2, 5, 0, 0]

### CLASSIC DOT PRODUCT OF VECTORS IMPLEMENTATION ###
tic = time.process_time()
dot = 0
for i in range(len(x1)):
    dot += x1[i] * x2[i]
toc = time.process_time()
print("dot = " + str(dot) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

### CLASSIC OUTER PRODUCT IMPLEMENTATION ###
tic = time.process_time()
outer = np.zeros((len(x1), len(x2)))  # a len(x1) x len(x2) matrix filled with zeros
for i in range(len(x1)):
    for j in range(len(x2)):
        outer[i, j] = x1[i] * x2[j]
toc = time.process_time()
print("outer = " + str(outer) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

### CLASSIC ELEMENTWISE IMPLEMENTATION ###
tic = time.process_time()
mul = np.zeros(len(x1))
for i in range(len(x1)):
    mul[i] = x1[i] * x2[i]
toc = time.process_time()
print("elementwise multiplication = " + str(mul) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

### CLASSIC GENERAL DOT PRODUCT IMPLEMENTATION ###
W = np.random.rand(3, len(x1))  # random 3 x len(x1) numpy array (values differ on every run)
tic = time.process_time()
gdot = np.zeros(W.shape[0])
for i in range(W.shape[0]):
    for j in range(len(x1)):
        gdot[i] += W[i, j] * x1[j]
toc = time.process_time()
print("gdot = " + str(gdot) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

out:

dot = 278
----- Computation time = 0.0ms

outer = [[81. 18. 18. 81. 0. 81. 18. 45. 0. 0. 81. 18. 45. 0. 0.]
 [18. 4. 4. 18. 0. 18. 4. 10. 0. 0. 18. 4. 10. 0. 0.]
 [45. 10. 10. 45. 0. 45. 10. 25. 0. 0. 45. 10. 25. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [63. 14. 14. 63. 0. 63. 14. 35. 0. 0. 63. 14. 35. 0. 0.]
 [45. 10. 10. 45. 0. 45. 10. 25. 0. 0. 45. 10. 25. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [81. 18. 18. 81. 0. 81. 18. 45. 0. 0. 81. 18. 45. 0. 0.]
 [18. 4. 4. 18. 0. 18. 4. 10. 0. 0. 18. 4. 10. 0. 0.]
 [45. 10. 10. 45. 0. 45. 10. 25. 0. 0. 45. 10. 25. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]]
----- Computation time = 0.0ms

elementwise multiplication = [81. 4. 10. 0. 0. 63. 10. 0. 0. 0. 81. 4. 25. 0. 0.]
----- Computation time = 0.0ms

gdot = [18.27150184 18.97955343 27.88885399]
----- Computation time = 0.0ms

△2: Vectorization

x1 = [9, 2, 5, 0, 0, 7, 5, 0, 0, 0, 9, 2, 5, 0, 0]
x2 = [9, 2, 2, 9, 0, 9, 2, 5, 0, 0, 9, 2, 5, 0, 0]

### VECTORIZED DOT PRODUCT OF VECTORS ###
tic = time.process_time()
dot = np.dot(x1, x2)
toc = time.process_time()
print("dot = " + str(dot) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

### VECTORIZED OUTER PRODUCT ###
tic = time.process_time()
outer = np.outer(x1, x2)
toc = time.process_time()
print("outer = " + str(outer) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

### VECTORIZED ELEMENTWISE MULTIPLICATION ###
tic = time.process_time()
mul = np.multiply(x1, x2)
toc = time.process_time()
print("elementwise multiplication = " + str(mul) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

### VECTORIZED GENERAL DOT PRODUCT ###
# reuses the random W created in the for-loop version, so gdot matches that result
tic = time.process_time()
gdot = np.dot(W, x1)
toc = time.process_time()
print("gdot = " + str(gdot) + "\n ----- Computation time = " + str(1000 * (toc - tic)) + "ms")

out:

dot = 278
----- Computation time = 0.0ms

outer = [[81 18 18 81 0 81 18 45 0 0 81 18 45 0 0]
 [18 4 4 18 0 18 4 10 0 0 18 4 10 0 0]
 [45 10 10 45 0 45 10 25 0 0 45 10 25 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [63 14 14 63 0 63 14 35 0 0 63 14 35 0 0]
 [45 10 10 45 0 45 10 25 0 0 45 10 25 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [81 18 18 81 0 81 18 45 0 0 81 18 45 0 0]
 [18 4 4 18 0 18 4 10 0 0 18 4 10 0 0]
 [45 10 10 45 0 45 10 25 0 0 45 10 25 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]]
----- Computation time = 0.0ms

elementwise multiplication = [81 4 10 0 0 63 10 0 0 0 81 4 25 0 0]
----- Computation time = 0.0ms

gdot = [18.27150184 18.97955343 27.88885399]
----- Computation time = 0.0ms
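With only 15 elements, every timing above shows 0.0ms, so the comparison says little about speed. A sketch on larger random vectors, using time.perf_counter for finer resolution; the vector size of 200,000 and the seed are arbitrary choices, and the measured times will vary by machine:

```python
import time

import numpy as np

rng = np.random.default_rng(0)
a = rng.random(200_000)
b = rng.random(200_000)

# Dot product with an explicit Python loop
tic = time.perf_counter()
loop_dot = 0.0
for i in range(len(a)):
    loop_dot += a[i] * b[i]
loop_ms = 1000 * (time.perf_counter() - tic)

# The same dot product, vectorized
tic = time.perf_counter()
vec_dot = np.dot(a, b)
vec_ms = 1000 * (time.perf_counter() - tic)

print(f"for loop: {loop_ms:.2f} ms, np.dot: {vec_ms:.2f} ms")
print(np.isclose(loop_dot, vec_dot))  # True: same result up to rounding
```

The two results can differ in the last few digits because the loop and np.dot add the terms in different orders.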

