Works on both Linux and Windows. If you find it useful, a like is appreciated~

Install Python 3.11

Update: the scripts also run fine on Python 3.6.

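```bash
cd /root
wget https://www.python.org/ftp/python/3.11.0/Python-3.11.0.tgz
tar -xzf Python-3.11.0.tgz

# Build dependencies (on CentOS 7 the openssl11 packages come from the EPEL repository)
yum -y install epel-release
yum -y install gcc zlib zlib-devel libffi libffi-devel
yum -y install readline-devel
yum -y install openssl-devel openssl11 openssl11-devel

# Python 3.11 needs OpenSSL 1.1.1+, but CentOS 7 ships 1.0.2,
# so point the compiler and linker at openssl11 instead
export CFLAGS=$(pkg-config --cflags openssl11)
export LDFLAGS=$(pkg-config --libs openssl11)

cd /root/Python-3.11.0
./configure --prefix=/usr/python --with-ssl
make
make install

# Make the new interpreter and pip available as python3 / pip3
ln -s /usr/python/bin/python3 /usr/bin/python3
ln -s /usr/python/bin/pip3 /usr/bin/pip3
```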
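A missing or too-old OpenSSL does not stop `make`; it only prints a warning that the `ssl` module could not be built, and the problem shows up later when `pip3` cannot reach HTTPS package indexes. A quick sanity check you could save as, say, `check_ssl.py` (a hypothetical name) and run with `/usr/bin/python3 check_ssl.py`. If `_ssl` was not built, the `import ssl` line itself fails with `ModuleNotFoundError`:

```python
import ssl
import sys


def build_report():
    """Return the interpreter version, the linked OpenSSL version string,
    and whether that OpenSSL is at least 1.1.1 (what Python 3.10+ requires)."""
    python_version = "{}.{}.{}".format(*sys.version_info[:3])
    # OPENSSL_VERSION_INFO is a tuple: (major, minor, fix, patch, status)
    openssl_ok = ssl.OPENSSL_VERSION_INFO[:3] >= (1, 1, 1)
    return python_version, ssl.OPENSSL_VERSION, openssl_ok


if __name__ == "__main__":
    version, openssl, ok = build_report()
    print(f"Python {version} with {openssl}")
    print("OpenSSL 1.1.1+ detected" if ok else "WARNING: OpenSSL is older than 1.1.1")
```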

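Runtime dependencies

Python 3.11, the latest Firefox, and geckodriver (the Firefox driver) 0.33.

```bash
# -i installs from the Tsinghua PyPI mirror
pip3 install requests beautifulsoup4 selenium Pillow urllib3 -i https://pypi.tuna.tsinghua.edu.cn/simple
```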
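Two of these packages are imported under different names than the ones passed to pip (`beautifulsoup4` as `bs4`, `Pillow` as `PIL`). A small sketch to confirm everything is importable from the interpreter that will run the scripts; the `REQUIRED` mapping and the `missing_packages` helper are illustrative, not part of the original post:

```python
import importlib.util

# pip package name -> module name used in `import`
REQUIRED = {
    "requests": "requests",
    "beautifulsoup4": "bs4",
    "selenium": "selenium",
    "Pillow": "PIL",
    "urllib3": "urllib3",
}


def missing_packages(required):
    """Return the pip names whose top-level module cannot be found."""
    return [pip_name for pip_name, module in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    print("All packages found" if not missing else "Missing: " + ", ".join(missing))
```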

CentOS 7 comes with Firefox preinstalled; uninstall it first, then install the new version:

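```bash
sudo yum -y remove firefox
sudo yum -y install firefox
```

Then go to the geckodriver releases page (https://github.com/mozilla/geckodriver/releases), download geckodriver-v0.33.0-linux64.tar.gz, and put the binary on the PATH:

```bash
tar -zxvf geckodriver-v0.33.0-linux64.tar.gz
mv geckodriver /usr/bin
```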
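Selenium has to find geckodriver on the `PATH`. A sketch that locates it and reads its version, assuming the first line of `geckodriver --version` looks like `geckodriver 0.33.0 (...)`; both function names are hypothetical:

```python
import re
import shutil
import subprocess


def parse_geckodriver_version(output):
    """Pull (major, minor, patch) out of `geckodriver --version` output, or None."""
    match = re.search(r"geckodriver\s+(\d+)\.(\d+)\.(\d+)", output)
    return tuple(int(part) for part in match.groups()) if match else None


def installed_geckodriver_version():
    """Return the version of the geckodriver found on PATH, or None if it is missing."""
    path = shutil.which("geckodriver")
    if path is None:
        return None
    # universal_newlines=True gives text output and also works on Python 3.6
    result = subprocess.run([path, "--version"], stdout=subprocess.PIPE,
                            universal_newlines=True)
    return parse_geckodriver_version(result.stdout)


if __name__ == "__main__":
    version = installed_geckodriver_version()
    if version is None:
        print("geckodriver not found on PATH")
    else:
        print("geckodriver " + ".".join(map(str, version)))
```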

```bash
pip3 install selenium
pip3 install pillow
```

Clone a web page

```python
import argparse
import base64
import os
import time
import uuid
from os.path import abspath, dirname
from urllib.parse import unquote_to_bytes, urljoin, urlparse

import requests
import urllib3
from bs4 import BeautifulSoup
from selenium import webdriver
from urllib3.exceptions import InsecureRequestWarning

# Silence the warnings caused by not verifying SSL certificates (verify=False below)
urllib3.disable_warnings(InsecureRequestWarning)


# Build the local directory structure and file path for a resource from its URL
def create_local_path(base_url, folder, resource_url):
    # Data URIs have no path: store them under a unique name in the output folder
    if resource_url.startswith('data:'):
        mime_info, _ = resource_url.split(',', 1)
        mime_type = mime_info.split(';')[0].split(':')[1]
        # e.g. image/png -> png, image/svg+xml -> svg
        ext = mime_type.split('/')[1].split('+')[0] if '/' in mime_type else 'png'
        # A millisecond timestamp can repeat for URIs handled back to back; a UUID cannot
        filename = f"datauri_{uuid.uuid4().hex}.{ext}"
        return os.path.join(folder, filename)

    # Resolve the URL and mirror its path as folders under the output folder
    parsed_url = urlparse(urljoin(base_url, resource_url))
    path_segments = parsed_url.path.lstrip('/').split('/')
    filename = path_segments[-1] or 'index.html'  # URLs ending in '/' have no file name
    local_dir = os.path.join(folder, *path_segments[:-1])
    os.makedirs(local_dir, exist_ok=True)
    return os.path.join(local_dir, filename)


# Download a resource and save it locally, keeping the original site's directory structure
def download_resource(base_url, folder, resource_url, retries=2):
    local_path = create_local_path(base_url, folder, resource_url)
    if os.path.exists(local_path):
        return local_path  # already downloaded

    for attempt in range(1, retries + 1):
        try:
            if resource_url.startswith('data:'):
                # Data URIs are either base64-encoded or percent-encoded (e.g. inline SVG)
                header, encoded = resource_url.split(',', 1)
                if header.endswith(';base64'):
                    data = base64.b64decode(encoded)
                else:
                    data = unquote_to_bytes(encoded)
                with open(local_path, 'wb') as f:
                    f.write(data)
                return local_path

            # Ordinary URL: stream the response to disk (timeout avoids hanging forever)
            response = requests.get(urljoin(base_url, resource_url), stream=True,
                                    verify=False, timeout=30)
            if response.status_code == 200:
                with open(local_path, 'wb') as f:
                    for chunk in response.iter_content(chunk_size=8192):
                        f.write(chunk)
                return local_path
            print(f"Attempt {attempt}: Error downloading {resource_url}: Status code {response.status_code}")
        except Exception as e:
            print(f"Attempt {attempt}: An error occurred while downloading {resource_url}: {e}")
        if attempt < retries:
            time.sleep(2)  # wait 2 seconds before retrying

    print(f"Failed to download {resource_url} after {retries} attempts. Skipping...")
    return local_path


# Download every resource referenced by tag[attribute] and point the HTML at the local copy
def update_links(soup, tag, attribute, folder, base_url):
    for element in soup.find_all(tag, {attribute: True}):
        local_path = download_resource(base_url, folder, element[attribute])
        # HTML needs '/' separators, even on Windows where os.path uses '\'
        element[attribute] = os.path.relpath(local_path, folder).replace(os.sep, '/')


def clone_website(url, output_folder_name):
    # The output folder is created next to this script
    script_dir = dirname(abspath(__file__))
    output_folder = os.path.join(script_dir, output_folder_name)
    os.makedirs(output_folder, exist_ok=True)

    # Headless Firefox renders the page, so JavaScript-generated markup is captured too
    options = webdriver.FirefoxOptions()
    options.add_argument('--headless')
    driver = webdriver.Firefox(options=options)

    soup = None
    try:
        driver.get(url)
        base_url = driver.current_url  # resolve relative links against the final URL after redirects
        soup = BeautifulSoup(driver.page_source, 'html.parser')

        # Download stylesheets/icons, scripts and images, and rewrite their links
        update_links(soup, 'link', 'href', output_folder, base_url)
        update_links(soup, 'script', 'src', output_folder, base_url)
        update_links(soup, 'img', 'src', output_folder, base_url)

        # For hyperlinks, only download the ones that point to document files
        doc_extensions = ('.pdf', '.docx', '.doc', '.pptx', '.ppt', '.xlsx', '.xls')
        for a_tag in soup.find_all('a', href=True):
            href = a_tag['href']
            if href.lower().endswith(doc_extensions):
                download_resource(base_url, output_folder, href)
    except Exception as e:
        print(f"An error occurred while processing the page: {e}")
    finally:
        driver.quit()  # always close the browser

    # Write the rewritten HTML (skipped if the page could not be loaded at all)
    if soup is not None:
        with open(os.path.join(output_folder, 'index.html'), 'w', encoding='utf-8') as file:
            file.write(str(soup))


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description='Clone a website for offline viewing.')
    parser.add_argument('target_url', help='The URL of the website to clone.')
    args = parser.parse_args()

    # Name of the output folder created next to this script; change it as needed
    output_folder_name = "tempUrlCopyFolder"
    clone_website(args.target_url, output_folder_name)
```
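The cloner maps each resource purely by its URL path, so two hosts that both serve `/static/app.js` end up in the same local file, and data URIs can carry either base64 or percent-encoded payloads. A minimal sketch of two standalone helpers addressing this, assuming you are fine with the host name becoming an extra folder level; `decode_data_uri` and `url_to_local_path` are hypothetical names, not part of the script above:

```python
import base64
import posixpath
from urllib.parse import unquote_to_bytes, urljoin, urlparse


def decode_data_uri(uri):
    """Split a data: URI into (mime_type, payload bytes), per RFC 2397.

    Handles both ';base64' payloads and plain percent-encoded ones.
    """
    header, payload = uri.split(",", 1)
    params = header[len("data:"):].split(";")
    mime_type = params[0] or "text/plain"  # RFC 2397 default when the type is omitted
    if params[-1] == "base64":
        return mime_type, base64.b64decode(payload)
    return mime_type, unquote_to_bytes(payload)


def url_to_local_path(base_url, resource_url, folder):
    """Map a resource URL to folder/<host>/<path>.

    Directory-style URLs get index.html, '..' segments are dropped so nothing
    is written outside folder, and ':' in host:port becomes '_' (invalid on
    Windows). Returns a '/'-separated path, which Windows file APIs accept too.
    """
    parsed = urlparse(urljoin(base_url, resource_url))
    path = parsed.path
    if not path or path.endswith("/"):
        path += "index.html"
    segments = [s for s in path.split("/") if s not in ("", ".", "..")]
    host = parsed.netloc.replace(":", "_")
    return posixpath.join(folder, host, *segments)


if __name__ == "__main__":
    print(decode_data_uri("data:text/plain;base64,SGVsbG8="))
    print(url_to_local_path("https://example.com/a/page.html", "img/logo.png", "out"))
```

Running it prints `('text/plain', b'Hello')` and `out/example.com/a/img/logo.png`. Query strings are still ignored here, as in the original script, so `app.js?v=1` and `app.js?v=2` share one file.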

Take a full-page (long) screenshot of a web page

```python
# Usage: python3 xxx.py <url>
import io
import os
import sys
import time

import urllib3
from PIL import Image
from selenium import webdriver
from urllib3.exceptions import InsecureRequestWarning

urllib3.disable_warnings(InsecureRequestWarning)


def capture_full_page_screenshot(url, output_file):
    # Start a headless Firefox
    options = webdriver.FirefoxOptions()
    options.add_argument('--headless')
    driver = webdriver.Firefox(options=options)

    # Browser window size
    window_width = 1920
    window_height = 1080
    driver.set_window_size(window_width, window_height)

    try:
        driver.get(url)
        time.sleep(5)  # give the page time to load its content

        # Total page height, and the real viewport height (can be smaller than the window)
        total_height = driver.execute_script("return document.documentElement.scrollHeight")
        viewport_height = driver.execute_script("return window.innerHeight")
        overlap = viewport_height // 10  # scroll a bit less than one screen per step

        slices = []  # (scroll position, image) pairs
        offset = 0
        while True:
            driver.execute_script(f"window.scrollTo(0, {offset});")
            time.sleep(2)  # wait for lazily loaded content
            # The browser clamps scrolling at the bottom, so read back the real position
            scroll_y = int(round(driver.execute_script("return window.pageYOffset")))
            png = driver.get_screenshot_as_png()
            slices.append((scroll_y, Image.open(io.BytesIO(png))))
            if offset + viewport_height >= total_height:
                break
            offset += viewport_height - overlap

        # Stitch: paste each slice where it was taken; later slices cover the overlap
        screenshot = Image.new('RGB', (slices[0][1].width, total_height))
        for scroll_y, img in slices:
            screenshot.paste(img, (0, scroll_y))

        # Save the screenshot next to the script
        screenshot.save(output_file)
        print('Image saved successfully')
    except Exception as e:
        print(f'An error occurred: {e}')
    finally:
        driver.quit()  # always close the browser


if __name__ == "__main__":
    if len(sys.argv) > 1:
        url = sys.argv[1]
    else:
        print('Usage: python3 screenshot.py <URL>')
        sys.exit(1)

    # Write tempUrlImg.png into the directory that contains this script
    script_dir = os.path.dirname(os.path.abspath(__file__))
    output_file = os.path.join(script_dir, 'tempUrlImg.png')
    capture_full_page_screenshot(url, output_file)
```

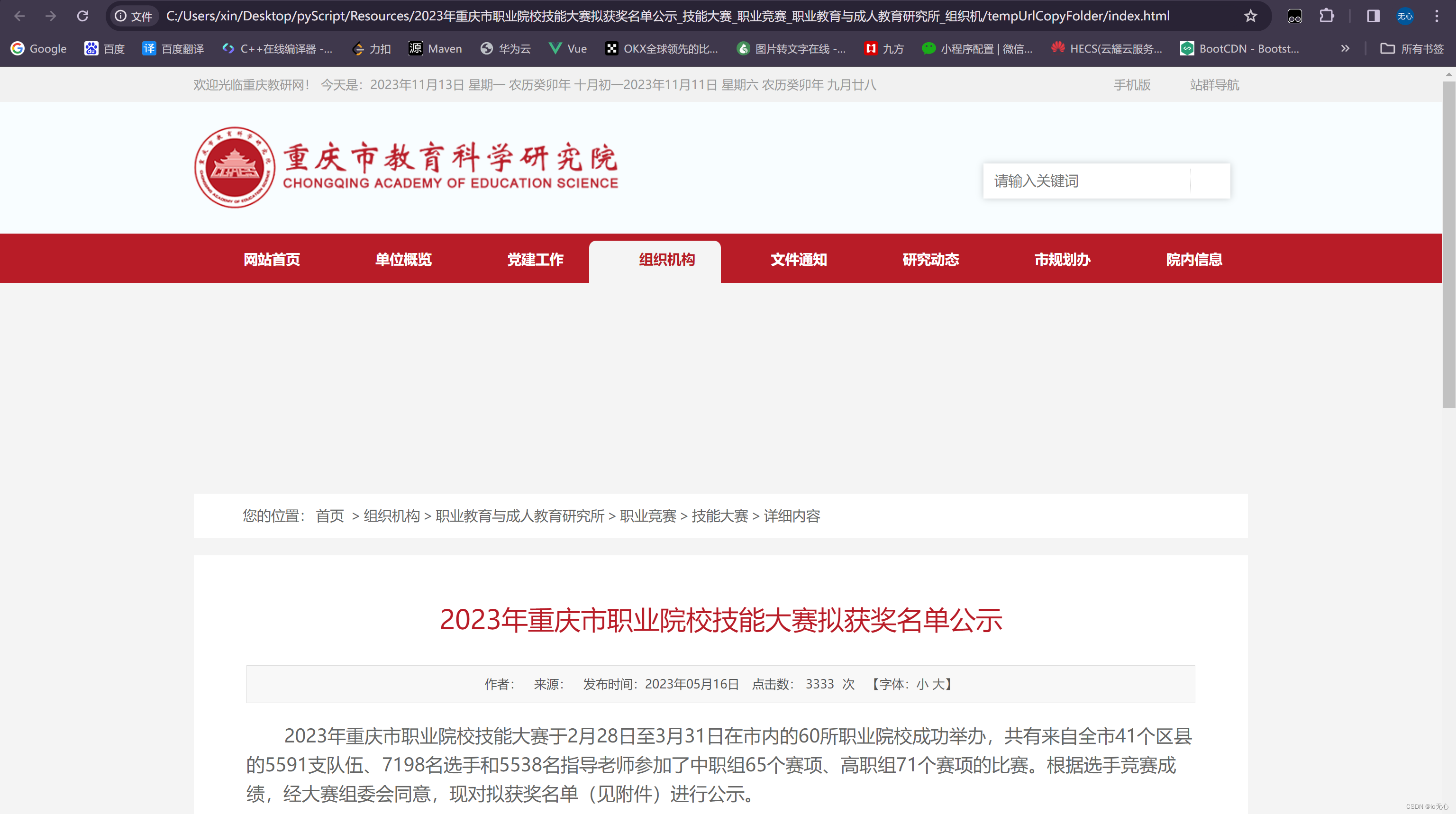

效果如图:

使用格式:python3 xxx.py url
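The screenshot loop advances by one viewport minus a 10% overlap and relies on the browser clamping the final `scrollTo` at the bottom of the page. The scroll positions it ends up at can be computed ahead of time, which makes the stitching arithmetic easy to check; `plan_scroll_offsets` is a hypothetical helper, not part of the script. (With Selenium 4, Firefox's driver also offers `save_full_page_screenshot()`, which captures the whole page without any stitching.)

```python
def plan_scroll_offsets(total_height, viewport_height, overlap):
    """Scroll positions (after browser clamping) needed to cover total_height pixels.

    Each step advances by viewport_height - overlap; the last position is
    clamped to total_height - viewport_height, as browsers clamp scrollTo().
    Pasting every slice at its own offset rebuilds the full page.
    """
    if not 0 <= overlap < viewport_height:
        raise ValueError("overlap must be in [0, viewport_height)")
    max_scroll = max(total_height - viewport_height, 0)
    step = viewport_height - overlap
    offsets = []
    y = 0
    while True:
        offsets.append(min(y, max_scroll))
        if y >= max_scroll:
            return offsets
        y += step


if __name__ == "__main__":
    print(plan_scroll_offsets(3000, 1080, 108))
```

For a 3000 px page in a 1080 px viewport with a 108 px overlap this prints `[0, 972, 1920]`: the third scroll would go to 1944 but is clamped to 3000 - 1080 = 1920, so that slice is pasted at 1920 and ends exactly at the bottom of the page.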
