@article{samangouei2018defense-gan:,

title={Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models.},

author={Samangouei, Pouya and Kabkab, Maya and Chellappa, Rama},

journal={arXiv: Computer Vision and Pattern Recognition},

year={2018}}

概

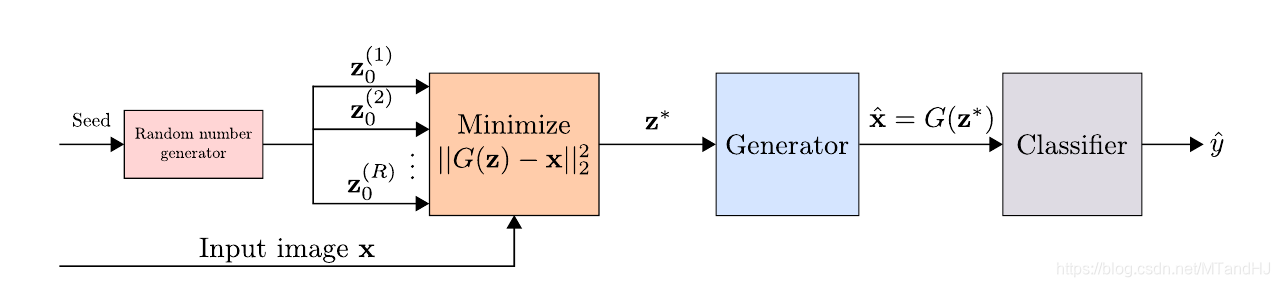

本文介绍了一种针对对抗样本的defense方法, 主要是利用GAN训练的生成器, 将样本 x x x投影到干净数据集上 x ^ \hat{x} x^.

主要内容

我们知道, GAN的损失函数到达最优时, p d a t a = p G p_{data}=p_G pdata=pG, 又倘若对抗样本的分布是脱离于 p d a t a p_{data} pdata的, 则如果我们能将 x x x投影到真实数据的分布 p d a t a p_{data} pdata(如果最优也就是 p G p_G pG), 则我们不就能找到一个防御方法了吗?

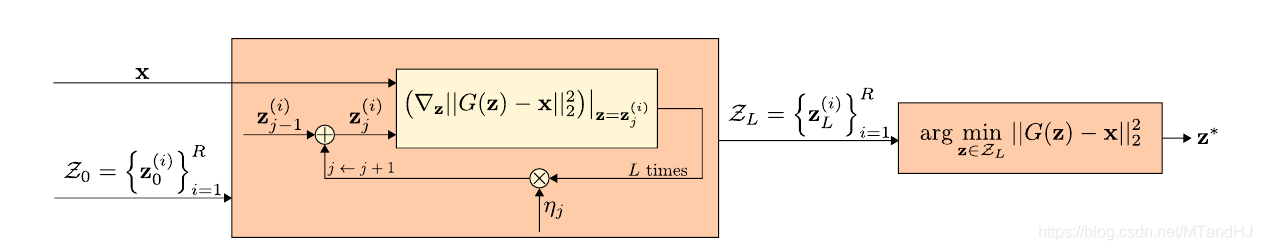

对于每一个样本, 首先初始化

R

R

R个随机种子

z

0

(

1

)

,

…

,

z

0

(

R

)

z_0^{(1)}, \ldots, z_0^{(R)}

z0(1),…,z0(R), 对每一个种子, 利用梯度下降(

L

L

L步)以求最小化

min

∥

G

(

z

)

−

x

∥

2

2

,

(DGAN)

\tag{DGAN} \min \quad \|G(z)-x\|_2^2,

min∥G(z)−x∥22,(DGAN)

其中

G

(

z

)

G(z)

G(z)为利用训练样本训练的生成器.

得到 R R R个点 z ∗ ( 1 ) , … , z ∗ ( R ) z_*^{(1)},\ldots, z_*^{(R)} z∗(1),…,z∗(R), 设使得(DGAN)最小的为 z ∗ z^* z∗, 以及 x ^ = G ( z ∗ ) \hat{x} = G(z^*) x^=G(z∗), 则 x ^ \hat{x} x^就是我们要的, 样本 x x x在普通样本数据中的投影. 将 x ^ \hat{x} x^喂入网络, 判断其类别.

另外, 作者还在实验中说明, 可以直接用 ∥ G ( z ∗ ) − x ∥ 2 2 < > θ \|G(z^*)-x\|_2^2 \frac{<}{>} \theta ∥G(z∗)−x∥22><θ 来判断是否是对抗样本, 并计算AUC指标, 结果不错.

注: 这个方法, 利用梯度方法更新的难处在于, x → x ^ x \rightarrow \hat{x} x→x^这一过程, 包含了 L L L步的内循环, 如果直接反向传梯度会造成梯度爆炸或者消失.

4216

4216

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?