This post covers onnxruntime inference on the CPU only. Nothing here involves CUDA.

Introduction

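I have been looking into speeding up inference lately, but my C++ is weak, and many online tutorials left me confused and are not beginner-friendly. This post records the whole process, from setting up the Windows environment to running YOLOv8 inference with the ONNX Runtime framework.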

Setting up the VS environment

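Many online tutorials use VS2019, but it can no longer be downloaded directly from the official site; the version offered there is 2022 (Visual Studio Tools - free install for Windows, Mac, Linux (microsoft.com)). I also used 2022 (17.10.3). The workloads I selected during installation are as follows: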

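Download OpenCV (Releases - OpenCV) and install it, which just means extracting the archive. I used version 4.8.0. Add its bin directory, which contains the OpenCV DLLs, to the PATH environment variable.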

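Download ONNX Runtime (Release ONNX Runtime v1.18.0 · microsoft/onnxruntime · GitHub) and install it by extracting the archive. I used version 1.17.1, which is also available from the same releases page. Add its lib directory, which contains onnxruntime.dll, to PATH as well.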

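In VS, create an empty project and add the dependencies in the project properties. There are three places to fill in: Include Directories, Library Directories, and Additional Dependencies (the .lib files under Linker > Input, e.g. onnxruntime.lib and opencv_world480.lib, or opencv_world480d.lib for Debug builds). If you are unsure how, search online; there are plenty of tutorials.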

Code

My code is split into three files: infer.h, infer.cpp and main.cpp. The source is commented in detail, so it should be friendly to beginners.

The code draws on the following sources:

yolov8 ONNX Runtime C++ deployment (CSDN blog)

ultralytics/examples/YOLOv8-ONNXRuntime-CPP/main.cpp at main · ultralytics/ultralytics · GitHub

YOLOv5-Lite/cpp_demo/ort/main.cpp at master · ppogg/YOLOv5-Lite · GitHub

infer.h

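```cpp
#pragma once

#include <cmath>
#include <cstdint>
#include <iostream>
#include <memory>
#include <string>
#include <vector>

#include <opencv2/opencv.hpp>      // external dependency (OpenCV)
#include <onnxruntime_cxx_api.h>   // external dependency (ONNX Runtime)

// One detection after NMS
struct OutputDet {
    int id;            // class index into V8::_className
    float confidence;  // best class score
    cv::Rect box;      // box in original-image pixels
};

struct Net_config
{
    std::string imagepath;       // image path
    const ORTCHAR_T* modelpath;  // model path (const wchar_t* on Windows)
    float confThreshold;         // confidence threshold
    float iouThreshold;          // IoU threshold used by NMS
};

class V8
{
public:
    V8();  // default constructor
    void detect(Net_config config);

    // The 80 COCO class names, in the order of the model's class-score channels
    std::vector<std::string> _className = {
        "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
        "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
        "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
        "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
        "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
        "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
        "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
        "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
        "hair drier", "toothbrush"
    };

private:
    int inpWidth = 0, inpHeight = 0, maxSide = 0;
    int Padwl = 0, Padwr = 0, Padht = 0, Padhd = 0;  // left, right, top, bottom padding
    float ratio = 1.0f;                              // resize ratio applied by letter_
    std::vector<float> input_image_;                 // network input, CHW float

    cv::Mat letter_(cv::Mat& img);
    void normalize_(cv::Mat img);

    // The Env must outlive every Session created from it, so it is a member
    // rather than a local variable inside detect()
    Ort::Env env{ ORT_LOGGING_LEVEL_WARNING, "yolov8" };
    std::unique_ptr<Ort::Session> ort_session;  // released automatically

    // The name strings are owned here; input_names/output_names only point into them
    std::vector<std::string> input_names_str;
    std::vector<std::string> output_names_str;
    std::vector<const char*> input_names;
    std::vector<const char*> output_names;
    std::vector<std::vector<int64_t>> input_node_dims;   // one shape per input
    std::vector<std::vector<int64_t>> output_node_dims;  // one shape per output
};
```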

infer.cpp

```cpp
#include <array>
#include <cmath>
#include <iostream>
#include <string>
#include <vector>

#include <opencv2/opencv.hpp>
#include <onnxruntime_cxx_api.h>

#include "infer.h"  // structs and class declaration

using namespace std;

// Constructor
V8::V8() {
    cout << "infer start" << endl;
}

// Read an image as 8-bit, 3-channel BGR
static cv::Mat get_img(const string& imgpath) {
    return cv::imread(imgpath);
}

// Print the settings. ORTCHAR_T is wchar_t on Windows, and cout << const wchar_t*
// prints a pointer value, so the model path goes through wcout
static void show_cfg(const Net_config& cfg) {
    wcout << L"model path: " << cfg.modelpath << endl;
    cout << "conf Threshold: " << cfg.confThreshold
         << " IoU Threshold: " << cfg.iouThreshold << endl;
}

// BGR uint8 HWC -> RGB float CHW scaled to [0, 1]
void V8::normalize_(cv::Mat img)
{
    const int row = img.rows;
    const int col = img.cols;
    input_image_.resize(static_cast<size_t>(row) * col * 3);
    for (int c = 0; c < 3; c++) {
        for (int i = 0; i < row; i++) {
            const uchar* p = img.ptr<uchar>(i);
            for (int j = 0; j < col; j++) {
                // 2 - c reads the BGR triple backwards, i.e. converts BGR to RGB
                input_image_[c * row * col + i * col + j] = p[j * 3 + 2 - c] / 255.0f;
            }
        }
    }
}

// Letterbox: resize keeping the aspect ratio, then pad to inpWidth x inpHeight.
// One ratio is used for both sides, so a square input (e.g. 640x640) is assumed
cv::Mat V8::letter_(cv::Mat& img)
{
    cv::Mat dstimg;
    maxSide = img.rows > img.cols ? img.rows : img.cols;
    ratio = inpWidth / float(maxSide);
    int fx = int(img.cols * ratio);
    int fy = int(img.rows * ratio);
    // -0.1/+0.1 splits an odd padding into floor/ceil halves that add up exactly
    Padwl = int(round((inpWidth - fx) * 0.5 - 0.1));
    Padwr = int(round((inpWidth - fx) * 0.5 + 0.1));
    Padht = int(round((inpHeight - fy) * 0.5 - 0.1));
    Padhd = int(round((inpHeight - fy) * 0.5 + 0.1));
    cv::resize(img, dstimg, cv::Size(fx, fy));
    cv::copyMakeBorder(dstimg, dstimg, Padht, Padhd, Padwl, Padwr,
                       cv::BORDER_CONSTANT, cv::Scalar::all(127));
    return dstimg;
}

void V8::detect(Net_config config)
{
    show_cfg(config);

    // Session options
    Ort::SessionOptions session_options;
    session_options.SetLogId("MySession");
    session_options.SetIntraOpNumThreads(1);
    session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);

    // Load the model
    ort_session = std::make_unique<Ort::Session>(env, config.modelpath, session_options);

    // Query input/output names and shapes
    input_names_str.clear(); input_node_dims.clear();
    output_names_str.clear(); output_node_dims.clear();
    Ort::AllocatorWithDefaultOptions allocator;
    for (size_t i = 0; i < ort_session->GetInputCount(); i++) {
        // GetInputNameAllocated returns a smart pointer that frees the string at the
        // end of this statement, so copy it; keeping only .get() would dangle
        input_names_str.emplace_back(ort_session->GetInputNameAllocated(i, allocator).get());
        Ort::TypeInfo type_info = ort_session->GetInputTypeInfo(i);
        input_node_dims.push_back(type_info.GetTensorTypeAndShapeInfo().GetShape());
    }
    for (size_t i = 0; i < ort_session->GetOutputCount(); i++) {
        output_names_str.emplace_back(ort_session->GetOutputNameAllocated(i, allocator).get());
        Ort::TypeInfo type_info = ort_session->GetOutputTypeInfo(i);
        output_node_dims.push_back(type_info.GetTensorTypeAndShapeInfo().GetShape());
    }
    // Take c_str() only once the string vectors are complete (growth moves the strings)
    input_names.clear();
    output_names.clear();
    for (const auto& s : input_names_str) input_names.push_back(s.c_str());
    for (const auto& s : output_names_str) output_names.push_back(s.c_str());
    cout << "input_names: " << input_names[0] << endl;    // "images" for yolov8s
    cout << "output_names: " << output_names[0] << endl;  // "output0" for yolov8s

    // Input shape is [1, 3, H, W]; a static shape (no -1 dimensions) is assumed
    inpHeight = static_cast<int>(input_node_dims[0][2]);
    inpWidth = static_cast<int>(input_node_dims[0][3]);
    cout << "inpHeight: " << inpHeight << "  inpWidth: " << inpWidth << endl;

    cv::Mat frame = get_img(config.imagepath);
    if (frame.empty()) {
        cerr << "cannot read image: " << config.imagepath << endl;
        return;
    }
    cv::Mat dstimg = letter_(frame);
    normalize_(dstimg);

    // Wrap input_image_ as a tensor without copying; it must stay alive until Run returns
    array<int64_t, 4> input_shape_{ 1, 3, inpHeight, inpWidth };
    auto allocator_info = Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU);
    Ort::Value input_tensor_ = Ort::Value::CreateTensor<float>(allocator_info,
        input_image_.data(), input_image_.size(), input_shape_.data(), input_shape_.size());

    // Print the input tensor shape
    vector<int64_t> tensor_shape = input_tensor_.GetTensorTypeAndShapeInfo().GetShape();
    cout << "Tensor Shape: [";
    for (size_t i = 0; i < tensor_shape.size(); ++i)
        cout << tensor_shape[i] << (i + 1 < tensor_shape.size() ? ", " : "");
    cout << "]" << endl;

    // Inference, using the names queried from the model above
    vector<Ort::Value> ort_outputs;
    try {
        ort_outputs = ort_session->Run(Ort::RunOptions{ nullptr },
            input_names.data(), &input_tensor_, 1,
            output_names.data(), output_names.size());
    }
    catch (const Ort::Exception& ex) {
        cerr << "ONNX Runtime error: " << ex.what() << endl;
        return;
    }

    // Output shape [1, 84, 8400]: per candidate, 4 box values (cx, cy, w, h) + 80 class scores
    Ort::Value& predictions = ort_outputs.at(0);
    auto out_shape = predictions.GetTensorTypeAndShapeInfo().GetShape();
    cout << "output shape: ";
    for (auto d : out_shape) cout << d << " ";
    cout << "\n";

    // post-process
    const int net_width = static_cast<int>(_className.size()) + 4;  // 84
    float* all_data = predictions.GetTensorMutableData<float>();
    // [84, 8400] -> [8400, 84] so that each row is one candidate box
    cv::Mat output0 = cv::Mat(cv::Size((int)out_shape[2], (int)out_shape[1]), CV_32F, all_data).t();
    const int rows = output0.rows;  // number of candidates (8400)
    const int cols = output0.cols;  // values per candidate (84)
    cout << "Matrix shape: " << rows << " x " << cols << endl;

    float* pdata = (float*)output0.data;
    vector<int> class_ids;
    vector<float> confidences;
    vector<cv::Rect> boxes;
    for (int r = 0; r < rows; ++r) {
        cv::Mat scores(1, (int)_className.size(), CV_32F, pdata + 4);  // the 80 class scores
        cv::Point classIdPoint;
        double max_class_score;
        cv::minMaxLoc(scores, nullptr, &max_class_score, nullptr, &classIdPoint);  // best score, class index
        if (max_class_score > config.confThreshold) {
            confidences.push_back((float)max_class_score);
            class_ids.push_back(classIdPoint.x);
            // first four values: box centre and size in letterboxed input pixels
            float x = pdata[0], y = pdata[1], w = pdata[2], h = pdata[3];
            // remove the padding, then undo the resize, to get original-image pixels
            int left = int((x - Padwl - 0.5f * w) / ratio);
            int top = int((y - Padht - 0.5f * h) / ratio);
            int width = int(w / ratio);
            int height = int(h / ratio);
            boxes.push_back(cv::Rect(left, top, width, height));
        }
        pdata += net_width;  // next candidate, 84 values further on
    }
    cout << "ratio: " << ratio << endl;

    // NMS. NMSBoxes is class-agnostic: overlapping boxes of different classes suppress
    // each other. Recent OpenCV releases also offer cv::dnn::NMSBoxesBatched, which takes class_ids
    vector<int> nms_result;
    cv::dnn::NMSBoxes(boxes, confidences, config.confThreshold, config.iouThreshold, nms_result);
    cout << "detections: " << nms_result.size() << endl;

    // Draw the kept boxes and their labels
    cv::RNG rng(cv::getTickCount());
    for (int idx : nms_result) {
        OutputDet out{ class_ids[idx], confidences[idx], boxes[idx] };
        string label = _className[out.id] + " " + cv::format("%.2f", out.confidence);  // e.g. "person 0.87"
        cv::Scalar color(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));
        cv::rectangle(frame, out.box, color, 3);
        // filled label background just above the box
        cv::rectangle(frame, cv::Point(out.box.x, out.box.y - 25),
                      cv::Point(out.box.x + out.box.width, out.box.y), color, cv::FILLED);
        cv::putText(frame, label, cv::Point(out.box.x, out.box.y - 5),
                    cv::FONT_HERSHEY_SIMPLEX, 0.75, cv::Scalar(0, 0, 0), 2);
    }

    cout << "Press any key to exit" << endl;
    cv::namedWindow("Result of Detection", cv::WINDOW_NORMAL);
    cv::imshow("Result of Detection", frame);
    cv::waitKey(0);
    cv::destroyAllWindows();
}
```
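The letterbox step in letter_ and the box restoration in the post-processing loop are two halves of one coordinate transform. The following dependency-free sketch isolates that arithmetic so it can be checked by hand. It assumes a square network input (640 by default), as letter_ does; the names Letterbox, BoxI, make_letterbox and to_original are hypothetical and not part of the code above.

```cpp
#include <cmath>

// Hypothetical helper types, for illustration only
struct Letterbox {
    float ratio;                               // scale applied to the original image
    int newW, newH;                            // size after resizing, before padding
    int padLeft, padRight, padTop, padBottom;  // border added on each side
};

struct BoxI { int left, top, width, height; };  // same fields as cv::Rect

// Same rules as V8::letter_: scale the longer side to `inp` (square input assumed)
// and split odd padding so the two sides differ by at most one pixel
Letterbox make_letterbox(int imgW, int imgH, int inp = 640) {
    Letterbox lb{};
    const int maxSide = imgW > imgH ? imgW : imgH;
    lb.ratio = inp / float(maxSide);
    lb.newW = int(imgW * lb.ratio);
    lb.newH = int(imgH * lb.ratio);
    lb.padLeft = int(std::round((inp - lb.newW) * 0.5 - 0.1));
    lb.padRight = int(std::round((inp - lb.newW) * 0.5 + 0.1));
    lb.padTop = int(std::round((inp - lb.newH) * 0.5 - 0.1));
    lb.padBottom = int(std::round((inp - lb.newH) * 0.5 + 0.1));
    return lb;
}

// Inverse mapping: a (cx, cy, w, h) box in letterboxed network pixels
// -> top-left corner and size in original-image pixels
BoxI to_original(const Letterbox& lb, float cx, float cy, float w, float h) {
    return BoxI{
        int((cx - lb.padLeft - 0.5f * w) / lb.ratio),
        int((cy - lb.padTop - 0.5f * h) / lb.ratio),
        int(w / lb.ratio),
        int(h / lb.ratio)};
}
```

As a worked example, a 1280x720 image gets ratio 0.5, is resized to 640x360 and padded by 140 px at the top and bottom; a network box centred at (320, 320) with size 100x50 maps back to left 540, top 310, size 200x100, i.e. centred in the original image.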

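cv::dnn::NMSBoxes, as called in detect(), receives only boxes and scores, so it is class-agnostic: a high-scoring box of one class can suppress an overlapping box of another class. If that is not wanted, NMS can be run per class. The following plain C++ sketch shows greedy per-class NMS to illustrate the idea; BoxF, iou and nms_per_class are hypothetical names, not OpenCV functions.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

// Hypothetical box type: top-left corner plus size, like cv::Rect
struct BoxF { float x, y, w, h; };

// Intersection over union of two boxes
float iou(const BoxF& a, const BoxF& b) {
    float x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    float x2 = std::min(a.x + a.w, b.x + b.w), y2 = std::min(a.y + a.h, b.y + b.h);
    float inter = std::max(0.f, x2 - x1) * std::max(0.f, y2 - y1);
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Greedy NMS applied within each class: returns the indices of kept boxes,
// highest score first. Boxes of different classes never suppress each other.
std::vector<int> nms_per_class(const std::vector<BoxF>& boxes,
                               const std::vector<float>& scores,
                               const std::vector<int>& class_ids,
                               float score_thr, float iou_thr) {
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](int a, int b) { return scores[a] > scores[b]; });
    std::vector<int> keep;
    std::vector<bool> removed(boxes.size(), false);
    for (size_t i = 0; i < order.size(); ++i) {
        int a = order[i];
        if (removed[a] || scores[a] < score_thr) continue;
        keep.push_back(a);
        // suppress lower-scoring boxes of the same class that overlap too much
        for (size_t j = i + 1; j < order.size(); ++j) {
            int b = order[j];
            if (!removed[b] && class_ids[b] == class_ids[a] &&
                iou(boxes[a], boxes[b]) > iou_thr)
                removed[b] = true;
        }
    }
    return keep;
}
```

With class-agnostic NMS, two nearly identical boxes labelled person and bicycle would collapse into one; with the per-class version both survive. Recent OpenCV releases provide cv::dnn::NMSBoxesBatched, which takes class_ids as an extra argument, for the same purpose.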
main.cpp

```cpp
#include "infer.h"

#include <filesystem>
#include <iostream>
#include <string>
#include <vector>

using namespace std;
namespace fs = std::filesystem;

fs::path modelpath;  // set by listFiles when a .onnx file is found

// Directory of this source file. __FILE__ is the path the compiler was given
// (normally absolute in a VS project), so the images and the .onnx model are
// expected to sit next to main.cpp
fs::path getparentpath() {
    return fs::path(__FILE__).parent_path();
}

// Collect all .jpg files in `path` and remember the .onnx file found there
vector<string> listFiles(const fs::path& path) {
    vector<string> imgpath;
    try {
        // iterate over every file and directory in the given path
        for (const auto& entry : fs::directory_iterator(path)) {
            const fs::path ext = entry.path().extension();
            if (ext == ".jpg") {
                imgpath.push_back(entry.path().string());
            }
            else if (ext == ".onnx") {
                modelpath = entry.path();
            }
        }
    }
    catch (const fs::filesystem_error& e) {
        cerr << "Error accessing directory: " << e.what() << endl;
    }
    cout << modelpath.string() << endl;
    return imgpath;
}

int main() {
    vector<string> imgpath = listFiles(getparentpath());
    if (modelpath.empty()) {
        cerr << "no .onnx model found" << endl;
        return 1;
    }
    // fs::path stores wchar_t on Windows, so c_str() already is the const wchar_t*
    // (ORTCHAR_T) that Ort::Session expects; no MultiByteToWideChar or new[] needed.
    // Alternatively hard-code it: const wchar_t* model_Path = L"D:\\yolov8s.onnx";
    for (const string& path : imgpath) {
        Net_config cfg = { path, modelpath.c_str(), 0.5f, 0.5f };
        V8 model;
        model.detect(cfg);
    }
    return 0;
}
```

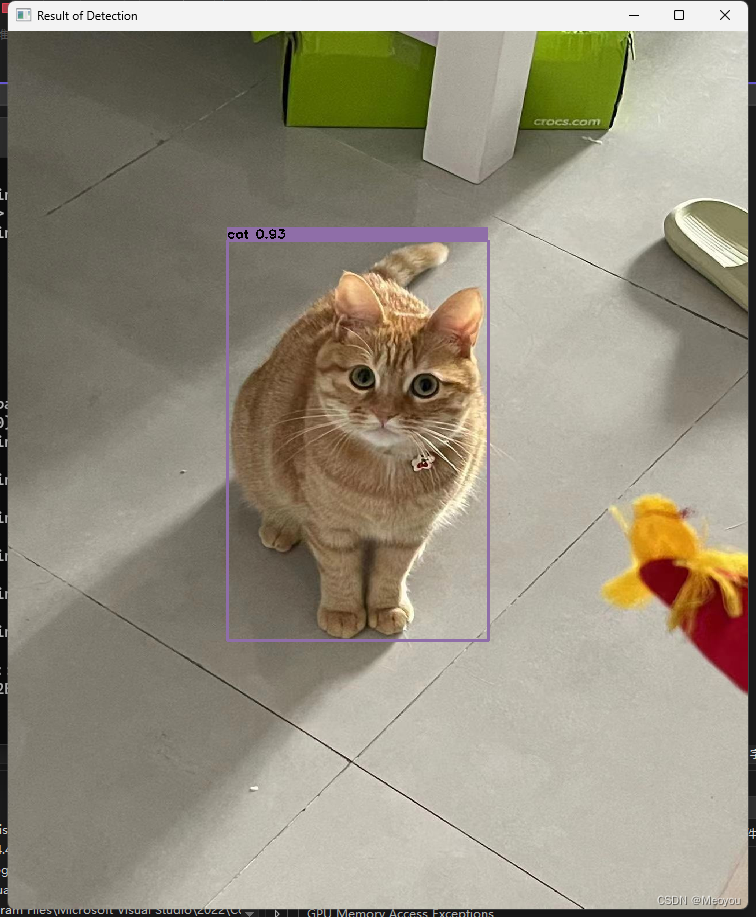

Inference result

Inference time has not been measured yet.
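One way to measure it is with std::chrono. The following is a minimal sketch; time_ms is a hypothetical helper, not part of the code above. It runs a callable a few times untimed first, because the first inference call is often slower than later ones, and then averages the wall-clock time of the remaining calls.

```cpp
#include <chrono>
#include <functional>

// Hypothetical helper: call `fn` `warmup` times untimed, then `iters` times timed,
// and return the average wall-clock time per timed call in milliseconds
double time_ms(const std::function<void()>& fn, int iters = 10, int warmup = 1) {
    for (int i = 0; i < warmup; ++i) fn();  // warm-up calls, not measured
    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iters; ++i) fn();
    auto end = std::chrono::steady_clock::now();
    std::chrono::duration<double, std::milli> elapsed = end - start;
    return iters > 0 ? elapsed.count() / iters : 0.0;
}
```

Inside detect(), the lambda passed to time_ms would wrap the ort_session->Run(...) call with the same arguments used there. Timing only Run separates the model cost from image loading, letterboxing and drawing.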

Notes

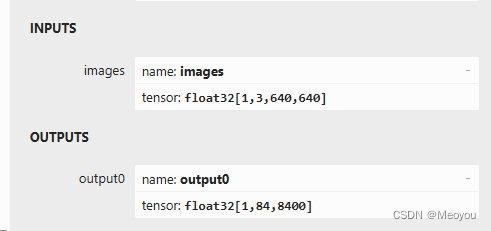

The model is the ONNX export of yolov8s. Its input and output information is as follows:
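For this yolov8s export the code above works with an output of shape [1, 84, 8400]: 84 = 4 box values (cx, cy, w, h) + 80 class scores, and 8400 = 80² + 40² + 20² candidates from the stride-8, 16 and 32 grids of a 640x640 input. Because the attribute axis comes first, attribute a of candidate j sits at index a * 8400 + j. The code above transposes the output with cv::Mat::t() before reading it row by row; the following sketch instead decodes the raw buffer in place. Candidate and decode_yolov8 are hypothetical names, and batch size 1 is assumed.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical result of decoding one candidate
struct Candidate {
    int class_id;
    float score;
    float cx, cy, w, h;  // in letterboxed network-input pixels
};

// Decode a YOLOv8 output laid out as [4 + num_classes, num_anchors] (batch 1),
// keeping candidates whose best class score exceeds conf_thr
std::vector<Candidate> decode_yolov8(const float* data, int num_classes,
                                     int num_anchors, float conf_thr) {
    std::vector<Candidate> out;
    // attribute `attr` of candidate `j`
    auto at = [&](int attr, int j) {
        return data[static_cast<std::size_t>(attr) * num_anchors + j];
    };
    for (int j = 0; j < num_anchors; ++j) {
        int best = 0;
        float best_score = at(4, j);
        for (int c = 1; c < num_classes; ++c) {
            float s = at(4 + c, j);
            if (s > best_score) { best_score = s; best = c; }
        }
        if (best_score > conf_thr)
            out.push_back({best, best_score, at(0, j), at(1, j), at(2, j), at(3, j)});
    }
    return out;
}
```

For the model here the call would be decode_yolov8(all_data, 80, 8400, confThreshold). Skipping the transpose avoids one full copy of the 84 x 8400 buffer, at the cost of strided reads.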

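On Windows 11, running the program directly from the debugger can fail with "Exception thrown: read access violation. Ort::GetApi(...) returned nullptr." This is a DLL search-priority problem: Windows can load a different onnxruntime.dll (for example one shipped in System32) before the one on your PATH. A common workaround is to copy your onnxruntime.dll next to the executable; for details, see the CSDN post titled after this error message ("Exception thrown: read access violation. Ort::GetApi(...) returned nullptr").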

Update (2024.06.27)

Modified main.cpp so that it automatically picks up all images in the same folder as main.cpp; the .onnx model goes in that folder as well.

The download for the ONNX file is pinned at the top of the post.
