Introduction

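I have recently been learning to deploy YOLO with C++. If you have no GPU or NPU at hand, speeding up inference on the CPU matters all the more. Earlier I implemented YOLOv8 inference on Windows with the ONNX Runtime framework; if you are interested, see my earlier CSDN post on C++ onnxruntime YOLOv8 inference on Windows (onnxruntime 1.18). This post focuses on NCNN.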

Model preparation

Download yolov8n (yolov8n.pt) from GitHub.

To convert the .pt model to NCNN, use the official Ultralytics export script:

```python
from ultralytics import YOLO

model = YOLO('yolov8n.pt')
model.export(format='ncnn')
```

Raspberry Pi environment setup

For the setup, you can refer to the following:

Full code

Header file (yoloV8.h)

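```cpp
#pragma once

#ifndef YOLOV8_H
#define YOLOV8_H

#include <vector>

#include <opencv2/core/core.hpp>
#include <ncnn/net.h>

// Runtime configuration
struct Config
{
    int imgsize;            // model input size (640 for the exported yolov8n)
    float IoU;              // NMS IoU threshold
    float confidence;       // confidence threshold
    const char* para_path;  // path to model.ncnn.param
    const char* bin_path;   // path to model.ncnn.bin
};

// One detection result
struct Object
{
    cv::Rect_<float> rect;  // box in original-image coordinates
    int label;              // index into class_names
    float prob;             // class confidence
};

class YoloV8
{
public:
    YoloV8(Config cfg);
    void load(Config cfg);
    void detect(const cv::Mat& bgr, std::vector<Object>& objects, Config cfg);
    void draw(cv::Mat& bgr, const std::vector<Object>& objects);

private:
    ncnn::Net model;
    float scale;                     // original size / resized size
    int Padwl, Padwr, Padht, Padhd;  // letterbox padding: left, right, top, bottom
    int num_thread;
    float norm_vals[3];
};

#endif // YOLOV8_H
```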
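The header keeps the letterbox state in `scale` and the four padding members. To illustrate that arithmetic, here is a standard-library-only sketch that mirrors the resize-and-pad logic of `YoloV8::detect` and maps a predicted box back to original-image coordinates. `Letterbox`, `make_letterbox` and `unletterbox` are hypothetical names introduced only for this sketch, and it assumes a square `imgsize × imgsize` model input as used in this post.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Letterbox geometry for a square model input (e.g. 640x640): the longer
// image side is scaled to imgsize and the shorter side is padded.
struct Letterbox {
    float scale;                                   // original size / resized size
    int w, h;                                      // resized size before padding
    int pad_left, pad_right, pad_top, pad_bottom;  // padding in pixels
};

Letterbox make_letterbox(int width, int height, int imgsize) {
    assert(width > 0 && height > 0 && imgsize > 0);
    Letterbox lb{};
    lb.scale = static_cast<float>(std::max(width, height)) / imgsize;
    if (width > height) {
        lb.w = imgsize;
        lb.h = static_cast<int>(height / lb.scale);
    } else {
        lb.h = imgsize;
        lb.w = static_cast<int>(width / lb.scale);
    }
    // Same rounding as YoloV8::detect: an odd extra pixel goes to the right/bottom
    lb.pad_left   = static_cast<int>(std::round((imgsize - lb.w) * 0.5 - 0.1));
    lb.pad_right  = static_cast<int>(std::round((imgsize - lb.w) * 0.5 + 0.1));
    lb.pad_top    = static_cast<int>(std::round((imgsize - lb.h) * 0.5 - 0.1));
    lb.pad_bottom = static_cast<int>(std::round((imgsize - lb.h) * 0.5 + 0.1));
    return lb;
}

// Map a box (cx, cy, bw, bh) given in padded-input pixels back to the
// original image; writes (left, top, width, height) into out.
void unletterbox(const Letterbox& lb, float cx, float cy, float bw, float bh,
                 float out[4]) {
    out[0] = (cx - lb.pad_left - 0.5f * bw) * lb.scale;  // remove padding, then undo resize
    out[1] = (cy - lb.pad_top - 0.5f * bh) * lb.scale;
    out[2] = bw * lb.scale;
    out[3] = bh * lb.scale;
}
```

For a 1280×720 frame and `imgsize = 640`, this gives `scale = 2`, a 640×360 resized image and 140 pixels of padding at the top and bottom, so a box centred at (320, 320) in the model input ends up centred at (640, 360) in the frame.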

Source file

```cpp
#include "yoloV8.h"

#include <ncnn/net.h>
#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>   // cv::dnn::NMSBoxes

#include <algorithm>
#include <cmath>
#include <cstdio>
#include <iostream>
#include <string>
#include <thread>
#include <vector>

using namespace std;

// The 80 COCO class names, in the order used by the official YOLOv8 models
const char* class_names[] = {
    "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
    "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
    "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
    "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
    "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
    "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
    "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
    "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
    "hair drier", "toothbrush"
};

YoloV8::YoloV8(Config cfg)
{
    cout << "ncnn infer start!" << endl;

    // Use all hardware threads; hardware_concurrency() may return 0 if unknown
    this->num_thread = std::max(1u, std::thread::hardware_concurrency());

    // Scale pixel values from [0, 255] to [0, 1]
    norm_vals[0] = 1.0f / 255.0f;
    norm_vals[1] = 1.0f / 255.0f;
    norm_vals[2] = 1.0f / 255.0f;

    cout << "number threads " << num_thread << endl;
    cout << "IoU threshold " << cfg.IoU << endl;
    cout << "conf threshold " << cfg.confidence << endl;
    cout << "param path " << cfg.para_path << endl;  // print the string, not the pointer's address
    cout << "bin path " << cfg.bin_path << endl;
}

void YoloV8::load(Config cfg)
{
    model.clear();
    model.opt = ncnn::Option();
    model.opt.num_threads = this->num_thread;

    // load_param / load_model return 0 on success
    if (model.load_param(cfg.para_path) != 0)
        cerr << "failed to load " << cfg.para_path << endl;
    if (model.load_model(cfg.bin_path) != 0)
        cerr << "failed to load " << cfg.bin_path << endl;
}

void YoloV8::detect(const cv::Mat& bgr, std::vector<Object>& objects, Config cfg)
{
    int width = bgr.cols;
    int height = bgr.rows;

    // Letterbox: scale the longer side to cfg.imgsize, keeping the aspect ratio
    int max_length = std::max(width, height);
    this->scale = (float)max_length / cfg.imgsize;  // original / resized
    int w, h;
    if (width > height)
    {
        w = cfg.imgsize;
        h = (int)(height / scale);
    }
    else
    {
        h = cfg.imgsize;
        w = (int)(width / scale);
    }

    // OpenCV frames are BGR; the model expects RGB, so swap channels while resizing
    ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, width, height, w, h);

    // Pad to a cfg.imgsize x cfg.imgsize square (640x640), split between both sides
    this->Padwl = int(std::round((cfg.imgsize - w) * 0.5 - 0.1));
    this->Padwr = int(std::round((cfg.imgsize - w) * 0.5 + 0.1));
    this->Padht = int(std::round((cfg.imgsize - h) * 0.5 - 0.1));
    this->Padhd = int(std::round((cfg.imgsize - h) * 0.5 + 0.1));
    ncnn::Mat in_pad;
    ncnn::copy_make_border(in, in_pad, Padht, Padhd, Padwl, Padwr, ncnn::BORDER_CONSTANT, 0.f);
    in_pad.substract_mean_normalize(0, norm_vals);  // no mean subtraction, only scale by 1/255

    ncnn::Extractor ex = model.create_extractor();
    ex.input("in0", in_pad);   // input blob name in the Ultralytics ncnn export
    ncnn::Mat out;
    ex.extract("out0", out);   // output blob: h = 84 (4 box values + 80 classes), w = 8400

    // Post-process
    const int cls_num = sizeof(class_names) / sizeof(class_names[0]);

    // Transpose [84, 8400] -> [8400, 84] so that each row is one candidate box
    cv::Mat output = cv::Mat(out.h, out.w, CV_32F, (float*)out.data).t();
    const int rows = output.rows;  // number of candidate boxes (8400)
    const int cols = output.cols;  // 4 + cls_num (84)
    float* pdata = (float*)output.data;

    vector<int> class_ids;
    vector<float> confidences;
    vector<cv::Rect> boxes;

    for (int r = 0; r < rows; ++r)
    {
        // The class scores follow the 4 box values
        cv::Mat scores(1, cls_num, CV_32F, pdata + 4);
        cv::Point classIdPoint;
        double max_class_score;
        cv::minMaxLoc(scores, 0, &max_class_score, 0, &classIdPoint);  // best score and its class index

        if (max_class_score > cfg.confidence)
        {
            confidences.push_back((float)max_class_score);
            class_ids.push_back(classIdPoint.x);

            // Box is (cx, cy, bw, bh) in the padded input
            float cx = pdata[0];
            float cy = pdata[1];
            float bw = pdata[2];
            float bh = pdata[3];

            // Undo the padding, then the resize, to get original-image coordinates
            int left = int((cx - this->Padwl - 0.5f * bw) * scale);
            int top = int((cy - this->Padht - 0.5f * bh) * scale);
            int box_w = int(bw * scale);
            int box_h = int(bh * scale);
            boxes.push_back(cv::Rect(left, top, box_w, box_h));
        }
        pdata += cols;  // next candidate box (84 floats per row)
    }

    // NMS over all candidates; note that cv::dnn::NMSBoxes is class-agnostic
    vector<int> nms_result;
    cv::dnn::NMSBoxes(boxes, confidences, cfg.confidence, cfg.IoU, nms_result);

    for (int idx : nms_result)
    {
        Object result;
        result.label = class_ids[idx];
        result.prob = confidences[idx];
        result.rect = boxes[idx];
        objects.push_back(result);
    }
}

void YoloV8::draw(cv::Mat& bgr, const std::vector<Object>& objects)
{
    for (size_t i = 0; i < objects.size(); i++)
    {
        const Object& obj = objects[i];

        cv::rectangle(bgr, obj.rect, cv::Scalar(255, 0, 0));  // blue box (BGR order)

        char text[256];
        snprintf(text, sizeof(text), "%s %.1f%%", class_names[obj.label], obj.prob * 100);

        int baseLine = 0;
        cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

        // Place the label above the box and keep it inside the image
        int x = obj.rect.x;
        int y = obj.rect.y - label_size.height - baseLine;
        if (y < 0)
            y = 0;
        if (x + label_size.width > bgr.cols)
            x = bgr.cols - label_size.width;
        if (x < 0)
            x = 0;

        cv::rectangle(bgr, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),
                      cv::Scalar(255, 255, 255), -1);
        cv::putText(bgr, text, cv::Point(x, y + label_size.height),
                    cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
    }
}
```
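`cv::dnn::NMSBoxes`, as called in `detect`, ignores class labels, so a high-scoring box of one class can suppress an overlapping box of a different class. If per-class suppression is preferred, one option is a plain greedy NMS that only compares boxes sharing a label. The sketch below uses only the standard library; `Det`, `iou` and `nms_per_class` are hypothetical names, not part of OpenCV or of the code above.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical minimal box type for this sketch
struct Det {
    float x, y, w, h;  // left, top, width, height
    int label;         // class index
    float score;       // confidence
};

// Intersection over union of two axis-aligned boxes
static float iou(const Det& a, const Det& b) {
    float x1 = std::max(a.x, b.x);
    float y1 = std::max(a.y, b.y);
    float x2 = std::min(a.x + a.w, b.x + b.w);
    float y2 = std::min(a.y + a.h, b.y + b.h);
    float inter = std::max(0.f, x2 - x1) * std::max(0.f, y2 - y1);
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Greedy NMS that only suppresses boxes of the same class.
// Returns indices into dets of the kept boxes, highest score first.
std::vector<int> nms_per_class(const std::vector<Det>& dets, float iou_thresh) {
    assert(iou_thresh >= 0.f && iou_thresh <= 1.f);
    std::vector<int> order(dets.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](int a, int b) { return dets[a].score > dets[b].score; });

    std::vector<int> keep;
    std::vector<bool> removed(dets.size(), false);
    for (size_t i = 0; i < order.size(); ++i) {
        int a = order[i];
        if (removed[a]) continue;
        keep.push_back(a);
        // Suppress lower-scoring boxes of the same class that overlap too much
        for (size_t j = i + 1; j < order.size(); ++j) {
            int b = order[j];
            if (!removed[b] && dets[b].label == dets[a].label &&
                iou(dets[a], dets[b]) > iou_thresh)
                removed[b] = true;
        }
    }
    return keep;
}
```

To use it in `detect`, you would build one `Det` per entry of `boxes`, `class_ids` and `confidences`, and read the kept indices from `nms_per_class` instead of `nms_result`. The greedy loop is quadratic in the number of boxes, but only boxes that already passed the confidence threshold reach it.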

main.cpp (by default, the model files are expected in the yolov8n_ncnn_model folder)

```cpp
#include "yoloV8.h"

#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>

#include <chrono>
#include <cmath>
#include <cstdio>
#include <iostream>
#include <vector>

using namespace std;

// 0 : Image
// 1 : Camera / video
#define USE_CAMERA 1

#if USE_CAMERA
int main(int argc, char** argv)
{
    Config cfg = { 640, 0.5, 0.5, "./yolov8n_ncnn_model/model.ncnn.param", "./yolov8n_ncnn_model/model.ncnn.bin" };
    YoloV8 v8ncnn(cfg);
    v8ncnn.load(cfg);

    cv::VideoCapture capture;
    // No argument: open the default camera (index 0); otherwise open the given video file
    if (argc < 2)
        capture.open(0);
    else
        capture.open(argv[1]);
    if (!capture.isOpened())
    {
        fprintf(stderr, "failed to open video source\n");
        return -1;
    }

    cv::Mat frame;
    char text[32];  // large enough for e.g. "FPS: 123.45"
    cv::namedWindow("yolov8_ncnn", cv::WINDOW_NORMAL);

    while (true)
    {
        capture >> frame;
        if (frame.empty())
            break;

        std::vector<Object> objects;

        auto start = std::chrono::steady_clock::now();
        v8ncnn.detect(frame, objects, cfg);  // recognize the objects
        v8ncnn.draw(frame, objects);         // draw the results
        auto end = std::chrono::steady_clock::now();

        // Floating-point milliseconds, so a sub-millisecond frame does not truncate to 0
        double ms = std::chrono::duration<double, std::milli>(end - start).count();
        double fps = ms > 0 ? 1000.0 / ms : 0.0;
        snprintf(text, sizeof(text), "FPS: %.2f", fps);
        cv::putText(frame, text, cv::Point(30, 50), cv::FONT_HERSHEY_PLAIN, 3, cv::Scalar(245, 45, 226), 3, 8);

        cv::imshow("yolov8_ncnn", frame);
        if (cv::waitKey(25) >= 0)
            break;
    }

    capture.release();
    return 0;
}

#else
int main(int argc, char** argv)
{
    Config cfg = { 640, 0.5, 0.5, "./yolov8n_ncnn_model/model.ncnn.param", "./yolov8n_ncnn_model/model.ncnn.bin" };
    if (argc != 2)
    {
        fprintf(stderr, "Usage: %s [imagepath]\n", argv[0]);
        return -1;
    }
    YoloV8 v8ncnn(cfg);

    const char* imagepath = argv[1];
    cv::Mat img = cv::imread(imagepath, cv::IMREAD_COLOR);
    if (img.empty())
    {
        fprintf(stderr, "cv::imread %s failed\n", imagepath);
        return -1;
    }

    std::vector<Object> objects;
    v8ncnn.load(cfg);

    auto start = std::chrono::steady_clock::now();
    v8ncnn.detect(img, objects, cfg);  // recognize the objects
    v8ncnn.draw(img, objects);         // draw the results
    auto end = std::chrono::steady_clock::now();

    double ms = std::chrono::duration<double, std::milli>(end - start).count();
    char text[32];
    snprintf(text, sizeof(text), "%.2f fps", ms > 0 ? 1000.0 / ms : 0.0);
    cv::putText(img, text, cv::Point(30, 50), cv::FONT_HERSHEY_PLAIN, 3, cv::Scalar(245, 45, 226), 3, 8);
    cout << "fps " << text << endl;

    cv::namedWindow("yolov8_ncnn", cv::WINDOW_NORMAL);
    cv::imshow("yolov8_ncnn", img);
    cv::waitKey(0);
    return 0;
}
#endif
```
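If you prefer POSIX `clock_gettime` to `std::chrono` for timing, the `tv_sec` and `tv_nsec` fields must each be converted with their own unit before they are added, and the FPS text is safer to format with `snprintf` into a buffer that can hold values of 10 fps and above. A minimal sketch, assuming a POSIX system such as Raspberry Pi OS (`clock_gettime` is not available with MSVC on Windows); `elapsed_ms` and `fps_label` are hypothetical helper names:

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#include <time.h>

// Elapsed time in milliseconds between two timespec values (e.g. from
// clock_gettime): seconds and nanoseconds are converted separately.
double elapsed_ms(const timespec& begin, const timespec& end) {
    return (end.tv_sec - begin.tv_sec) * 1000.0 +
           (end.tv_nsec - begin.tv_nsec) / 1.0e6;
}

// Format an FPS label; snprintf never writes past the buffer.
std::string fps_label(double ms) {
    assert(ms >= 0.0);
    char text[32];
    std::snprintf(text, sizeof(text), "%.2f fps", ms > 0.0 ? 1000.0 / ms : 0.0);
    return text;
}
```

In the image branch you would call `clock_gettime(CLOCK_MONOTONIC, &begin)` before `detect` and `clock_gettime(CLOCK_MONOTONIC, &end)` after `draw`, then pass `elapsed_ms(begin, end)` to `fps_label`.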

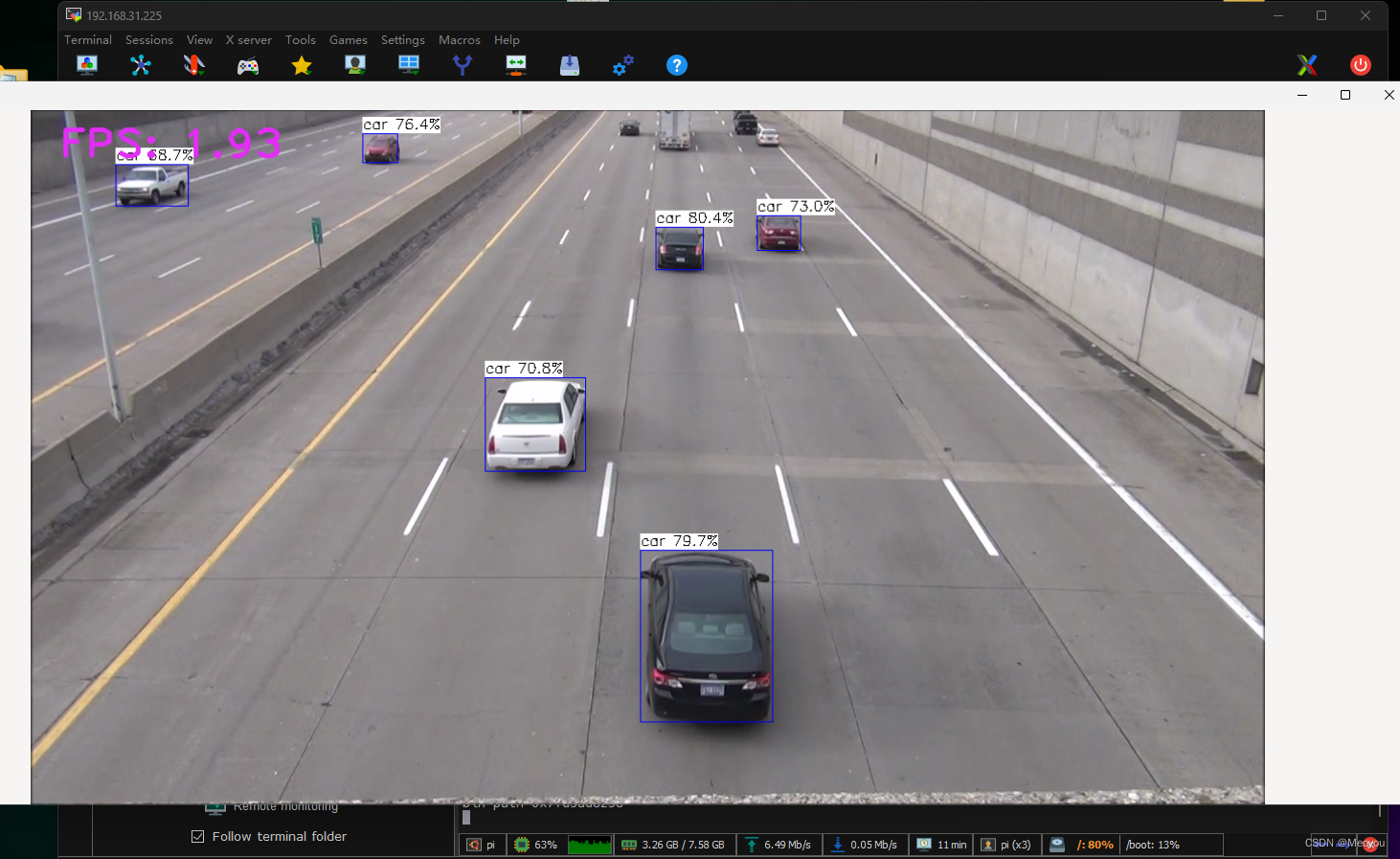

Test results

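I tested the code above on a Raspberry Pi 4B with a 1280×720 video and got roughly 2 fps. I suspect two causes for the low speed. First, model precision: the NCNN model exported by YOLO is probably fp16. Second, the model input is fixed at 640; I have not yet checked whether the converted NCNN model supports other input sizes, but a smaller input should give some speedup.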
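The 8400 rows in the model output come from YOLOv8's three detection scales: one candidate per grid cell at strides 8, 16 and 32. The short sketch below (the function name is hypothetical) does that arithmetic for other input sizes, which gives a rough idea of how much post-processing work a smaller input removes. It only counts outputs; it says nothing about whether the exported NCNN model actually accepts a different input size.

```cpp
#include <cassert>

// Number of candidate boxes YOLOv8 outputs for a w x h input:
// one per grid cell on each detection scale (strides 8, 16, 32).
int yolov8_num_candidates(int w, int h) {
    assert(w % 32 == 0 && h % 32 == 0);  // sides must be multiples of the largest stride
    const int strides[] = {8, 16, 32};
    int total = 0;
    for (int s : strides)
        total += (w / s) * (h / s);
    return total;
}
```

For 640×640 this gives 80² + 40² + 20² = 8400; for 320×320 it gives 40² + 20² + 10² = 2100, a quarter of the candidates and a quarter of the input pixels.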

If you need more speed, feel free to try further optimizations yourself.

I also tested on Windows with a 544×960 video and got 6 to 7 fps on an Intel i3-10100 CPU @ 3.60GHz (a low-end chip).
