This post is a study-log blog written for the [365-Day Deep Learning Training Camp](https://mp.weixin.qq.com/s/0dvHCa0oFnW8SCp3JpzKxg).

Original author: [K同学啊](https://mtyjkh.blog.csdn.net/)

I. Preparation

1. Set up the GPU

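import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    # Allocate GPU memory on demand instead of reserving all of it up front
    tf.config.experimental.set_memory_growth(gpus[0], True)
    # Make only the first GPU visible to TensorFlow
    tf.config.set_visible_devices(gpus[0], "GPU")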

2. Import the data

import matplotlib.pyplot as plt
plt.rcParams['axes.unicode_minus'] = False  # display minus signs correctly in plots

import os, PIL, pathlib
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers, models

data_dir = './bird_photos'
data_dir = pathlib.Path(data_dir)

# Each image lives in a per-class subfolder: bird_photos/<class>/<image>
image_count = len(list(data_dir.glob('*/*')))
print("The number of images: ", image_count)

II. Data Processing

1. Load the data

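Use the image_dataset_from_directory method to load the images on disk into a tf.data.Dataset. Each subfolder of bird_photos is treated as one class, and the subfolder names become the class names.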

batch_size = 8
img_height = 224
img_width = 224

# 80% of the images for training; both calls use the same seed so the subsets do not overlap
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size
)

# The remaining 20% for validation
val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size
)
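As a rough sanity check on the split above: as far as I understand the Keras implementation, validation_split=0.2 keeps int(0.2 * N) files for the validation subset and the rest for training, and each subset is then cut into batches of batch_size, with a smaller final batch if needed. The sketch below only reproduces that arithmetic under this assumption; split_sizes is a hypothetical helper, and the 1000 in the usage line is a placeholder, not the real size of the bird dataset.

```python
import math


def split_sizes(image_count, validation_split=0.2, batch_size=8):
    """Hypothetical helper mirroring the train/validation split arithmetic.

    Assumption: the validation subset gets int(validation_split * image_count)
    files and the training subset gets the remaining files.
    """
    num_val = int(validation_split * image_count)
    num_train = image_count - num_val
    # A partially filled final batch still counts as one batch.
    train_batches = math.ceil(num_train / batch_size)
    val_batches = math.ceil(num_val / batch_size)
    return num_train, num_val, train_batches, val_batches


# Placeholder count for illustration; substitute the printed image_count.
print(split_sizes(1000))  # (800, 200, 100, 25)
```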

class_names = train_ds.class_names
print(class_names)

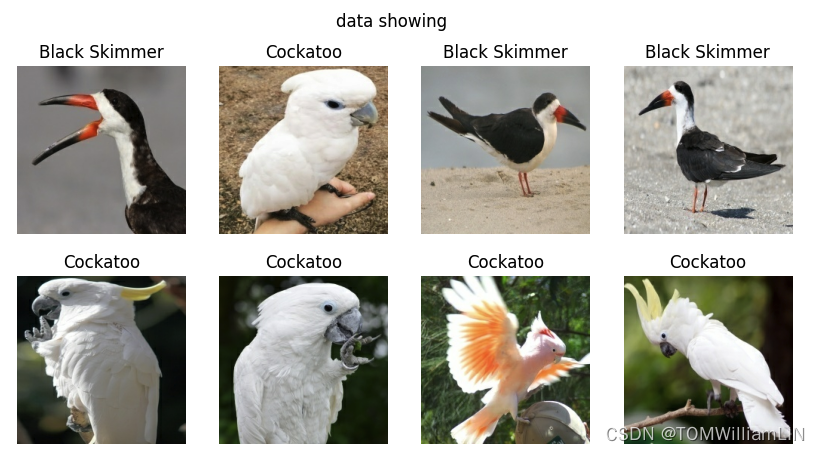

2. Visualize the data

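plt.figure(figsize=(10, 5))
plt.suptitle("data showing")

# Show the first 8 images of one training batch, titled with their class names
for images, labels in train_ds.take(1):
    for i in range(8):
        ax = plt.subplot(2, 4, i + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(class_names[labels[i]])
        plt.axis("off")

# Show a single image on a separate figure so it does not overwrite the last subplot
plt.figure()
plt.imshow(images[1].numpy().astype("uint8"))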

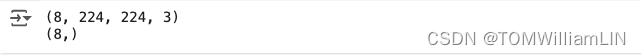

3. Check the data again

for image_batch, labels_batch in train_ds:
    print(image_batch.shape)   # (batch_size, img_height, img_width, 3)
    print(labels_batch.shape)  # (batch_size,)
    break

4. Configure the dataset

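AUTOTUNE = tf.data.AUTOTUNE

# cache(): keep the decoded images in memory after the first epoch
# shuffle(1000): shuffle the training data using a 1000-element buffer
# prefetch(): prepare the next batches while the model trains on the current one
train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)
val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

III. Introduction to Residual Networks (ResNet)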

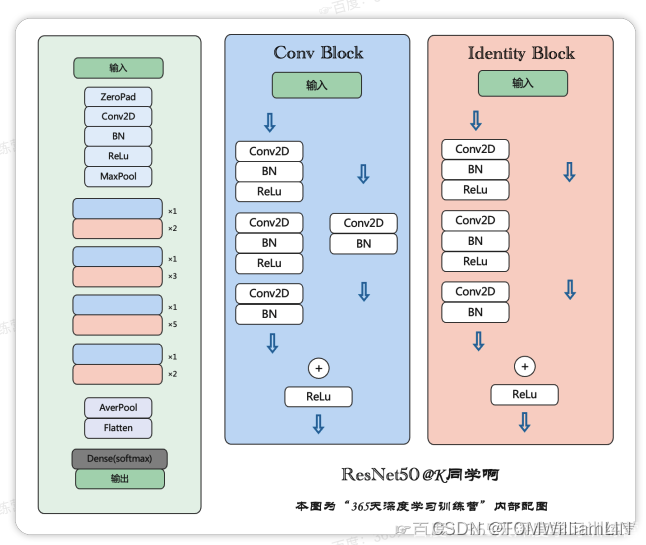

1. What problem do residual networks solve?

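Residual networks were designed to solve the degradation problem that appears when a neural network has too many hidden layers. Degradation means that as more layers are stacked, the network's accuracy first saturates and then drops sharply, and this drop is not caused by overfitting: the error on the training set rises as well.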
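The core idea can be shown with a toy: a residual unit computes y = relu(F(x) + x), so the stacked layers only have to learn the residual F(x). If the extra layers learn nothing useful (F(x) = 0), the unit simply passes its input through, whereas a plain unit with the same weights destroys the signal. In this minimal sketch a single weight matrix stands in for a whole convolution stack; plain_unit and residual_unit are hypothetical names used for illustration only.

```python
import numpy as np


def plain_unit(x, w):
    """Toy plain layer: y = relu(W x), no bias."""
    return np.maximum(w @ x, 0.0)


def residual_unit(x, w):
    """Toy residual layer: y = relu(W x + x); W x plays the role of F(x)."""
    return np.maximum(w @ x + x, 0.0)


rng = np.random.default_rng(0)
x = np.abs(rng.normal(size=4))  # non-negative, like the output of a previous ReLU
w_zero = np.zeros((4, 4))       # layers that have "learned nothing"

print(plain_unit(x, w_zero))     # all zeros: the input signal is lost
print(residual_unit(x, w_zero))  # equal to x: the unit falls back to the identity
```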

2. Introduction to ResNet-50

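ResNet-50 is built from two basic blocks, named Conv Block and Identity Block. In the Conv Block the shortcut passes through a 1×1 convolution followed by BatchNormalization, so the block can change the number of channels and, with a stride of 2, halve the spatial size. In the Identity Block the shortcut is the input itself, so the block's input and output shapes must be identical.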
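The difference between the two shortcuts comes down to shapes. A 1×1 convolution with stride s is just "keep every s-th pixel, then apply the same C_in → C_out linear map at each pixel", which the NumPy sketch below implements directly (bias and BatchNormalization are omitted; conv1x1 is a hypothetical helper, and the random arrays stand in for real feature maps and weights). The 55×55×256 input matches the output of stage 2 in this model.

```python
import numpy as np


def conv1x1(x, w, stride=1):
    """Hypothetical 1x1 convolution on an (H, W, C_in) array, no bias.

    Sample every `stride`-th pixel, then apply the C_in -> C_out matrix w
    independently at each remaining position.
    """
    x = x[::stride, ::stride, :]
    return np.einsum("hwc,cd->hwd", x, w)


rng = np.random.default_rng(0)
x = rng.normal(size=(55, 55, 256))  # stand-in for the stage-2 output

# Identity Block: the shortcut is x itself, so the main path must also output (55, 55, 256).
identity_shortcut = x

# Conv Block: a strided 1x1 conv changes both the spatial size and the channel count.
w_proj = rng.normal(size=(256, 512))
projection_shortcut = conv1x1(x, w_proj, stride=2)

print(identity_shortcut.shape)    # (55, 55, 256)
print(projection_shortcut.shape)  # (28, 28, 512)
```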

IV. Building the ResNet-50 Model

# Import from tf.keras, consistent with the data-loading code
from tensorflow.keras import layers
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Activation, BatchNormalization, Flatten
from tensorflow.keras.layers import Dense, Conv2D, MaxPooling2D, ZeroPadding2D, AveragePooling2D

def identity_block(input_tensor, kernel_size, filters, stage, block):
    """Residual block whose shortcut is the input itself (input channels must equal filters3)."""
    filters1, filters2, filters3 = filters
    name_base = str(stage) + block + '_identity_block_block_'

    # Main path: 1x1 (reduce channels) -> kernel_size x kernel_size -> 1x1 (restore channels)
    x = Conv2D(filters1, (1, 1), name=name_base + 'conv1')(input_tensor)
    x = BatchNormalization(name=name_base + 'bn1')(x)
    x = Activation('relu', name=name_base + 'relu1')(x)

    x = Conv2D(filters2, kernel_size, padding='same', name=name_base + 'conv2')(x)
    x = BatchNormalization(name=name_base + 'bn2')(x)
    x = Activation('relu', name=name_base + 'relu2')(x)

    x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)
    x = BatchNormalization(name=name_base + 'bn3')(x)

    # Shortcut: add the unchanged input, then apply ReLU
    x = layers.add([x, input_tensor], name=name_base + 'add')
    x = Activation('relu', name=name_base + 'relu4')(x)
    return x

def conv_block(input_tensor, kernel_size, filters, stage, block, strides=(2, 2)):
    """Residual block whose shortcut is a strided 1x1 convolution,
    so it can change the number of channels and the spatial size."""
    filters1, filters2, filters3 = filters
    res_name_base = str(stage) + block + '_conv_block_res_'
    name_base = str(stage) + block + '_conv_block_'

    # Main path: the first 1x1 conv carries the stride (downsampling)
    x = Conv2D(filters1, (1, 1), strides=strides, name=name_base + 'conv1')(input_tensor)
    x = BatchNormalization(name=name_base + 'bn1')(x)
    x = Activation('relu', name=name_base + 'relu1')(x)

    x = Conv2D(filters2, kernel_size, padding='same', name=name_base + 'conv2')(x)
    x = BatchNormalization(name=name_base + 'bn2')(x)
    x = Activation('relu', name=name_base + 'relu2')(x)

    x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)
    x = BatchNormalization(name=name_base + 'bn3')(x)

    # Shortcut: 1x1 conv + BN projects the input to the main path's output shape
    shortcut = Conv2D(filters3, (1, 1), strides=strides, name=res_name_base + 'conv')(input_tensor)
    shortcut = BatchNormalization(name=name_base + 'bn')(shortcut)

    x = layers.add([x, shortcut], name=name_base + 'add')
    x = Activation('relu', name=name_base + 'relu4')(x)
    return x

def ResNet50(input_shape=[224, 224, 3], classes=1000):
    img_input = Input(shape=input_shape)

    # Stem: 7x7 conv with stride 2, then 3x3 max pooling with stride 2
    x = ZeroPadding2D((3, 3))(img_input)
    x = Conv2D(64, (7, 7), strides=(2, 2), name='conv1')(x)
    x = BatchNormalization(name='bn_conv1')(x)
    x = Activation('relu')(x)
    x = MaxPooling2D((3, 3), strides=(2, 2))(x)

    # Stage 2: 1 conv block (no downsampling) + 2 identity blocks
    x = conv_block(x, 3, [64, 64, 256], stage=2, block='a', strides=(1, 1))
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='b')
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='c')

    # Stage 3: 1 conv block + 3 identity blocks
    x = conv_block(x, 3, [128, 128, 512], stage=3, block='a')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='b')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='c')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='d')

    # Stage 4: 1 conv block + 5 identity blocks
    x = conv_block(x, 3, [256, 256, 1024], stage=4, block='a')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='b')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='c')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='d')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='e')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='f')

    # Stage 5: 1 conv block + 2 identity blocks
    x = conv_block(x, 3, [512, 512, 2048], stage=5, block='a')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='b')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='c')

    # Classifier head
    x = AveragePooling2D((7, 7), name='avg_pool')(x)
    x = Flatten()(x)
    x = Dense(classes, activation='softmax', name='fc1000')(x)

    model = Model(img_input, x, name='resnet50')
    # Load the pretrained weights; the architecture must match the weight file
    model.load_weights("/content/drive/MyDrive/Colab Notebooks/第8天/resnet50_weights_tf_dim_ordering_tf_kernels.h5")

    return model
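The ResNet50 function can be checked by hand without building it in TensorFlow. The sketch below traces the feature-map size through the network with the usual output-size formula floor((n + 2p - k) / s) + 1 for unpadded ('valid') layers, and counts the layers behind the name "ResNet-50". conv_out is a hypothetical helper written only for this check; the stage sizes it produces can be compared with the 55×55 and 28×28 shapes in the model summary.

```python
def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a 'valid' conv/pooling layer after `pad` zero pixels per side."""
    return (size + 2 * pad - kernel) // stride + 1


size = 224
size = conv_out(size, 7, stride=2, pad=3)  # ZeroPadding2D((3, 3)) + 7x7 conv, stride 2
size = conv_out(size, 3, stride=2)         # 3x3 max pooling, stride 2

# Stage 2's conv_block uses strides=(1, 1); stages 3-5 downsample with a strided 1x1 conv.
# The 3x3 convs use padding='same', so they never change the size.
stage_sizes = {2: size}
for stage in (3, 4, 5):
    size = conv_out(size, 1, stride=2)
    stage_sizes[stage] = size

pooled = conv_out(size, 7, stride=7)  # AveragePooling2D((7, 7)): stride defaults to the pool size

# 1 stem conv + 3 convs per bottleneck block + 1 dense layer (shortcut convs are not counted)
blocks_per_stage = {2: 3, 3: 4, 4: 6, 5: 3}
depth = 1 + 3 * sum(blocks_per_stage.values()) + 1

print(stage_sizes)  # {2: 55, 3: 28, 4: 14, 5: 7}
print(pooled)       # 1
print(depth)        # 50
```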

model = ResNet50()
model.summary()

Model: "resnet50"

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| input_2 (InputLayer) | [(None, 224, 224, 3)] | 0 | [] |
| zero_padding2d_1 (ZeroPadding2D) | (None, 230, 230, 3) | 0 | ['input_2[0][0]'] |
| conv1 (Conv2D) | (None, 112, 112, 64) | 9472 | ['zero_padding2d_1[0][0]'] |
| bn_conv1 (BatchNormalization) | (None, 112, 112, 64) | 256 | ['conv1[0][0]'] |
| activation_1 (Activation) | (None, 112, 112, 64) | 0 | ['bn_conv1[0][0]'] |
| max_pooling2d_1 (MaxPooling2D) | (None, 55, 55, 64) | 0 | ['activation_1[0][0]'] |
| 2a_conv_block_conv1 (Conv2D) | (None, 55, 55, 64) | 4160 | ['max_pooling2d_1[0][0]'] |
| 2a_conv_block_bn1 (BatchNormalization) | (None, 55, 55, 64) | 256 | ['2a_conv_block_conv1[0][0]'] |
| 2a_conv_block_relu1 (Activation) | (None, 55, 55, 64) | 0 | ['2a_conv_block_bn1[0][0]'] |
| 2a_conv_block_conv2 (Conv2D) | (None, 55, 55, 64) | 36928 | ['2a_conv_block_relu1[0][0]'] |
| 2a_conv_block_bn2 (BatchNormalization) | (None, 55, 55, 64) | 256 | ['2a_conv_block_conv2[0][0]'] |
| 2a_conv_block_relu2 (Activation) | (None, 55, 55, 64) | 0 | ['2a_conv_block_bn2[0][0]'] |
| 2a_conv_block_conv3 (Conv2D) | (None, 55, 55, 256) | 16640 | ['2a_conv_block_relu2[0][0]'] |
| 2a_conv_block_res_conv (Conv2D) | (None, 55, 55, 256) | 16640 | ['max_pooling2d_1[0][0]'] |
| 2a_conv_block_bn3 (BatchNormalization) | (None, 55, 55, 256) | 1024 | ['2a_conv_block_conv3[0][0]'] |
| 2a_conv_block_bn (BatchNormalization) | (None, 55, 55, 256) | 1024 | ['2a_conv_block_res_conv[0][0]'] |
| 2a_conv_block_add (Add) | (None, 55, 55, 256) | 0 | ['2a_conv_block_bn3[0][0]', '2a_conv_block_bn[0][0]'] |
| 2a_conv_block_relu4 (Activation) | (None, 55, 55, 256) | 0 | ['2a_conv_block_add[0][0]'] |
| 2b_identity_block_block_conv1 (Conv2D) | (None, 55, 55, 64) | 16448 | ['2a_conv_block_relu4[0][0]'] |
| 2b_identity_block_block_bn1 (BatchNormalization) | (None, 55, 55, 64) | 256 | ['2b_identity_block_block_conv1[0][0]'] |
| 2b_identity_block_block_relu1 (Activation) | (None, 55, 55, 64) | 0 | ['2b_identity_block_block_bn1[0][0]'] |
| 2b_identity_block_block_conv2 (Conv2D) | (None, 55, 55, 64) | 36928 | ['2b_identity_block_block_relu1[0][0]'] |
| 2b_identity_block_block_bn2 (BatchNormalization) | (None, 55, 55, 64) | 256 | ['2b_identity_block_block_conv2[0][0]'] |
| 2b_identity_block_block_relu2 (Activation) | (None, 55, 55, 64) | 0 | ['2b_identity_block_block_bn2[0][0]'] |
| 2b_identity_block_block_conv3 (Conv2D) | (None, 55, 55, 256) | 16640 | ['2b_identity_block_block_relu2[0][0]'] |
| 2b_identity_block_block_bn3 (BatchNormalization) | (None, 55, 55, 256) | 1024 | ['2b_identity_block_block_conv3[0][0]'] |
| 2b_identity_block_block_add (Add) | (None, 55, 55, 256) | 0 | ['2b_identity_block_block_bn3[0][0]', '2a_conv_block_relu4[0][0]'] |
| 2b_identity_block_block_relu4 (Activation) | (None, 55, 55, 256) | 0 | ['2b_identity_block_block_add[0][0]'] |
| 2c_identity_block_block_conv1 (Conv2D) | (None, 55, 55, 64) | 16448 | ['2b_identity_block_block_relu4[0][0]'] |
| 2c_identity_block_block_bn1 (BatchNormalization) | (None, 55, 55, 64) | 256 | ['2c_identity_block_block_conv1[0][0]'] |
| 2c_identity_block_block_relu1 (Activation) | (None, 55, 55, 64) | 0 | ['2c_identity_block_block_bn1[0][0]'] |
| 2c_identity_block_block_conv2 (Conv2D) | (None, 55, 55, 64) | 36928 | ['2c_identity_block_block_relu1[0][0]'] |
| 2c_identity_block_block_bn2 (BatchNormalization) | (None, 55, 55, 64) | 256 | ['2c_identity_block_block_conv2[0][0]'] |
| 2c_identity_block_block_relu2 (Activation) | (None, 55, 55, 64) | 0 | ['2c_identity_block_block_bn2[0][0]'] |
| 2c_identity_block_block_conv3 (Conv2D) | (None, 55, 55, 256) | 16640 | ['2c_identity_block_block_relu2[0][0]'] |
| 2c_identity_block_block_bn3 (BatchNormalization) | (None, 55, 55, 256) | 1024 | ['2c_identity_block_block_conv3[0][0]'] |
| 2c_identity_block_block_add (Add) | (None, 55, 55, 256) | 0 | ['2c_identity_block_block_bn3[0][0]', '2b_identity_block_block_relu4[0][0]'] |
| 2c_identity_block_block_relu4 (Activation) | (None, 55, 55, 256) | 0 | ['2c_identity_block_block_add[0][0]'] |
| 3a_conv_block_conv1 (Conv2D) | (None, 28, 28, 128) | 32896 | ['2c_identity_block_block_relu4[0][0]'] |
| 3a_conv_block_bn1 (BatchNormalization) | (None, 28, 28, 128) | 512 | ['3a_conv_block_conv1[0][0]'] |
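The Param # column can be verified by hand: a Conv2D layer with a k×k kernel has k·k·C_in·C_out weights plus C_out biases, and a BatchNormalization layer stores four values per channel (gamma, beta, moving mean, moving variance), of which only gamma and beta are trainable. The two helpers below are hypothetical and simply reproduce this arithmetic for several rows of the summary.

```python
def conv2d_params(kernel, c_in, c_out, use_bias=True):
    """Conv2D weights: kernel*kernel*c_in*c_out, plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + (c_out if use_bias else 0)


def batchnorm_params(channels):
    """BatchNormalization: gamma, beta, moving_mean, moving_variance per channel."""
    return 4 * channels


print(conv2d_params(7, 3, 64))     # conv1 -> 9472
print(conv2d_params(1, 64, 64))    # 2a_conv_block_conv1 -> 4160
print(conv2d_params(3, 64, 64))    # 2a_conv_block_conv2 -> 36928
print(conv2d_params(1, 64, 256))   # 2a_conv_block_conv3 and 2a_conv_block_res_conv -> 16640
print(conv2d_params(1, 256, 64))   # 2b_identity_block_block_conv1 -> 16448
print(conv2d_params(1, 256, 128))  # 3a_conv_block_conv1 -> 32896
print(batchnorm_params(64))        # bn_conv1 -> 256
print(batchnorm_params(128))       # 3a_conv_block_bn1 -> 512
print(batchnorm_params(256))       # 2a_conv_block_bn3 -> 1024
```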
