Building and installing TensorFlow for C++ from source, I fell into a lot of deep pits... It took two days to climb back out.

1. Install Bazel. Installing from Bazel's apt repository gives the default (latest) release, which at the time was Bazel 0.27.1:

```bash
echo "deb [arch=amd64] http://storage.googleapis.com/bazel-apt stable jdk1.8" | sudo tee /etc/apt/sources.list.d/bazel.list
curl https://bazel.build/bazel-release.pub.gpg | sudo apt-key add -
sudo apt-get update && sudo apt-get install bazel
```

However, building TensorFlow 1.13.1 requires Bazel 0.21.0, so uninstall Bazel 0.27.1:

```bash
sudo apt-get remove bazel
```

Then download the 0.21.0 release from GitHub and install it.
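The 0.21.0 install can be scripted roughly as follows. This is a sketch, not the exact command from the original post: it assumes the GitHub release asset is named `bazel-<version>-installer-linux-x86_64.sh` and that `~/bin` is on your `PATH` (the installer's `--user` mode installs there). The helper names `install_bazel`, `bazel_label` and `require_bazel` are hypothetical.

```bash
# Hypothetical helpers: install a pinned Bazel release and check it.
# ASSUMPTION: release assets are named bazel-<version>-installer-linux-x86_64.sh.

# Download the installer for one Bazel version and install it for the
# current user only (--user puts the binary in ~/bin).
install_bazel() {
  local version="$1"
  local installer="bazel-${version}-installer-linux-x86_64.sh"
  wget "https://github.com/bazelbuild/bazel/releases/download/${version}/${installer}" &&
    chmod +x "$installer" &&
    "./$installer" --user
}

# Read `bazel version` output on stdin and print the "Build label:" value.
bazel_label() {
  sed -n 's/^Build label: *//p'
}

# Return non-zero unless the bazel on PATH reports exactly version $1.
require_bazel() {
  local want="$1" have
  have="$(bazel version 2>/dev/null | bazel_label)"
  if [ "$have" != "$want" ]; then
    echo "need bazel $want, found '${have:-none}'" >&2
    return 1
  fi
}
```

Usage, after `sudo apt-get remove bazel`: `install_bazel 0.21.0 && require_bazel 0.21.0`. Checking the version up front catches a leftover 0.27.1 on the `PATH` before a long build starts.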

2. Download the TensorFlow source code.
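One way to get the sources is a shallow clone of the release branch. This is a sketch assuming the upstream repository at github.com/tensorflow/tensorflow and its `r1.13` branch; the default directory name `tensorflow-r1.13` matches the shell prompt in the configure session, and `fetch_tf_source` is a hypothetical helper name.

```bash
# Hypothetical helper: shallow-clone one TensorFlow branch or tag.
# Defaults assume the r1.13 branch and a directory named after it.
fetch_tf_source() {
  local ref="${1:-r1.13}"
  local dest="${2:-tensorflow-${ref}}"
  # --branch accepts a branch or a tag; --depth 1 skips the full history.
  git clone --branch "$ref" --depth 1 \
    https://github.com/tensorflow/tensorflow.git "$dest"
}
```

`fetch_tf_source` with no arguments clones `r1.13` into `tensorflow-r1.13`. To pin the exact 1.13.1 release, pass the tag instead, e.g. `fetch_tf_source v1.13.1 tensorflow-r1.13`.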

3. In the source root directory, open a terminal and run the following command to configure TensorFlow:

```bash
./configure
```

The full configure session is below. Where no answer follows a prompt, I pressed Enter to accept the default:

```text
thorking@thorking:~/tensorflow-r1.13$ ./configure
Extracting Bazel installation...
WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".
INFO: Invocation ID: 8dbb128b-33cc-4574-924b-6fbc04e22246
You have bazel 0.21.0 installed.
Please specify the location of python. [Default is /home/thorking/anaconda3/bin/python]:

Found possible Python library paths:
/home/thorking/anaconda3/lib/python3.5/site-packages
Please input the desired Python library path to use. Default is [/home/thorking/anaconda3/lib/python3.5/site-packages]

Do you wish to build TensorFlow with XLA JIT support? [Y/n]: n
No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n
No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]: n
No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: y
CUDA support will be enabled for TensorFlow.

Please specify the CUDA SDK version you want to use. [Leave empty to default to CUDA 10.0]:

Please specify the location where CUDA 10.0 toolkit is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Please specify the cuDNN version you want to use. [Leave empty to default to cuDNN 7]:

Please specify the location where cuDNN 7 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Do you wish to build TensorFlow with TensorRT support? [y/N]: N
No TensorRT support will be enabled for TensorFlow.

Please specify the locally installed NCCL version you want to use. [Default is to use https://github.com/nvidia/nccl]:

Please specify a list of comma-separated Cuda compute capabilities you want to build with.
You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.
Please note that each additional compute capability significantly increases your build time and binary size. [Default is: 6.1]:

Do you want to use clang as CUDA compiler? [y/N]: n
nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Do you wish to build TensorFlow with MPI support? [y/N]: N
No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native -Wno-sign-compare]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: N
Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See .bazelrc for more details.
--config=mkl # Build with MKL support.
--config=monolithic # Config for mostly static monolithic build.
--config=gdr # Build with GDR support.
--config=verbs # Build with libverbs support.
--config=ngraph # Build with Intel nGraph support.
--config=dynamic_kernels # (Experimental) Build kernels into separate shared objects.
Preconfigured Bazel build configs to DISABLE default on features:
--config=noaws # Disable AWS S3 filesystem support.
--config=nogcp # Disable GCP support.
--config=nohdfs # Disable HDFS support.
--config=noignite # Disable Apacha Ignite support.
--config=nokafka # Disable Apache Kafka support.
--config=nonccl # Disable NVIDIA NCCL support.
Configuration finished
```
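The same answers can be supplied without the interactive prompts, which saves time when reconfiguring after a failed build. This is a sketch under an assumption: that TensorFlow 1.13's `configure.py` reads each answer from the environment variable named below before prompting, so check the names against `configure.py` in your own checkout. The values mirror the session above, the paths are those of that machine, and `export_tf_configure_answers` is a hypothetical helper. NCCL is left unset, so `./configure` may still ask about it.

```bash
# Hypothetical helper: pre-answer ./configure via environment variables.
# ASSUMPTION: configure.py checks these variable names before prompting.
export_tf_configure_answers() {
  export PYTHON_BIN_PATH="$HOME/anaconda3/bin/python"
  export PYTHON_LIB_PATH="$HOME/anaconda3/lib/python3.5/site-packages"
  export TF_ENABLE_XLA=0            # XLA JIT: n
  export TF_NEED_OPENCL_SYCL=0      # OpenCL SYCL: n
  export TF_NEED_ROCM=0             # ROCm: n
  export TF_NEED_CUDA=1             # CUDA: y
  export TF_CUDA_VERSION=10.0
  export CUDA_TOOLKIT_PATH=/usr/local/cuda
  export TF_CUDNN_VERSION=7
  export CUDNN_INSTALL_PATH=/usr/local/cuda
  export TF_NEED_TENSORRT=0         # TensorRT: N
  export TF_CUDA_COMPUTE_CAPABILITIES=6.1
  export TF_CUDA_CLANG=0            # use nvcc, not clang
  export GCC_HOST_COMPILER_PATH=/usr/bin/gcc
  export TF_NEED_MPI=0              # MPI: N
  export CC_OPT_FLAGS="-march=native -Wno-sign-compare"
  export TF_SET_ANDROID_WORKSPACE=0 # no Android WORKSPACE
}
```

Usage: `export_tf_configure_answers && ./configure`.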

4. Build with Bazel. This is the CUDA build, so it assumes a machine with a GPU:

```bash
bazel build --config=opt --config=cuda //tensorflow:libtensorflow_cc.so
```

5. Problems during the build.

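Problem 1: fetching the first two packages, icu and grpc, kept failing.

Rerunning the build and watching the output showed it was a network problem: the connection stalled while the packages were being fetched. Switching to my phone's hotspot made the download much faster. Once both packages were downloaded, the real compilation began.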
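Since rerunning the build resumes the downloads, the reruns can be automated. A minimal sketch: `retry` is a hypothetical helper, and the pause between attempts (`RETRY_DELAY`, default 5 seconds) is an arbitrary choice. It only papers over short network drops; a consistently slow connection, as in the case above, still needs a better network.

```bash
# Hypothetical helper: run a command up to N times until it succeeds.
# Usage: retry <attempts> <command> [args...]
retry() {
  local attempts="$1"; shift
  local n
  for ((n = 1; n <= attempts; n++)); do
    "$@" && return 0
    echo "attempt $n/$attempts failed: $*" >&2
    # no point waiting after the final attempt
    [ "$n" -lt "$attempts" ] && sleep "${RETRY_DELAY:-5}"
  done
  return 1
}
```

Usage: `retry 5 bazel build --config=opt --config=cuda //tensorflow:libtensorflow_cc.so`.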

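Problem 2: the build ran to the end and then failed...

The errors mainly reported that `_U_S_Stensorflow_Scc_Cops_Scandidate_Usampling_Uops_Ugen_Ucc___Utensorflow/libtensorflow_framework.so:` and similar were undefined.

Open /etc/ld.so.conf.d/libc.conf and add the line

```text
/usr/local/cuda-10.0/lib64
```

Save and close the file, then run the following to apply the change:

```bash
sudo ldconfig
```

Then build again. This time the build succeeded.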
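The library-path fix can be made repeatable with a small helper that appends the directory only once. This is a sketch: `add_ld_path` is a hypothetical name, and it has to run as root to write under /etc. As far as I know, on Ubuntu `/etc/ld.so.conf` includes every `*.conf` file in `/etc/ld.so.conf.d/`, so a dedicated file such as `cuda.conf` would work as well as editing `libc.conf`.

```bash
# Hypothetical helper: add DIR to a dynamic-linker config file, once.
# Appends the exact line only if it is not already present.
add_ld_path() {
  local conf="$1" dir="$2"
  # -x: match the whole line, -F: treat DIR as a fixed string, not a regex
  grep -qxF "$dir" "$conf" 2>/dev/null || printf '%s\n' "$dir" >> "$conf"
}
```

Usage, from a root shell where the function is defined: `add_ld_path /etc/ld.so.conf.d/libc.conf /usr/local/cuda-10.0/lib64 && ldconfig`. Afterwards, `ldconfig -p | grep cuda` should list libraries from that directory.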

6.编译Protobuf和Eigen

# protobuf

mkdir /tmp/proto

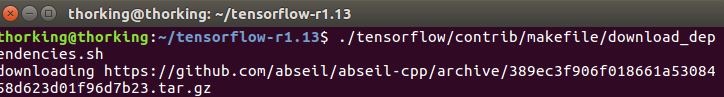

./tensorflow/contrib/makefile/download_dependencies.sh

cd tensorflow/contrib/makefile/downloads/protobuf/

./autogen.sh

./configure --prefix=/tmp/proto/

make

make install

# eigen

mkdir /tmp/eigen

cd ../eigen

mkdir build_dir

cd build_dir

cmake -DCMAKE_INSTALL_PREFIX=/tmp/eigen/ ../

make install

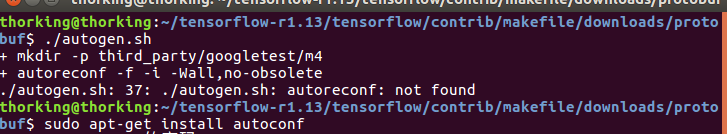
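Before moving on, a quick sanity check that both installs landed where the later steps expect them. A sketch, assuming the prefixes used above and the usual install layouts (`bin/protoc` and `include/google/protobuf/` for protobuf, `include/eigen3/Eigen/` for Eigen); `check_deps` is a hypothetical helper.

```bash
# Hypothetical helper: verify the protobuf and Eigen install prefixes.
# Prints each missing file and returns non-zero if any is missing.
check_deps() {
  local proto="${1:-/tmp/proto}" eigen="${2:-/tmp/eigen}" f ok=0
  for f in "$proto/bin/protoc" \
           "$proto/include/google/protobuf/message.h" \
           "$eigen/include/eigen3/Eigen/Core"; do
    if [ ! -e "$f" ]; then
      echo "missing: $f" >&2
      ok=1
    fi
  done
  return "$ok"
}
```

Usage: `check_deps` with no arguments checks /tmp/proto and /tmp/eigen; pass other prefixes if you copied the installs elsewhere.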

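(1) `./autogen.sh` failed with an error. Install autoreconf with `sudo apt-get install autoconf`. The configure script only appears in the directory after `./autogen.sh` has run, and only then can protobuf be built.

(2) After protobuf and eigen are built and installed, it's best to copy the protobuf and eigen directories out of /tmp, since files there may be gone after the next reboot.

(3) When running `./tensorflow/contrib/makefile/download_dependencies.sh`, a package may fail to download because of network speed and latency. Rerunning the script downloads everything again, including the files that were already fetched. To download selectively, open the script and comment out the files that were already downloaded.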
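Instead of commenting lines out by hand, the check can be scripted. A sketch under an assumption: that the script extracts each package into its own directory under `tensorflow/contrib/makefile/downloads/` (as with `protobuf` and `eigen` in step 6), so a non-empty directory means the package is already there. The helper names are hypothetical. A download that failed halfway can also leave a non-empty directory, so delete that directory before rerunning.

```bash
# Hypothetical helpers for skipping packages that are already downloaded.

# Succeeds if DIR exists and contains at least one entry.
already_downloaded() {
  local dir="$1"
  [ -d "$dir" ] && [ -n "$(ls -A "$dir")" ]
}

# Print each named package whose directory under DOWNLOADS is missing or empty.
missing_packages() {
  local downloads="$1"; shift
  local pkg
  for pkg in "$@"; do
    already_downloaded "$downloads/$pkg" || echo "$pkg"
  done
}
```

For example, `missing_packages tensorflow/contrib/makefile/downloads protobuf eigen` prints the packages whose lines should stay uncommented. If your copy of the script downloads through one shared function that takes the target directory, a guard such as `already_downloaded "$dir" && return 0` at the top of that function would skip finished packages automatically.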

7. A bug that showed up while testing an example

```text
fatal error: absl/strings/string_view.h: No such file or directory
```

Fix:

Add the absl folder directly to `include_directories`.
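For a plain `g++` build instead of CMake, the equivalent of adding absl to `include_directories` is an extra `-I` flag. A sketch that collects the flags in one place, under several assumptions worth checking in your own tree: Bazel's convenience symlinks (`bazel-genfiles`, `bazel-bin`, and `bazel-<source dir name>`) exist in the source root, absl lives in the external repository `com_google_absl`, and protobuf and Eigen were installed to /tmp/proto and /tmp/eigen as in step 6. `tf_cxx_flags` is a hypothetical helper.

```bash
# Hypothetical helper: print compile/link flags for a test.cc built
# against libtensorflow_cc.so. Args: TF source root, protobuf prefix,
# Eigen prefix. ASSUMPTION: Bazel's convenience symlinks exist in the root.
tf_cxx_flags() {
  local root="${1%/}" proto="${2:-/tmp/proto}" eigen="${3:-/tmp/eigen}"
  local ext="$root/bazel-$(basename "$root")/external"
  local flags=(
    "-I$root"
    "-I$root/bazel-genfiles"       # generated headers such as *.pb.h
    "-I$ext/com_google_absl"       # fixes absl/strings/string_view.h
    "-I$proto/include"
    "-I$eigen/include/eigen3"
    "-L$root/bazel-bin/tensorflow"
    -ltensorflow_cc
    -ltensorflow_framework
  )
  echo "${flags[*]}"
}
```

Usage: `g++ -std=c++11 test.cc $(tf_cxx_flags ~/tensorflow-r1.13) -o test`, then run it with `LD_LIBRARY_PATH=~/tensorflow-r1.13/bazel-bin/tensorflow ./test`. The command substitution is left unquoted on purpose so the flags split into separate words, which means the paths must not contain spaces.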

Other bugs I hit while testing, and their fixes:

Compiling test.cc directly: https://blog.csdn.net/u011285477/article/details/93975689

Loading a .pb file and running prediction: https://blog.csdn.net/qq_37541097/article/details/86232687
