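When training a model in PyTorch, logs help the developer record important information and parameters, which makes it easier to tune the model and its hyperparameters. TensorBoard is the most common choice, but PyTorch's support for it is not perfect: recording important model parameters as text with TensorBoard's writer.add_text() is inconvenient. A logger can take over the text logs while TensorBoard focuses on the training curves. Using the two together makes the workflow noticeably more efficient, and placing both under the TensorBoard log directory keeps them easy to find and review.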

- [Explainer] TensorBoard visualization: https://blog.csdn.net/ViatorSun/article/details/116275485

1. The train file

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# @Time    : 2022.01
# @Author  : 绿色羽毛
# @Email   : lvseyumao@foxmail.com
# @Blog    : https://blog.csdn.net/ViatorSun
# @version : "1.0"
# @Note    : Using TensorBoard together with a text logger
import json
import os
import socket
from datetime import datetime
from torch.utils.tensorboard import SummaryWriter

# NOTE: args, model, criterion, optimizer and lr_scheduler are assumed to be
# defined earlier in the training script; create_logger is defined in section 2.

""" ********************************* TensorBoard / logging configuration ********************************* """
current_time = datetime.now().strftime('%b%d_%H-%M-%S')
log_dir = os.path.join('runs', current_time + '_' + args.run_model + '_' + socket.gethostname())
writer = SummaryWriter(log_dir)  # also creates log_dir on disk
logger = create_logger(log_dir, current_time + args.log_name)  # text log goes into the same folder
config_from_args = args.__dict__
# Count only the trainable parameters.
total_parameters = sum(p.numel() for p in model.parameters() if p.requires_grad)

logger.info("=============================== Model Arguments ===============================")
logger.info(f">>> Model Name : {args.model_name, args.run_model}")
logger.info(f">>> Training time : {current_time}")
# NOTE: the original logs current_time here as well; the compute device was probably intended.
logger.info(f">>> Computation with : {current_time}")
# This is a parameter count in millions (M), not a size in MB.
logger.info(f"== Model Total params : {total_parameters / 1e6:.2f} M \n")
logger.info(f"== ArgumentParser:{json.dumps(config_from_args, indent=20)}")
logger.info("=============================== Train Arguments ===============================")
logger.info(f"== criterion :{criterion}")
logger.info(f"== optimizer :{optimizer.__class__}")
logger.info(f" :{json.dumps(optimizer.defaults, indent=20)}")
logger.info(f"== lr_scheduler :{lr_scheduler.__class__}")
logger.info(f" :{json.dumps(lr_scheduler.state_dict(), indent=20)}")
logger.info("==============================================================================")
logger.info(f"Creating model:{args.model_name}")
logger.info(model)

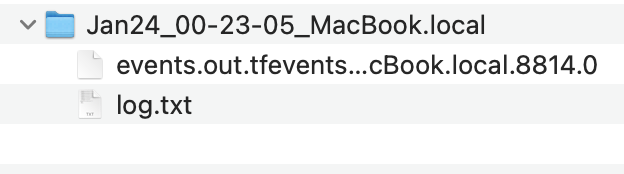

The generated log
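
One detail of the train file that is easy to trip over is the `json.dumps(args.__dict__)` call: it raises `TypeError` as soon as one argument holds a value that JSON cannot encode. The sketch below is a minimal illustration using only the standard library. The `demo_args` namespace and its fields are hypothetical and not taken from the original script, and `default=str` is one possible fallback rather than the author's approach.

```python
import argparse
import json
from pathlib import Path

# Hypothetical arguments, for illustration only.
demo_args = argparse.Namespace(
    model_name="resnet18",
    lr=0.01,
    data_root=Path("data/train"),  # a Path is not JSON-serializable by default
)

# vars(args) is equivalent to args.__dict__; default=str converts values that
# json cannot encode (here the Path) into strings instead of raising TypeError.
args_text = json.dumps(vars(demo_args), indent=4, default=str)
print(args_text)
```

The resulting string can be passed to `logger.info(...)` in the same way as in the train file.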

2. Creating the logger

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# @Time    : 2022.01
# @Author  : 绿色羽毛
# @Email   : lvseyumao@foxmail.com
# @Blog    : https://blog.csdn.net/ViatorSun
# @version : "1.0"
# @Note    : Text logging for PyTorch
import os
import logging

def create_logger(log_dir, filename, verbosity=1, name=None):
    # Map the verbosity argument to a logging level.
    level_dict = {0: logging.DEBUG, 1: logging.INFO, 2: logging.WARNING}
    formatter = logging.Formatter( "[%(asctime)s][%(filename)s][line:%(lineno)d][%(levelname)s] %(message)s" )
    logger = logging.getLogger(name)
    logger.setLevel(level_dict[verbosity])

    # Make sure the directory exists even if SummaryWriter has not created it yet.
    os.makedirs(log_dir, exist_ok=True)
    # fh = logging.FileHandler(filename, "w")
    # File handler: append to <log_dir>/<filename>.txt
    fh = logging.FileHandler(os.path.join(log_dir, filename+'.txt'), mode='a')
    fh.setFormatter(formatter)
    logger.addHandler(fh)

    # Stream handler: echo the same messages to the console.
    sh = logging.StreamHandler()
    sh.setFormatter(formatter)
    logger.addHandler(sh)
    return logger
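
Because `logging.getLogger(name)` returns the same logger object for the same name, calling a function like `create_logger` twice attaches the handlers twice, and every message is then written twice. The following is a minimal sketch of one way to guard against that. The function name `get_run_logger`, the logger name `handler_demo`, and the temporary directory are assumptions made for illustration, and the format string is shortened.

```python
import logging
import os
import tempfile


def get_run_logger(log_dir, filename, name="train"):
    """Variant of create_logger that is safe to call more than once."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if logger.handlers:  # already configured: do not add duplicate handlers
        return logger
    os.makedirs(log_dir, exist_ok=True)
    formatter = logging.Formatter("[%(asctime)s][%(levelname)s] %(message)s")
    # File handler: append to <log_dir>/<filename>.txt
    fh = logging.FileHandler(os.path.join(log_dir, filename + ".txt"), mode="a")
    fh.setFormatter(formatter)
    logger.addHandler(fh)
    # Stream handler: echo the same messages to the console.
    sh = logging.StreamHandler()
    sh.setFormatter(formatter)
    logger.addHandler(sh)
    return logger


demo_dir = tempfile.mkdtemp()  # throwaway directory for the demonstration
first = get_run_logger(demo_dir, "demo", name="handler_demo")
second = get_run_logger(demo_dir, "demo", name="handler_demo")  # returns the same logger
first.info("epoch 1 finished")

with open(os.path.join(demo_dir, "demo.txt"), encoding="utf-8") as f:
    log_lines = f.read().splitlines()
print(len(first.handlers), len(log_lines))
```

With the guard in place, the second call returns the already configured logger, so the message appears once in the file rather than once per call.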

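3. TensorBoard logs

The excerpt below is taken from the TensorBoard interface code in PyTorch. It shows that when the user does not pass log_dir, SummaryWriter automatically creates a runs folder and names the TensorBoard log directory current_time + '_' + gethostname() + comment: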

class SummaryWriter(object):
    """Writes entries directly to event files in the log_dir to be
    consumed by TensorBoard.

    The `SummaryWriter` class provides a high-level API to create an event file
    in a given directory and add summaries and events to it. The class updates the
    file contents asynchronously. This allows a training program to call methods
    to add data to the file directly from the training loop, without slowing down
    training.
    """

    def __init__(self, log_dir=None, comment='', purge_step=None, max_queue=10,
                 flush_secs=120, filename_suffix=''):
        """Creates a `SummaryWriter` that will write out events and summaries
        to the event file.

        Args:
            log_dir (string): Save directory location. Default is
              runs/**CURRENT_DATETIME_HOSTNAME**, which changes after each run.
              Use hierarchical folder structure to compare
              between runs easily. e.g. pass in 'runs/exp1', 'runs/exp2', etc.
              for each new experiment to compare across them.
            comment (string): Comment log_dir suffix appended to the default
              ``log_dir``. If ``log_dir`` is assigned, this argument has no effect.
            purge_step (int):
              When logging crashes at step :math:`T+X` and restarts at step :math:`T`,
              any events whose global_step larger or equal to :math:`T` will be
              purged and hidden from TensorBoard.
              Note that crashed and resumed experiments should have the same ``log_dir``.
            max_queue (int): Size of the queue for pending events and
              summaries before one of the 'add' calls forces a flush to disk.
              Default is ten items.
            flush_secs (int): How often, in seconds, to flush the
              pending events and summaries to disk. Default is every two minutes.
            filename_suffix (string): Suffix added to all event filenames in
              the log_dir directory. More details on filename construction in
              tensorboard.summary.writer.event_file_writer.EventFileWriter.

        Examples::

            from torch.utils.tensorboard import SummaryWriter

            # create a summary writer with automatically generated folder name.
            writer = SummaryWriter()
            # folder location: runs/May04_22-14-54_s-MacBook-Pro.local/

            # create a summary writer using the specified folder name.
            writer = SummaryWriter("my_experiment")
            # folder location: my_experiment

            # create a summary writer with comment appended.
            writer = SummaryWriter(comment="LR_0.1_BATCH_16")
            # folder location: runs/May04_22-14-54_s-MacBook-Pro.localLR_0.1_BATCH_16/

        """
        torch._C._log_api_usage_once("tensorboard.create.summarywriter")
        if not log_dir:
            import socket
            from datetime import datetime
            current_time = datetime.now().strftime('%b%d_%H-%M-%S')
            log_dir = os.path.join(
                'runs', current_time + '_' + socket.gethostname() + comment)
        self.log_dir = log_dir
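
The branch at the end of the excerpt can be tried out without installing PyTorch. The sketch below copies only that naming logic into a standalone helper. `resolve_log_dir` is a hypothetical name used for illustration, not part of the PyTorch or TensorBoard API, and the comment string is an arbitrary example.

```python
import os
import socket
from datetime import datetime


def resolve_log_dir(log_dir=None, comment=""):
    """Mirror the quoted branch: build a default name only when log_dir is empty."""
    if not log_dir:
        current_time = datetime.now().strftime("%b%d_%H-%M-%S")
        log_dir = os.path.join(
            "runs", current_time + "_" + socket.gethostname() + comment)
    return log_dir


# No log_dir given: the comment is appended to the generated folder name.
default_dir = resolve_log_dir(comment="_LR_0.1")
# Explicit log_dir given: the comment is ignored, as the docstring states.
explicit_dir = resolve_log_dir("runs/exp1", comment="_LR_0.1")
print(default_dir)
print(explicit_dir)
```

This also shows why the train file in section 1 builds the full path itself: once an explicit log_dir is passed, the comment suffix no longer takes effect, so any extra tag such as the model name has to be part of the path.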
