Table of Contents

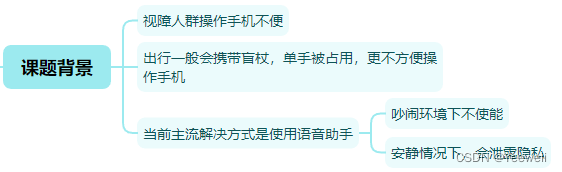

Section 1: Background

Section 2: Proposed Solution

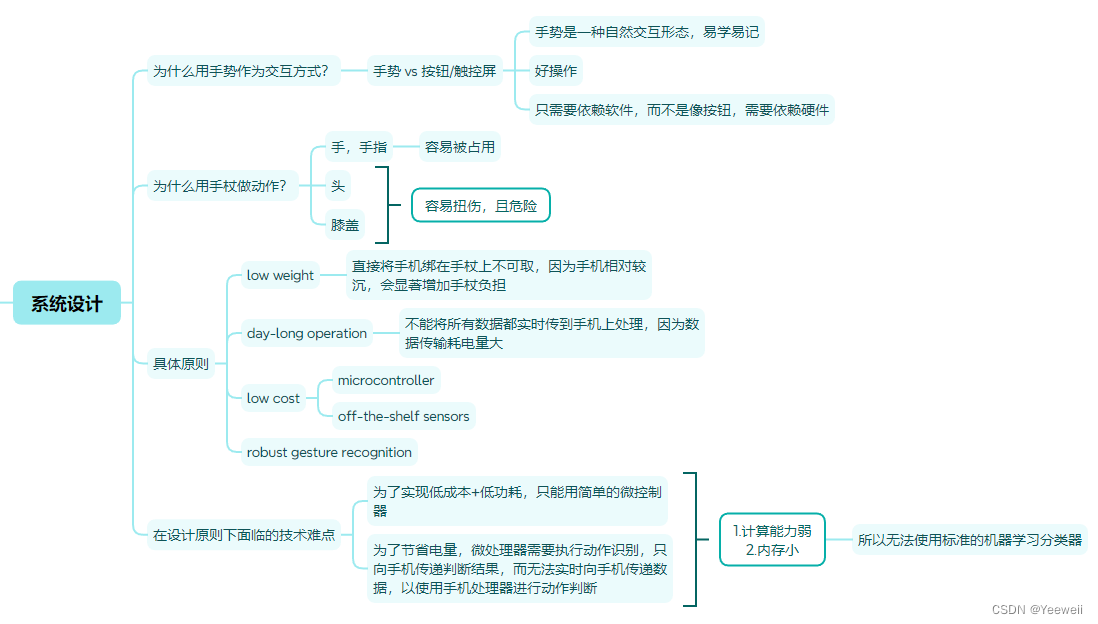

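Attach a lightweight, low-cost, low-power device to the white cane to recognize cane gestures, and use those gestures as an input modality for smartphone interaction.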

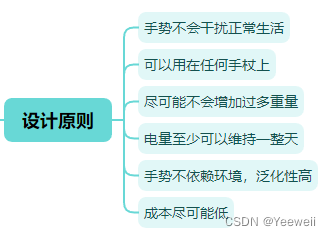

Section 3: Design Principles

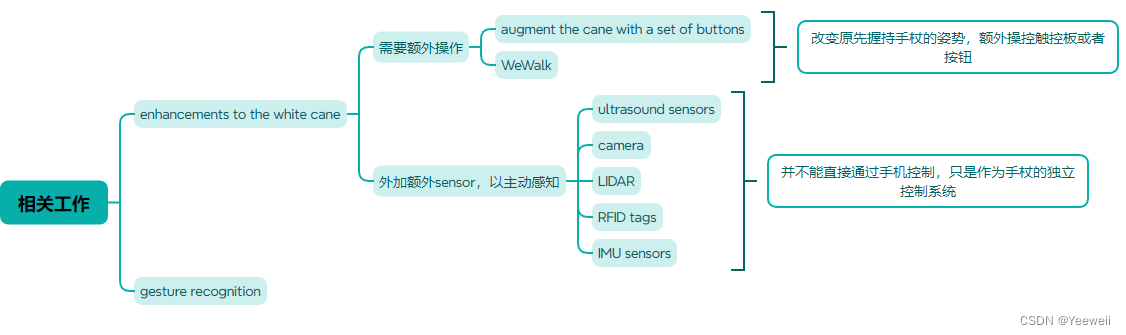

Section 4: Related Work

1. Using the cane as a navigation device

- Yuhang Zhao, Cynthia L. Bennett, Hrvoje Benko, Edward Cutrell, Christian Holz, Meredith Ringel Morris, and Mike Sinclair. 2018b. Enabling People with Visual Impairments to Navigate Virtual Reality with a Haptic and Auditory Cane Simulation. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18). ACM, New York, NY, USA, Article 116, 14 pages. DOI:http://dx.doi.org/10.1145/3173574.3173690

- WhiteCaneDay 2017. White Cane Day. (2017). http://www.whitecaneday.org/canes/.

- Michele A. Williams, Caroline Galbraith, Shaun K. Kane, and Amy Hurst. 2014. “Just Let the Cane Hit It”: How the Blind and Sighted See Navigation Differently. In Proceedings of the 16th International ACM SIGACCESS Conference on Computers & Accessibility (ASSETS ’14). ACM, New York, NY, USA, 217–224. DOI:http://dx.doi.org/10.1145/2661334.2661380

2. Augmenting the cane with a set of buttons

- Jared M. Batterman, Vincent F. Martin, Derek Yeung, and Bruce N. Walker. 2018. Connected cane: Tactile button input for controlling gestures of iOS voiceover embedded in a white cane. Assistive Technology 30, 2 (2018), 91–99.

3. Replacing the cane handle with one that contains a touchpad

- WeWALK. 2018. WeWALK SMART CANE. (2018). https://get.wewalk.io/.

4. Adding sensors to canes

I. Ultrasound sensor

- Vaibhav Singh, Rohan Paul, Dheeraj Mehra, Anurag Gupta, Vasu Dev Sharma, Saumya Jain, Chinmay Agarwal, Ankush Garg, Sandeep Singh Gujral, M. Balakrishnan, Kolin Paul, P.V.M. Rao, and Dipendra Manocha. 2010. Smart cane for the visually impaired: Design and controlled field testing of an affordable obstacle detection system. In 12th International Conference on Mobility and Transport for Elderly and Disabled Persons (TRANSED ’10).

II. Camera

- Jin Sun Ju, Eunjeong Ko, and Eun Yi Kim. 2009. EYECane: Navigating with Camera Embedded White Cane for Visually Impaired Person. In Proceedings of the 11th International ACM SIGACCESS Conference on Computers and Accessibility (Assets ’09). ACM, New York, NY, USA, 237–238. DOI:http://dx.doi.org/10.1145/1639642.1639693

III. LIDAR

- Tomàs Pallejà, Marcel Tresanchez, Mercè Teixidá, and Jordi Palacin. 2010. Bioinspired Electronic White Cane Implementation Based on a LIDAR, a Tri-Axial Accelerometer and a Tactile Belt. Sensors 10, 12 (2010), 11322–11339. DOI:http://dx.doi.org/10.3390/s101211322

IV. RFID tags

- Je Seok Lee, Heeryung Choi, and Joonhwan Lee. 2015. TalkingCane: Designing Interactive White Cane for Visually Impaired People’s Bus Usage. In Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct (MobileHCI ’15). ACM, New York, NY, USA, 668–673.

- J. Faria, S. Lopes, H. Fernandes, P. Martins, and J. Barroso. 2010. Electronic white cane for blind people navigation assistance. In 2010 World Automation Congress. 1–7.

- A. J. Fukasawa and K. Magatani. 2012. A navigation system for the visually impaired an intelligent white cane. In 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 4760–4763. DOI: http://dx.doi.org/10.1109/EMBC.2012.6347031

V. IMU sensors

- German H. Flores and Roberto Manduchi. 2016. WeAllWalk: An Annotated Data Set of Inertial Sensor Time Series from Blind Walkers. In Proceedings of the 18th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’16). ACM, New York, NY, USA, 141–150. DOI: http://dx.doi.org/10.1145/2982142.2982179

- Daniel Ashbrook, Carlos Tejada, Dhwanit Mehta, Anthony Jiminez, Goudam Muralitharam, Sangeeta Gajendra, and Ross Tallents. 2016. Bitey: An Exploration of Tooth Click Gestures for Hands-free User Interface Control. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI ’16). ACM, New York, NY, USA, 158–169. DOI: http://dx.doi.org/10.1145/2935334.2935389

- Sven Kratz and Maribeth Back. 2015. Towards Accurate Automatic Segmentation of IMU-Tracked Motion Gestures. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA ’15). ACM, New York, NY, USA, 1337–1342. DOI: http://dx.doi.org/10.1145/2702613.2732922

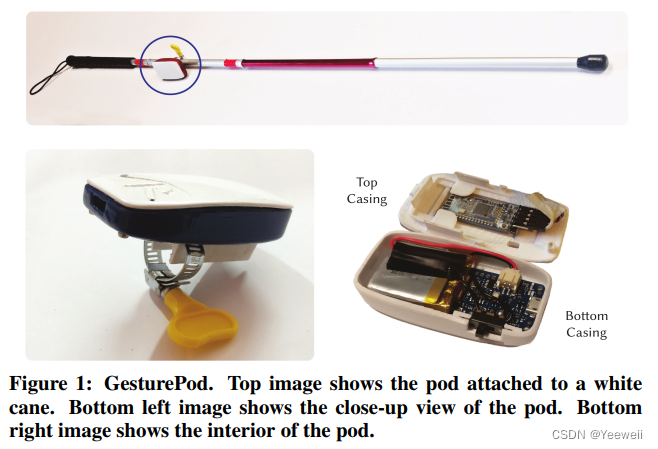

Section 5: System Design

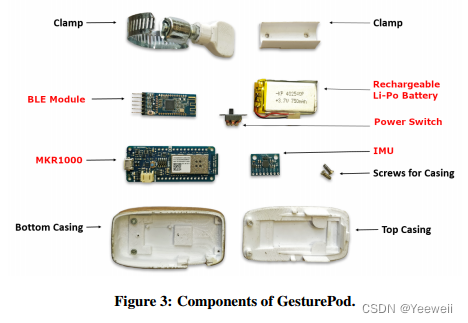

Section 6: Hardware Components
