
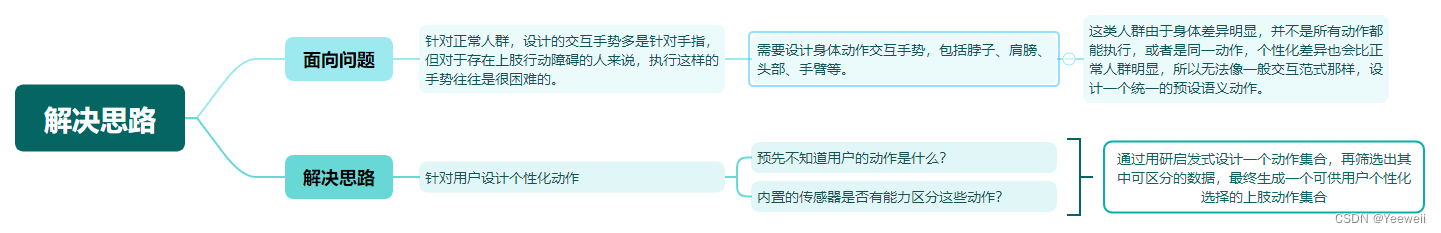

1. Problem Addressed and Approach

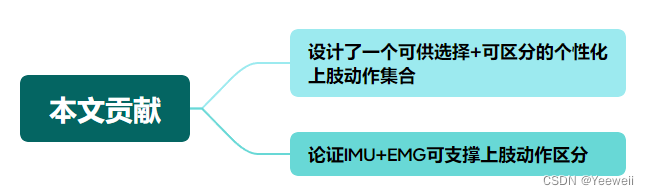

2. Contributions

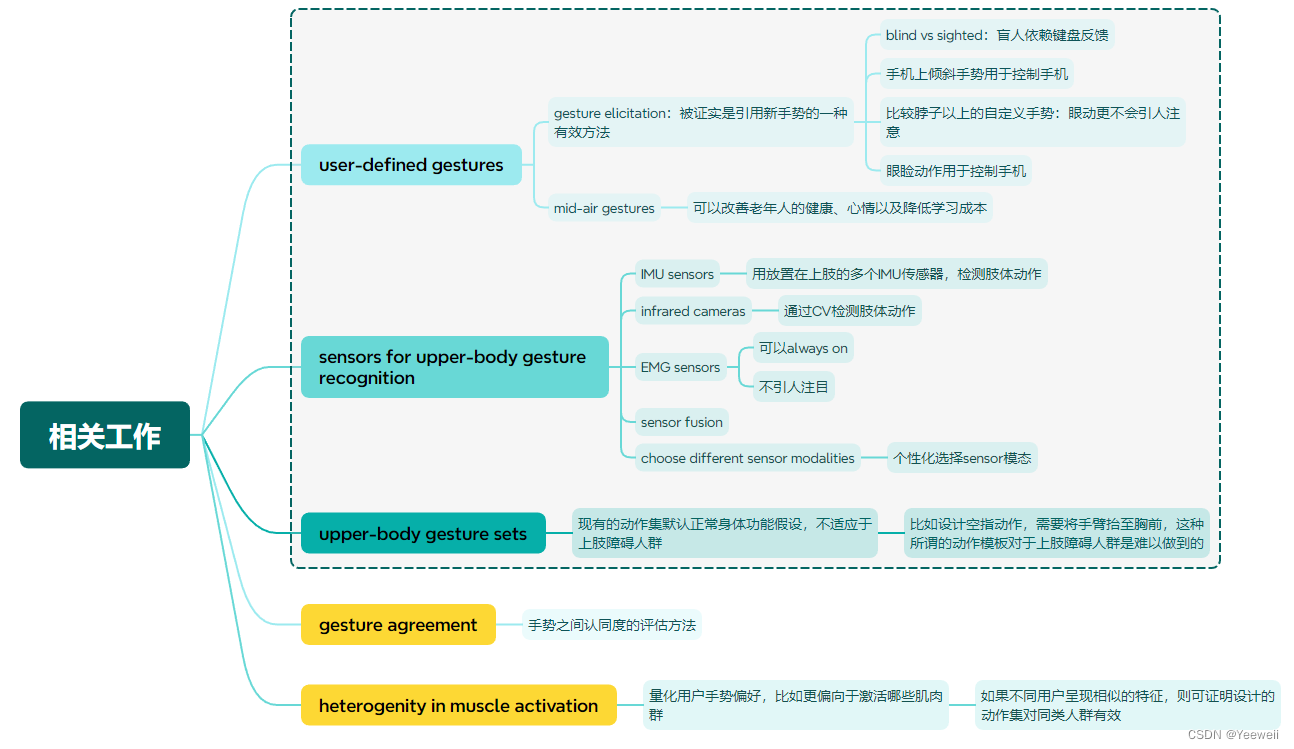

3. Related Work and Comparison

1. Related Work

I. user-defined gestures

- (1) gesture elicitation

- Jacob O Wobbrock, Htet Htet Aung, Brandon Rothrock, and Brad A Myers. 2005. Maximizing the guessability of symbolic input. In CHI’05 extended abstracts on Human Factors in Computing Systems. 1869–1872.

- Jacob O Wobbrock, Meredith Ringel Morris, and Andrew D Wilson. 2009. User-defined gestures for surface computing. In Proceedings of the SIGCHI conference on human factors in computing systems. 1083–1092.

- Santiago Villarreal-Narvaez, Jean Vanderdonckt, Radu-Daniel Vatavu, and Jacob O Wobbrock. 2020. A systematic review of gesture elicitation studies: What can we learn from 216 studies?. In Proceedings of the 2020 ACM designing interactive systems conference. 855–872.

- Meredith Ringel Morris, Jacob O Wobbrock, and Andrew D Wilson. 2010. Understanding users’ preferences for surface gestures. In Proceedings of graphics interface 2010. 261–268.

- Shaun K Kane, Jacob O Wobbrock, and Richard E Ladner. 2011. Usable gestures for blind people: understanding preference and performance. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 413–422.

- Nem Khan Dim and Xiangshi Ren. 2014. Designing motion gesture interfaces in mobile phones for blind people. Journal of Computer Science and Technology 29, 5 (2014), 812–824.

- Xuan Zhao, Mingming Fan, and Teng Han. 2022. “I Don’t Want People to Look At Me Differently”: Designing User-Defined Above-the-Neck Gestures for People with Upper Body Motor Impairments. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 1–15.

- Mingming Fan, Zhen Li, and Franklin Mingzhe Li. 2020. Eyelid gestures on mobile devices for people with motor impairments. In Proceedings of the 22nd International ACM SIGACCESS Conference on Computers and Accessibility. 1–8.

- (2) mid-air gestures

- Kathrin Gerling, Ian Livingston, Lennart Nacke, and Regan Mandryk. 2012. Full-body motion-based game interaction for older adults. In Proceedings of the SIGCHI conference on human factors in computing systems. 1873–1882.

- Kathrin M Gerling, Kristen K Dergousoff, Regan L Mandryk, et al. 2013. Is movement better? Comparing sedentary and motion-based game controls for older adults. In Proceedings-Graphics Interface. Canadian Information Processing Society, 133–140.

- Jan Bobeth, Susanne Schmehl, Ernst Kruijff, Stephanie Deutsch, and Manfred Tscheligi. 2012. Evaluating performance and acceptance of older adults using freehand gestures for TV menu control. In Proceedings of the 10th European conference on Interactive TV and Video. 35–44.

- Michela Ferron, Nadia Mana, and Ornella Mich. 2019. Designing mid-air gesture interaction with mobile devices for older adults. Perspectives on human-computer interaction research with older people (2019), 81–100.

- Michela Ferron, Nadia Mana, Ornella Mich, and Christopher Reeves. 2018. Design of multimodal interaction with mobile devices. Challenges for visually impaired and elderly users. In Proceedings of the 3rd International Conference on Human Computer Interaction Theory and Applications (HUCAPP). 140–146.

- Micael Carreira, Karine Lan Hing Ting, Petra Csobanka, and Daniel Gonçalves. 2017. Evaluation of in-air hand gestures interaction for older people. Universal Access in the Information Society 16 (2017), 561–580.

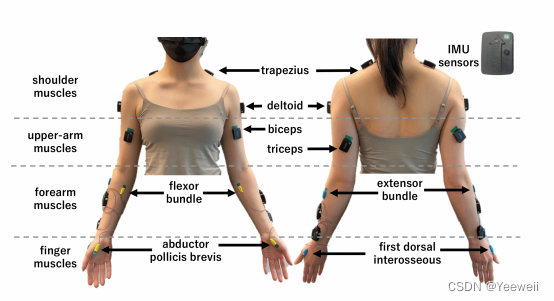

II. sensors for upper-body gesture recognition

- (1) IMU sensors

- Daniel Roetenberg, Henk Luinge, Per Slycke, et al. 2009. Xsens MVN: Full 6DOF human motion tracking using miniature inertial sensors. Xsens Motion Technologies BV, Tech. Rep 1 (2009), 1–7.

- (2) infrared camera

- Ross A Clark, Benjamin F Mentiplay, Emma Hough, and Yong Hao Pua. 2019. Three-dimensional cameras and skeleton pose tracking for physical function assessment: A review of uses, validity, current developments and Kinect alternatives. Gait & posture 68 (2019), 193–200.

- (3) EMG sensors

- Momona Yamagami, Keshia M Peters, Ivana Milovanovic, Irene Kuang, Zeyu Yang, Nanshu Lu, and Katherine M Steele. 2018. Assessment of dry epidermal electrodes for long-term electromyography measurements. Sensors 18, 4 (2018), 1269.

- Arc Media. 2016. Thalmic Labs – Myo Retail Launch. https://www.youtube.com/watch?v=A8lGstYAY14&ab_channel=ArcMedia [Accessed: April 12, 2023].

- T Scott Saponas, Desney S Tan, Dan Morris, Ravin Balakrishnan, Jim Turner, and James A Landay. 2009. Enabling always-available input with muscle-computer interfaces. In Proceedings of the 22nd annual ACM symposium on User interface software and technology. 167–176.

- (4) sensor fusion

- Yangjian Huang, Weichao Guo, Jianwei Liu, Jiayuan He, Haisheng Xia, Xinjun Sheng, Haitao Wang, Xuetao Feng, and Peter B Shull. 2015. Preliminary testing of a hand gesture recognition wristband based on emg and inertial sensor fusion. In Intelligent Robotics and Applications: 8th International Conference, ICIRA 2015, Portsmouth, UK, August 24-27, 2015, Proceedings, Part I 8. Springer, 359–367.

- (5) choose different sensor modalities based on the user’s abilities

- Ovidiu-Andrei Schipor, Laura-Bianca Bilius, and Radu-Daniel Vatavu. 2022. WearSkill: personalized and interchangeable input with wearables for users with motor impairments. In Proceedings of the 19th International Web for All Conference. 1–5.

III. upper-body gesture sets

- Jacob O Wobbrock, Krzysztof Z Gajos, Shaun K Kane, and Gregg C Vanderheiden. 2018. Ability-based design. Commun. ACM 61, 6 (2018), 62–71.

- Panagiotis Vogiatzidakis and Panayiotis Koutsabasis. 2018. Gesture elicitation studies for mid-air interaction: A review. Multimodal Technologies and Interaction 2, 4 (2018), 65.

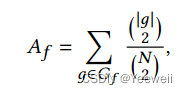

IV. gesture agreement

- Leah Findlater, Ben Lee, and Jacob Wobbrock. 2012. Beyond QWERTY: augmenting touch screen keyboards with multi-touch gestures for non-alphanumeric input. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2679–2682.

- Jacob O Wobbrock, Meredith Ringel Morris, and Andrew D Wilson. 2009. User-defined gestures for surface computing. In Proceedings of the SIGCHI conference on human factors in computing systems. 1083–1092.
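Gesture agreement is commonly quantified with the agreement score from Wobbrock et al. (2009): for a referent r with proposal set P_r, the participants' proposals are grouped into sets P_i of identical gestures, and A_r = Σ (|P_i| / |P_r|)², with the overall score A being the mean of A_r over all referents. These notes do not say which agreement measure the paper itself uses, so the following is only a minimal sketch of the 2009 score. It assumes that identical proposals have already been grouped under the same label; the function names and gesture labels are hypothetical.

```python
from collections import Counter


def agreement_score(proposals):
    """Agreement score A_r for one referent (Wobbrock et al., 2009).

    proposals: one gesture label per participant for a single referent.
    Assumes proposals that count as "the same gesture" already share a label.
    """
    if not proposals:
        raise ValueError("need at least one proposal")
    total = len(proposals)
    # Each group P_i contributes (|P_i| / |P_r|)^2.
    groups = Counter(proposals)
    return sum((size / total) ** 2 for size in groups.values())


def mean_agreement(referent_proposals):
    """Overall agreement A: mean of A_r over all referents."""
    scores = [agreement_score(p) for p in referent_proposals.values()]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical data: 10 participants propose a gesture for "scroll down".
    scroll_down = ["lower_arm"] * 6 + ["nod"] * 3 + ["shrug"]
    print(agreement_score(scroll_down))  # (0.6)^2 + (0.3)^2 + (0.1)^2, about 0.46
```

A score of 1.0 means every participant proposed the same gesture. Lower scores indicate more divergent proposals, which is how elicitation studies decide which gestures enter a consensus set.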

2. Comparison with Existing Work

| Existing work | This work |
|---|---|
| user-defined gestures | |
| sensors | Focuses only on IMU and EMG sensors, because these two sensor types have the following characteristics: |
| upper-body gesture sets | |

4. Details
