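Setting up the environment

To set up the mmdetection environment, see the CSDN blog post "mmdetection环境搭建(linux)" (mmdetection environment setup on Linux).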

Dataset

Place the dataset under mmdetection-master/data/coco.
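Before training it can help to check what the annotation file actually contains. The following is a minimal sketch, assuming the annotations are standard COCO-format JSON with `categories` and `annotations` lists; the function name `summarize_coco_annotations` and the example path are hypothetical and not part of mmdetection.

```python
import json
from collections import Counter


def summarize_coco_annotations(ann_path):
    """Return (category names ordered by id, instance count per category)."""
    with open(ann_path, "r", encoding="utf-8") as f:
        coco = json.load(f)
    id_to_name = {c["id"]: c["name"] for c in coco["categories"]}
    names = [id_to_name[i] for i in sorted(id_to_name)]
    counts = Counter(id_to_name[a["category_id"]] for a in coco["annotations"])
    return names, {name: counts.get(name, 0) for name in names}


if __name__ == "__main__":
    # Hypothetical path; point it at your own annotation file.
    names, counts = summarize_coco_annotations(
        "data/coco/annotations/instances_train2017.json")
    print(names)
    print(counts)
```

The category names printed here should match the class names used later in the config exactly, including case. As far as I know, mmdetection looks categories up by name, so a mismatch tends to leave those classes without annotations.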

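Config file setup

Many guides online directly modify the official model config files, but I would rather not touch the originals. First, it is tedious, since many files have to be changed. Second, the more you edit, the messier things get and the easier it is to break something. So I write my own config file and point training at it, leaving the official model config files unmodified.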

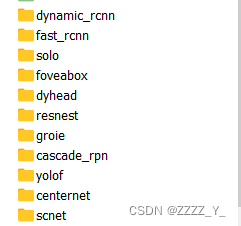

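The mmdetection-master/configs/ directory contains one folder per model, and each folder holds that model's config files, as shown in the figure. Here I use mask_rcnn_r50_fpn_1x_coco.py for object detection.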

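Preparation

Create a demo folder under mmdetection-master/configs/ to hold your own config files. Here the config file is named demo_mask_rcnn_r50_fpn_1x_coco.py.

Create a checkpoints folder under mmdetection-master and download the pretrained weights you plan to use into it.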

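Writing your own config file

First, inherit the base model structure:

    _base_ = '../mask_rcnn/mask_rcnn_r50_fpn_1x_coco.py'

Then override the individual parameters in your own config. Anything not overridden is inherited from the base file.

Because the goal here is bounding-box detection only (no instance masks), the model structure needs two changes:

1. Remove mask_head and mask_roi_extractor. Setting the corresponding nodes to None removes them.

2. Change the output of bbox_head, i.e. the number of classes. My dataset has 5 classes.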
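The effect of these two overrides can be illustrated with a simplified sketch of recursive dict merging. This is not mmcv's actual implementation. `merge_config` is a hypothetical helper, and the `base` dict is a heavily abbreviated stand-in for the real Mask R-CNN config. It only shows the idea that nested dicts are merged key by key, while a non-dict value such as None replaces the inherited node outright.

```python
import copy


def merge_config(base, override):
    """Recursively merge `override` into a copy of `base`.

    Nested dicts are merged key by key; any non-dict value (including None)
    replaces the inherited value.
    """
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Abbreviated stand-in for the inherited roi_head (most fields omitted).
base = dict(
    roi_head=dict(
        bbox_head=dict(type='Shared2FCBBoxHead', num_classes=80),
        mask_roi_extractor=dict(type='SingleRoIExtractor'),
        mask_head=dict(type='FCNMaskHead', num_classes=80)))

# The overrides used in this tutorial.
override = dict(
    roi_head=dict(
        bbox_head=dict(num_classes=5),
        mask_head=None,
        mask_roi_extractor=None))

merged = merge_config(base, override)
print(merged['roi_head'])
```

In the merged result bbox_head keeps its inherited type but now has num_classes=5, and both mask nodes are None.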

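    model = dict(
        roi_head=dict(
            bbox_head=dict(num_classes=5),
            mask_head=None,
            mask_roi_extractor=None))

The dataset is in COCO format:

    dataset_type = 'CocoDataset'  # name of the COCO dataset class registered in mmdetection

Set the classes to detect:

    classes = ('Inlet', 'Slightshort', 'Generalshort', 'Severeshort', 'Outlet')

Modifying the intermediate variables in the config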

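1. Since only detection is performed, set with_mask in train_pipeline to False (the default is True).

2. The default Collect step in train_pipeline is dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']). Remove 'gt_masks'.

P.S. The train dict must include pipeline=train_pipeline so that the modified train_pipeline is actually passed into data.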
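The two pipeline changes can also be expressed as a small transformation, shown here as a sketch. `to_detection_only` is a hypothetical helper rather than an mmdetection function, and `default_pipeline` is an abbreviated stand-in for the inherited pipeline.

```python
import copy


def to_detection_only(pipeline):
    """Return a copy of `pipeline` with mask loading and mask keys removed."""
    result = []
    for step in pipeline:
        step = copy.deepcopy(step)
        if step.get('type') == 'LoadAnnotations':
            step['with_mask'] = False  # stop loading instance masks
        elif step.get('type') == 'Collect':
            # drop the mask key so the collector does not look for it
            step['keys'] = [k for k in step['keys'] if k != 'gt_masks']
        result.append(step)
    return result


# Abbreviated stand-in for the inherited Mask R-CNN training pipeline.
default_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True, with_mask=True),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),
]

detection_pipeline = to_detection_only(default_pipeline)
for step in detection_pipeline:
    print(step)
```

Writing the pipeline out by hand, as this tutorial does, gives the same result and keeps the config file readable on its own.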

    train_pipeline = [
        dict(type='LoadImageFromFile'),
        dict(type='LoadAnnotations', with_bbox=True, with_mask=False),
        dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
        dict(type='RandomFlip', flip_ratio=0.5),
        dict(
            type='Normalize',
            mean=[123.675, 116.28, 103.53],
            std=[58.395, 57.12, 57.375],
            to_rgb=True),
        dict(type='Pad', size_divisor=32),
        dict(type='DefaultFormatBundle'),
        dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels'])
    ]

Set the dataset paths, batch size, num_workers and related parameters. As noted above, train must include pipeline=train_pipeline so that the modified pipeline reaches data.

    data = dict(
        samples_per_gpu=8,  # batch size
        workers_per_gpu=4,  # num_workers
        train=dict(
            # img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/train2017/',
            img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/train2017_alpha2/',
            # img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/train2017_rec/',
            classes=classes,
            # pass the modified train_pipeline in here, otherwise the changes have no effect
            pipeline=train_pipeline,
            ann_file='/data/zy/dataset/project/Cooper001_withlabel/coco/annotations/instances_train2017.json'),
        val=dict(
            img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/val2017_alpha2/',
            classes=classes,
            ann_file='/data/zy/dataset/project/Cooper001_withlabel/coco/annotations/instances_val2017.json'),
        test=dict(
            img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/test2017_alpha2/',
            classes=classes,
            ann_file='/data/zy/dataset/project/Cooper001_withlabel/coco/annotations/instances_test2017.json'))

Set the evaluation metric to bbox, and set classwise to True to print the AP of each class:

    evaluation = dict(interval=1, metric='bbox', classwise=True)

Set the number of epochs:

    runner = dict(type='EpochBasedRunner', max_epochs=20)

Optimizer:

    optimizer = dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001)

Load the pretrained weights:

    load_from = '/data/zy/code/mmdetection-master/checkpoints/mask_rcnn_r50_fpn_1x_coco_20200205-d4b0c5d6.pth'

The final config file

    _base_ = '../mask_rcnn/mask_rcnn_r50_fpn_1x_coco.py'

    # detection only: 5 classes, mask branch removed
    model = dict(
        roi_head=dict(
            bbox_head=dict(num_classes=5),
            mask_head=None,
            mask_roi_extractor=None))

    evaluation = dict(interval=1, metric='bbox', classwise=True)

    # optimizer = dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001)

    train_pipeline = [
        dict(type='LoadImageFromFile'),
        dict(type='LoadAnnotations', with_bbox=True, with_mask=False),
        dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
        dict(type='RandomFlip', flip_ratio=0.5),
        dict(
            type='Normalize',
            mean=[123.675, 116.28, 103.53],
            std=[58.395, 57.12, 57.375],
            to_rgb=True),
        dict(type='Pad', size_divisor=32),
        dict(type='DefaultFormatBundle'),
        dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels'])
    ]

    runner = dict(type='EpochBasedRunner', max_epochs=20)

    dataset_type = 'CocoDataset'
    classes = ('Inlet', 'Slightshort', 'Generalshort', 'Severeshort', 'Outlet')

    data = dict(
        samples_per_gpu=8,  # batch size
        workers_per_gpu=4,  # num_workers
        train=dict(
            img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/train2017/',
            classes=classes,
            pipeline=train_pipeline,
            ann_file='/data/zy/dataset/project/Cooper001_withlabel/coco/annotations/instances_train2017.json'),
        val=dict(
            img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/val2017/',
            classes=classes,
            ann_file='/data/zy/dataset/project/Cooper001_withlabel/coco/annotations/instances_val2017.json'),
        test=dict(
            img_prefix='/data/zy/dataset/project/Cooper001_withlabel/coco/test2017/',
            classes=classes,
            ann_file='/data/zy/dataset/project/Cooper001_withlabel/coco/annotations/instances_test2017.json'))

    load_from = '/data/zy/code/mmdetection-master/checkpoints/mask_rcnn_r50_fpn_1x_coco_20200205-d4b0c5d6.pth'

Training

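Create a work_dirs folder under mmdetection-master to hold the files generated during training.

Modify the config and work-dir arguments in tools/train.py. In the stock script config is a positional argument. The change below turns it into an optional --config flag with a default value:

    parser.add_argument('--config', default='./configs/demo/demo_mask_rcnn_r50_fpn_1x_coco.py', help='train config file path')
    parser.add_argument('--work-dir', default='./work_dirs/', help='the dir to save logs and models')

Run the following command to train. --config is followed by the path to your own config file, and --work-dir by the folder that will hold the files generated during training:

    python tools/train.py --config ./configs/demo/demo_mask_rcnn_r50_fpn_1x_coco.py --work-dir ./work_dirs/maskrcnn

Training then starts.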
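How the modified arguments behave can be sketched with a standalone parser. This is an illustration rather than the real tools/train.py: `build_parser` is a hypothetical helper that reproduces only the two arguments discussed here.

```python
import argparse


def build_parser():
    """Parser with only the two arguments discussed here, as optional flags."""
    parser = argparse.ArgumentParser(description='Train a detector')
    parser.add_argument(
        '--config',
        default='./configs/demo/demo_mask_rcnn_r50_fpn_1x_coco.py',
        help='train config file path')
    parser.add_argument(
        '--work-dir',
        default='./work_dirs/',
        help='the dir to save logs and models')
    return parser


if __name__ == '__main__':
    # With no flags the defaults are used; flags on the command line win.
    args = build_parser().parse_args()
    print(args.config, args.work_dir)
```

Note that argparse exposes `--work-dir` as `args.work_dir`. With defaults in place the script can be run without any arguments, and an explicit flag on the command line still overrides the default.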

Plotting the mAP curve

python tools/analysis_tools/analyze_logs.py plot_curve ./work_dirs/maskrcnn_orig/20231030_153716.log.json --keys bbox_mAP_50 --out ./work_dirs/maskrcnn_orig/map.jpg
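If you want the raw numbers rather than a plot, the log can be read directly. The sketch below assumes that the .log.json file holds one JSON object per line and that validation records carry "mode": "val" together with an "epoch" field and the metric key. Check a few lines of your own log before relying on it. `read_metric` is a hypothetical helper, not part of mmdetection.

```python
import json


def read_metric(log_json_path, key='bbox_mAP_50'):
    """Return [(epoch, value), ...] for validation records containing `key`."""
    points = []
    with open(log_json_path, 'r', encoding='utf-8') as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            record = json.loads(line)
            # skip the environment header and training records
            if record.get('mode') == 'val' and key in record:
                points.append((record['epoch'], record[key]))
    return points


if __name__ == '__main__':
    log_path = './work_dirs/maskrcnn_orig/20231030_153716.log.json'
    for epoch, value in read_metric(log_path):
        print(epoch, value)
```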

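Testing

    python tools/test.py \
        --config configs/demo/demo_mask_rcnn_r50_fpn_1x_coco.py \
        --work-dir work_dirs/maskrcnn/ \
        --checkpoint work_dirs/maskrcnn/latest.pth \
        --eval bbox \
        --out work_dirs/maskrcnn/result.pkl \
        --show-score-thr 0.5 \
        --show-dir work_dirs/maskrcnn/infered_images/ \
        --eval-options "classwise=True"

Notes on the options: --show-score-thr 0.5 can probably be dropped; --show-dir saves the images with the inference results drawn on them; --eval-options "classwise=True" computes the AP of each class. Do not put comments after the trailing backslashes, because that breaks the line continuation in the shell. The --config and --checkpoint flags presumably require tools/test.py to be modified in the same way as tools/train.py, since the stock script takes both as positional arguments.

Visualize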

Changing the label background color, font color, position, and so on

In mmdetection-master/mmdet/core/visualization/image.py, find the draw_labels function and change facecolor:

    def draw_labels(ax,
                    labels,
                    positions,
                    scores=None,
                    class_names=None,
                    color='w',
                    font_size=12,
                    scales=None,
                    horizontal_alignment='left'):
        """Draw labels on the axes.

        Args:
            ax (matplotlib.Axes): The input axes.
            labels (ndarray): The labels with the shape of (n, ).
            positions (ndarray): The positions to draw each labels.
            scores (ndarray): The scores for each labels.
            class_names (list[str]): The class names.
            color (list[tuple] | matplotlib.color): The colors for labels.
            font_size (int): Font size of texts. Default: 12.
            scales (list[float]): Scales of texts. Default: None.
            horizontal_alignment (str): The horizontal alignment method of
                texts. Default: 'left'.

        Returns:
            matplotlib.Axes: The result axes.
        """
        for i, (pos, label) in enumerate(zip(positions, labels)):
            label_text = class_names[
                label] if class_names is not None else f'class {label}'
            if scores is not None:
                label_text += f'|{scores[i]:.02f}'
            text_color = color[i] if isinstance(color, list) else color

            font_size_mask = font_size if scales is None else font_size * scales[i]
            ax.text(
                pos[0],
                pos[1],
                f'{label_text}',
                bbox={
                    'facecolor': 'white',  # change the label background color here
                    'alpha': 0.8,
                    'pad': 0.7,
                    'edgecolor': 'none'
                },
                color=text_color,
                fontsize=font_size_mask,
                verticalalignment='top',
                horizontalalignment=horizontal_alignment)

        return ax

Changing how bounding boxes are drawn

    # np, Polygon and PatchCollection are imported at the top of image.py
    def draw_bboxes(ax, bboxes, color='g', alpha=0.8, thickness=2):
        """Draw bounding boxes on the axes.

        Args:
            ax (matplotlib.Axes): The input axes.
            bboxes (ndarray): The input bounding boxes with the shape
                of (n, 4).
            color (list[tuple] | matplotlib.color): the colors for each
                bounding boxes.
            alpha (float): Transparency of bounding boxes. Default: 0.8.
            thickness (int): Thickness of lines. Default: 2.

        Returns:
            matplotlib.Axes: The result axes.
        """
        polygons = []
        for i, bbox in enumerate(bboxes):
            bbox_int = bbox.astype(np.int32)
            # four corners of the box: (x1, y1), (x1, y2), (x2, y2), (x2, y1)
            poly = [[bbox_int[0], bbox_int[1]], [bbox_int[0], bbox_int[3]],
                    [bbox_int[2], bbox_int[3]], [bbox_int[2], bbox_int[1]]]
            np_poly = np.array(poly).reshape((4, 2))
            polygons.append(Polygon(np_poly))
        p = PatchCollection(
            polygons,
            facecolor='none',
            edgecolors=color,
            linewidths=thickness,
            alpha=alpha)
        ax.add_collection(p)

        return ax

The following files may also contain things worth modifying, but I have not looked into them:

mmdetection-master/mmdet/apis/inference.py

mmdetection-master/mmdet/apis/test.py
