Note book

线性回归与softmax回归区别

y1 = x1w11+ x2w21 + x3 *w31 + b1

y2 = x1w12+ x2w22+ x3 *w32 + b3

本质差不多还是线性回归,但是通过预测结果 相加 算出每个结果的占比,取占比最大为输出结果

损失函数

平方均差

交叉熵损失函数

损失函数

例如现在有 y1,y2,y3,y4的预测结果分别为[0.8,0.2,0.3,0.1]

正确标签为 [0,1,0,0]

交叉熵损失函数为 Y = -[0 * log 0.8 + 1 * log 0.2 + 0 * log 0.3 + 0 * log 0.1]

计算的结果就是损失函数,预测结果为 1 则 log 1 = 0 ,代表损失为0

为什么取符号,log 1/2,其反函数 X^a = 1/2,结果a只能取负号了

输出函数

softmax = yi = exp(out1) / sum(exp(outi))

图像分类

import d2lzh as d2l

from mxnet.gluon import data as gdata

import sys

import timemnist_train = gdata.vision.FashionMNIST(train=True)

mnist_test = gdata.vision.FashionMNIST(train=False)len(mnist_train)60000

len(mnist_test)10000

feature,label = mnist_train[0]mnist_train[0](

[[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 4]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 62]

[ 61]

[ 21]

[ 29]

[ 23]

[ 51]

[136]

[ 61]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 88]

[201]

[228]

[225]

[255]

[115]

[ 62]

[137]

[255]

[235]

[222]

[255]

[135]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 47]

[252]

[234]

[238]

[224]

[215]

[215]

[229]

[108]

[180]

[207]

[214]

[224]

[231]

[249]

[254]

[ 45]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 1]

[ 0]

[ 0]

[214]

[222]

[210]

[213]

[224]

[225]

[217]

[220]

[254]

[233]

[219]

[221]

[217]

[223]

[221]

[240]

[254]

[ 0]

[ 0]

[ 1]

[ 0]

[ 0]

[ 0]]

[[ 1]

[ 0]

[ 0]

[ 0]

[128]

[237]

[207]

[224]

[224]

[207]

[216]

[214]

[210]

[208]

[211]

[221]

[208]

[219]

[213]

[226]

[211]

[237]

[150]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 2]

[ 0]

[ 0]

[237]

[222]

[215]

[207]

[210]

[212]

[213]

[206]

[214]

[213]

[214]

[213]

[210]

[215]

[214]

[206]

[199]

[218]

[255]

[ 13]

[ 0]

[ 2]

[ 0]

[ 0]]

[[ 0]

[ 4]

[ 0]

[ 85]

[228]

[210]

[218]

[200]

[211]

[208]

[203]

[215]

[210]

[209]

[209]

[210]

[213]

[211]

[210]

[217]

[206]

[213]

[231]

[175]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[217]

[224]

[215]

[206]

[205]

[204]

[217]

[230]

[222]

[215]

[224]

[233]

[228]

[232]

[228]

[224]

[207]

[212]

[215]

[213]

[229]

[ 31]

[ 0]

[ 4]

[ 0]]

[[ 1]

[ 0]

[ 21]

[225]

[212]

[212]

[203]

[211]

[225]

[193]

[139]

[136]

[195]

[147]

[156]

[139]

[128]

[162]

[197]

[223]

[207]

[220]

[213]

[232]

[177]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[123]

[226]

[207]

[211]

[209]

[205]

[228]

[158]

[ 90]

[103]

[186]

[138]

[100]

[121]

[147]

[158]

[183]

[226]

[208]

[214]

[209]

[216]

[255]

[ 13]

[ 0]

[ 1]]

[[ 0]

[ 0]

[226]

[219]

[202]

[208]

[206]

[205]

[216]

[184]

[156]

[150]

[193]

[170]

[164]

[168]

[188]

[186]

[200]

[219]

[216]

[213]

[213]

[211]

[233]

[148]

[ 0]

[ 0]]

[[ 0]

[ 45]

[227]

[204]

[214]

[211]

[218]

[222]

[221]

[230]

[229]

[221]

[213]

[224]

[233]

[226]

[220]

[219]

[221]

[224]

[223]

[217]

[210]

[218]

[213]

[254]

[ 0]

[ 0]]

[[ 0]

[157]

[226]

[203]

[207]

[211]

[209]

[215]

[205]

[198]

[207]

[208]

[201]

[201]

[197]

[203]

[205]

[210]

[207]

[213]

[214]

[214]

[214]

[213]

[208]

[234]

[107]

[ 0]]

[[ 0]

[235]

[213]

[204]

[211]

[210]

[209]

[213]

[202]

[197]

[204]

[215]

[217]

[213]

[212]

[210]

[206]

[212]

[203]

[211]

[218]

[215]

[214]

[208]

[209]

[222]

[230]

[ 0]]

[[ 52]

[255]

[207]

[200]

[208]

[213]

[210]

[210]

[208]

[207]

[202]

[201]

[209]

[216]

[216]

[216]

[216]

[214]

[212]

[205]

[215]

[201]

[228]

[208]

[214]

[212]

[218]

[ 25]]

[[118]

[217]

[201]

[206]

[208]

[213]

[208]

[205]

[206]

[210]

[211]

[202]

[199]

[207]

[208]

[209]

[210]

[207]

[210]

[210]

[245]

[139]

[119]

[255]

[202]

[203]

[236]

[114]]

[[171]

[238]

[212]

[203]

[220]

[216]

[217]

[209]

[207]

[205]

[210]

[211]

[206]

[204]

[206]

[209]

[211]

[215]

[210]

[206]

[221]

[242]

[ 0]

[224]

[234]

[230]

[181]

[ 26]]

[[ 39]

[145]

[201]

[255]

[157]

[115]

[250]

[200]

[207]

[206]

[207]

[213]

[216]

[206]

[205]

[206]

[207]

[206]

[215]

[207]

[221]

[238]

[ 0]

[ 0]

[188]

[ 85]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 31]

[ 0]

[129]

[253]

[190]

[207]

[208]

[208]

[208]

[209]

[211]

[211]

[209]

[209]

[209]

[212]

[201]

[226]

[165]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 2]

[ 0]

[ 0]

[ 0]

[ 0]

[ 89]

[254]

[199]

[199]

[192]

[196]

[198]

[199]

[201]

[202]

[203]

[204]

[203]

[203]

[200]

[222]

[155]

[ 0]

[ 3]

[ 3]

[ 3]

[ 2]

[ 0]]

[[ 0]

[ 0]

[ 1]

[ 5]

[ 0]

[ 0]

[255]

[218]

[226]

[232]

[228]

[224]

[222]

[220]

[219]

[219]

[217]

[221]

[220]

[212]

[236]

[ 95]

[ 0]

[ 2]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[155]

[194]

[168]

[170]

[171]

[173]

[173]

[179]

[177]

[175]

[172]

[171]

[167]

[161]

[180]

[ 0]

[ 0]

[ 1]

[ 0]

[ 1]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]

[[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]

[ 0]]]

<NDArray 28x28x1 @cpu(0)>,

2)

feature.shape(28, 28, 1)

label.shape()

label2

feature.dtypenumpy.uint8

def get_fashion_mnist_lables(lables):

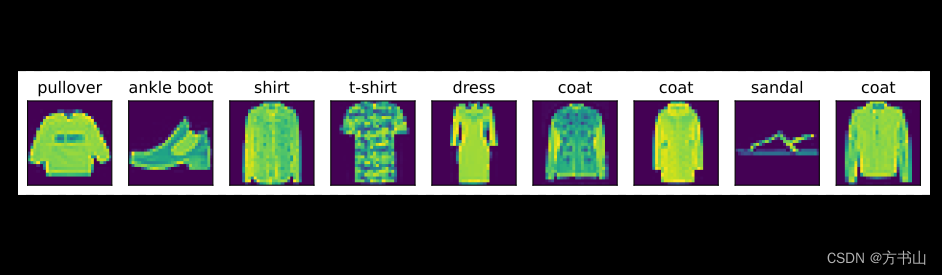

text_labels= ['t-shirt','trouser','pullover','dress','coat','sandal','shirt','sneaker','bag','ankle boot']

return [text_labels[int(i)] for i in lables]get_fashion_mnist_lables([0,1,0,0,1])['t-shirt', 'trouser', 't-shirt', 't-shirt', 'trouser']

def show_fasion_mnist(images,labels):

d2l.use_svg_display()

_,figs= d2l.plt.subplots(1,len(images),figsize=(12,12))

for f,img,lbl in zip(figs,images,labels):

f.imshow(img.reshape((28,28)).asnumpy())

f.set_title(lbl)

f.axes.get_xaxis().set_visible(False)

f.axes.get_yaxis().set_visible(False)

X,y = mnist_train[0:9]yarray([2, 9, 6, 0, 3, 4, 4, 5, 4], dtype=int32)

show_fasion_mnist(X,get_fashion_mnist_lables(y))读取小批量代码

import d2lzh as d2l

from mxnet import autograd,ndnum_inputs = 784

num_output = 10

W = nd.random.normal(scale=0.01,shape=(num_inputs,num_output))

b = nd.zeros(num_output)b[0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

<NDArray 10 @cpu(0)>

W[[ 2.21220627e-02 7.74003798e-03 1.04344031e-02 ... -4.51384438e-03

5.79383550e-03 -1.85608193e-02]

[-1.97687950e-02 -2.08019209e-03 2.44421791e-03 ... 1.46612618e-02

6.86290348e-03 3.54961026e-03]

[ 1.07316952e-02 1.20174605e-03 -9.71110165e-03 ... -1.07309278e-02

-1.04248272e-02 -1.32788485e-02]

...

[ 7.16084335e-03 -1.48858363e-02 1.59336086e-02 ... 6.20343257e-03

6.53620530e-03 -3.52914026e-03]

[-2.51065241e-03 1.38896573e-02 -1.53285675e-02 ... -2.01650523e-02

-9.52582434e-03 2.50978395e-03]

[-1.18692117e-02 1.19075924e-03 4.90005547e-03 ... 4.05345066e-03

1.36841787e-03 -4.33261557e-05]]

<NDArray 784x10 @cpu(0)>

W.attach_grad()

b.attach_grad()SoftMax 运算

X = nd.array([[1,2,3],[4,5,6]])

X.sum(axis=0,keepdims=True),X.sum(axis=1,keepdims=True)(

[[5. 7. 9.]]

<NDArray 1x3 @cpu(0)>,

[[ 6.]

[15.]]

<NDArray 2x1 @cpu(0)>)

nd.sum的运算 && softmax

def softmax(x):

X_exp = X.exp()

print("X_exp")

print(X_exp)

partition = X_exp.sum(axis=1,keepdims=True)

print("partition:")

print(partition)

return X_exp / partitionsoftmax([[1,2,3],[4,5,6]])X_exp

[[ 2.7182817 7.389056 20.085537 ]

[ 54.59815 148.41316 403.4288 ]]

<NDArray 2x3 @cpu(0)>

partition:

[[ 30.192875]

[606.4401 ]]

<NDArray 2x1 @cpu(0)>

[[0.09003057 0.24472848 0.66524094]

[0.09003057 0.24472846 0.66524094]]

<NDArray 2x3 @cpu(0)>

通过这个证明 传入的X值越大占的就越高

定义的模型

def net(x):

return softmax(nd.dot(X.reshape((-1,num_inputs)),W)+b)定义损失函数

y_hat = nd.array([[0.1,0.3,0.6],[0.3,0.2,0.5]])

y = nd.array([0,2],dtype='int32')

nd.pick(y_hat,y)[0.1 0.5]

<NDArray 2 @cpu(0)>

定义交叉熵损失函数

def cross_entropy(y_hat,y):

return -nd.pick(y_hat,y).log()计算机分类准确率

def accuracy(y_hat,y):

print(y_hat.argmax(axis=1))

print(y)

print(y_hat.argmax(axis=1) == y.astype('float32'))

return (y_hat.argmax(axis=1) == y.astype('float32')).mean().asscalar()测试计算分类准确率

accuracy(y_hat,y)[2. 2.]

<NDArray 2 @cpu(0)>

[0 2]

<NDArray 2 @cpu(0)>

[0. 1.]

<NDArray 2 @cpu(0)>

0.5

[2. 2.]

<NDArray 2 @cpu(0)>

[0 2]

<NDArray 2 @cpu(0)>

[0. 1.]

<NDArray 2 @cpu(0)>

最后一行数字代表识别是否成功,然后进行运算。

def evaluate_accuracy(data_iter,net):

acc_sum,n = 0.0,0

for X,y in data_iter:

y = y.astype('float32')

acc_sum += (net(X).argmax(axis=1) == y).sum().asscalar()

n+=y.size

return acc_sum/nevaluate_accuracy(mnist_test,net)---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[31], line 1

----> 1 evaluate_accuracy(mnist_test,net)

Cell In[30], line 5, in evaluate_accuracy(data_iter, net)

3 for X,y in data_iter:

4 y = y.astype('float32')

----> 5 acc_sum += (net(X).argmax(axis=1) == y).sum().asscalar()

6 n+=y.size

7 return acc_sum/n

Cell In[25], line 2, in net(x)

1 def net(x):

----> 2 return softmax(nd.dot(X.reshape((-1,num_inputs)),W)+b)

File ~/anaconda3/envs/network/lib/python3.9/site-packages/mxnet/ndarray/ndarray.py:1524, in NDArray.reshape(self, *shape, **kwargs)

1522 # Array size should not change

1523 if np.prod(res.shape) != np.prod(self.shape):

-> 1524 raise ValueError('Cannot reshape array of size {} into shape {}'.format(np.prod(self.shape), shape))

1525 return res

ValueError: Cannot reshape array of size 6 into shape (-1, 784)

读取小批量的代码

batch_size = 256

transformer = gdata.vision.transforems.ToTensor()

if sys.platform.startswith('win'):

num_workers = 0

else:

num_workers = 4

train_iter = gdata.DataLoader(mnist_train.transform_first(transformer),batch_size,shuffle=False)

test_iter = gdata.DataLoader(mnist_test.transform_first(transformer),batch_size,shuffle=False)gdata.vision.transforms.ToTensor()是MXNet Gluon提供的一个图像转换函数,用于将PIL格式的图像转换成MXNet支持的张量格式,并对像素值进行标准化。

具体来说,该函数将PIL格式的图像转换成(C, H, W)的张量格式,并对像素值进行标准化。其中,C表示通道数,H和W表示高度和宽度。默认情况下,C=3,即表示RGB三个通道。

图像的转换过程包括以下步骤:

将PIL图像转换成MXNet NDArray格式的图像;

对图像进行通道重排,即将HWC格式的图像转换成CHW格式;

对图像的数据类型进行转换,由uint8转换成float32;

对图像的像素值进行标准化,即将像素值除以255.0,使得像素值的范围被缩放到[0, 1]之间。

from mxnet.gluon.data.vision import transforms

from PIL import Image

# 创建ToTensor实例

to_tensor = transforms.ToTensor()

# 读取图像并转换成PIL格式

img_pil = Image.open('example.jpg')

# 调用ToTensor函数进行图像转换

img_tensor = to_tensor(img_pil)DataLoader数据加载器例子

import mxnet as mx

from mxnet.gluon.data import DataLoader, ArrayDataset

# Create an instance of the dataset

data = mx.nd.array([[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]])

labels = mx.nd.array([0, 1, 0, 1, 0])

dataset = ArrayDataset(data, labels)

# Create a data loader

batch_size = 2

shuffle = True

loader = DataLoader(dataset, batch_size=batch_size, shuffle=shuffle)

# Iterate over the data loader

for data, label in loader:

print(data, label)训练模型

num_epochs , lr = 5 ,0.1def train_ch3(net,train_iter,test_iter,loss,num_epchs,batch_size,params=None,lr=None,trainer=None):

print(epoch)

for epoch in range(num_epochs):

print(epoch)

train_l_sum,train_acc_sum,n=0.0,0.0,0

for X,y in train_iter:

with autograd.record():

y_hat = net(X)

l = loss(y_hat,y).sum()

l.backward()

if trainer is None:

d2l.sgd(params,lr,batch_size)

else:

trainer.step(batch_size)

y = y.astype('float32')

train_l_sum += l.asscalar()

train_acc_sum+=(y_hat.argmax(axis=1) == y).sum().asscalar()

n+=y.size()

test_acc = evaluate_accuracy(test_iter,net)

print('epoch %d loss %.4f train acc %.3f test acc %.3f' %(epoch+1, train_l_sum /n ,train_acc_sum/n, test_acc))

train_ch3(net,mnist_train,mnist_test,cross_entropy,num_epochs,batch_size,[W,b],lr)补充MXnet框架

Mxnet ND pick

import mxnet as mx

from mxnet import nd

# 创建一个2x3的数组

data = nd.array([[1, 2, 3], [4, 5, 6]])

# 提取第一行和第二行的元素

index = nd.array([0, 1])

result = nd.pick(data, index)

print(result) # 输出: [ 1. 5.][1. 5.]

<NDArray 2 @cpu(0)>

在MXNet库中,nd.pick是一个NDArray(n维数组)操作,用于从另一个NDArray中根据给定的索引提取元素。

Mxnet argmax参数

import mxnet as mx

# 创建一个2x3的张量

a = mx.nd.array([[1, 2, 3], [4, 5, 6]])

# 沿着轴1计算最大值索引

b = mx.nd.argmax(a, axis=1)

print(b) # 输出 [2. 2.][2. 2.]

<NDArray 2 @cpu(0)>

在上面的代码中,我们使用argmax函数计算了一个2x3的张量a沿轴1的最大值索引,并将结果存储在变量b中。在这个例子中,最大值索引为2的元素分别位于第一行的第三个位置和第二行的第三个位置。因此,输出结果为[2. 2.]。

argmax找出 沿着某个纬度的最大值

def accuracy(y_hat,y):

return (y_hat.argmax(axis=1) == y.astype('float32')).mean().asscalar()这段Python代码定义了一个名为accuracy的函数,它接受两个参数:预测结果y_hat和真实结果y。该函数返回预测结果与真实结果相匹配的样本所占的比例,即预测准确率。

具体来说,该函数首先使用argmax函数获取预测结果张量y_hat中每个样本最大值所在的索引,这里默认沿着axis=1轴计算,表示对每一行进行处理。然后将这个索引张量与真实结果y进行比较,得到一个元素类型为布尔型的张量,其每个元素对应一个样本的预测结果是否正确。接着,将这个张量中所有元素的平均值作为函数的返回值,这个平均值即为预测准确率。

需要注意的是,函数中出现了一个名为yhat的变量,这是代码中的一个笔误,应该是y_hat。此外,由于mean()函数返回的是一个MXNet NDArray对象,因此需要使用asscalar()函数将其转换为Python标量值。

这段Python代码定义了一个名为accuracy的函数,它接受两个参数:预测结果y_hat和真实结果y。该函数返回预测结果与真实结果相匹配的样本所占的比例,即预测准确率。

具体来说,该函数首先使用argmax函数获取预测结果张量y_hat中每个样本最大值所在的索引,这里默认沿着axis=1轴计算,表示对每一行进行处理。然后将这个索引张量与真实结果y进行比较,得到一个元素类型为布尔型的张量,其每个元素对应一个样本的预测结果是否正确。接着,将这个张量中所有元素的平均值作为函数的返回值,这个平均值即为预测准确率。

需要注意的是,函数中出现了一个名为yhat的变量,这是代码中的一个笔误,应该是y_hat。此外,由于mean()函数返回的是一个MXNet NDArray对象,因此需要使用asscalar()函数将其转换为Python标量值。

——————————————————————

import mxnet as mx

from mxnet.gluon.data import DataLoader, ArrayDataset

# 创建一个标准的数据集

data = mx.nd.array([[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]])

labels = mx.nd.array([0, 1, 0, 1, 0])

dataset = ArrayDataset(data, labels)

# 创建数据加载器

batch_size = 2

shuffle = True

loader = DataLoader(dataset, batch_size=batch_size, shuffle=shuffle)

# 数据加载器开始

for data, label in loader:

print(data, label)[[1. 2.]

[3. 4.]]

<NDArray 2x2 @cpu(0)>

[0. 1.]

<NDArray 2 @cpu(0)>

[[ 9. 10.]

[ 5. 6.]]

<NDArray 2x2 @cpu(0)>

[0. 0.]

<NDArray 2 @cpu(0)>

[[7. 8.]]

<NDArray 1x2 @cpu(0)>

[1.]

<NDArray 1 @cpu(0)>

shuffle是一个常用的数据处理操作,表示将数据集的样本随机打乱,使得样本之间的顺序变得随机。这个操作常常用于训练神经网络时,因为训练神经网络时需要使用大量的数据样本进行梯度下降优化,如果数据集中的样本顺序是有规律的(比如按照时间顺序或者按照类别顺序排列),那么在训练时会使得神经网络只学习到特定的样本顺序规律,而不能很好地泛化到新的数据集上。

944

944

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?