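Related posts

ExoPlayer Player Analysis (1): Entering the World of ExoPlayer

ExoPlayer Player Analysis (2): Writing an ExoPlayer Demo

ExoPlayer Player Analysis (3): Flow Analysis, the ExoPlayer Creation Flow from build to prepare

ExoPlayer Player Analysis (4): From renderer.render to MediaCodec

ExoPlayer Player Analysis (5): How ExoPlayer Operates AudioTrack

ExoPlayer Player Analysis (6): ExoPlayer's Synchronization Mechanism

ExoPlayer Player Analysis (7): How ExoPlayer Handles Audio Timestamps

ExoPlayer Player Extensions (1): An Introduction to DASH and HLS Streams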

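I. Preface:

In the analysis of ExoPlayer's synchronization mechanism, we saw that all synchronization relies on an accurate audio timestamp. Because ExoPlayer's handling of audio timestamps is quite involved, this post analyzes it on its own.

II. Code Analysis:

1. Where the audio and video timestamps are updated:

Timestamps are updated inside the main doSomeWork loop, which runs roughly once every 10 ms during playback: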

doSomeWork@ExoPlayer\library\core\src\main\java\com\google\android\exoplayer2\ExoPlayerImplInternal.java:

```java
private void doSomeWork() throws ExoPlaybackException, IOException {
  // ...
  /* Update the playback position (the audio timestamp) */
  updatePlaybackPositions();
  // ...
  /* Let each renderer process its data */
  for (int i = 0; i < renderers.length; i++) {
    // ...
    renderer.render(rendererPositionUs, rendererPositionElapsedRealtimeUs);
    // ...
  }
  // ...
}
```

updatePlaybackPositions() updates and processes the pts. Let's step into it:

```java
private void updatePlaybackPositions() throws ExoPlaybackException {
  MediaPeriodHolder playingPeriodHolder = queue.getPlayingPeriod();
  if (playingPeriodHolder == null) {
    return;
  }

  // Update the playback position.
  long discontinuityPositionUs =
      playingPeriodHolder.prepared
          ? playingPeriodHolder.mediaPeriod.readDiscontinuity()
          : C.TIME_UNSET;
  if (discontinuityPositionUs != C.TIME_UNSET) {
    resetRendererPosition(discontinuityPositionUs);
    // A MediaPeriod may report a discontinuity at the current playback position to ensure the
    // renderers are flushed. Only report the discontinuity externally if the position changed.
    if (discontinuityPositionUs != playbackInfo.positionUs) {
      playbackInfo =
          handlePositionDiscontinuity(
              playbackInfo.periodId,
              discontinuityPositionUs,
              playbackInfo.requestedContentPositionUs);
      playbackInfoUpdate.setPositionDiscontinuity(Player.DISCONTINUITY_REASON_INTERNAL);
    }
  } else {
    /* The pts is processed here */
    rendererPositionUs =
        mediaClock.syncAndGetPositionUs(
            /* isReadingAhead= */ playingPeriodHolder != queue.getReadingPeriod());
    long periodPositionUs = playingPeriodHolder.toPeriodTime(rendererPositionUs);
    maybeTriggerPendingMessages(playbackInfo.positionUs, periodPositionUs);
    playbackInfo.positionUs = periodPositionUs;
  }

  // Update the buffered position and total buffered duration.
  MediaPeriodHolder loadingPeriod = queue.getLoadingPeriod();
  playbackInfo.bufferedPositionUs = loadingPeriod.getBufferedPositionUs();
  playbackInfo.totalBufferedDurationUs = getTotalBufferedDurationUs();
}
```

Going one level deeper:

```java
public long syncAndGetPositionUs(boolean isReadingAhead) {
  syncClocks(isReadingAhead);
  return getPositionUs();
}
```

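Note the argument isReadingAhead, computed above as playingPeriodHolder != queue.getReadingPeriod(). It is true when the renderers are already reading the next period while the current one is still playing. DefaultMediaClock uses it, together with the clock renderer's state, to choose between two clocks: the renderer clock (the position reported by the audio renderer, ultimately derived from the AudioTrack) and its own StandaloneMediaClock, which advances with the system clock. The standalone clock is used when no enabled renderer provides a clock, when that renderer has ended, or when it is not ready while the player is reading ahead or has read its stream to the end. In normal playback with an audio track, therefore, the renderer clock is used. Let's step into getPositionUs():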

```java
@Override
public long getPositionUs() {
  return isUsingStandaloneClock
      ? standaloneClock.getPositionUs()
      : Assertions.checkNotNull(rendererClock).getPositionUs();
}
```
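The clock choice made in syncClocks() can be modelled in isolation. The following is a minimal sketch modelled on the condition in DefaultMediaClock.shouldUseStandaloneClock(); the class ClockSelectionSketch and its boolean parameters, which stand in for the clock renderer's state, are hypothetical and not ExoPlayer API.

```java
/** Hypothetical model of how DefaultMediaClock picks between its two clocks (simplified). */
final class ClockSelectionSketch {

  private ClockSelectionSketch() {}

  /**
   * Returns true if the standalone (system-clock-driven) clock should be used.
   *
   * @param hasClockRenderer whether an enabled renderer (normally audio) provides a clock
   * @param rendererEnded whether that renderer has ended
   * @param rendererReady whether that renderer is ready
   * @param isReadingAhead whether the reading period is ahead of the playing period
   * @param readStreamToEnd whether that renderer has read its stream to the end
   */
  static boolean shouldUseStandaloneClock(
      boolean hasClockRenderer,
      boolean rendererEnded,
      boolean rendererReady,
      boolean isReadingAhead,
      boolean readStreamToEnd) {
    return !hasClockRenderer
        || rendererEnded
        || (!rendererReady && (isReadingAhead || readStreamToEnd));
  }

  public static void main(String[] args) {
    // Normal playback with audio: the renderer (AudioTrack-based) clock is used.
    System.out.println(shouldUseStandaloneClock(true, false, true, false, false)); // false
    // Audio renderer not ready while the player already reads the next period.
    System.out.println(shouldUseStandaloneClock(true, false, false, true, false)); // true
  }
}
```

In the common case (audio renderer present, ready and not ended) the method returns false, which is the path followed in the rest of this post.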

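Since normal playback uses the renderer clock, we go straight to the rendererClock.getPositionUs() branch. Note that the rendererClock implementation here is MediaCodecAudioRenderer: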

```java
@Override
public long getPositionUs() {
  if (getState() == STATE_STARTED) {
    updateCurrentPosition();
  }
  return currentPositionUs;
}
```

Once the renderer is in STATE_STARTED, every call goes through updateCurrentPosition():

```java
private void updateCurrentPosition() {
  long newCurrentPositionUs = audioSink.getCurrentPositionUs(isEnded());
  if (newCurrentPositionUs != AudioSink.CURRENT_POSITION_NOT_SET) {
    currentPositionUs =
        allowPositionDiscontinuity
            ? newCurrentPositionUs
            : max(currentPositionUs, newCurrentPositionUs);
    allowPositionDiscontinuity = false;
  }
}
```

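What this function actually does is update the member variable currentPositionUs (newCurrentPositionUs is only a local): the new value is taken as-is when a discontinuity is allowed; otherwise the position is only allowed to move forward. Next, look at getCurrentPositionUs(); the audioSink implementation is DefaultAudioSink: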

```java
@Override
public long getCurrentPositionUs(boolean sourceEnded) {
  if (!isAudioTrackInitialized() || startMediaTimeUsNeedsInit) {
    return CURRENT_POSITION_NOT_SET;
  }
  /* Get the position from the AudioTrack */
  long positionUs = audioTrackPositionTracker.getCurrentPositionUs(sourceEnded);
  /* Take the smaller of the AudioTrack position and the duration of the frames written so far */
  positionUs = min(positionUs, configuration.framesToDurationUs(getWrittenFrames()));
  return applySkipping(applyMediaPositionParameters(positionUs));
}
```

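After several layers of wrapping, we have reached the place where the position is finally read from the AudioTrack. The rest of this post focuses on getCurrentPositionUs() in AudioTrackPositionTracker.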
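The clamp in DefaultAudioSink.getCurrentPositionUs() can be illustrated with a small sketch. It assumes framesToDurationUs() is a plain frames × 1,000,000 / sampleRate conversion and leaves out applyMediaPositionParameters() and applySkipping(); PositionClampSketch is a hypothetical name.

```java
/** Hypothetical sketch of the clamp in DefaultAudioSink.getCurrentPositionUs (simplified). */
final class PositionClampSketch {
  private static final long MICROS_PER_SECOND = 1_000_000L;

  private PositionClampSketch() {}

  /** Converts a frame count to microseconds at the given sample rate (rounds down). */
  static long framesToDurationUs(long frameCount, int sampleRate) {
    return frameCount * MICROS_PER_SECOND / sampleRate;
  }

  /**
   * The AudioTrack position cannot legitimately exceed the duration of the frames actually
   * written to it, so the smaller of the two values is reported.
   */
  static long clampToWrittenFrames(long trackPositionUs, long writtenFrames, int sampleRate) {
    return Math.min(trackPositionUs, framesToDurationUs(writtenFrames, sampleRate));
  }

  public static void main(String[] args) {
    // 48 000 frames written at 48 kHz = 1 s of audio; a reported 1.2 s is clamped to 1 s.
    System.out.println(clampToWrittenFrames(1_200_000L, 48_000L, 48_000)); // 1000000
  }
}
```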

2. A walkthrough of getCurrentPositionUs:

This function is fairly complex, so here it is in full with annotations:

```java
public long getCurrentPositionUs(boolean sourceEnded) {
  /* maybeSampleSyncParams() does three important things: */
  /* 1. Computes a smoothed jitter offset from AudioTrack.getPlaybackHeadPosition() */
  /* 2. Checks the timestamp */
  /* 3. Checks the latency */
  if (Assertions.checkNotNull(this.audioTrack).getPlayState() == PLAYSTATE_PLAYING) {
    maybeSampleSyncParams();
  }

  // If the device supports it, use the playback timestamp from AudioTrack.getTimestamp.
  // Otherwise, derive a smoothed position by sampling the track's frame position.
  long systemTimeUs = System.nanoTime() / 1000;
  long positionUs;
  AudioTimestampPoller audioTimestampPoller = Assertions.checkNotNull(this.audioTimestampPoller);
  boolean useGetTimestampMode = audioTimestampPoller.hasAdvancingTimestamp();
  /* if branch: timestamp mode (needs device support); else branch: AudioTrack.getPlaybackHeadPosition() */
  /* On the phones tested, the if branch is taken */
  if (useGetTimestampMode) {
    // Calculate the speed-adjusted position using the timestamp (which may be in the future).
    long timestampPositionFrames = audioTimestampPoller.getTimestampPositionFrames();
    long timestampPositionUs = framesToDurationUs(timestampPositionFrames);
    long elapsedSinceTimestampUs = systemTimeUs - audioTimestampPoller.getTimestampSystemTimeUs();
    elapsedSinceTimestampUs =
        Util.getMediaDurationForPlayoutDuration(elapsedSinceTimestampUs, audioTrackPlaybackSpeed);
    positionUs = timestampPositionUs + elapsedSinceTimestampUs;
  } else {
    if (playheadOffsetCount == 0) {
      // The AudioTrack has started, but we don't have any samples to compute a smoothed position.
      positionUs = getPlaybackHeadPositionUs();
    } else {
      // getPlaybackHeadPositionUs() only has a granularity of ~20 ms, so we base the position off
      // the system clock (and a smoothed offset between it and the playhead position) so as to
      // prevent jitter in the reported positions.
      positionUs = systemTimeUs + smoothedPlayheadOffsetUs;
    }
    if (!sourceEnded) {
      /* Subtract the latency from the position */
      positionUs = max(0, positionUs - latencyUs);
    }
  }

  /* If the sampling mode has switched, remember where the previous mode left off */
  if (lastSampleUsedGetTimestampMode != useGetTimestampMode) {
    // We've switched sampling mode.
    previousModeSystemTimeUs = lastSystemTimeUs;
    previousModePositionUs = lastPositionUs;
  }
  long elapsedSincePreviousModeUs = systemTimeUs - previousModeSystemTimeUs;
  if (elapsedSincePreviousModeUs < MODE_SWITCH_SMOOTHING_DURATION_US) {
    // Use a ramp to smooth between the old mode and the new one to avoid introducing a sudden
    // jump if the two modes disagree.
    long previousModeProjectedPositionUs =
        previousModePositionUs
            + Util.getMediaDurationForPlayoutDuration(
                elapsedSincePreviousModeUs, audioTrackPlaybackSpeed);
    // A ramp consisting of 1000 points distributed over MODE_SWITCH_SMOOTHING_DURATION_US.
    long rampPoint = (elapsedSincePreviousModeUs * 1000) / MODE_SWITCH_SMOOTHING_DURATION_US;
    positionUs *= rampPoint;
    positionUs += (1000 - rampPoint) * previousModeProjectedPositionUs;
    positionUs /= 1000;
  }

  if (!notifiedPositionIncreasing && positionUs > lastPositionUs) {
    notifiedPositionIncreasing = true;
    long mediaDurationSinceLastPositionUs = C.usToMs(positionUs - lastPositionUs);
    long playoutDurationSinceLastPositionUs =
        Util.getPlayoutDurationForMediaDuration(
            mediaDurationSinceLastPositionUs, audioTrackPlaybackSpeed);
    long playoutStartSystemTimeMs =
        System.currentTimeMillis() - C.usToMs(playoutDurationSinceLastPositionUs);
    listener.onPositionAdvancing(playoutStartSystemTimeMs);
  }

  lastSystemTimeUs = systemTimeUs;
  lastPositionUs = positionUs;
  lastSampleUsedGetTimestampMode = useGetTimestampMode;
  return positionUs;
}
```
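The mode-switch block near the end of getCurrentPositionUs() is a linear crossfade between two estimates. The sketch below isolates it; ModeSwitchRampSketch is a hypothetical name, the caller is assumed to pass in the old mode's already projected position, and the 1 s smoothing duration used in main is an illustrative value, not a claim about MODE_SWITCH_SMOOTHING_DURATION_US.

```java
/** Hypothetical standalone version of the mode-switch ramp in getCurrentPositionUs. */
final class ModeSwitchRampSketch {

  private ModeSwitchRampSketch() {}

  /**
   * Blends the new mode's position with the old mode's projected position. The weight of the
   * new mode grows linearly from 0 to 1000 (per mille) over smoothingDurationUs.
   */
  static long blend(
      long newModePositionUs,
      long previousModeProjectedPositionUs,
      long elapsedSinceSwitchUs,
      long smoothingDurationUs) {
    if (elapsedSinceSwitchUs >= smoothingDurationUs) {
      return newModePositionUs; // Ramp finished: trust the new mode completely.
    }
    long rampPoint = (elapsedSinceSwitchUs * 1000) / smoothingDurationUs;
    return (newModePositionUs * rampPoint
            + (1000 - rampPoint) * previousModeProjectedPositionUs)
        / 1000;
  }

  public static void main(String[] args) {
    // Halfway through a 1 s ramp, two modes that disagree by 40 ms are averaged.
    System.out.println(blend(1_040_000L, 1_000_000L, 500_000L, 1_000_000L)); // 1020000
  }
}
```

Right after the switch the old estimate dominates, so a disagreement between the two modes shows up as a gradual drift rather than a jump in the reported position.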

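To fully understand getCurrentPositionUs(), some groundwork is needed. AudioTrack provides two APIs for querying the audio playback position:

```java
/* 1 */
public boolean getTimestamp(AudioTimestamp timestamp);

/* 2 */
public int getPlaybackHeadPosition();
```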

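The former must be supported by the device's lower layers and can be thought of as the precise way to get the position. Its value is not refreshed often, though, so it should not be called frequently; judging from the official documentation, calling it once every 10 s to 60 s is recommended. The AudioTimestamp parameter has two member fields:

```java
public long framePosition; /* position, in frames, of a frame that has been (or is committed to be) presented */
public long nanoTime;      /* time associated with that frame, in the System.nanoTime() time base */
```

The latter can be called frequently; it returns the playback head position in frames since playback started, i.e. how many frames the AudioTrack has passed downstream to the HAL. With these two APIs in mind, it is clear there are two ways to get the audio position from the AudioTrack, and getCurrentPositionUs handles both. We first walk through the whole function and then examine the helper functions in detail: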

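```java
/* maybeSampleSyncParams() does three important things: */
/* 1. Computes a smoothed jitter offset from AudioTrack.getPlaybackHeadPosition() */
/* 2. Checks the timestamp */
/* 3. Checks the latency */
if (Assertions.checkNotNull(this.audioTrack).getPlayState() == PLAYSTATE_PLAYING) {
  maybeSampleSyncParams();
}
```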

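Start with the annotation about maybeSampleSyncParams() at the top: it does three important things. First, it computes a smoothed jitter offset from AudioTrack.getPlaybackHeadPosition(). That value is polled frequently and is coarse, so occasional large jumps would disturb the later synchronization; ExoPlayer therefore smooths it and keeps the result in the variable smoothedPlayheadOffsetUs for later use. Second, it checks the timestamp, i.e. the value obtained from getTimestamp(). Third, it checks the latency. What is latency? It is the delay between audio data leaving the AudioTrack, being written to the hardware and finally being heard, and it is especially noticeable with Bluetooth output. Note that this value has to be provided by the platform (ultimately by the chip vendor's implementation); the application can query it directly, whereas computing it at the application level would be very difficult. With the groundwork in maybeSampleSyncParams done, let's see what the rest of the function does: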

```java
/* if branch: timestamp mode (needs device support); else branch: AudioTrack.getPlaybackHeadPosition() */
/* On the phones tested, the if branch is taken */
if (useGetTimestampMode) {
  // Calculate the speed-adjusted position using the timestamp (which may be in the future).
  long timestampPositionFrames = audioTimestampPoller.getTimestampPositionFrames();
  long timestampPositionUs = framesToDurationUs(timestampPositionFrames);
  long elapsedSinceTimestampUs = systemTimeUs - audioTimestampPoller.getTimestampSystemTimeUs();
  elapsedSinceTimestampUs =
      Util.getMediaDurationForPlayoutDuration(elapsedSinceTimestampUs, audioTrackPlaybackSpeed);
  positionUs = timestampPositionUs + elapsedSinceTimestampUs;
} else {
  if (playheadOffsetCount == 0) {
    // The AudioTrack has started, but we don't have any samples to compute a smoothed position.
    positionUs = getPlaybackHeadPositionUs();
  } else {
    // getPlaybackHeadPositionUs() only has a granularity of ~20 ms, so we base the position off
    // the system clock (and a smoothed offset between it and the playhead position) so as to
    // prevent jitter in the reported positions.
    positionUs = systemTimeUs + smoothedPlayheadOffsetUs;
  }
  if (!sourceEnded) {
    /* Subtract the latency from the position */
    positionUs = max(0, positionUs - latencyUs);
  }
}
```

The if/else split follows the two AudioTrack APIs: if the platform supports getTimestamp(), the if branch is taken; otherwise the value from getPlaybackHeadPosition() is used. One conclusion up front: once the timestamp is advancing, ExoPlayer calls getTimestamp() only once every 10 s to fetch the precise position from the lower layers. Here is the annotated if branch:

```java
/* Get the position via getTimestamp() */
if (useGetTimestampMode) {
  // Calculate the speed-adjusted position using the timestamp (which may be in the future).
  /* Frame position returned by the most recent getTimestamp() call */
  long timestampPositionFrames = audioTimestampPoller.getTimestampPositionFrames();
  /* Convert the frame count to a duration */
  long timestampPositionUs = framesToDurationUs(timestampPositionFrames);
  /* Time elapsed since the system time at which that frame position was reported */
  long elapsedSinceTimestampUs = systemTimeUs - audioTimestampPoller.getTimestampSystemTimeUs();
  /* Adjust the elapsed time for the playback speed */
  elapsedSinceTimestampUs =
      Util.getMediaDurationForPlayoutDuration(elapsedSinceTimestampUs, audioTrackPlaybackSpeed);
  /* The latest audio position */
  positionUs = timestampPositionUs + elapsedSinceTimestampUs;
}
```

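In other words: every 10 s a new reference timestamp is taken, and the speed-adjusted time elapsed since then is added to it. The result is the audio pts that the video is synchronized against.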
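A sketch of this extrapolation, assuming the speed adjustment behaves like Util.getMediaDurationForPlayoutDuration() (identity at 1x, otherwise a rounded multiplication); TimestampExtrapolationSketch and its parameter names are hypothetical.

```java
/** Hypothetical sketch of the getTimestamp()-mode position calculation (simplified). */
final class TimestampExtrapolationSketch {
  private static final long MICROS_PER_SECOND = 1_000_000L;

  private TimestampExtrapolationSketch() {}

  /** Scales wall-clock (playout) time into media time for the given speed. */
  static long mediaDurationForPlayoutDuration(long playoutDurationUs, float speed) {
    return speed == 1f ? playoutDurationUs : Math.round((double) playoutDurationUs * speed);
  }

  /**
   * @param timestampFramePosition frame position from the last accepted AudioTimestamp
   * @param timestampSystemTimeUs that timestamp's nanoTime / 1000
   * @param nowUs current System.nanoTime() / 1000
   */
  static long positionUs(
      long timestampFramePosition,
      long timestampSystemTimeUs,
      long nowUs,
      int sampleRate,
      float speed) {
    // Reference point: the frame position converted to microseconds.
    long baseUs = timestampFramePosition * MICROS_PER_SECOND / sampleRate;
    // Add the speed-adjusted time that has passed since that frame was reported.
    long elapsedUs = mediaDurationForPlayoutDuration(nowUs - timestampSystemTimeUs, speed);
    return baseUs + elapsedUs;
  }

  public static void main(String[] args) {
    // Frame 480 000 at 48 kHz (10 s) observed at t = 5 s; now t = 5.2 s, playing at 1.5x.
    System.out.println(positionUs(480_000L, 5_000_000L, 5_200_000L, 48_000, 1.5f)); // 10300000
  }
}
```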

Now the else branch:

```java
else {
  if (playheadOffsetCount == 0) {
    // The AudioTrack has started, but we don't have any samples to compute a smoothed position.
    positionUs = getPlaybackHeadPositionUs();
  } else {
    // getPlaybackHeadPositionUs() only has a granularity of ~20 ms, so we base the position off
    // the system clock (and a smoothed offset between it and the playhead position) so as to
    // prevent jitter in the reported positions.
    positionUs = systemTimeUs + smoothedPlayheadOffsetUs;
  }
  if (!sourceEnded) {
    /* Subtract the latency from the position */
    positionUs = max(0, positionUs - latencyUs);
  }
}
```

The else branch is easy to follow: take the value from AudioTrack.getPlaybackHeadPosition(), smooth it, and subtract the latency.
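The else branch can be written as a pure function for experimentation. This is a minimal sketch with hypothetical names; all inputs are assumed to be in microseconds, as in the original code.

```java
/** Hypothetical sketch of the getPlaybackHeadPosition()-based branch (not ExoPlayer API). */
final class PlayheadModeSketch {

  private PlayheadModeSketch() {}

  static long positionUs(
      int playheadOffsetCount,
      long rawPlayheadPositionUs,
      long systemTimeUs,
      long smoothedPlayheadOffsetUs,
      long latencyUs,
      boolean sourceEnded) {
    long positionUs;
    if (playheadOffsetCount == 0) {
      // No smoothing samples yet: use the raw, coarse playhead position.
      positionUs = rawPlayheadPositionUs;
    } else {
      // Otherwise: system clock plus the averaged (playhead - system time) offset.
      positionUs = systemTimeUs + smoothedPlayheadOffsetUs;
    }
    if (!sourceEnded) {
      // Audio still travelling through the mixer and driver has not been heard yet.
      positionUs = Math.max(0, positionUs - latencyUs);
    }
    return positionUs;
  }

  public static void main(String[] args) {
    // 3 samples collected, offset -9 s, system time 10 s, 50 ms latency.
    System.out.println(positionUs(3, 0L, 10_000_000L, -9_000_000L, 50_000L, false)); // 950000
  }
}
```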

III. Key Functions:

Analysis of maybeSampleSyncParams:

Let's see how this function does its preparatory work:

```java
private void maybeSampleSyncParams() {
  /* 1. Get the playback position from the AudioTrack */
  long playbackPositionUs = getPlaybackHeadPositionUs();
  if (playbackPositionUs == 0) {
    // The AudioTrack hasn't output anything yet.
    return;
  }
  long systemTimeUs = System.nanoTime() / 1000;
  /* 2. Update the smoothed offset at most once every 30 ms */
  if (systemTimeUs - lastPlayheadSampleTimeUs >= MIN_PLAYHEAD_OFFSET_SAMPLE_INTERVAL_US) {
    // Take a new sample and update the smoothed offset between the system clock and the playhead.
    playheadOffsets[nextPlayheadOffsetIndex] = playbackPositionUs - systemTimeUs;
    nextPlayheadOffsetIndex = (nextPlayheadOffsetIndex + 1) % MAX_PLAYHEAD_OFFSET_COUNT;
    if (playheadOffsetCount < MAX_PLAYHEAD_OFFSET_COUNT) {
      playheadOffsetCount++;
    }
    lastPlayheadSampleTimeUs = systemTimeUs;
    smoothedPlayheadOffsetUs = 0;
    for (int i = 0; i < playheadOffsetCount; i++) {
      smoothedPlayheadOffsetUs += playheadOffsets[i] / playheadOffsetCount;
    }
  }

  if (needsPassthroughWorkarounds) {
    // Don't sample the timestamp and latency if this is an AC-3 passthrough AudioTrack on
    // platform API versions 21/22, as incorrect values are returned. See [Internal: b/21145353].
    return;
  }

  /* 3. Check the AudioTrack timestamp against the system time and the getPlaybackHeadPosition() position */
  maybePollAndCheckTimestamp(systemTimeUs, playbackPositionUs);
  /* 4. Check the latency (if the platform implements the method) */
  maybeUpdateLatency(systemTimeUs);
}
```

Note 1:

```java
private long getPlaybackHeadPositionUs() {
  return framesToDurationUs(getPlaybackHeadPosition());
}
```

Following getPlaybackHeadPosition():

```java
private long getPlaybackHeadPosition() {
  // ...
  int state = audioTrack.getPlayState();
  if (state == PLAYSTATE_STOPPED) {
    // The audio track hasn't been started.
    return 0;
  }
  // ...
  /* Call AudioTrack.getPlaybackHeadPosition(); the result is a frame count */
  long rawPlaybackHeadPosition = 0xFFFFFFFFL & audioTrack.getPlaybackHeadPosition();
  // ...
  if (lastRawPlaybackHeadPosition > rawPlaybackHeadPosition) {
    // The value must have wrapped around.
    rawPlaybackHeadWrapCount++;
  }
  lastRawPlaybackHeadPosition = rawPlaybackHeadPosition;
  return rawPlaybackHeadPosition + (rawPlaybackHeadWrapCount << 32);
}
```

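Looking only at the key parts: it first checks the play state, then calls AudioTrack.getPlaybackHeadPosition() to get the position in frames (the int is reinterpreted as an unsigned 32-bit value), and finally checks whether that counter has wrapped around, extending it to a 64-bit frame count before returning it.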
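The wrap-around handling can be tried out on its own. The sketch below assumes only what the excerpt shows (reinterpreting the int as unsigned and counting a wrap whenever the value goes backwards) and leaves out the elided parts of getPlaybackHeadPosition(); PlayheadWrapSketch is a hypothetical name.

```java
/** Hypothetical sketch of extending AudioTrack's 32-bit frame counter to 64 bits. */
final class PlayheadWrapSketch {
  private long lastRawPosition;
  private long wrapCount;

  /** @param rawPlaybackHeadPosition the int returned by AudioTrack.getPlaybackHeadPosition() */
  long update(int rawPlaybackHeadPosition) {
    // Reinterpret the signed int as an unsigned 32-bit value.
    long raw = 0xFFFFFFFFL & rawPlaybackHeadPosition;
    if (lastRawPosition > raw) {
      // The counter went backwards, so it must have wrapped past 2^32 - 1.
      wrapCount++;
    }
    lastRawPosition = raw;
    return raw + (wrapCount << 32);
  }

  public static void main(String[] args) {
    PlayheadWrapSketch tracker = new PlayheadWrapSketch();
    tracker.update(0xFFFFFFF0); // 4294967280 frames, just before the wrap
    System.out.println(tracker.update(0x10)); // 4294967312
  }
}
```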

Back in maybeSampleSyncParams(), let's see how ExoPlayer computes the smoothed value:

Note 2:

```java
/* MIN_PLAYHEAD_OFFSET_SAMPLE_INTERVAL_US is 30 ms */
if (systemTimeUs - lastPlayheadSampleTimeUs >= MIN_PLAYHEAD_OFFSET_SAMPLE_INTERVAL_US) {
  // Take a new sample and update the smoothed offset between the system clock and the playhead.
  playheadOffsets[nextPlayheadOffsetIndex] = playbackPositionUs - systemTimeUs;
  nextPlayheadOffsetIndex = (nextPlayheadOffsetIndex + 1) % MAX_PLAYHEAD_OFFSET_COUNT;
  if (playheadOffsetCount < MAX_PLAYHEAD_OFFSET_COUNT) {
    playheadOffsetCount++;
  }
  lastPlayheadSampleTimeUs = systemTimeUs;
  smoothedPlayheadOffsetUs = 0;
  for (int i = 0; i < playheadOffsetCount; i++) {
    smoothedPlayheadOffsetUs += playheadOffsets[i] / playheadOffsetCount;
  }
}
```

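The idea is as follows. At most once every 30 ms the smoothed offset is updated: the position from getPlaybackHeadPosition() minus the current system time is taken as an offset sample and stored in the ring buffer playheadOffsets[]. The last (up to) 10 samples are then averaged, by dividing each one by the sample count and summing the results, which gives the new smoothed offset. As for why sampling happens every 30 ms, ExoPlayer's comment suggests it is because getPlaybackHeadPositionUs() only has a granularity of about 20 ms:

```java
else {
  if (playheadOffsetCount == 0) {
    // ...
  } else {
    // getPlaybackHeadPositionUs() only has a granularity of ~20 ms, so we base the position off
    // the system clock (and a smoothed offset between it and the playhead position) so as to
    // prevent jitter in the reported positions.
    positionUs = systemTimeUs + smoothedPlayheadOffsetUs;
  }
  // ...
}
```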

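The English comment states that getPlaybackHeadPositionUs() has a granularity of about 20 ms. smoothedPlayheadOffsetUs is the smoothed offset produced by the averaging above; adding it to the current system time gives the current audio pts.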
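A self-contained sketch of this moving average, using the two constants as described in this post (30 ms sampling interval, 10 samples). PlayheadSmootherSketch and its methods are hypothetical names, and reset handling is omitted.

```java
/** Hypothetical sketch of the smoothed playhead offset kept by maybeSampleSyncParams. */
final class PlayheadSmootherSketch {
  private static final int MAX_PLAYHEAD_OFFSET_COUNT = 10;
  private static final long MIN_PLAYHEAD_OFFSET_SAMPLE_INTERVAL_US = 30_000;

  private final long[] playheadOffsets = new long[MAX_PLAYHEAD_OFFSET_COUNT];
  private int nextPlayheadOffsetIndex;
  private int playheadOffsetCount;
  private long lastPlayheadSampleTimeUs;
  private long smoothedPlayheadOffsetUs;

  /** Records a (playhead - system time) sample, at most once every 30 ms. */
  void maybeSample(long playbackPositionUs, long systemTimeUs) {
    if (systemTimeUs - lastPlayheadSampleTimeUs < MIN_PLAYHEAD_OFFSET_SAMPLE_INTERVAL_US) {
      return;
    }
    // Ring buffer: the oldest sample is overwritten once 10 samples are stored.
    playheadOffsets[nextPlayheadOffsetIndex] = playbackPositionUs - systemTimeUs;
    nextPlayheadOffsetIndex = (nextPlayheadOffsetIndex + 1) % MAX_PLAYHEAD_OFFSET_COUNT;
    if (playheadOffsetCount < MAX_PLAYHEAD_OFFSET_COUNT) {
      playheadOffsetCount++;
    }
    lastPlayheadSampleTimeUs = systemTimeUs;
    // Mean of the stored offsets (each term divided first, as in the excerpt).
    smoothedPlayheadOffsetUs = 0;
    for (int i = 0; i < playheadOffsetCount; i++) {
      smoothedPlayheadOffsetUs += playheadOffsets[i] / playheadOffsetCount;
    }
  }

  /** Smoothed position estimate: system time plus the averaged offset. */
  long positionUs(long systemTimeUs) {
    return systemTimeUs + smoothedPlayheadOffsetUs;
  }

  public static void main(String[] args) {
    PlayheadSmootherSketch smoother = new PlayheadSmootherSketch();
    smoother.maybeSample(0L, 1_000_000L); // offset -1_000_000
    smoother.maybeSample(50_000L, 1_030_000L); // offset -980_000
    smoother.maybeSample(999_999L, 1_040_000L); // ignored: only 10 ms after the previous sample
    System.out.println(smoother.positionUs(1_030_000L)); // 40000
  }
}
```

Because the average is taken over offsets rather than raw positions, the estimate keeps advancing smoothly with the system clock between the coarse playhead updates.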

Back in maybeSampleSyncParams, continuing on:

Note 3:

```java
/* Check the AudioTrack timestamp against the system time and the getPlaybackHeadPosition() position */
maybePollAndCheckTimestamp(systemTimeUs, playbackPositionUs);
```

Let's see how this function checks the timestamp:

```java
private void maybePollAndCheckTimestamp(long systemTimeUs, long playbackPositionUs) {
  /* Check whether the platform has produced a new timestamp */
  AudioTimestampPoller audioTimestampPoller = Assertions.checkNotNull(this.audioTimestampPoller);
  if (!audioTimestampPoller.maybePollTimestamp(systemTimeUs)) {
    return;
  }

  // Check the timestamp and accept/reject it.
  long audioTimestampSystemTimeUs = audioTimestampPoller.getTimestampSystemTimeUs();
  long audioTimestampPositionFrames = audioTimestampPoller.getTimestampPositionFrames();
  /* Is the timestamp's system time more than 5 s away from the current system time? */
  if (Math.abs(audioTimestampSystemTimeUs - systemTimeUs) > MAX_AUDIO_TIMESTAMP_OFFSET_US) {
    listener.onSystemTimeUsMismatch(
        audioTimestampPositionFrames,
        audioTimestampSystemTimeUs,
        systemTimeUs,
        playbackPositionUs);
    audioTimestampPoller.rejectTimestamp();
  } else if (Math.abs(framesToDurationUs(audioTimestampPositionFrames) - playbackPositionUs)
      > MAX_AUDIO_TIMESTAMP_OFFSET_US) {
    /* The getTimestamp() and getPlaybackHeadPosition() positions differ by more than 5 s */
    listener.onPositionFramesMismatch(
        audioTimestampPositionFrames,
        audioTimestampSystemTimeUs,
        systemTimeUs,
        playbackPositionUs);
    audioTimestampPoller.rejectTimestamp();
  } else {
    audioTimestampPoller.acceptTimestamp();
  }
}
```

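The function is long but easy to follow. It checks whether the lower layer has produced a new timestamp; if so, it compares the system time stored in that timestamp with the application's current system time and rejects the timestamp if they differ by more than 5 s. In the same way, it compares the positions reported by AudioTrack.getTimestamp() and AudioTrack.getPlaybackHeadPosition(). The key question is how the lower layer decides whether the timestamp has been updated, and where the 10 s polling interval in timestamp mode comes from. Let's look at audioTimestampPoller.maybePollTimestamp():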

```java
@TargetApi(19) // audioTimestamp will be null if Util.SDK_INT < 19.
public boolean maybePollTimestamp(long systemTimeUs) {
  /* Poll at most once per sampleIntervalUs (10 s once the timestamp is advancing) */
  if (audioTimestamp == null || (systemTimeUs - lastTimestampSampleTimeUs) < sampleIntervalUs) {
    return false;
  }
  lastTimestampSampleTimeUs = systemTimeUs;
  /* Fetch the latest timestamp via AudioTrack.getTimestamp() */
  boolean updatedTimestamp = audioTimestamp.maybeUpdateTimestamp();
  switch (state) {
    case STATE_INITIALIZING:
      if (updatedTimestamp) {
        if (audioTimestamp.getTimestampSystemTimeUs() >= initializeSystemTimeUs) {
          // We have an initial timestamp, but don't know if it's advancing yet.
          initialTimestampPositionFrames = audioTimestamp.getTimestampPositionFrames();
          updateState(STATE_TIMESTAMP);
        } else {
          // Drop the timestamp, as it was sampled before the last reset.
          updatedTimestamp = false;
        }
      } else if (systemTimeUs - initializeSystemTimeUs > INITIALIZING_DURATION_US) {
        // We haven't received a timestamp for a while, so they probably aren't available for the
        // current audio route. Poll infrequently in case the route changes later.
        // TODO: Ideally we should listen for audio route changes in order to detect when a
        // timestamp becomes available again.
        updateState(STATE_NO_TIMESTAMP);
      }
      break;
    case STATE_TIMESTAMP:
      if (updatedTimestamp) {
        long timestampPositionFrames = audioTimestamp.getTimestampPositionFrames();
        if (timestampPositionFrames > initialTimestampPositionFrames) {
          updateState(STATE_TIMESTAMP_ADVANCING);
        }
      } else {
        reset();
      }
      break;
    case STATE_TIMESTAMP_ADVANCING:
      if (!updatedTimestamp) {
        // The audio route may have changed, so reset polling.
        reset();
      }
      break;
    case STATE_NO_TIMESTAMP:
      if (updatedTimestamp) {
        // The audio route may have changed, so reset polling.
        reset();
      }
      break;
    case STATE_ERROR:
      // Do nothing. If the caller accepts any new timestamp we'll reset polling.
      break;
    default:
      throw new IllegalStateException();
  }
  return updatedTimestamp;
}
```

Note that this function requires API level 19 (Android 4.4) or higher. The if statement at the top shows that whether audioTimestamp.maybeUpdateTimestamp() is called at all depends on the member variable sampleIntervalUs, so let's see where sampleIntervalUs changes. After ExoPlayer has initialized, the member variable state is STATE_INITIALIZING; here is that branch of the switch:

```java
case STATE_INITIALIZING:
  if (updatedTimestamp) {
    if (audioTimestamp.getTimestampSystemTimeUs() >= initializeSystemTimeUs) {
      // We have an initial timestamp, but don't know if it's advancing yet.
      initialTimestampPositionFrames = audioTimestamp.getTimestampPositionFrames();
      /* First state update */
      updateState(STATE_TIMESTAMP);
    } else {
      // Drop the timestamp, as it was sampled before the last reset.
      updatedTimestamp = false;
    }
  // ...
  break;
```

Here the state is updated to STATE_TIMESTAMP. Let's look at updateState():

```java
private void updateState(@State int state) {
  this.state = state;
  switch (state) {
    case STATE_INITIALIZING:
      // Force polling a timestamp immediately, and poll quickly.
      lastTimestampSampleTimeUs = 0;
      initialTimestampPositionFrames = C.POSITION_UNSET;
      initializeSystemTimeUs = System.nanoTime() / 1000;
      sampleIntervalUs = FAST_POLL_INTERVAL_US; /* 10 ms */
      break;
    case STATE_TIMESTAMP:
      sampleIntervalUs = FAST_POLL_INTERVAL_US; /* 10 ms */
      break;
    case STATE_TIMESTAMP_ADVANCING:
    case STATE_NO_TIMESTAMP:
      sampleIntervalUs = SLOW_POLL_INTERVAL_US; /* 10 s */
      break;
    case STATE_ERROR:
      sampleIntervalUs = ERROR_POLL_INTERVAL_US; /* 500 ms */
      break;
    default:
      throw new IllegalStateException();
  }
}
```

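In STATE_TIMESTAMP the poll interval is still 10 ms. After the state update, back in maybePollTimestamp(), if the next poll finds an updated timestamp whose frame position has advanced beyond the initial one, the state becomes STATE_TIMESTAMP_ADVANCING, and sampleIntervalUs therefore becomes 10 s.

To summarize the state transitions:

STATE_INITIALIZING (10 ms) -> STATE_TIMESTAMP (10 ms) -> STATE_TIMESTAMP_ADVANCING (10 s)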
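The transitions and poll intervals above can be captured in a small model. This is a simplified sketch with hypothetical names: it uses the interval values quoted in the updateState() comments and ignores both the INITIALIZING timeout that leads to STATE_NO_TIMESTAMP and the check that drops timestamps sampled before the last reset.

```java
/** Hypothetical, simplified model of AudioTimestampPoller's states and poll intervals. */
final class TimestampPollerStatesSketch {
  enum State { INITIALIZING, TIMESTAMP, TIMESTAMP_ADVANCING, NO_TIMESTAMP, ERROR }

  private TimestampPollerStatesSketch() {}

  /** Poll interval per state, in microseconds. */
  static long sampleIntervalUs(State state) {
    switch (state) {
      case INITIALIZING:
      case TIMESTAMP:
        return 10_000; // 10 ms
      case TIMESTAMP_ADVANCING:
      case NO_TIMESTAMP:
        return 10_000_000; // 10 s
      case ERROR:
        return 500_000; // 500 ms
      default:
        throw new IllegalStateException();
    }
  }

  /** Next state after one poll; reset() is modelled as a return to INITIALIZING. */
  static State next(State state, boolean updatedTimestamp, boolean positionAdvanced) {
    switch (state) {
      case INITIALIZING:
        return updatedTimestamp ? State.TIMESTAMP : State.INITIALIZING;
      case TIMESTAMP:
        if (!updatedTimestamp) {
          return State.INITIALIZING;
        }
        return positionAdvanced ? State.TIMESTAMP_ADVANCING : State.TIMESTAMP;
      case TIMESTAMP_ADVANCING:
        return updatedTimestamp ? State.TIMESTAMP_ADVANCING : State.INITIALIZING;
      case NO_TIMESTAMP:
        return updatedTimestamp ? State.INITIALIZING : State.NO_TIMESTAMP;
      case ERROR:
        return State.ERROR;
      default:
        throw new IllegalStateException();
    }
  }

  public static void main(String[] args) {
    State state = State.INITIALIZING;
    state = next(state, true, false); // first timestamp received
    state = next(state, true, true); // frame position has advanced
    System.out.println(state + " " + sampleIntervalUs(state)); // TIMESTAMP_ADVANCING 10000000
  }
}
```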

With the state transitions understood, let's see how audioTimestamp.maybeUpdateTimestamp() updates the timestamp:

```java
public boolean maybeUpdateTimestamp() {
  /* Query the Android API */
  boolean updated = audioTrack.getTimestamp(audioTimestamp);
  if (updated) {
    long rawPositionFrames = audioTimestamp.framePosition;
    if (lastTimestampRawPositionFrames > rawPositionFrames) {
      // The value must have wrapped around.
      rawTimestampFramePositionWrapCount++;
    }
    lastTimestampRawPositionFrames = rawPositionFrames;
    /* Update the timestamp */
    lastTimestampPositionFrames =
        rawPositionFrames + (rawTimestampFramePositionWrapCount << 32);
  }
  return updated;
}
```

The logic is simple: query the platform API; if it returns true, a new value is available, and the application reads it (handling wrap-around) and stores it.

Note 4:

Next, the latency check in maybeUpdateLatency():

```java
private void maybeUpdateLatency(long systemTimeUs) {
  /* If the platform exposes getLatency (getLatencyMethod != null),
     sample the latency at most once per MIN_LATENCY_SAMPLE_INTERVAL_US */
  if (isOutputPcm
      && getLatencyMethod != null
      && systemTimeUs - lastLatencySampleTimeUs >= MIN_LATENCY_SAMPLE_INTERVAL_US) {
    try {
      // Compute the audio track latency, excluding the latency due to the buffer (leaving
      // latency due to the mixer and audio hardware driver).
      latencyUs =
          castNonNull((Integer) getLatencyMethod.invoke(Assertions.checkNotNull(audioTrack)))
                  * 1000L
              - bufferSizeUs;
      // Check that the latency is non-negative.
      latencyUs = max(latencyUs, 0);
      // Check that the latency isn't too large.
      if (latencyUs > MAX_LATENCY_US) {
        listener.onInvalidLatency(latencyUs);
        latencyUs = 0;
      }
    } catch (Exception e) {
      // The method existed, but doesn't work. Don't try again.
      getLatencyMethod = null;
    }
    lastLatencySampleTimeUs = systemTimeUs;
  }
}
```

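This function is also simple. It requires the platform to provide the hidden AudioTrack.getLatency() method (held in getLatencyMethod); otherwise latency is not taken into account at all. After the latency is obtained, bufferSizeUs is subtracted from it. The source comment is terse about why; a likely reason is that getLatency() reports the total latency including the AudioTrack's own buffer, whereas the playback head position only advances once frames leave that buffer, so only the mixer and driver latency remains to be subtracted from the head position.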
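The post-processing of the latency value can be sketched as a pure function. It assumes getLatency() returns milliseconds (as the `* 1000L` in the excerpt implies); LatencySketch is a hypothetical name, and the 5 s cap passed in the example is an illustrative value for MAX_LATENCY_US.

```java
/** Hypothetical sketch of the latency post-processing in maybeUpdateLatency. */
final class LatencySketch {

  private LatencySketch() {}

  /**
   * @param reportedLatencyMs value returned by AudioTrack.getLatency(), in milliseconds
   * @param bufferSizeUs duration of the AudioTrack's own buffer
   * @param maxLatencyUs values above this are treated as bogus and replaced by 0
   */
  static long latencyUs(int reportedLatencyMs, long bufferSizeUs, long maxLatencyUs) {
    // Remove the part of the latency caused by the AudioTrack buffer itself.
    long latencyUs = reportedLatencyMs * 1000L - bufferSizeUs;
    latencyUs = Math.max(latencyUs, 0); // never negative
    return latencyUs > maxLatencyUs ? 0 : latencyUs; // implausibly large values are discarded
  }

  public static void main(String[] args) {
    // 250 ms reported, 200 ms of it is the track buffer: 50 ms of mixer + driver latency.
    System.out.println(latencyUs(250, 200_000L, 5_000_000L)); // 50000
  }
}
```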

IV. Summary:

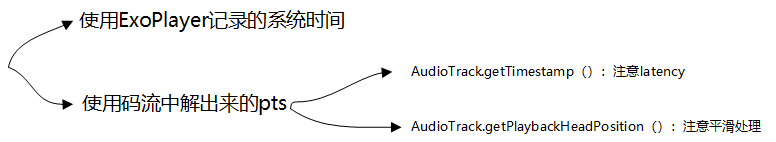

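ExoPlayer's handling of the audio pts is involved but precise. First, there are two clocks the position can come from: the audio renderer's clock, derived from the AudioTrack, and ExoPlayer's own standalone clock based on the system time. In normal playback the former is used. The AudioTrack position can in turn be read in two ways, each with its own validation, and the final value is passed on to video rendering to keep it in sync.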
