When running an HDFS command, for example hadoop fs -put a.txt /, the following error appears:

18/12/14 16:19:02 WARN hdfs.DFSClient: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /a.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget(BlockManager.java:1471)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2791)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:606)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNameno

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBloc

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:585)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:928)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2013)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2009)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1614)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2007)

at org.apache.hadoop.ipc.Client.call(Client.java:1411)

at org.apache.hadoop.ipc.Client.call(Client.java:1364)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy9.addBlock(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy9.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocol

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1449)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1270)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:526)

put: File /a.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

Running hadoop dfsadmin -report to check the cluster status shows the following:

[liushuai@master1 ~]$ hadoop dfsadmin -report

Configured Capacity: 0 (0 B)

Present Capacity: 0 (0 B)

DFS Remaining: 0 (0 B)

DFS Used: 0 (0 B)

DFS Used%: NaN%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

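Some posts online suggest clearing the directory configured as hadoop.tmp.dir in core-site.xml and then re-running hdfs namenode -format, but that turned out not to be the cause here.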

Checking the hadoop-user-datanode-slave.log log on the datanode shows that the datanode cannot reach the namenode:

2018-12-14 16:04:22,655 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master1/192.168.246.136:9000. Already tried 3 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
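When a datanode keeps retrying like this, it can help to check two things separately: which address the hostname resolves to, and whether the port accepts TCP connections. The following is a minimal Python sketch of such a check, not part of Hadoop itself. The helper names resolve and can_connect are made up for illustration; the host master1 and port 9000 are taken from the log line above.

```python
import socket


def resolve(hostname):
    """Return the IPv4 address the local resolver gives for hostname."""
    return socket.gethostbyname(hostname)


def can_connect(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    host, port = "master1", 9000  # values from the datanode log line
    try:
        print(host, "resolves to", resolve(host))
    except socket.gaierror as exc:
        print("cannot resolve", host, "-", exc)
    print("port reachable:", can_connect(host, port))
```

Running it on both the datanode and the namenode is useful: if the name resolves to a loopback address on the namenode, the service may be listening only on that address, which would be consistent with the retries seen from the datanode.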

Solution:

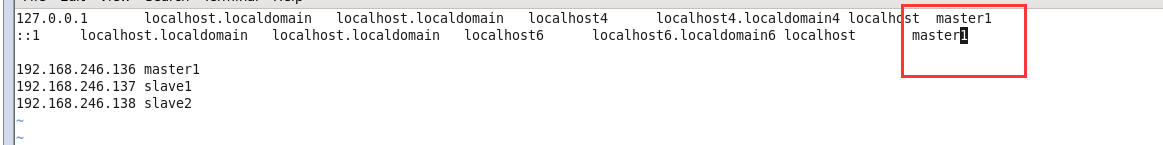

The problem turned out to be caused by the master and slave host mappings that were configured when CentOS was installed.

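core-site.xml sets the namenode address using the hostname master. After starting the cluster with start-all.sh, the namenode was found to resolve master to 127.0.1.1. The reason is that in /etc/hosts the mapping of master to 127.0.1.1 appears before the mapping of master to 192.168.246.136, so Hadoop resolved the name to the first match, 127.0.1.1.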

Deleting the entries inside the red box in the screenshot fixes the problem.
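The ordering issue can also be checked programmatically. The sketch below scans hosts-file text and reports the first address mapped to a hostname, then flags it if it is a loopback address. The sample content is an assumption reconstructed from the addresses mentioned in this post, not a copy of the actual file, and the function names are illustrative.

```python
import ipaddress


def first_mapping(hosts_text, hostname):
    """Return the first IP that hosts-style text maps hostname to, or None."""
    for raw_line in hosts_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        fields = line.split()
        ip, names = fields[0], fields[1:]
        if hostname in names:
            return ip
    return None


def is_loopback(ip):
    """Return True for addresses in 127.0.0.0/8 (or ::1)."""
    return ipaddress.ip_address(ip).is_loopback


# Hypothetical hosts content illustrating the problem described above.
SAMPLE_HOSTS = """\
127.0.0.1       localhost
127.0.1.1       master
192.168.246.136 master
192.168.246.137 slave1
192.168.246.138 slave2
"""

if __name__ == "__main__":
    ip = first_mapping(SAMPLE_HOSTS, "master")
    if ip is not None and is_loopback(ip):
        print("master maps to loopback address", ip, "first; remove that entry")
```

To inspect a real machine, the same function could be given the contents of /etc/hosts, for example via open("/etc/hosts").read().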

Test

Running hadoop dfsadmin -report again now shows:

Configured Capacity: 100551770112 (93.65 GB)

Present Capacity: 86632554496 (80.68 GB)

DFS Remaining: 86632169472 (80.68 GB)

DFS Used: 385024 (376 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Live datanodes (2):

Name: 192.168.246.137:50010 (slave1)

Hostname: slave1

Decommission Status : Normal

Configured Capacity: 50275885056 (46.82 GB)

DFS Used: 192512 (188 KB)

Non DFS Used: 6966996992 (6.49 GB)

DFS Remaining: 43308695552 (40.33 GB)

DFS Used%: 0.00%

DFS Remaining%: 86.14%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Fri Dec 14 17:06:38 HKT 2018

Name: 192.168.246.138:50010 (slave2)

Hostname: slave2

Decommission Status : Normal

Configured Capacity: 50275885056 (46.82 GB)

DFS Used: 192512 (188 KB)

Non DFS Used: 6952218624 (6.47 GB)

DFS Remaining: 43323473920 (40.35 GB)

DFS Used%: 0.00%

DFS Remaining%: 86.17%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Fri Dec 14 17:06:40 HKT 2018
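For repeated checks, the report text can be summarized with a small script. This is a rough sketch that assumes the report format shown in this post: the first Configured Capacity line is the cluster-wide value, and a missing Live datanodes line is treated as zero live datanodes. The function names and the shortened sample strings are illustrative.

```python
import re


def summarize_report(report_text):
    """Extract cluster-wide configured capacity (bytes) and live datanode count."""
    # re.search returns the first match, which is the cluster-wide line.
    capacity = re.search(r"^Configured Capacity:\s*(\d+)", report_text, re.MULTILINE)
    live = re.search(r"^Live datanodes \((\d+)\)", report_text, re.MULTILINE)
    return {
        "configured_capacity": int(capacity.group(1)) if capacity else None,
        "live_datanodes": int(live.group(1)) if live else 0,
    }


def looks_healthy(summary):
    """Return True if the cluster reports capacity and at least one live datanode."""
    return bool(summary["configured_capacity"]) and summary["live_datanodes"] > 0


# Shortened excerpts of the two reports shown in this post.
BROKEN_REPORT = "Configured Capacity: 0 (0 B)\n\nPresent Capacity: 0 (0 B)\n"
FIXED_REPORT = (
    "Configured Capacity: 100551770112 (93.65 GB)\n\n"
    "Live datanodes (2):\n\n"
    "Name: 192.168.246.137:50010 (slave1)\n\n"
    "Configured Capacity: 50275885056 (46.82 GB)\n"
)

if __name__ == "__main__":
    for name, text in (("before", BROKEN_REPORT), ("after", FIXED_REPORT)):
        print(name, looks_healthy(summarize_report(text)))
```

On a real cluster the input could come from subprocess.run(["hadoop", "dfsadmin", "-report"], capture_output=True, text=True).stdout.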

When reposting this article, please include a link to the original post:

https://blog.csdn.net/AdvancingStone/article/details/85006714
