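Preface:

Notes taken while studying the book <AWS Certified Solutions Architect Associate All-in-One Exam Guide (Exam SAA-C01)>.

Since the book is entirely in English, most of these notes are in English too.

PS: This chapter gave me quite a headache. Everything in it relates to applications, but it covers so many services, which fall into many loosely related groups, that it reads as long and disjointed, unlike the earlier chapters, which each revolve around a single theme.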

Index

- Deploying and Monitoring Applications on AWS

- 1. AWS Lambda

- 2. Amazon API Gateway

- 3. Amazon Kinesis

- 4. Reference Architectures using Serverless Services

- 5. Amazon CloudFront

- 6. Amazon Route 53

- 7. AWS Web Application Firewall

- 8. Amazon Simple Queue Service

- 9. Amazon Simple Notification Service

- 10. AWS Step Functions and Amazon Simple Workflow (SWF)

- 11. AWS Elastic Beanstalk

- 12. AWS OpsWorks

- 13. Amazon Cognito

- 14. Amazon Elastic MapReduce (EMR)

- 15. AWS CloudFormation

- 16. Amazon CloudWatch

- 17. AWS CloudTrail

- 18. AWS Config

- 19. Amazon VPC Flow Logs

- 20. AWS Trusted Advisor

- 21. AWS Organizations

Deploying and Monitoring Applications on AWS

1. AWS Lambda

AWS Lambda is a compute service that runs your back-end code in response to events such as object uploads to Amazon S3 buckets, updates to Amazon DynamoDB tables, data in Amazon Kinesis Data Streams or in-app activity.

The code you run on AWS Lambda is called a Lambda function.

With Lambda, any event can trigger your function, making it easy to build applications that respond quickly to new information.

(1) Serverless

A serverless platform should provide these capabilities at a minimum:

· No infrastructure to manage

· Scalability

· Built-in redundancy

· Pay only for usage

Serverless AWS services:

· Amazon S3

· Amazon DynamoDB

· Amazon API Gateway

· AWS Lambda

· Amazon SNS and SQS

· Amazon CloudWatch Events

· Amazon Kinesis

(2) Understanding AWS Lambda

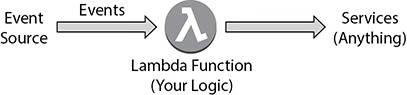

Each Lambda function you create contains the code you want to execute, the configuration that defines how your code is executed and optionally one or more event sources that detect events and invoke your function as they occur.

Architecture of a running AWS Lambda function


Can run as many Lambda functions in parallel as you need.

There is no limit to the number of Lambda functions you can run at any point in time; they scale on their own.

Lambda functions are “stateless”, with no affinity to the underlying infrastructure, so that Lambda can rapidly launch as many copies of the function as needed to scale to the rate of incoming events.

Building microservices using Lambda functions and API Gateway is a great use case.

AWS Lambda supports: Java, Node.js, Python, C#.

Steps to use AWS Lambda:

· Upload the code to AWS Lambda in ZIP format

· Schedule the Lambda function (Specify how often the function will run or whether the function is driven by an event, if yes, the source of the event)

· Specify the compute resource for the event

· Specify the timeout period for the event

· Specify the Amazon VPC details, if any

· Launch the function

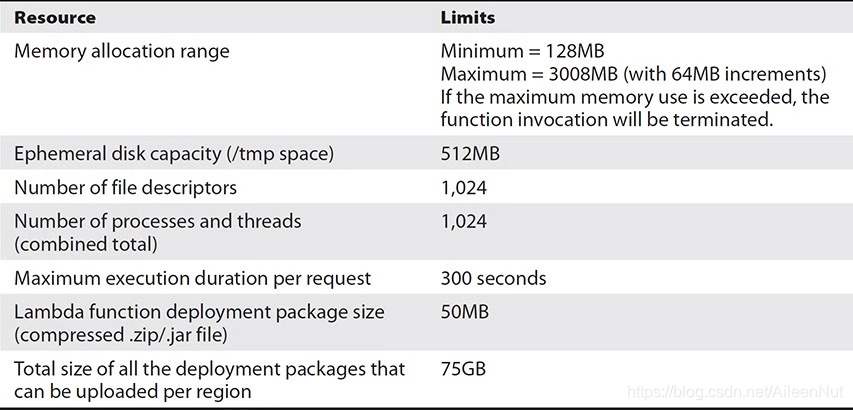

(3) AWS Lambda Resource Limits per Invocation

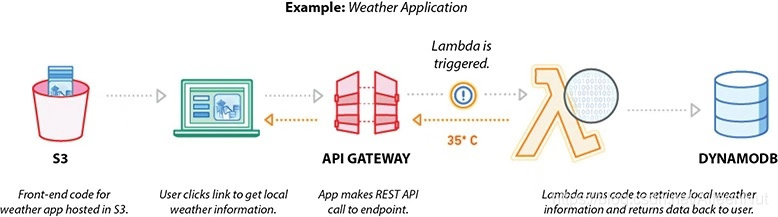

2. Amazon API Gateway

API Gateway is a fully managed service that makes it easy for developers to define, publish, deploy, maintain, monitor and secure APIs at any scale.

Clients integrate with the APIs using standard HTTPS requests.

API Gateway serves as a front door (to access data, business logic, or functionality from your back-end services) to any web application running on Amazon EC2, Amazon ECS, AWS Lambda, or an on-premises environment.

It can take care of any problem you have when managing an API, such as traffic management, authorization and access control, the monitoring aspect, version control and so on.

Pay-as-you-go pricing model, pay only for the API calls you receive and the amount of data transferred out.

(1) Use cases

· To create, deploy, and manage a RESTful API to expose back-end HTTP endpoints, AWS Lambda functions or other AWS services.

· To invoke exposed API methods through the front-end HTTP endpoints.

(2) Benefits

· Resiliency and performance at any scale

Can manage any amount of traffic with throttling so that back-end operations can withstand traffic spikes.

Don’t have to manage any infrastructure.

· Caching

Cache the output of API calls to improve the performance of your API calls and reduce the latency since you don’t have to call the back end every time.

· Security

Can use AWS native tools such as IAM and Amazon Cognito to authorize access to your APIs.

Can verify signed API calls. (leverage signature version 4)

· Metering

API Gateway helps developers create, monitor, and manage API keys that they can distribute to third-party developers. It can also help you define plans that meter and restrict third-party developer access to your APIs.

· Monitoring

Once you deploy an API, API Gateway provides you with a dashboard to view all the metrics and monitor the calls to your services.

It can be integrated with Amazon CloudWatch, where you can see all the statistics related to API calls, latency, error rates and so on.

· Lifecycle management

Allow you to maintain and run several versions of the same API at the same time. Have built-in stages, can enable developers to deploy multiple stages of each version.

· Integration with other AWS products

Integrate with AWS Lambda, can create completely serverless APIs.

Integrate with Amazon CloudFront, can get protection against DDoS attacks.

· Open API specification (Swagger) support

Support open source Swagger

· SDK generation for iOS, Android and JavaScript

Can automatically generate client SDKs based on your customer’s API definition.
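As a rough illustration of the "completely serverless API" idea above, here is a minimal sketch of a Lambda function behind API Gateway, assuming the Lambda proxy integration is used. The handler name and the `name` query parameter are made up for this example; the returned dictionary uses the `statusCode`/`headers`/`body` shape that the proxy integration expects.

```python
import json


def lambda_handler(event, context):
    """Return a greeting in the shape Lambda proxy integration expects."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # "name" is a hypothetical parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local call with a hand-written event, trimmed to the field the handler reads.
response = lambda_handler({"queryStringParameters": {"name": "AWS"}}, None)
default_response = lambda_handler({"queryStringParameters": None}, None)
```

Because the handler is a plain function, it can be exercised locally like this before it is ever wired to an API method.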

3. Amazon Kinesis

(1) Real-time Application Scenarios

Two types of use case scenarios for streaming data applications:

· Evolving from batch to streaming analytics

You can perform real-time analytics on data that has been traditionally analyzed using batch processing in data warehouse or using Hadoop frameworks.

The most common use cases include data lakes, data science and machine learning.

· Building real-time applications

You can use streaming data services for real-time applications such as application monitoring, fraud detection, and live leaderboards.

(2) Differences between Batch and Stream Processing

Compared with the traditional batch analytics, you need a different set of tools to collect, prepare and process real-time streaming data.

Traditional analytics: gather the data, load it periodically into a database, analyze it hours, days or weeks later.

Real-time analytics: process the data continuously in real time, even before it is stored, instead of running database queries over stored data.

Amazon Kinesis family consists of the following products: Amazon Kinesis Data Streams, Amazon Kinesis Data Firehose, Amazon Kinesis Data Analytics.

(3) Amazon Kinesis Data Streams

Amazon Kinesis Data Streams enables you to build custom applications that process or analyze streaming data for specialized needs.

Kinesis Data Streams can continuously capture and store terabytes of data per hour from hundreds of thousands of sources, such as website clickstreams, financial transactions, social media feeds, IT logs and location-tracking events…

With Kinesis Client Library (KCL), you can build Kinesis applications and use streaming data to power real-time dashboards, generate alerts, implement dynamic pricing and advertising and more…

You can also emit data from Kinesis Data Streams to other AWS services such as Amazon S3, Amazon Redshift, Amazon EMR and AWS Lambda.

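Kinesis Data Streams can be used for rapid and continuous data intake and aggregation. The data can include IT infrastructure logs, application logs, social media, market data feeds and web clickstream data.

Typical scenarios: accelerated log and data feed intake and processing, real-time metrics and reporting, real-time data analytics, and complex stream processing.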

Benefits:

· Real time

· Secure

· Easy to use

· Parallel processing

· Elastic

· Low cost

· Reliable

(4) Amazon Kinesis Data Firehose

Amazon Kinesis Data Firehose is the easiest way to load streaming data into data stores and analytics tools.

It can capture, transform and load streaming data into Amazon S3, Amazon Redshift, Amazon Elasticsearch and Splunk, enabling near real-time analytics with the existing business intelligence tools and dashboards.

Moreover, Kinesis Data Firehose synchronously replicates data across three facilities in an AWS region, providing high availability and durability for the data as it is transported to the destinations.

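It is a fully managed service for delivering real-time streaming data to destinations. You do not need to write applications or manage resources: configure your data producers to send data to Kinesis Data Firehose and it automatically delivers the data to the destination you specify. You can also configure Kinesis Data Firehose to transform the data before delivery.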

Benefits:

· Easy to use

· Integrated with AWS data stores

· Serverless data transformation

· Near real time

· No ongoing administration

· Pay only for what you use

(5) Amazon Kinesis Data Analytics

Amazon Kinesis Data Analytics is the easiest way to process and analyze real-time, streaming data.

Simply point Kinesis Data Analytics at an incoming data stream, write your SQL queries, and specify where you want to load the results.

Kinesis Data Analytics takes care of running your SQL queries continuously on data while it’s in transit and then sends the results to the destinations.

Example scenarios for Kinesis Data Analytics: generating time-series analytics, feeding real-time dashboards and creating real-time metrics.
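To make the "real-time metrics" scenario more concrete, the sketch below counts events per fixed (tumbling) time window. Kinesis Data Analytics itself is driven by SQL; this is plain Python swapped in only to show the windowing idea, and the function name and sample events are invented for the example.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per fixed-size, non-overlapping time window.

    events is an iterable of (timestamp_in_seconds, value) pairs.
    """
    counts = Counter()
    for timestamp, _value in events:
        # Round the timestamp down to the start of its window.
        window_start = timestamp - (timestamp % window_seconds)
        counts[window_start] += 1
    return dict(sorted(counts.items()))


# Hypothetical click events: (seconds since start, page).
events = [(0, "/home"), (30, "/cart"), (61, "/home"), (125, "/pay")]
per_minute = tumbling_window_counts(events, window_seconds=60)
```

Each key in `per_minute` is the start of a one-minute window, so a dashboard could plot the values as a time series.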

Benefits:

· Powerful real-time processing

· Fully managed

· Automatic elasticity

· Easy to use

· Standard SQL

· Pay only for what you use

4. Reference Architectures using Serverless Services

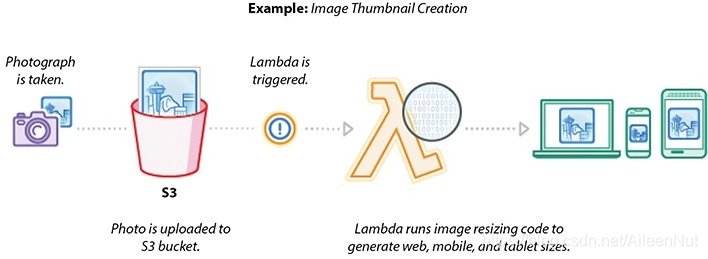

(1) Real-time File Processing

Can use Amazon S3 to trigger AWS Lambda to process data immediately after an upload.

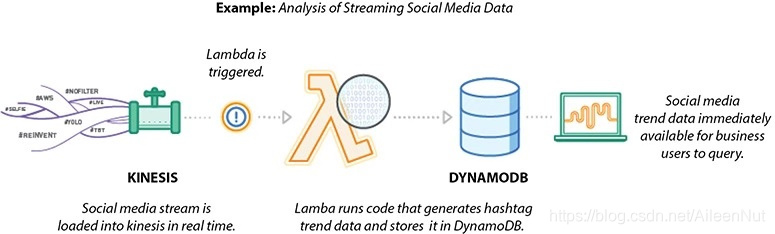

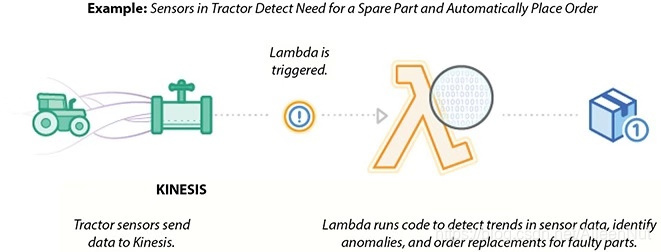
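A minimal sketch of the Lambda side of this pattern, assuming the function is subscribed to S3 object-created notifications. The bucket name and key are hypothetical, and the sample event is trimmed to the fields the handler actually reads; a real function would fetch and process each object instead of just listing it.

```python
import urllib.parse


def lambda_handler(event, context):
    """Collect the bucket and key of every object named in an S3 event."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        uploaded.append({"bucket": bucket, "key": key})
    return {"processed": len(uploaded), "objects": uploaded}


# Hypothetical sample event, trimmed to the fields used above.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-uploads"},
                "object": {"key": "photos/my+cat.jpg"}}}
    ]
}
result = lambda_handler(sample_event, None)
```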

(2) Real-time Stream Processing

Can use AWS Lambda and Amazon Kinesis to process real-time streaming data for application activity tracking, transaction order processing, clickstream analysis, data cleansing, metrics generation, log filtering, indexing, social media analysis, and IoT device data telemetry and metering.

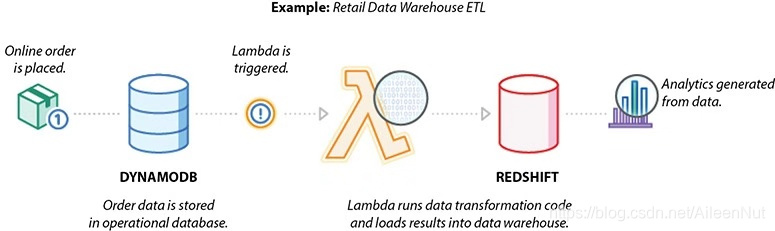
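The stream-processing pattern can be sketched as a Lambda handler that receives a batch of Kinesis records, assuming a Kinesis Data Streams event source. The click payload format (`{"page": ...}`) and the `make_record` helper are assumptions made for this example; the one fixed detail is that record data reaches Lambda base64-encoded.

```python
import base64
import json


def lambda_handler(event, context):
    """Decode each Kinesis record and total the clicks per page."""
    clicks = {}
    for record in event.get("Records", []):
        # Kinesis payloads are delivered to Lambda base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        click = json.loads(payload)
        clicks[click["page"]] = clicks.get(click["page"], 0) + 1
    return clicks


def make_record(page):
    """Build a hypothetical record, trimmed to the field the handler reads."""
    data = base64.b64encode(json.dumps({"page": page}).encode("utf-8"))
    return {"kinesis": {"data": data.decode("ascii")}}


sample_event = {"Records": [make_record(p) for p in ("/home", "/cart", "/home")]}
totals = lambda_handler(sample_event, None)
```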

(3) Extract, Transform, and Load (ETL) Processing

Can use AWS Lambda to perform data validation, filtering, sorting, or other transformations for every data change in a DynamoDB table and load the transformed data into another data store.

(4) IoT Back Ends

Can build a serverless architecture for back ends using AWS Lambda to handle web, mobile, IoT and third-party API requests.

5. Amazon CloudFront

Amazon CloudFront is a global content delivery network (CDN) service that allows you to distribute content with low latency and provides high data transfer speeds.

Amazon CloudFront employs a global network of edge locations and regional edge caches that cache copies of your content close to your viewers. It routes viewers to the best location.

In addition to caching static content, Amazon CloudFront accelerates dynamic content.

(1) Use Cases

· Caching static assets

· Accelerating dynamic content

· Helping protect against distributed denial-of-service (DDoS) attacks

Can be integrated with AWS Shield and WAF, which can protect layers 3, 4 and 7.

· Improving security

Can serve the content securely with SSL (HTTPS).

· Accelerating API calls

Can be integrated with Amazon API Gateway and be used to secure and accelerate your API calls.

· Distributing software

· Streaming videos

Can be used for video streaming both live and on demand.

(2) Key Concepts

· Edge location

· Regional edge cache

The regional edge caches are located between your origin web server and the global edge locations that serve content directly to your viewers. (An origin web server is often referred to as an origin, which is the location where your actual noncached data resides.)

Regional edge caches have a larger cache width than any individual edge location, so objects remain in the cache longer at the nearest regional edge caches.

It is enabled by default.

· Distribution

It specifies the location or locations of the original version of your files.

· Origin

CloudFront can accept any publicly addressable Amazon S3 or HTTP server, an ELB/ALB, or a custom origin server outside of AWS as an origin.

· Behaviors

Behaviors allow you to have granular control of the CloudFront CDN, enforce certain policies, change results based on request type, control the cacheability of objects and more.

The important behaviors that can be configured with Amazon CloudFront: Path Pattern Matching, Headers, Query Strings/Cookies, Signed URL or Signed Cookies, Protocol Policy, Time to Live (TTL), Gzip Compression.

Can create two types of distributions via CloudFront: web and RTMP.

(3) Geo Restriction

When a user requests your content, CloudFront typically serves the requested content regardless of where the user is located.

If you need to prevent users in specific countries from accessing your content, you can use CloudFront geo restriction feature to allow access on a whitelist, or prevent access on a blacklist.
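The whitelist/blacklist decision can be sketched in a few lines. This is only a model of the logic described above, not CloudFront's implementation; the function name is made up, and country codes are assumed to be two-letter ISO codes.

```python
def is_allowed(country_code, restriction_type, countries):
    """Decide whether a viewer's country may receive content.

    restriction_type is "whitelist", "blacklist", or "none".
    """
    code = country_code.upper()
    listed = {c.upper() for c in countries}
    if restriction_type == "whitelist":
        # Only listed countries get access.
        return code in listed
    if restriction_type == "blacklist":
        # Every country except the listed ones gets access.
        return code not in listed
    return True


allowed_de = is_allowed("de", "whitelist", ["DE", "FR"])
allowed_us = is_allowed("US", "whitelist", ["DE", "FR"])
blocked_us = is_allowed("US", "blacklist", ["US"])
```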

(4) Error Handling

You can configure CloudFront to respond to requests using a custom error page when your origin returns an HTTP 4xx or 5xx status code.

6. Amazon Route 53

Amazon Route 53 is the managed DNS of Amazon.

DNS translates human-readable names into the numeric IP addresses that servers/computers use to connect to each other.

Route 53 connects user requests to infrastructure running in AWS, such as EC2 instances, ELB, S3, and it can also be used to route users to infrastructure outside of AWS.

It is the only service that has a 100 percent SLA.

It is region independent, so you can configure it with resources running across multiple regions.

In addition to managing your public DNS record, Route 53 can be used to register a domain, create DNS records for a new domain, or transfer DNS records for an existing domain.

Route 53 supports alias records (also known as zone apex support). The zone apex is the root domain of a website.

Since the DNS specification requires a zone apex to point to an IP address (an A record), not a CNAME (such as the name AWS provides for a CloudFront distribution, ELB, or S3 website bucket), you can use Route 53’s alias record to solve this problem.

Route 53 offers health checks, which allow you to monitor the health and performance of your application, web servers, and other resources that leverage this service. It is useful when you have two or more resources that are performing the same function. It is going to leverage only the healthy EC2 servers from healthy AZs in a region.

Route 53 supports the following routing policies: Weighted round robin, Latency-based routing, Failover routing, Geo DNS routing.
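As an illustration of how weighted routing combines with health checks, the sketch below picks an endpoint with probability proportional to its weight while skipping unhealthy ones. It is a simplified model rather than Route 53's actual algorithm; the record layout, the function name and the IP addresses (taken from the documentation-only ranges) are assumptions.

```python
import random


def pick_endpoint(records, rng=random):
    """Pick one healthy endpoint with probability proportional to its weight."""
    healthy = [r for r in records if r["healthy"] and r["weight"] > 0]
    if not healthy:
        return None
    weights = [r["weight"] for r in healthy]
    return rng.choices(healthy, weights=weights, k=1)[0]["value"]


# Hypothetical record set: a 3:1 split plus one endpoint failing its check.
records = [
    {"value": "192.0.2.10", "weight": 3, "healthy": True},
    {"value": "198.51.100.20", "weight": 1, "healthy": True},
    {"value": "203.0.113.30", "weight": 5, "healthy": False},
]
rng = random.Random(42)  # seeded so the sketch is repeatable
picks = [pick_endpoint(records, rng) for _ in range(1000)]
```

Over many lookups the first endpoint should receive roughly three times the traffic of the second, and the unhealthy one none at all.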

7. AWS Web Application Firewall

AWS Web Application Firewall (WAF) is a web application firewall that protects your web applications from various forms of attack.

It guards against attacks that could affect application availability, result in data breaches, cause downtime, compromise security or consume excessive resources.

Some use cases: Vulnerability protection, Malicious requests, DDoS mitigation (HTTP/HTTPS floods).

To use WAF with CloudFront or ALB, you need to identify the resource, and then deploy the rules and filters.

Rules are collections of WAF filter conditions. A rule can be a single condition or a combination of two or more conditions.

All the conditions you can create using WAF: Cross-site scripting match conditions, IP match conditions, Geographic match conditions, Size constraint conditions, SQL injection match conditions, String match conditions, Regex matches.

Two types of rules in WAF: regular rules and rate-based rules.

Regular rules use only conditions to target specific requests.

Rate-based rules are similar to regular rules, with one addition: a rate limit in five-minute intervals.
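A rate-based rule can be pictured as a per-IP counter over a sliding five-minute window. The sketch below is an in-memory model under that assumption, not how AWS WAF is implemented; the class name, the limit and the sample IP address are invented for the example.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 300  # the five-minute interval from the notes above


class RateBasedRule:
    """Allow a request only while its source IP stays within the limit."""

    def __init__(self, limit):
        self.limit = limit
        self.requests = defaultdict(deque)  # IP -> timestamps in the window

    def allow(self, ip, now):
        window = self.requests[ip]
        # Drop timestamps that have aged out of the five-minute window.
        while window and now - window[0] >= WINDOW_SECONDS:
            window.popleft()
        # Every request counts toward the rate, including rejected ones.
        window.append(now)
        return len(window) <= self.limit


rule = RateBasedRule(limit=3)
results = [rule.allow("198.51.100.7", t) for t in (0, 1, 2, 3)]
after_quiet_period = rule.allow("198.51.100.7", 400)
```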

Creating a web access control list (web ACL) is the first thing you need to do to use AWS WAF. Once you combine your conditions into rules, you combine the rules into a web ACL.

8. Amazon Simple Queue Service

A message queue is a form of asynchronous service-to-service communication used in serverless and microservice architectures.

Message queues allow different parts of a system to communicate and process operations asynchronously.

A message queue provides a buffer, which temporarily stores messages, and endpoints, which allow software components to connect to the queue to send and receive messages.

The messages are usually small and can be things such as requests, replies, error messages or just plain information.

Each message is processed only once, by a single consumer.

A message producer: the software that puts messages into a queue.

A message consumer: the software that retrieves messages.

(1) Key features

SQS is redundant across multiple AZs in each region. Even if an AZ is lost, the service will be accessible.

Multiple copies of messages are stored across multiple AZs, and messages are retained up to 14 days.

If your consumer or producer application fails, your messages won’t be lost.

Because of the distributed architecture, SQS scales without any preprovisioning. It scales up automatically as and when more traffic comes. Similarly, when the traffic is low, it automatically scales down.

The message can contain up to 256KB of text data, including XML, JSON and unformatted text.

(2) Two types of SQS queues

Standard

Default queue type. Support almost unlimited transactions per second.

Best-effort ordering: ensure messages are generally delivered in the same order as they’re sent and at nearly unlimited scale.

Although it tries to preserve the order of messages, it could be possible that sometimes a message is delivered out of order.

FIFO

Guarantee first in, first out delivery and also exactly once processing.

Support up to 300 transactions per second (send, receive or delete). For batch processing, support up to 3000 transactions per second.

Differences

· Number of transactions per second

Standard: nearly unlimited

FIFO: up to 300

· Message delivery

Standard: at least once, but occasionally more than one copy is delivered

FIFO: deliver once and remain available until a consumer processes and deletes it. Duplicates aren’t introduced into the queue.

· Delivery order

Standard: may be in an order different from which they were sent.

FIFO: the order in which messages are sent and received is strictly preserved.

(3) Terminology and parameters for configuring SQS

Visibility timeout

The length of time (in seconds) that a message received from a queue will be invisible to other receiving components.

Message retention period

The amount of time that Amazon SQS will retain a message if it doesn’t get deleted.

Delivery delay

The amount of time to delay or postpone the delivery of all messages added to the queue.

Receive message wait time

Using this to specify short polling or long polling

Short polling: return immediately, even if the message queue being polled is empty.

Long polling: wait up to the configured time for a message to arrive, which reduces the cost of using SQS by cutting down empty responses and eliminating false empty responses.

Content-based deduplication

Only for FIFO queues. SQS can use an SHA-256 hash of the body of the message to generate the content-based message deduplication ID.
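Two of the parameters above, visibility timeout and content-based deduplication, are easier to see in a toy model. The class below is an in-memory sketch and not the real service: it ignores message groups and keeps deduplication IDs forever instead of for a limited interval. All names are hypothetical.

```python
import hashlib


class QueueSketch:
    """In-memory model of two SQS behaviors; not the real service."""

    def __init__(self, visibility_timeout):
        self.visibility_timeout = visibility_timeout
        self.messages = []      # each item: [body, invisible_until]
        self.seen_ids = set()   # deduplication IDs already accepted

    def send(self, body):
        # Content-based deduplication: SHA-256 hash of the message body.
        dedup_id = hashlib.sha256(body.encode("utf-8")).hexdigest()
        if dedup_id in self.seen_ids:
            return False
        self.seen_ids.add(dedup_id)
        self.messages.append([body, 0])
        return True

    def receive(self, now):
        for message in self.messages:
            if message[1] <= now:
                # Hide the message from other consumers until the timeout ends.
                message[1] = now + self.visibility_timeout
                return message[0]
        return None

    def delete(self, body):
        self.messages = [m for m in self.messages if m[0] != body]


queue = QueueSketch(visibility_timeout=30)
first_send = queue.send("order-1")
duplicate_send = queue.send("order-1")
first_receive = queue.receive(now=0)
while_invisible = queue.receive(now=10)
after_timeout = queue.receive(now=30)
```

The last call shows why a consumer must delete a message once it is processed: otherwise the message becomes visible again when the timeout expires.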

Amazon SQS supports dead-letter queues, which other queues (source queues) can target for messages that can’t be processed (consumed) successfully.

Dead-letter queues are useful for debugging your application or messaging system because they let you isolate problematic messages to determine why their processing doesn’t succeed.

Using server-side encryption (SSE), you can transmit sensitive data in encrypted queues.

9. Amazon Simple Notification Service

Amazon SNS is a web service and used to send notifications from the cloud.

SNS can publish a message from an application and immediately deliver it to subscribers.

It follows the publish-subscribe (pub-sub) messaging model.

Like SQS, SNS is used to enable event-driven architectures or to decouple applications to increase performance, reliability and scalability.

To use SNS, you must first create a “topic” identifying a specific subject or event type.

A topic is used for publishing messages and allowing clients to subscribe for notifications.

Subscribers are clients interested in receiving notifications from topics of interest. They can subscribe to a topic or be subscribed by the topic owner.

Subscribers specify the protocol and endpoint (URL, email address, etc.) for notifications to be delivered.

The publishers and subscribers can operate independent of each other.

(1) Features

· Reliable: messages are stored across multiple AZs by default.

· Flexible message delivery over multiple transport protocols.

· Messages can be delivered instantly or can be delayed.

· Provide monitoring capability. SNS is integrated with CloudWatch.

· Can be accessed from AWS Management Console, AWS Command Line Interface (CLI), AWS Tools for Windows PowerShell, AWS SDKs and Amazon SNS Query API.

· Contain up to 256KB of text data with the exception of SMS, which can contain up to 140 bytes. If the message exceeds the size limit, SNS will send it as multiple messages.

(2) Steps

· Create a topic

· Subscribe to a topic

· Publish to a topic
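The three steps above can be sketched with a small in-memory model of pub-sub. This is not the SNS API; the `Topic` class and the list-based "inboxes" are stand-ins chosen to show that the publisher never needs to know who the subscribers are.

```python
class Topic:
    """Toy pub-sub topic: every subscriber receives every published message."""

    def __init__(self, name):
        self.name = name
        self.subscribers = []

    def subscribe(self, endpoint):
        # An endpoint is any callable that accepts a message.
        self.subscribers.append(endpoint)

    def publish(self, message):
        for endpoint in self.subscribers:
            endpoint(message)
        return len(self.subscribers)


# Step 1: create a topic.
orders = Topic("order-events")

# Step 2: subscribe two independent consumers (plain lists as inboxes).
email_inbox, audit_log = [], []
orders.subscribe(email_inbox.append)
orders.subscribe(audit_log.append)

# Step 3: publish once; the topic fans the message out to both.
delivered = orders.publish("order 42 shipped")
```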

10. AWS Step Functions and Amazon Simple Workflow (SWF)

AWS Step Functions is replacing Amazon Simple Workflow Service (SWF).

AWS Step Functions is a fully managed service that makes it easy to coordinate the components of distributed applications and microservices using visual workflows.

With AWS Step Functions, you define your application as a state machine, a series of steps that together capture the behavior of the app.

States in the state machine may be tasks, sequential steps, parallel steps, branching paths (choice), and/or timers (wait).

AWS Step Functions has seven state types:

· Task: a single unit of work

· Choice: add branching logic to your state machine

· Parallel: fork the same input across multiple states and join the results into a combined output

· Wait: delay for a specified time

· Fail: stop an execution and mark it as a failure

· Succeed: stop an execution successfully

· Pass: pass its input to its output
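State machines are written in the JSON-based Amazon States Language. The sketch below builds a small definition as a Python dictionary and serializes it, using five of the state types listed above. The workflow, the state names and the Lambda ARN are placeholders invented for the example.

```python
import json

state_machine = {
    "Comment": "Hypothetical order workflow",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {
            "Type": "Task",
            # Placeholder ARN; substitute a real Lambda function ARN.
            "Resource": "arn:aws:lambda:us-east-1:123456789012:function:ValidateOrder",
            "Next": "IsValid",
        },
        "IsValid": {
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.valid", "BooleanEquals": True, "Next": "WaitForStock"}
            ],
            "Default": "Rejected",
        },
        "WaitForStock": {"Type": "Wait", "Seconds": 10, "Next": "Done"},
        "Rejected": {"Type": "Fail", "Error": "InvalidOrder", "Cause": "Validation failed"},
        "Done": {"Type": "Succeed"},
    },
}

# The JSON text is what would be supplied as the state machine definition.
definition = json.dumps(state_machine, indent=2)
parsed = json.loads(definition)
```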

11. AWS Elastic Beanstalk

Deploy, monitor and scale an application on AWS quickly and easily.

Maintain complete control over the infrastructure, which is provisioned and managed by Elastic Beanstalk.

Can be deployed either on a single instance or on multiple instances, with an optional database in both cases. The single instance is mainly used for development or testing purposes, whereas multiple instances can be used for production workloads.

Elastic Beanstalk configures each EC2 instance in your environment with the components necessary to run applications for the selected platform.

Can add AWS Elastic Beanstalk configuration files (either JSON or YAML) to your web application’s source code to configure your environment and customize the AWS resources that it contains.

(1) 3 Key Components

· Environment

Consist of the infrastructure supporting the application, such as the EC2 instances, RDS, ELB, Auto Scaling and so on.

An environment runs a single application version at a time for better scalability.

Can create many different environments for an application.

· Application Version

The actual application code that is stored in Amazon S3.

Can have multiple versions of an application, and each version will be stored separately.

· Saved Configuration

Define how an environment and its resources should behave.

Can be used to launch new environments quickly or roll back configuration.

An application can have many saved configurations.

(2) Two types of environment tiers

· Web servers are standard applications that listen for and then process HTTP requests, typically over port 80.

· Workers are specialized applications that have a background processing task that listens for messages on an Amazon SQS queue. Worker applications post those messages to your application by using HTTP.

12. AWS OpsWorks

AWS OpsWorks is a configuration management service that helps you deploy and operate applications of all shapes and sizes.

It allows you to quickly configure, deploy and update your applications, and even gives you tools to automate operations such as automatic instance scaling and health monitoring.

OpsWorks provides managed instances of Chef and Puppet. Chef and Puppet are automation platforms that allow you to use code to automate the configurations of your servers.

OpsWorks offers three tools: AWS OpsWorks for Chef Automate, AWS OpsWorks for Puppet Enterprise and AWS OpsWorks Stacks.

OpsWorks provides prebuilt layers for common components, including Ruby, PHP, Node.js, Java, Amazon RDS, HAProxy, MySQL and Memcached.

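13. Amazon Cognito

Amazon Cognito is a user identity and data synchronization service that makes it really easy for you to manage user data for your apps across multiple mobile or connected devices.

Amazon Cognito provides authentication, authorization and user management for web and mobile apps. Users can sign in directly with a user name and password, or through a third party such as Facebook, Amazon or Google.

The two main components of Amazon Cognito are user pools and identity pools. User pools are user directories that provide sign-up and sign-in options for your app users. Identity pools let you grant your users access to other AWS services. You can use identity pools and user pools separately or together.

(1) User pools

A user directory in Amazon Cognito.

With a user pool, users can sign in to your web or mobile app through Amazon Cognito, or federate through a third-party identity provider.

Whichever way users sign in, every member of the user pool has a directory profile that you can access through an SDK.

(2) Identity pools

With an identity pool, users can obtain temporary AWS credentials to access AWS services such as S3 and DynamoDB.

Identity pools support anonymous guest users as well as identity providers that can be used to authenticate identity pool users.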

14. Amazon Elastic MapReduce (EMR)

Using the elastic infrastructure of Amazon EC2 and Amazon S3, Amazon EMR provides a managed Hadoop framework that distributes the computation of your data over multiple Amazon EC2 instances.

Amazon EMR automatically configures the security groups for the cluster and makes it easy to control access.

(1) Steps

Load the data into Amazon S3 → Launch EMR clusters in minutes → Focus on the analysis of your data → When your job is completed, you can retrieve the output from Amazon S3

The data remains decoupled between the EC2 servers and Amazon S3. EC2 only processes the data and the actual data resides in S3.

(2) 3 Types of Nodes

· Master Node

This node takes care of coordinating the distribution of the job across core and task nodes.

· Core Node

This node takes care of running the task that the master node assigns. It also stores the data in the Hadoop Distributed File System (HDFS) on your cluster.

· Task Node

This node runs only the task and does not store any data. It is optional and provides pure compute to your cluster.

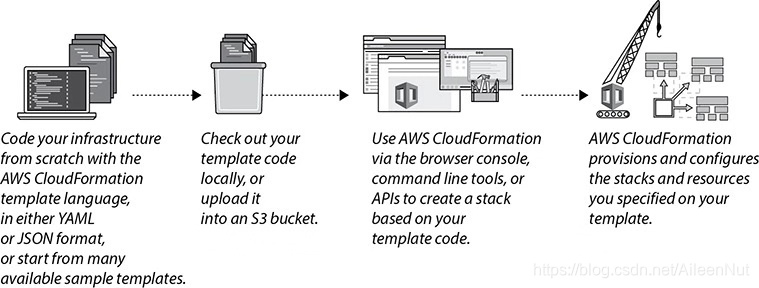

15. AWS CloudFormation

CloudFormation provisions and manages stacks of AWS resources based on templates you create to model your infrastructure architecture.

A CloudFormation template is a JSON- or YAML-formatted text file.

The collection of AWS resources that a template creates and manages as a single unit is called a stack.
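For a sense of what a template looks like, here is a minimal sketch that builds one as a Python dictionary and serializes it to JSON. The logical name `NotesBucket`, the description and the choice of a versioned S3 bucket are made up for the example.

```python
import json

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Hypothetical stack with one versioned S3 bucket",
    "Resources": {
        # "NotesBucket" is a logical name chosen for this example.
        "NotesBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"}
            },
        }
    },
    "Outputs": {
        # Ref on a bucket resolves to the generated bucket name.
        "BucketName": {"Value": {"Ref": "NotesBucket"}}
    },
}

# The JSON text is what would be handed to CloudFormation as the template body.
template_body = json.dumps(template, indent=2)
```

Creating a stack from this template would provision the single bucket; deleting the stack would remove it again, which is the sense in which the resources are managed as one unit.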

16. Amazon CloudWatch

(1) Metrics Collection and Tracking

Amazon CloudWatch provides more than 100 types of metrics available among all the different services.

Apart from the default metrics available, you can also create your own custom metrics using your application and monitor them via Amazon CloudWatch.

The metrics are retained depending on the metric interval:

· For the one-minute data point, the retention is 15 days

· For the five-minute data point, the retention is 63 days.

· For the one-hour data point, the retention is 15 months or 455 days.

(2) Capture Real-time Changes using Amazon CloudWatch Events

Can help you detect any changes made to your AWS resources.

When CloudWatch Events detects a change, it delivers a notification in almost real time to a target you choose.

The target can be a Lambda function, an SQS queue, an Amazon SNS topic, a Kinesis stream or a built-in target.

(3) Monitoring and Storing Logs

Can use CloudWatch Logs to monitor and troubleshoot your systems and applications using your existing system, application and custom log files.

Can send your existing log files to CloudWatch Logs and monitor these logs in near real time.

Can further export data to S3 for analytics and/or archival or stream to the Amazon Elasticsearch Service or third-party tools like Splunk.

(4) Set Alarms

Can create a CloudWatch alarm that sends an SNS message when the alarm changes state.

An alarm watches a single metric over a time period you specify and performs one or more actions based on the value of the metric relative to a given threshold over a number of time periods.

Alarms invoke actions for sustained state changes only.

An alarm has the following possible states:

OK: means the metric is within the defined threshold

ALARM: means the metric is outside the defined threshold

INSUFFICIENT_DATA: means the alarm has just started, the metric is not available or not enough data is available to determine the alarm state.
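The three states, and the idea that only a sustained breach triggers the alarm, can be sketched as a small function. This is a simplified model, assuming a "greater than threshold" comparison and treating any missing datapoint as insufficient data; it is not how CloudWatch evaluates alarms internally, and the function name is invented.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Return OK, ALARM, or INSUFFICIENT_DATA for the most recent periods.

    datapoints holds one value per period, oldest first; None means missing.
    """
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods or any(d is None for d in recent):
        return "INSUFFICIENT_DATA"
    # Only a breach sustained across every evaluated period raises the alarm.
    if all(d > threshold for d in recent):
        return "ALARM"
    return "OK"


# Hypothetical CPU utilization readings, threshold 80 percent, 3 periods.
sustained = alarm_state([50, 85, 90, 95], threshold=80, evaluation_periods=3)
brief_spike = alarm_state([95, 50, 95], threshold=80, evaluation_periods=3)
just_started = alarm_state([95], threshold=80, evaluation_periods=3)
```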

(5) View Graph and Statistics

Can use Amazon CloudWatch dashboards to view different types of graphs and statistics of the resources you have deployed.

Can create your own dashboard and can have a consolidated view across your resources.

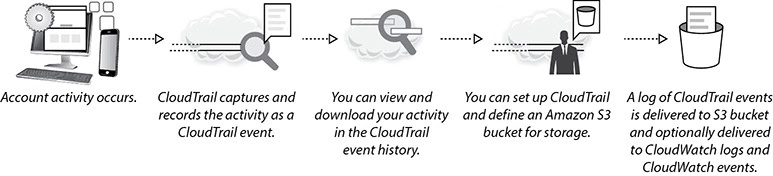

17. AWS CloudTrail

AWS CloudTrail is a service that logs all API calls, including console activities and command-line instructions.

It logs exactly who did what, when and from where.
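The "who did what, when and from where" maps directly onto fields of a CloudTrail event record. The sketch below summarizes one record; the record itself is hand-written and trimmed to a few fields, and the user name, IP address and timestamp are invented.

```python
import json

# Hypothetical record, trimmed to the fields used below.
record_json = """
{
  "eventTime": "2019-01-15T10:30:00Z",
  "eventSource": "ec2.amazonaws.com",
  "eventName": "StopInstances",
  "awsRegion": "us-east-1",
  "sourceIPAddress": "203.0.113.12",
  "userIdentity": {"type": "IAMUser", "userName": "alice"}
}
"""


def summarize(record):
    """Turn one event record into a who/what/when/where sentence."""
    identity = record["userIdentity"]
    # Not every identity type carries a userName, so fall back to the type.
    who = identity.get("userName", identity["type"])
    return (f"{who} called {record['eventName']} on {record['eventSource']} "
            f"at {record['eventTime']} from {record['sourceIPAddress']}")


summary = summarize(json.loads(record_json))
```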

You can save these logs into your S3 buckets.

Different accounts can send their trails to a central account and then the central account can do analytics. After that, the central account can redistribute the trails and grant access to the trails.

You can have the CloudTrail trail going to CloudWatch Logs and Amazon CloudWatch Events in addition to Amazon S3.

Can create up to five trails in an AWS region.

Best Practices for setting up CloudTrail:

· Enable AWS CloudTrail in all regions to get logs of API calls by setting up a trail that applies to all regions.

· Enable log file validation using industry-standard algorithms, SHA-256 for hashing and SHA-256 with RSA for digital signing.

· By default, the log files delivered by CloudTrail to your bucket are encrypted by Amazon server-side encryption with Amazon S3 managed encryption keys (SSE-S3). To provide a security layer that is directly manageable, you can instead use server-side encryption with AWS KMS managed keys (SSE-KMS) for your CloudTrail log files.

· Set up real-time monitoring of CloudTrail logs by sending them to CloudWatch Logs.

· If you are using multiple AWS accounts, centralize CloudTrail logs in a single account.

· For added durability, configure cross-region replication (CRR) for S3 buckets containing CloudTrail logs.

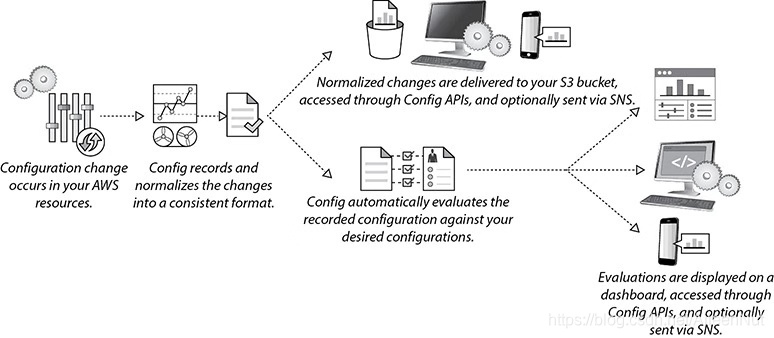

18. AWS Config

It is a fully managed service that provides you with a detailed inventory of your AWS resources and their current configuration in an AWS account.

It continuously records configuration changes to resources (e.g., EC2 instance launches, ingress/egress rules of security groups, network ACL rules for VPCs…).

It lets you audit the resource configuration history and notifies you of resource configuration changes.

A config rule represents desired configurations for a resource and is evaluated against configuration changes on the relevant resources, as recorded by AWS Config.

Things you can do with AWS Config:

· Continuous monitoring

Allow you to constantly monitor all your AWS resources and record any configuration changes in them.

· Continuous assessment

Provide the ability to define rules for provisioning and configuring AWS resources, and it can continuously audit and assess the overall compliance of your AWS resource configurations with your organization’s policies and guidelines.

· Change management

It can track what was changed, when it happened, and how the change might affect other AWS resources.

· Operational troubleshooting

Can help you to find the root cause by pointing out what change is causing the issue.

Can be integrated with AWS CloudTrail, so you can correlate configuration changes to particular events in your account.

· Compliance monitoring

If a resource violates a rule, AWS Config flags the resource and the rule as noncompliant.

You can dive deeper to view the status for a specific region or a specific account across regions.
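To show what "evaluating a rule against a configuration" means, here is a toy check that flags a security group allowing SSH from anywhere. The configuration layout (`ingress`, `cidrs`, `from_port`, `to_port`) is a simplified shape invented for this sketch, not the configuration item format AWS Config records; only the result values follow the compliant/noncompliant wording used above.

```python
def evaluate_security_group(configuration):
    """Flag a security group that allows SSH (port 22) from anywhere."""
    for rule in configuration.get("ingress", []):
        open_to_world = "0.0.0.0/0" in rule.get("cidrs", [])
        covers_ssh = rule["from_port"] <= 22 <= rule["to_port"]
        if open_to_world and covers_ssh:
            return "NON_COMPLIANT"
    return "COMPLIANT"


# Hypothetical recorded configurations for two security groups.
locked_down = {"ingress": [{"from_port": 22, "to_port": 22, "cidrs": ["10.0.0.0/16"]}]}
wide_open = {"ingress": [{"from_port": 0, "to_port": 1024, "cidrs": ["0.0.0.0/0"]}]}

locked_down_result = evaluate_security_group(locked_down)
wide_open_result = evaluate_security_group(wide_open)
```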

19. Amazon VPC Flow Logs

Amazon VPC Flow Logs captures information about the IP traffic going to and from network interfaces in your VPC.

The Flow Logs data is stored using Amazon CloudWatch Logs, which can be integrated with additional services, such as Elasticsearch/Kibana for visualization.

The flow logs can be used to troubleshoot why specific traffic is not reaching an instance.

The information captured includes information about allowed and denied traffic (based on security group and network ACL rules), and also includes source and destination IP addresses, ports, the IANA protocol number, packet and byte counts, a time interval during which the flow was observed and an action (ACCEPT or REJECT).
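A flow log record in the default format is a single space-separated line, so the fields listed above can be pulled apart with a short parser. The sketch below assumes that default 14-field layout; the sample line is illustrative and its account ID and interface ID are not real.

```python
FIELDS = ("version account_id interface_id srcaddr dstaddr srcport dstport "
          "protocol packets bytes start end action log_status").split()


def parse_flow_log(line):
    """Split one default-format flow log record into named fields."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return dict(zip(FIELDS, values))


# Illustrative record: SSH traffic (TCP is protocol 6, port 22) that was accepted.
sample = ("2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
          "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK")
record = parse_flow_log(sample)
```

Filtering parsed records for `action == "REJECT"` on a given destination port is one way to investigate why traffic is not reaching an instance.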

You can enable VPC Flow Logs at different levels: VPC, Subnet, Network interface.

Once you create a flow log, it takes several minutes to begin collecting data and publishing to CloudWatch Logs.

You can create CloudWatch metrics from VPC log data.

20. AWS Trusted Advisor

(1) Provide best practices (or checks) in five categories

· Cost Optimization

· Security

· Fault Tolerance

· Performance

· Service Limits

(2) The status of check shown on the dashboard page

· Red: means action is recommended

· Yellow: means investigation is recommended

· Green: means no problem is detected

(3) Seven Trusted Advisor checks available to all customers

· Service Limits

· S3 Bucket Permissions

· Security Groups - Specific Ports Unrestricted

· IAM Use

· MFA on Root Account

· EBS Public Snapshots

· RDS Public Snapshots

21. AWS Organizations

AWS Organizations’ service control policies (SCPs) help you centrally control AWS service use across multiple AWS accounts in your organization.

It offers policy-based management for multiple AWS accounts. You can create groups of accounts and then apply policies to those groups that centrally control the use of AWS services down to the API level across multiple accounts.
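An SCP is a JSON policy document, so "control down to the API level" looks like the sketch below: a policy that denies two specific CloudTrail API actions. The statement ID and the choice of actions are examples picked for illustration, not a recommendation from the book.

```python
import json

scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyStoppingCloudTrail",  # example statement ID
            "Effect": "Deny",
            # Individual API actions, named as service:Action.
            "Action": ["cloudtrail:StopLogging", "cloudtrail:DeleteTrail"],
            "Resource": "*",
        }
    ],
}

# The JSON text is what would be attached to a group of accounts as a policy.
policy_document = json.dumps(scp, indent=2)
```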
