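TFLearn is a high-level wrapper library built on TensorFlow. Its structure is clean and concise, which makes it well suited to beginners; you can dig deeper into the underlying details later as your needs grow.

Documentation: http://tflearn.org/

Here AlexNet is used to classify the Oxford 17 Category Flower Dataset, which contains 17 classes with 80 images per class.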

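AlexNet architecture:

Apart from changing the output of the last layer from 1000 classes to 17, the layer structure follows the original AlexNet. One small difference worth knowing: the listing uses tanh activations in the two 4096-unit fully connected layers, whereas the paper uses ReLU there.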
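To see what this layer sequence does to the image size, the sketch below traces the spatial dimensions through the convolution and pooling layers used in the listing. It assumes that TFLearn's `conv_2d` and `max_pool_2d` default to `'same'` padding, so each layer's output size is the input size divided by the stride, rounded up. The helper names (`out_size`, `trace_shapes`, `flattened_features`) are made up for this illustration and are not part of TFLearn.

```python
import math


def out_size(size, kernel, stride, padding="same"):
    """Output length along one spatial dimension of a conv or pooling layer."""
    if padding == "same":
        # 'same' padding: output depends only on the stride
        return math.ceil(size / stride)
    if padding == "valid":
        # 'valid' padding: no padding, the kernel must fit entirely
        return (size - kernel) // stride + 1
    raise ValueError("unknown padding: %r" % (padding,))


# (name, kernel size, stride, output channels; None means pooling keeps channels)
LAYERS = [
    ("conv1", 11, 4, 96),
    ("pool1", 3, 2, None),
    ("conv2", 5, 1, 256),
    ("pool2", 3, 2, None),
    ("conv3", 3, 1, 384),
    ("conv4", 3, 1, 384),
    ("conv5", 3, 1, 256),
    ("pool5", 3, 2, None),
]


def trace_shapes(input_size=227, channels=3, padding="same"):
    """Return a list of (layer name, height, width, channels) tuples."""
    shapes = []
    size = input_size
    for name, kernel, stride, out_channels in LAYERS:
        size = out_size(size, kernel, stride, padding)
        if out_channels is not None:
            channels = out_channels
        shapes.append((name, size, size, channels))
    return shapes


def flattened_features(shapes):
    """Number of inputs seen by the first fully connected layer."""
    _, height, width, channels = shapes[-1]
    return height * width * channels


if __name__ == "__main__":
    shapes = trace_shapes()
    for name, height, width, channels in shapes:
        print("%-6s -> %d x %d x %d" % (name, height, width, channels))
    print("features into first FC layer:", flattened_features(shapes))
```

Local response normalization is left out of the trace because it does not change the tensor shape. Under the `'same'` padding assumption, the feature map entering the first fully connected layer is 8 x 8 x 256.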

# -*- coding: utf-8 -*-

""" AlexNet.

Applying 'Alexnet' to Oxford's 17 Category Flower Dataset classification task.

References:
    - Alex Krizhevsky, Ilya Sutskever & Geoffrey E. Hinton. ImageNet
    Classification with Deep Convolutional Neural Networks. NIPS, 2012.
    - 17 Category Flower Dataset. Maria-Elena Nilsback and Andrew Zisserman.

Links:
    - [AlexNet Paper](http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf)
    - [Flower Dataset (17)](http://www.robots.ox.ac.uk/~vgg/data/flowers/17/)

"""

from __future__ import division, print_function, absolute_import

import tflearn
from tflearn.layers.core import input_data, dropout, fully_connected
from tflearn.layers.conv import conv_2d, max_pool_2d
from tflearn.layers.normalization import local_response_normalization
from tflearn.layers.estimator import regression

import tflearn.datasets.oxflower17 as oxflower17

# Load the dataset with one-hot labels and images resized to 227x227
X, Y = oxflower17.load_data(one_hot=True, resize_pics=(227, 227))

# Building 'AlexNet'
network = input_data(shape=[None, 227, 227, 3])

# Convolutional feature extractor
network = conv_2d(network, 96, 11, strides=4, activation='relu')
network = max_pool_2d(network, 3, strides=2)
network = local_response_normalization(network)
network = conv_2d(network, 256, 5, activation='relu')
network = max_pool_2d(network, 3, strides=2)
network = local_response_normalization(network)
network = conv_2d(network, 384, 3, activation='relu')
network = conv_2d(network, 384, 3, activation='relu')
network = conv_2d(network, 256, 3, activation='relu')
network = max_pool_2d(network, 3, strides=2)
network = local_response_normalization(network)

# Classifier: two 4096-unit fully connected layers, each followed by dropout
network = fully_connected(network, 4096, activation='tanh')
network = dropout(network, 0.5)
network = fully_connected(network, 4096, activation='tanh')
network = dropout(network, 0.5)

# Output layer: 17 flower classes instead of ImageNet's 1000
network = fully_connected(network, 17, activation='softmax')

# Momentum optimizer with categorical cross-entropy loss
network = regression(network, optimizer='momentum',
                     loss='categorical_crossentropy',
                     learning_rate=0.001)

# Training
model = tflearn.DNN(network, checkpoint_path='model_alexnet',
                    max_checkpoints=1, tensorboard_verbose=2)
model.fit(X, Y, n_epoch=10, validation_set=0.1, shuffle=True,
          show_metric=True, batch_size=64, snapshot_step=200,
          snapshot_epoch=False, run_id='alexnet_oxflowers17')

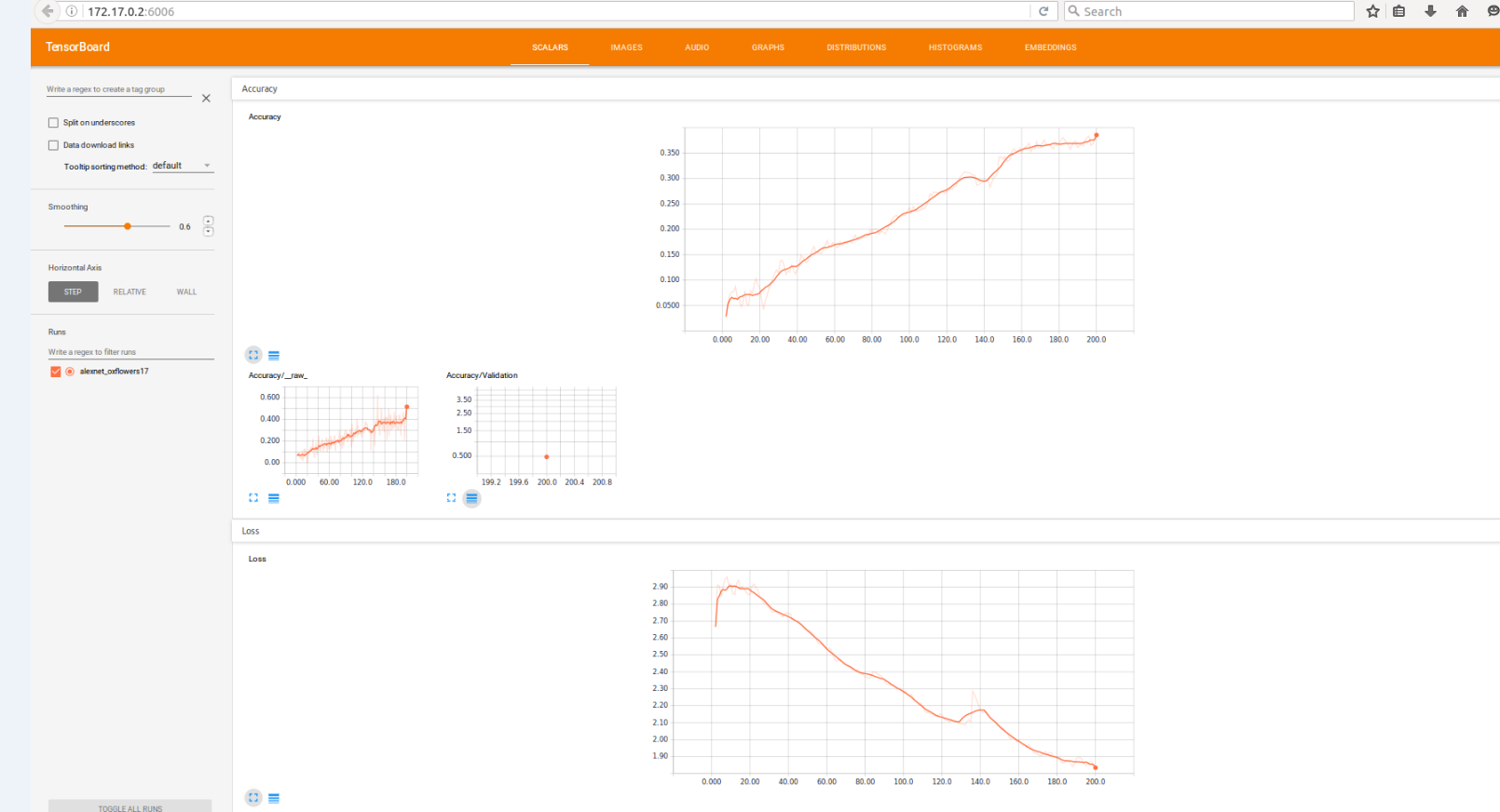

Launch TensorBoard: tensorboard --logdir=/tmp/tflearn_logs/

TensorBoard shows how the accuracy and the loss change during training; the figure above is the result after 10 epochs.
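TensorBoard plots these curves against training steps rather than epochs, so it helps to know how the two relate for this run. The following is a rough sketch of that arithmetic using the numbers from the text and the `fit` call (17 classes of 80 images, `validation_set=0.1`, `batch_size=64`, `n_epoch=10`). It assumes the validation split takes exactly 10% of the samples and that a partial final batch counts as one step; TFLearn's exact rounding may differ. The function name `training_schedule` is hypothetical.

```python
import math


def training_schedule(n_samples, validation_fraction, batch_size, n_epoch):
    """Estimate split sizes and step counts for a training run."""
    n_val = int(round(n_samples * validation_fraction))
    n_train = n_samples - n_val
    # Assumption: the last, smaller batch of each epoch still counts as a step
    steps_per_epoch = math.ceil(n_train / batch_size)
    return {
        "n_train": n_train,
        "n_val": n_val,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": steps_per_epoch * n_epoch,
    }


if __name__ == "__main__":
    n_samples = 17 * 80  # 17 classes, 80 images per class
    schedule = training_schedule(n_samples, validation_fraction=0.1,
                                 batch_size=64, n_epoch=10)
    for key, value in schedule.items():
        print(key, "=", value)
```

Under these assumptions an epoch is about 20 steps, so 10 epochs come to roughly 200 steps. That means `snapshot_step=200` would save a checkpoint only around the very end of this run.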

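Note: in an interview with the Tencent YouTu image group a few days ago, I was asked which layers dropout is placed in, and why dropout is rarely used nowadays.

In AlexNet, dropout is applied in FC6 and FC7.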
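As a reminder of what dropout does to the activations of a fully connected layer, here is a minimal pure-Python sketch of "inverted" dropout. It is an illustration of the general technique, not TFLearn's implementation. It does mirror the convention of the listing above, where the second argument of `dropout(network, 0.5)` is understood to be the probability of keeping a unit. The function name `inverted_dropout` is made up for this example.

```python
import random


def inverted_dropout(values, keep_prob, training, rng):
    """Apply inverted dropout to a list of activations.

    During training each activation is kept with probability keep_prob and
    scaled by 1 / keep_prob, so the expected value stays unchanged.
    At inference time the activations pass through untouched.
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    if not training:
        return list(values)
    output = []
    for value in values:
        if rng.random() < keep_prob:
            output.append(value / keep_prob)  # kept and rescaled
        else:
            output.append(0.0)                # dropped
    return output


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    activations = [1.0] * 10000

    train_out = inverted_dropout(activations, 0.5, training=True, rng=rng)
    test_out = inverted_dropout(activations, 0.5, training=False, rng=rng)

    print("fraction dropped:", train_out.count(0.0) / len(train_out))
    print("training mean:", sum(train_out) / len(train_out))
    print("inference mean:", sum(test_out) / len(test_out))
```

With a keep probability of 0.5, about half of the units are zeroed on each training step and the survivors are doubled, which keeps the mean activation roughly the same between training and inference.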
