Besides this one, I also went through a few other demos. I saved WordCount for last because I wanted to implement it on my own.

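In the end, I still have some blind spots around the generic type parameters of the classes being extended (Mapper and Reducer). I'll fill those in later.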
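The part that is easy to get wrong is how the four type parameters of `Mapper<KEYIN, VALUEIN, KEYOUT, VALUEOUT>` and `Reducer<KEYIN, VALUEIN, KEYOUT, VALUEOUT>` fit together. Below is a minimal, Hadoop-free sketch. It is built on two interfaces, `SimpleMapper` and `SimpleReducer`, which are hypothetical stand-ins and not Hadoop API. They keep only the type parameters so the compiler can show the rule: the mapper's output types must be exactly the reducer's input types.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiConsumer;

// Hypothetical stand-ins for Hadoop's Mapper/Reducer (NOT the Hadoop API): only the generics are kept.
interface SimpleMapper<KIN, VIN, KOUT, VOUT> {
    void map(KIN key, VIN value, BiConsumer<KOUT, VOUT> out);
}

interface SimpleReducer<KIN, VIN, KOUT, VOUT> {
    void reduce(KIN key, Iterable<VIN> values, BiConsumer<KOUT, VOUT> out);
}

final class GenericsDemo {
    // K2/V2 are both the mapper's output and the reducer's input. In the WordCount job,
    // Mapper<Object, Text, Text, IntWritable> and Reducer<Text, IntWritable, Text, IntWritable>
    // satisfy this with K2 = Text and V2 = IntWritable.
    static <K1, V1, K2 extends Comparable<K2>, V2, K3, V3> Map<K3, V3> runJob(
            Map<K1, V1> input,
            SimpleMapper<K1, V1, K2, V2> mapper,
            SimpleReducer<K2, V2, K3, V3> reducer) {
        // Map phase plus an in-memory "shuffle": group values by key, keys kept sorted
        TreeMap<K2, List<V2>> grouped = new TreeMap<>();
        input.forEach((k, v) -> mapper.map(k, v,
                (k2, v2) -> grouped.computeIfAbsent(k2, x -> new ArrayList<>()).add(v2)));
        // Reduce phase: one reduce() call per distinct intermediate key, in sorted key order
        Map<K3, V3> output = new LinkedHashMap<>();
        grouped.forEach((k2, values) -> reducer.reduce(k2, values, output::put));
        return output;
    }

    public static void main(String[] args) {
        // Key = byte offset of the line, value = line text (mimicking what TextInputFormat feeds a mapper)
        Map<Long, String> lines = new LinkedHashMap<>();
        lines.put(0L, "hello hadoop");
        lines.put(13L, "hello world");

        SimpleMapper<Long, String, String, Integer> tokenize = (offset, line, out) -> {
            for (String w : line.split("\\s+")) {
                if (!w.isEmpty()) out.accept(w, 1);
            }
        };
        SimpleReducer<String, Integer, String, Integer> sum = (word, ones, out) -> {
            int total = 0;
            for (int one : ones) total += one;
            out.accept(word, total);
        };
        System.out.println(runJob(lines, tokenize, sum));
    }
}
```

Running `main` prints `{hadoop=1, hello=2, world=1}`. If you declare the reducer as, for example, `SimpleReducer<String, Long, ...>`, the call to `runJob` stops compiling. Hadoop only detects the equivalent mismatch at runtime.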

Code:

```java
package wordcount;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    // Mapper<KEYIN, VALUEIN, KEYOUT, VALUEOUT>: the input key is the line's byte offset
    // (declared loosely as Object), the input value is the line; the output is (word, 1).
    public static class Map extends Mapper<Object, Text, Text, IntWritable> {

        private static final IntWritable ONE = new IntWritable(1);
        // Reused for every output record instead of allocating a new Text per word
        private final Text word = new Text();

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            // Split the line on whitespace and emit (word, 1) for each token
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, ONE);
            }
        }
    }

    // The reducer's input types (Text, IntWritable) must match the mapper's output types
    public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        private final IntWritable result = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get(); // get() returns the wrapped int
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        // JobTracker address (a Hadoop 1.x property), kept from the original local setup
        conf.set("mapred.job.tracker", "127.0.0.1:9000");

        // Input/output paths are hard-coded so the job can be launched directly from the IDE
        String[] ioArgs = new String[] {"hdfs://localhost:9000/count_in", "hdfs://localhost:9000/count_out"};

        // GenericOptionsParser applies generic Hadoop options (-D, -conf, -fs, ...) to conf
        // and returns the arguments that are left over (here: the input and output paths)
        String[] otherArgs = new GenericOptionsParser(conf, ioArgs).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: wordcount <in> <out>");
            System.exit(2); // any non-zero code means failure; 2 conventionally signals bad usage
        }

        // Deprecated in Hadoop 2+, where Job.getInstance(conf, "word count") is preferred
        Job job = new Job(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(Map.class);
        // Summing is associative and commutative, so the reducer can also run as a combiner
        job.setCombinerClass(Reduce.class);
        job.setReducerClass(Reduce.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        // The output directory must not already exist, or the job fails at submission
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

Screenshots:

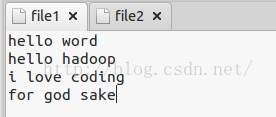

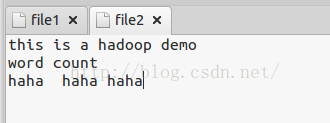

Input text:

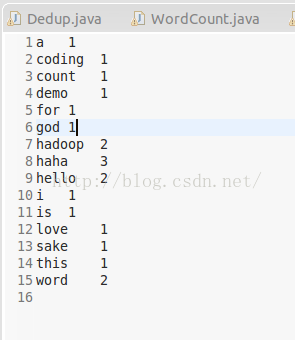

Result:

773

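You can sanity-check the mapper/reducer logic without a cluster, including why `setCombinerClass(Reduce.class)` is safe. Below is a plain-Java sketch that mimics the pipeline in memory. Each input "split" is tokenized the same way `Map.map` does it. Its (word, 1) pairs are summed locally as the combiner would, and the partial sums are then grouped by word and summed again as `Reduce` does. The class `LocalWordCount` and the modeling of splits as lists of lines are illustrative assumptions. Hadoop's real shuffle is distributed and sorts on disk.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

final class LocalWordCount {

    // Map + combine for one split: emit (word, 1) per token, then sum locally per word
    static Map<String, Integer> mapAndCombine(List<String> split) {
        Map<String, Integer> partial = new TreeMap<>();
        for (String line : split) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                partial.merge(itr.nextToken(), 1, Integer::sum);
            }
        }
        return partial;
    }

    // Shuffle + reduce: gather every partial count for a word (keys sorted), then sum them
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map<String, Integer> p : partials) {
            p.forEach((w, c) -> grouped.computeIfAbsent(w, k -> new ArrayList<>()).add(c));
        }
        Map<String, Integer> result = new TreeMap<>();
        grouped.forEach((w, counts) -> {
            int sum = 0;
            for (int c : counts) sum += c;
            result.put(w, sum);
        });
        return result;
    }

    static Map<String, Integer> run(List<List<String>> splits) {
        List<Map<String, Integer>> partials = new ArrayList<>();
        for (List<String> split : splits) {
            partials.add(mapAndCombine(split));
        }
        return reduce(partials);
    }

    public static void main(String[] args) {
        List<List<String>> splits = Arrays.asList(
                Arrays.asList("hello world", "hello hadoop"),
                Arrays.asList("hadoop mapreduce hello"));
        // Tab-separated word/count lines, the same layout TextOutputFormat writes to part-r-00000
        run(splits).forEach((w, c) -> System.out.println(w + "\t" + c));
    }
}
```

This prints `hadoop 2`, `hello 3`, `mapreduce 1`, `world 1` (tab-separated, one per line). The totals are the same whether or not the per-split combining step runs, because addition can be regrouped freely. A job that computes, say, an average could not reuse its reducer as a combiner this way.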
