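I. Introduction to TF-IDF

TF-IDF (term frequency-inverse document frequency) is a feature-vectorization method widely used in text mining to reflect how important a term is to a document in a corpus. TF (term frequency) measures how strongly a term is associated with a particular document; IDF (inverse document frequency) measures how much weight a term carries in indicating a document's topic. Let tf(t,d) be the frequency of term t in document d, and idf(t,D) = log((|D|+1)/(DF(t,D)+1)), where |D| is the total number of documents in the corpus and DF(t,D) is the number of documents that contain t. Then tfidf(t,d,D) = tf(t,d) * idf(t,D).

Example: a document contains 100 words in total and the word "胡歌" (Hu Ge) appears 3 times, so its term frequency in that document is TF(t,d) = 3/100. If "胡歌" appears in 1,000 documents out of a total of 10,000, its document frequency is DF(t,D) = 1000 and its inverse document frequency is IDF(t,D) = log((10000+1)/(1000+1)). The TF-IDF score of "胡歌" for that document is therefore TF(t,d) * IDF(t,D).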
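The arithmetic of the example can be checked with a few lines of plain Scala. This is only a sketch: the object and method names are made up for illustration, and it assumes the natural logarithm, which is what `math.log` computes.

```scala
// Illustrative sketch of the smoothed TF-IDF formula (all names are hypothetical).
object TfIdfExample {
  // tf(t,d): occurrences of the term divided by the total number of terms in the document
  def tf(termCount: Int, totalTerms: Int): Double =
    termCount.toDouble / totalTerms

  // idf(t,D) = log((|D| + 1) / (DF(t,D) + 1)), natural logarithm assumed
  def idf(numDocs: Long, docFreq: Long): Double =
    math.log((numDocs + 1).toDouble / (docFreq + 1).toDouble)

  def tfIdf(termCount: Int, totalTerms: Int, numDocs: Long, docFreq: Long): Double =
    tf(termCount, totalTerms) * idf(numDocs, docFreq)

  def main(args: Array[String]): Unit = {
    // The term appears 3 times in a 100-word document and in 1,000 of 10,000 documents
    println(f"tf     = ${tf(3, 100)}%.4f")
    println(f"idf    = ${idf(10000, 1000)}%.4f")
    println(f"tf-idf = ${tfIdf(3, 100, 10000, 1000)}%.4f")
  }
}
```

With these inputs the IDF comes out at roughly 2.30 and the TF-IDF score at roughly 0.069.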

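II. Implementation with Spark ML

1. Dataset preprocessing

We use the well-known text classification dataset 20 Newsgroups, which consists of news messages on 20 different topics. Training set: 20news-bydate-train; test set: 20news-bydate-test.

1.1 Loading the data

We need to load each training file's path and id together with its text content. The RDD-based wholeTextFiles API can do this, but Spark ML only accepts DataFrame-based APIs, so the RDD has to be converted to a DataFrame using reflection-based schema inference on a case class.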
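The loading code derives each document's name from its file path. The sketch below shows one way to do that in plain Scala. The helper name is hypothetical, the `file:` prefix in the sample path is an assumption about how paths are reported, and it takes the last two path segments instead of fixed positions so that it does not depend on the directory depth.

```scala
// Sketch: build a document name from a file path (hypothetical helper).
object NewsNameSketch {
  // Keep the last two path segments: the newsgroup directory and the message id
  def nameOf(path: String): String =
    path.split("/").takeRight(2).mkString(".")

  def main(args: Array[String]): Unit = {
    // Assumed path shape for a local file
    println(nameOf("file:/opt/data/20news-bydate-train/rec.motorcycles/105131"))
  }
}
```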

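val files = spark.sparkContext
  .wholeTextFiles("/opt/data/20news-bydate-train/*")

import spark.implicits._

// News is the case class News(name, content) defined in the full listing.
// slice(4, 6) keeps the newsgroup directory and the file id from the path,
// giving names such as rec.motorcycles.105131.
val sentences = files
  .map(f => News(f._1.split("/").slice(4, 6).mkString("."), f._2))
  .toDF("name", "content")
  .as[News]

1.2 Tokenization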

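Reading the source shows that ML's built-in Tokenizer splits on whitespace only, which is not suitable for more complex English text. We therefore override its transform function to split on any run of characters that are not letters, digits, or underscores, and to drop tokens that contain digits.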
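To see what this split-and-filter rule does to a string, here is a Spark-free sketch. The object and method names are hypothetical; the regular expressions are the same ones used in the tokenizer override.

```scala
// Spark-free sketch of the split-and-filter rule (hypothetical helper).
object TokenizeSketch {
  // Matches strings that contain no digit characters at all
  private val noDigits = """[^0-9]*""".r.pattern

  def tokenize(text: String): Seq[String] =
    text.toLowerCase
      .split("\\W+")                          // split on runs of non-word characters
      .filter(noDigits.matcher(_).matches())  // keep only tokens without digits
      .toSeq

  def main(args: Array[String]): Unit = {
    // "750" and "1982" are dropped; the remaining tokens are lowercased
    tokenize("Re: Yamaha SECA-750 turbo, 1982 model!").foreach(println)
  }
}
```

Note that a string beginning with a non-word character yields an empty first token, which this rule keeps because the empty string contains no digits.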

val tokenizer = new Tokenizer() {
  override protected def createTransformFunc: String => Seq[String] = {
    // lowercase, split on non-word characters, keep only tokens without digits
    _.toLowerCase.split("\\W+").filter("""[^0-9]*""".r.pattern.matcher(_).matches())
  }
}.setInputCol("content").setOutputCol("raw")

1.3 Removing stop words

We use ML's built-in stop-word list to filter out uninformative words. To make clear what the built-in list contains, we print it.

val remover = new StopWordsRemover().setInputCol("raw").setOutputCol("words")
println(remover.getStopWords.mkString("[", ",", "]"))
// Apply both stages, as in the full listing; `words` is used in the following sections
val raw = tokenizer.transform(sentences)
val words = remover.transform(raw)

Output of the println:

[i,me,my,myself,we,our,ours,ourselves,you,your,yours,yourself,yourselves,he,him,his,himself,she,her,hers,herself,it,its,itself,they,them,their,theirs,themselves,what,which,who,whom,this,that,these,those,am,is,are,was,were,be,been,being,have,has,had,having,do,does,did,doing,a,an,the,and,but,if,or,because,as,until,while,of,at,by,for,with,about,against,between,into,through,during,before,after,above,below,to,from,up,down,in,out,on,off,over,under,again,further,then,once,here,there,when,where,why,how,all,any,both,each,few,more,most,other,some,such,no,nor,not,only,own,same,so,than,too,very,s,t,can,will,just,don,should,now,i'll,you'll,he'll,she'll,we'll,they'll,i'd,you'd,he'd,she'd,we'd,they'd,i'm,you're,he's,she's,it's,we're,they're,i've,we've,you've,they've,isn't,aren't,wasn't,weren't,haven't,hasn't,hadn't,don't,doesn't,didn't,won't,wouldn't,shan't,shouldn't,mustn't,can't,couldn't,cannot,could,here's,how's,let's,ought,that's,there's,what's,when's,where's,who's,why's,would]

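2. TF-IDF training

Feature hashing is a technique for handling high-dimensional data and is often used on text and categorical datasets. Its advantage is that no term-to-index mapping has to be built and stored, so memory usage does not grow with the amount of data or the number of dimensions. HashingTF applies feature hashing to map each word of the input text to an index in the term-frequency vector; each index is derived from the word's hash value, and the term-frequency vector is computed from these indices.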
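The hashing trick itself fits in a few lines. The following is a minimal sketch, not Spark's implementation: it uses `MurmurHash3.stringHash` from the Scala standard library as a stand-in hash function, so the indices it produces will not match those of HashingTF, and all names are hypothetical.

```scala
import scala.util.hashing.MurmurHash3

// Sketch of the hashing trick; NOT Spark's exact hash function or seed (assumption).
object HashingTfSketch {
  // Map a word to a bucket in [0, numFeatures)
  def indexOf(word: String, numFeatures: Int): Int = {
    val raw = MurmurHash3.stringHash(word) % numFeatures
    if (raw < 0) raw + numFeatures else raw   // keep the index non-negative
  }

  // Sparse term-frequency vector represented as bucket index -> count
  def termFrequencies(words: Seq[String], numFeatures: Int): Map[Int, Double] =
    words
      .groupBy(indexOf(_, numFeatures))
      .map { case (index, group) => index -> group.size.toDouble }

  def main(args: Array[String]): Unit = {
    val doc = Seq("bike", "turbo", "bike", "yamaha", "turbo", "bike")
    termFrequencies(doc, 1 << 18).toSeq.sortBy(_._1).foreach(println)
  }
}
```

Different words can land in the same bucket, in which case their counts are added together; a larger number of features makes such collisions less likely.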

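val hashingTF = new HashingTF().setInputCol("words").setOutputCol("rawFeatures").setNumFeatures(math.pow(2, 18).toInt)
// `words` is the stop-word-filtered DataFrame, i.e. remover.transform(tokenizer.transform(sentences))
val features = hashingTF.transform(words)

The term-frequency vectors are then used as input to compute the inverse document frequency of every term in the corpus: create an IDF instance and fit it.

val idf = new IDF().setInputCol("rawFeatures").setOutputCol("features")
val idfModel = idf.fit(features)
val rescaled: Dataset[Row] = idfModel.transform(features)
rescaled.select("name", "words", "features").show(5, false)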

+----------------------+----------+----------+
|name                  |words     |features  |
+----------------------+----------+----------+

|rec.motorcycles.105131|[santac, aix, rpi, edu, christopher, james, santarcangelo, subject, forsale, yamaha, seca, turbo, keywords, forsale, seca, turbo, nntp, posting, host, aix, rpi, edu, distribution, usa, lines, want, need, money, school, snappy, bike, needs, little, work, money, details, miles, mitsubishi, turbo, asthetically, beautiful, fast, one, factory, turboed, bikes, kit, must, see, ride, appreciate, fun, bike, asking, best, offer, bike, seen, bennington, vermont, e, mail, info, thanks, chris, santac, rpi, edu] |(262144,[1998,8443,10286,17222,18270,21688,34343,56246,57414,62655,66275,76673,83161,89231,94266,100314,105464,113503,119489,125372,130658,138677,138912,156045,162813,163197,166027,167918,171385,173702,180708,180865,181519,186593,190256,198131,203097,215995,216432,218005,219621,221012,221790,226693,227490,229407,235164,236274,236986,237656,239122,241599,258405,259167,260935],[5.114376852891531,4.169098584144124,3.3703052144491914,1.8756984007271504,13.251668713930856,11.118790333131003,1.993049003944363,1.9840108533293008,1.4576256757644113,3.2268616703253836,3.6201517525582685,5.129191938676672,1.8960894363957057,11.290010207907402,2.4742696544134355,4.152101007775553,3.467416501134341,3.352470346813157,7.947590196947747,0.9548046687810345,8.640737377507692,0.003275353700510253,3.7026727762462723,5.344900511503363,0.0,0.7994410132448727,2.3975418222335882,0.8490079681279433,4.633404192275221,8.640737377507692,2.8162132851553627,16.470544538799057,2.3163784151263815,2.6593231662532117,1.8710954006551896,2.126765701859882,4.812095981018597,1.7655052902311155,3.7809249731460204,8.640737377507692,3.913349558795352,9.735952878826108,1.8762752683520318,6.155830727719692,15.089458394590405,2.146983537656006,1.2763812557131817,3.7317657371879367,6.077237113255983,7.947590196947747,3.3374324694486166,14.603929318239162,7.031299465073592,4.867976439413054,0.8621072301818828]) |

|rec.motorcycles.103214|[joe, rider, cactus, org, joe, senner, subject, re, johs, dhhalden, reply, joe, rider, cactus, org, distribution, na, organization, lines, davet, interceptor, cds, tek, com, dave, tharp, cds, writes, article, rider, uucp, joe, rider, cactus, org, writes, cookson, mbunix, mitre, org, cookson, writes, bozo, posts, gifs, rec, moto, postmaster, also, gonna, get, copies, post, mailboxes, hey, great, picture, fault, taste, technique, chill, educate, instead, getting, panties, bunch, ditto, dave, m, using, picture, bacground, sun, haven, sent, single, message, guy, looks, like, get, keep, panties, joe, senner, joe, rider, cactus, org, austin, tx, warning, look, laser, remaining, eye, posted, radioactive, isotope, research, lab, r, h, f]|(262144,[300,6198,9103,10510,15554,18471,18910,21007,23178,23875,24038,24152,24258,24730,32890,34140,35669,42389,47800,52391,57400,57414,62425,63525,66130,74079,84868,96639,99823,99895,101702,103032,110693,120739,120959,121616,127101,127222,138356,138677,140532,143187,143720,144638,147136,153849,154604,156623,158223,162577,162813,167222,172596,172933,172985,182498,191947,192599,195181,198440,200749,203541,204743,205325,208258,212438,219964,220275,221968,223228,223763,234571,236195,240918,242448,249828,252859,254389,256376],[6.0016800478924335,7.387974409012324,3.2585385269789535,4.060884999503891,2.960564768490625,5.8998973535824915,1.2664218910575802,7.724446645633537,4.215890745650882,3.1918471524802814,6.198390342138488,3.310436965106605,5.868148655267912,6.500671214011422,2.6467759502011234,2.7164815800931605,7.724446645633537,8.18427508601599,7.724446645633537,14.775948818024649,2.18553881416757,1.4576256757644113,0.3723902347580378,6.625834356965428,3.836716332774436,3.057241068725993,6.951902807168357,13.711054130294897,4.718764041226378,2.6397591265763594,5.085389316018278,4.538094012470896,3.3499482773804474,3.393713305347206,6.0016800478924335,4.546392815285592,4.271289525040671,3.053488719107443,2.4586524707910606,0.003275353700510253,0.04030658752139948,17.281474755015385,2.630696444826775,6.7689352006061005,1.4802797449698013,2.8523074287912067,3.224636975303272,4.055769898837121,4.050680829329649,4.563199933601973,0.0,0.7633401911544059,13.697955816559276,2.483758391922137,23.72392322716307,2.653029879277423,2.988248197239042,3.857421006136127,7.542125088839582,4.0060083892780565,2.715145574550418,1.5914825362518559,4.689493658926265,0.8593901211845156,1.1468634907241333,5.003151217781307,21.530563357944843,5.003151217781307,11.736297310535823,25.49889026735189,2.3119081349954778,6.242842104709322,6.7689352006061005,8.640737377507692,5.057218439051582,2.0219317933566754,3.9222385062125977,3.6401524192649384,6.935989285269267]) |

|rec.motorcycles.104551|[cunixb, cc, columbia, edu, sebastian, c, sears, subject, msf, program, nntp, posting, host, cunixb, cc, columbia, edu, reply, cunixb, cc, columbia, edu, sebastian, c, sears, organization, columbia, university, distribution, usa, lines, someone, mail, archive, location, msf, program, ibm, right, thanks, wanna, sit, buy, drink, someday, temple, dog, sea, bass, sears, cunixb, cc, columbia, edu, dod, stanley, id, yamaha, bmw, toyota, nyc, ny] |(262144,[19984,25736,28698,40910,46112,56246,57414,60349,61008,70389,73341,75919,77293,92824,108540,118590,136496,138677,140532,148162,150925,154223,156045,162813,163197,167918,170988,193924,197304,198131,203541,205876,210452,215841,220792,221790,222294,227443,235164,249445,253534,259786,260935],[4.4586872348664865,5.549694924149376,4.2358191108323435,4.670445463955571,21.093898395220776,1.9840108533293008,1.4576256757644113,10.979832322136474,20.700005898831865,2.9864953484116277,4.571710623269881,5.016396444531328,3.8658244169325067,4.5889524297043875,5.62031249136333,1.9253539911730115,5.868148655267912,0.003275353700510253,0.04030658752139948,3.3806412237798535,5.099778053470378,5.462683547159747,5.344900511503363,0.0,0.7994410132448727,0.8490079681279433,4.428609779629208,0.9359254545750986,11.19242987956854,2.126765701859882,1.5914825362518559,4.856547743589432,5.506490841565378,12.57872424068843,16.51599648903187,1.8762752683520318,3.616856856661416,4.529863513334381,1.7018416742842424,5.256347114161918,2.235508919476851,3.3703052144491914,0.8621072301818828]) |

|rec.motorcycles.104720|[infante, acpub, duke, edu, andrew, infante, subject, insurance, lotsa, points, organization, duke, university, durham, n, c, lines, nntp, posting, host, acpub, duke, edu, well, looks, like, m, f, cked, insurance, dwi, beemer, rec, vehicle, ll, cost, almost, bucks, insure, year, probably, sell, bike, return, dod, number, andy, infante, listen, everybody, says, fact, remains, bmw, ve, got, get, thing, dod, joan, sutherland, opinions, dammit, nothing, anyone, else] |(262144,[2903,9990,10510,12438,18025,18910,19984,21688,24152,28698,31463,46252,67562,71481,82637,83926,92925,93036,93771,96822,99895,100743,107499,110693,123445,131312,138193,138677,140532,141048,141208,145238,146794,147820,148921,157533,161826,162813,163197,165267,167503,167918,186925,190208,193924,199099,208258,215263,216943,221315,223821,228901,229103,233631,235164,257807,259786,260935],[6.7689352006061005,6.935989285269267,4.060884999503891,5.966588728081164,4.071194369162752,1.2664218910575802,4.4586872348664865,3.706263444377001,3.310436965106605,2.1179095554161718,2.226459083956933,2.5281620046506523,2.5193416608076795,3.2268616703253836,8.742079855615462,2.715145574550418,15.049189333593983,4.738764707933048,6.3894455789011975,3.5562322348449813,1.3198795632881797,2.9622727108357814,2.243807722291546,3.3499482773804474,3.425801619898707,5.527222068297318,1.6827643823649379,0.003275353700510253,0.04030658752139948,6.848977908279638,5.3265513728351666,4.316604721252713,3.1747891695757047,11.736297310535823,2.3594056470425926,7.2544430163878015,2.5699996495052027,0.0,0.7994410132448727,1.8943252489343183,2.3907621352482096,0.8490079681279433,1.63494835825419,5.837376996601158,0.9359254545750986,4.152101007775553,1.1468634907241333,4.271289525040671,19.01445685354094,2.34178813065175,2.9917631393464865,5.750365619611528,2.5051724864259537,2.2029857277712908,0.8509208371421212,3.571833175287461,6.740610428898383,0.8621072301818828]) |

|rec.motorcycles.103127|[cleveland, freenet, edu, mike, sturdevant, subject, re, ed, must, daemon, child, article, d, usenet, reply, cleveland, freenet, edu, mike, sturdevant, organization, case, western, reserve, university, cleveland, oh, usa, lines, nntp, posting, host, ins, cwru, edu, previous, article, svoboda, rtsg, mot, com, david, svoboda, says, article, linus, mitre, org, cookson, mbunix, mitre, org, cookson, writes, wait, minute, ed, noemi, satan, wow, seemed, like, nice, boy, rcr, noemi, makes, think, cuddle, kotl, talking, bout, noemi, know, makes, think, big, bore, hand, guns, extreme, weirdness, babe, rode, across, desert, borrowed, death, ride, fuck, man, making, big, mistake, go, fast, take, chances, mike] |(262144,[3203,4660,5595,14742,18730,22346,23722,24918,27526,30006,32927,38765,55639,56246,56894,59414,61111,62425,70028,71101,71524,81534,85095,90072,96639,100314,107257,113503,114357,116940,126907,134838,136733,138677,140451,140532,140931,150069,161826,162813,163197,167222,167918,172477,172596,178640,181350,184358,188534,191973,192310,193924,200195,203541,205325,205974,206499,207588,208258,211324,215550,222564,227168,229407,232367,235164,236378,236852,237111,240617,242448,242819,247768,249828,250475,252462,256376,259708,260935],[7.907974409054357,5.159497288172001,3.401639370619627,11.140451077051463,3.5439243871703843,3.1014365415171152,4.436044758116727,2.7616020153736303,1.4072819588862537,5.393253053566362,4.055769898837121,3.4450065997347568,2.105496106494034,1.9840108533293008,14.273319961462837,5.114376852891531,4.296931955654008,0.3723902347580378,2.8524659267130974,3.982026424591571,3.115284438375909,4.699155569838002,5.837376996601158,3.926712786607519,5.484421652117959,4.152101007775553,4.4896974716090465,3.352470346813157,2.915519622131426,13.251668713930856,5.696298398341252,4.336672284303523,7.542125088839582,0.003275353700510253,3.8326263475229108,0.04030658752139948,1.2346339962706774,3.6884376604244,2.5699996495052027,0.0,0.7994410132448727,2.2900205734632175,0.8490079681279433,1.9271738278899975,13.697955816559276,7.724446645633537,9.467821332051113,5.549694924149376,4.615385686772544,4.399410624936946,2.242974735987654,0.9359254545750986,6.500671214011422,1.5914825362518559,0.8593901211845156,5.837376996601158,6.115008733199437,6.242842104709322,1.1468634907241333,2.4944081198387953,3.8408231147270895,3.6435251037435776,7.947590196947747,2.146983537656006,2.9725820804946426,1.2763812557131817,7.542125088839582,3.3963483529852114,5.868148655267912,6.242842104709322,10.114436878103165,6.7689352006061005,3.224636975303272,0.6739772644522252,5.588597204899936,7.314261511598712,6.935989285269267,22.163923227036975,0.8621072301818828])|

+----------------------+----------+----------+

only showing top 5 rows

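3. TF-IDF validation

To validate the result, we randomly pick one news article from the newsgroups, compare it with all the other articles, and select the top 100. Note: BLAS is scoped as private[spark], so this code has to live in the package org.apache.spark to be able to call it.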

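val selected = rescaled.sample(true, 0.2, 8).select("name", "features").first()
val selected_feature: Vector = selected.getAs(1)
val sv1 = Vectors.norm(selected_feature, 2.0)
println("Randomly selected news=" + selected.getAs[String]("name"))
val sim = rescaled.select("name", "features").map(row => {
  val id = row.getAs[String]("name")
  val feature: Vector = row.getAs(1)
  val sv2 = Vectors.norm(feature, 2.0)
  // cosine similarity: dot product divided by the product of the L2 norms
  val similarity = org.apache.spark.ml.linalg.BLAS.dot(selected_feature.toSparse, feature.toSparse) / (sv1 * sv2)
  SimilarityData(id, similarity)
}).toDF("id", "similarity").as[SimilarityData]
println("Top 100 most similar news:")
// sort in descending order so that the most similar articles come first
sim.orderBy($"similarity".desc).select("id", "similarity").limit(100).show(false)

Note: the output below comes from the original run, which sorted with orderBy("similarity") (ascending order), so it actually lists the articles least similar to the selected one. show(false) prints only the first 20 rows.

Randomly selected news=rec.motorcycles.105131

Top 100 most similar news: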

+----------------------------+---------------------+
|id                          |similarity           |
+----------------------------+---------------------+
|sci.med.58989               |0.0                  |
|sci.med.58996               |0.0                  |
|rec.sport.hockey.52560      |6.678378038814563E-10|
|rec.sport.hockey.53613      |6.883230917124846E-10|
|rec.sport.hockey.52631      |6.883230917124846E-10|
|rec.sport.hockey.53861      |7.988839628487241E-10|
|rec.sport.hockey.52561      |1.020793686899755E-9 |
|rec.sport.hockey.53926      |1.0249368470002614E-9|
|rec.sport.hockey.53532      |1.3380913559871441E-9|
|rec.sport.baseball.102610   |1.350294440812042E-9 |
|rec.sport.hockey.53615      |1.3943156767595133E-9|
|talk.politics.mideast.76262 |1.4372625741845937E-9|
|talk.politics.guns.54160    |1.4421913240029777E-9|
|comp.os.ms-windows.misc.9736|1.5324400223673388E-9|
|talk.politics.misc.178307   |1.5441122915072916E-9|
|rec.sport.hockey.53983      |1.782033247511015E-9 |
|rec.sport.hockey.53748      |2.0556198612717205E-9|
|comp.sys.mac.hardware.51914 |2.073289915178155E-9 |
|comp.windows.x.66965        |2.2162593551251446E-9|
|comp.graphics.38355         |2.2897834922816747E-9|
+----------------------------+---------------------+
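The similarity used in this validation step is cosine similarity. The sketch below computes it without Spark, representing a sparse vector as a map from index to weight; this also avoids any dependency on the private BLAS class. All names are hypothetical, and the zero-norm guard is an addition that the Spark code in this section does not have.

```scala
// Spark-free sketch of cosine similarity on sparse vectors (index -> weight maps).
object CosineSketch {
  type SparseVec = Map[Int, Double]

  def dot(a: SparseVec, b: SparseVec): Double = {
    // iterate over the smaller map and look up matching indices in the other
    val (small, large) = if (a.size <= b.size) (a, b) else (b, a)
    small.iterator.map { case (i, v) => v * large.getOrElse(i, 0.0) }.sum
  }

  def norm(a: SparseVec): Double =
    math.sqrt(a.valuesIterator.map(v => v * v).sum)

  def cosine(a: SparseVec, b: SparseVec): Double = {
    val denom = norm(a) * norm(b)
    if (denom == 0.0) 0.0 else dot(a, b) / denom   // guard against all-zero vectors
  }

  def main(args: Array[String]): Unit = {
    val query: SparseVec = Map(1 -> 2.0, 5 -> 1.0)
    val docs: Seq[(String, SparseVec)] = Seq(
      "a" -> Map(1 -> 4.0, 5 -> 2.0),
      "b" -> Map(2 -> 3.0),
      "c" -> Map(1 -> 1.0, 7 -> 1.0)
    )
    // sort in descending order so the most similar document comes first
    docs.map { case (id, v) => id -> cosine(query, v) }
      .sortBy { case (_, s) => -s }
      .foreach(println)
  }
}
```

A document compared with itself, or with any positive multiple of itself, scores 1 up to rounding error, and documents that share no indices score 0.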

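III. Introduction to word2vec

Word2vec is an efficient tool, open-sourced by Google in 2013, for representing words as real-valued vectors. It uses a neural network with a single hidden layer (CBOW in essence) to map one-hot word vectors to distributed word vectors, and speeds up training with tricks such as hierarchical softmax and negative sampling. Text processing is thereby reduced to vector operations in a K-dimensional vector space, and similarity in that space can be used to represent semantic similarity between texts.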

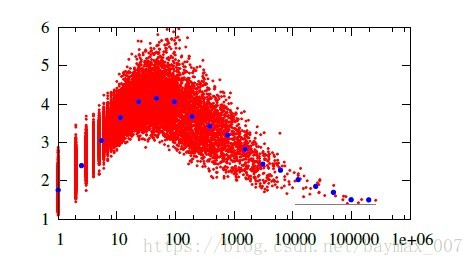

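According to "Measuring Word Significance using Distributed Representations of Words", words at the two extremes of frequency, very frequent and very rare, tend to have short vectors, while words of moderate frequency have longer vectors. As word frequency increases, vector length first rises and then falls, which gives the curve shown in the figure.

minCount sets the minimum frequency: a word that occurs fewer than minCount times (5 by default) is discarded.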
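The effect of minCount can be illustrated with a small vocabulary-pruning sketch in plain Scala. It is a hypothetical helper, not Spark's code, and it assumes that occurrences are counted across the whole corpus.

```scala
// Sketch of minCount-style vocabulary pruning (hypothetical helper, not Spark's code).
object MinCountSketch {
  // Count every token across the whole corpus and keep those seen at least minCount times
  def vocabulary(corpus: Seq[Seq[String]], minCount: Int): Map[String, Int] =
    corpus.flatten
      .groupBy(identity)
      .map { case (word, occurrences) => word -> occurrences.size }
      .filter { case (_, count) => count >= minCount }

  def main(args: Array[String]): Unit = {
    val corpus = Seq(
      Seq("space", "shuttle", "launch"),
      Seq("space", "station"),
      Seq("space", "launch")
    )
    // With minCount = 2, "shuttle" and "station" are dropped
    println(vocabulary(corpus, minCount = 2))
  }
}
```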

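IV. Implementation with Spark ML

1. Training the word2vec model

The training set is the tokenized text collection. We train the model with Spark ML's built-in Word2Vec, setting the word-vector size to 3 and the minimum frequency to 1.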

val word2vec = new Word2Vec()
  .setInputCol("words")
  .setOutputCol("result")
  .setVectorSize(3)
  .setMinCount(1)
val model = word2vec.fit(words)
val result = model.transform(words)

2. word2vec model validation

model.findSynonyms("space", 20).collect().foreach(println)

[typein,0.9999505281448364]

[coursework,0.9999457001686096]

[billboards,0.9998683929443359]

[melinda,0.9998528361320496]

[apppreciated,0.9998492002487183]

[popularly,0.9998286366462708]

[slop,0.9998160600662231]

[planner,0.999790370464325]

[tikkanen,0.9997389912605286]

[dictionaries,0.999737560749054]

[eigil,0.9997144341468811]

[picker,0.9997084736824036]

[strolman,0.9996740221977234]

[relish,0.9996612071990967]

[alena,0.9995930194854736]

[gax,0.9995219111442566]

[moog,0.9995144605636597]

[atase,0.9995051026344299]

[podlogar,0.9995018839836121]

[detachments,0.9994955658912659]

Full code listing

package org.apache.spark

import org.apache.spark.ml.feature._
import org.apache.spark.ml.linalg.{Vector, Vectors}
import org.apache.spark.sql.{Dataset, Row, SparkSession}

/**
  * @Author: JZ.lee
  * @Description: TODO
  * @Date: 18-8-20 5:10 PM
  * @Modified By:
  */
object TextMineML {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("TextMineML")
      .config("spark.some.config.option", "some-value")
      .master("local[2]")
      .getOrCreate()

    val files = spark.sparkContext
      .wholeTextFiles("/opt/data/20news-bydate-train/*")

    import spark.implicits._
    val sentences = files
      .map(f => News(f._1.split("/").slice(4, 6).mkString("."), f._2))
      .toDF("name", "content")
      .as[News]

    // ML Tokenizer (splits on whitespace by default; overridden here to split on
    // non-word characters and to drop tokens that contain digits)
    val tokenizer = new Tokenizer() {
      override protected def createTransformFunc: String => Seq[String] = {
        _.toLowerCase.split("\\W+").filter("""[^0-9]*""".r.pattern.matcher(_).matches())
      }
    }.setInputCol("content").setOutputCol("raw")
    val raw = tokenizer.transform(sentences)

    // Remove stop words
    val remover = new StopWordsRemover().setInputCol("raw").setOutputCol("words")
    // Print the stop words
    println(remover.getStopWords.mkString("[", ",", "]"))
    val words = remover.transform(raw)
    words.select("name", "words").show(5, false)

    // // TF computation
    // val hashingTF = new HashingTF().setInputCol("words").setOutputCol("rawFeatures").setNumFeatures(math.pow(2, 18).toInt)
    // val features = hashingTF.transform(words)
    // // IDF computation
    // val idf = new IDF().setInputCol("rawFeatures").setOutputCol("features")
    // val idfModel = idf.fit(features)
    // val rescaled: Dataset[Row] = idfModel.transform(features)
    // rescaled.select("name", "words", "features").show(5, false)
    //
    // /**
    //   * Text similarity check:
    //   * randomly pick one news article and compare it with the others to select the top N
    //   */
    // val selected = rescaled.sample(true, 0.2, 8).select("name", "features").first()
    // val selected_feature: Vector = selected.getAs(1)
    // val sv1 = Vectors.norm(selected_feature, 2.0)
    // println("Randomly selected news=" + selected.getAs[String]("name"))
    // val sim = rescaled.select("name", "features").map(row => {
    //   val id = row.getAs[String]("name")
    //   val feature: Vector = row.getAs(1)
    //   val sv2 = Vectors.norm(feature, 2.0)
    //   val similarity = org.apache.spark.ml.linalg.BLAS.dot(selected_feature.toSparse, feature.toSparse) / (sv1 * sv2)
    //   SimilarityData(id, similarity)
    // }).toDF("id", "similarity").as[SimilarityData]
    // println("Top 100 most similar news:")
    // // descending order, so the most similar articles come first
    // sim.orderBy($"similarity".desc).select("id", "similarity").limit(100).show(false)

    /**
      * word2vec
      * A tool open-sourced by Google in 2013 that represents words as real-valued vectors.
      * Drawing on deep-learning ideas, it reduces text processing to vector operations in a
      * K-dimensional vector space, where vector similarity represents semantic similarity.
      */
    val word2vec = new Word2Vec()
      .setInputCol("words")
      .setOutputCol("result")
      .setVectorSize(3)
      .setMinCount(1)
    val model = word2vec.fit(words)
    // val result = model.transform(words)
    model.findSynonyms("space", 20).collect().foreach(println)
    // result.collect().foreach {
    //   case Row(text: Seq[_], features: Vector) =>
    //     println(s"Text: [${text.mkString(", ")}] => \nVector: $features\n")
    // }
  }

  case class News(var name: String, var content: String)
  case class Rescaled(var name: String, var words: String, var rawFeatures: String)
  case class SimilarityData(var id: String, var similarity: Double)
}


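This article introduced the basic principles of two text-mining methods, TF-IDF and Word2Vec, and how to implement them with Spark MLlib. It covered the key steps of data preprocessing, feature extraction, and model training and validation, and used a practical example to show how to apply these techniques to text-similarity computation and word-vector generation.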
