NumPy: Python scientific computing library; Pandas: Python data analysis and processing library; Scikit-learn: Python machine learning library.

2. Titanic data overview

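Passenger ID (PassengerId), survived or not (Survived), passenger class (Pclass), name (Name), sex (Sex), age (Age), number of siblings/spouses aboard (SibSp), number of parents/children aboard (Parch), ticket (Ticket), fare (Fare), port of embarkation (Embarked).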

3. Data preprocessing

- import pandas #ipython notebook

- titanic = pandas.read_csv("titanic_train.csv")

- #titanic.head(3)  # print the first 3 rows

- print(titanic.describe())  # summary statistics: count, mean, std, min, 25%, 50%, 75%, max

- titanic["Age"] = titanic["Age"].fillna(titanic["Age"].median())  # fill missing values in the Age column with the median age

- print(titanic.describe())

- print(titanic["Sex"].unique())  # encode male as 0, female as 1

- #Replace all the occurrences of male with the number 0.

- titanic.loc[titanic["Sex"] == "male","Sex"] = 0

- titanic.loc[titanic["Sex"] == "female","Sex"] = 1

- print(titanic["Embarked"].unique())

- titanic["Embarked"] = titanic["Embarked"].fillna('S')  # fill missing values with S, the most common port

- titanic.loc[titanic["Embarked"] == "S","Embarked"] = 0  # encode the ports as 0, 1, 2

- titanic.loc[titanic["Embarked"] == "C","Embarked"] = 1

- titanic.loc[titanic["Embarked"] == "Q","Embarked"] = 2
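The preprocessing steps above can also be sketched with `Series.map`, which returns a numeric column in one step. This is a minimal sketch on a hypothetical toy frame (the rows below are made up and only stand in for `titanic_train.csv`), not the tutorial's own code.

```python
import numpy as np
import pandas as pd

# Hypothetical toy rows standing in for titanic_train.csv.
toy = pd.DataFrame({
    "Age": [22.0, np.nan, 38.0, 26.0, 35.0],
    "Sex": ["male", "female", "female", "male", "male"],
    "Embarked": ["S", "C", None, "Q", "S"],
})

# Fill missing ages with the median of the observed ages.
toy["Age"] = toy["Age"].fillna(toy["Age"].median())

# Fill missing ports with the most frequent port, then encode both columns.
toy["Embarked"] = toy["Embarked"].fillna(toy["Embarked"].mode()[0])
toy["Sex"] = toy["Sex"].map({"male": 0, "female": 1})
toy["Embarked"] = toy["Embarked"].map({"S": 0, "C": 1, "Q": 2})

print(toy)
```

Any value missing from the mapping dictionary would become NaN under `map`, which makes unmapped categories easy to spot.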

4. Regression models

- #Import the linear regression class

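- from sklearn.linear_model import LinearRegression  # linear regression

- #Sklearn also has a helper that makes it easy to do cross validation

- from sklearn.cross_validation import KFold  # K-fold cross-validation on the training set; results are averaged over the folds (this module was removed in scikit-learn 0.20; newer versions provide KFold in sklearn.model_selection with a different signature)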

- #The columns we'll use to predict the target

- predictors = ["Pclass","Sex","Age","SibSp","Parch","Fare","Embarked"]  # input features

- #Initialize our algorithm class

- alg = LinearRegression()

- #Generate cross validation folds for the titanic dataset. It returns the row indices corresponding to train and test.

- kf = KFold(titanic.shape[0],n_folds=3,random_state=1)  # split the samples evenly into 3 folds for cross-validation

- predictions = []

- for train,test in kf:

-     #The predictors we're using to train the algorithm. Note how we only take the rows in the train folds.

-     train_predictors = (titanic[predictors].iloc[train,:])

-     #The target we're using to train the algorithm.

-     train_target = titanic["Survived"].iloc[train]

-     #Training the algorithm using the predictors and target.

-     alg.fit(train_predictors,train_target)

-     #We can now make predictions on the test fold

-     test_predictions = alg.predict(titanic[predictors].iloc[test,:])

-     predictions.append(test_predictions)

- import numpy as np

- #The predictions are in three separate numpy arrays. Concatenate them into one.

- #We concatenate them on axis 0,as they only have one axis.

- predictions = np.concatenate(predictions,axis=0)

- #Map predictions to outcomes(only possible outcomes are 1 and 0)

- predictions[predictions>.5] = 1

- predictions[predictions<=.5] = 0

- #Accuracy is the fraction of predictions that match the true labels

- accuracy = sum(predictions == titanic["Survived"]) / float(len(predictions))

- print(accuracy)
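The manual cross-validation loop above can be sketched as a self-contained example. It assumes a recent scikit-learn, where `KFold` lives in `sklearn.model_selection` and takes `n_splits`, and it uses synthetic data (an assumption; the arrays `X` and `y` below are not the Titanic data).

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold

# Synthetic stand-in data (an assumption): 90 rows, 2 features, binary target.
rng = np.random.RandomState(1)
X = rng.normal(size=(90, 2))
y = (X[:, 0] + 0.5 * rng.normal(size=90) > 0).astype(int)

kf = KFold(n_splits=3)  # no shuffling, so folds are contiguous row blocks
fold_predictions = []
for train, test in kf.split(X):
    model = LinearRegression().fit(X[train], y[train])
    fold_predictions.append(model.predict(X[test]))

# Folds come back in row order, so concatenating restores the original order.
predictions = (np.concatenate(fold_predictions) > 0.5).astype(int)

# Accuracy is the fraction of rows where prediction and label agree.
accuracy = np.mean(predictions == y)

# Summing predictions[mask] instead counts only the correctly predicted 1s.
correct_ones = predictions[predictions == y].sum() / len(predictions)
print(accuracy, correct_ones)
```

Comparing the two printed numbers shows why the mean of the boolean match array is the quantity to report: the masked sum ignores every correctly predicted 0.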

- from sklearn import cross_validation

- from sklearn.linear_model import LogisticRegression  # logistic regression

- #Initialize our algorithm

- alg=LogisticRegression(random_state=1)

- #Compute the accuracy score for all the cross validation folds.(much simpler than what we did before!)

- scores = cross_validation.cross_val_score(alg,titanic[predictors],titanic["Survived"],cv=3)

- #Take the mean of the scores (because we have one for each fold)

- print(scores.mean())
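For readers on a recent scikit-learn, the same scoring call can be sketched with `cross_val_score` from `sklearn.model_selection`. The data here is synthetic (an assumption) and only illustrates the shape of the result.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Synthetic stand-in data (an assumption): 120 rows, 3 features.
rng = np.random.RandomState(1)
X = rng.normal(size=(120, 3))
y = (X[:, 0] - X[:, 1] + 0.5 * rng.normal(size=120) > 0).astype(int)

# With an integer cv and a classifier, scikit-learn uses stratified folds.
scores = cross_val_score(LogisticRegression(random_state=1), X, y, cv=3)

# One accuracy value per fold; the mean summarizes them.
print(scores, scores.mean())
```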

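5. Random forest model

(1) Sample the training rows at random (sampling with replacement)

(2) Select features at random

(3) Build multiple decision trees (combined by voting)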
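The three ideas above can be sketched by hand with plain decision trees. This is an illustrative sketch on synthetic data (an assumption), not how `RandomForestClassifier` is implemented internally; scikit-learn's forest averages predicted class probabilities rather than counting hard votes.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (an assumption): 200 rows, 4 features.
rng = np.random.RandomState(1)
X = rng.normal(size=(200, 4))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

trees = []
for i in range(15):
    # (1) Bootstrap: draw row indices with replacement.
    rows = rng.randint(0, len(X), size=len(X))
    # (2) max_features="sqrt" makes each split consider a random feature subset.
    tree = DecisionTreeClassifier(max_features="sqrt", random_state=i)
    trees.append(tree.fit(X[rows], y[rows]))

# (3) Majority vote across the trees (15 is odd, so there are no ties).
votes = np.stack([t.predict(X) for t in trees])  # shape (15, 200)
forest_pred = (votes.mean(axis=0) > 0.5).astype(int)
train_accuracy = np.mean(forest_pred == y)
print("training accuracy:", train_accuracy)
```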

- from sklearn import cross_validation

- from sklearn.ensemble import RandomForestClassifier

- predictors=["Pclass","Sex","Age","SibSp","Parch","Fare","Embarked"]

- #Initialize our algorithm with the default parameters

- #n_estimators is the number of trees we want to make

- #min_samples_split is the minimum number of rows we need to make a split

- #min_samples_leaf is the minimum number of samples we can have at the place where a tree branch ends (the bottom of the tree)

- alg=RandomForestClassifier(random_state=1,n_estimators=10,min_samples_split=2,min_samples_leaf=1)  # 10 decision trees; stopping conditions: a node needs at least 2 samples to be split, and each leaf keeps at least 1 sample

- #Compute the accuracy score for all the cross validation folds. (much simpler than what we did before!)

- kf=cross_validation.KFold(titanic.shape[0],n_folds=3,random_state=1)

- scores=cross_validation.cross_val_score(alg,titanic[predictors],titanic["Survived"],cv=kf)

- #Take the mean of the scores (because we have one for each fold)

- print(scores.mean())

- #Generating a familysize column

- titanic["FamilySize"]=titanic["SibSp"]+titanic["Parch"]

- #The .apply method generates a new series

- titanic["NameLength"]=titanic["Name"].apply(lambda x:len(x))

- import re

- #A function to get the title from a name

- def get_title(name):

-     #Use a regular expression to search for a title. Titles always consist of capital and lowercase letters, and end with a period.

-     title_search = re.search(r'([A-Za-z]+)\.',name)

-     #If the title exists, extract and return it.

-     if title_search:

-         return title_search.group(1)

-     return ""

- #Get all the titles and print how often each one occurs.

- titles=titanic["Name"].apply(get_title)

- print(titles.value_counts())

- #Map each title to an integer. Some titles are very rare, and are compressed into the same codes as other titles.

- title_mapping = {"Mr":1,"Miss":2,"Mrs":3,"Master":4,"Dr":5,"Rev":6,"Major":7,"Col":7,"Mlle":8,"Mme":8,"Don":9,"Lady":10,"Countess":10,"Jonkheer":10,"Sir":9,"Capt":7,"Ms":2}

- for k,v in title_mapping.items():

-     titles[titles==k]=v

- #Verify that we converted everything.

- print(titles.value_counts())

- #Add in the title column

- titanic["Title"]=titles

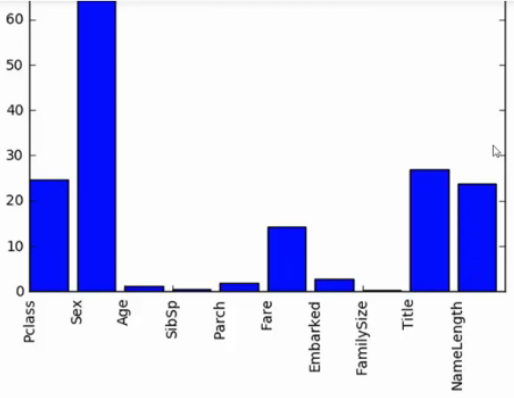
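The title extraction can also be sketched in vectorized form with `Series.str.extract`. This is a minimal sketch on four sample names with a shortened, hypothetical mapping, rather than the full `title_mapping` used above.

```python
import pandas as pd

# A few sample passenger names in the dataset's "Surname, Title. Given" format.
names = pd.Series([
    "Braund, Mr. Owen Harris",
    "Cumings, Mrs. John Bradley (Florence Briggs Thayer)",
    "Heikkinen, Miss. Laina",
    "Palsson, Master. Gosta Leonard",
])

# Capture the letters directly before the first period in each name.
titles = names.str.extract(r"([A-Za-z]+)\.", expand=False)

# Map titles to integer codes; a title missing from the mapping becomes NaN.
codes = titles.map({"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4})
print(pd.DataFrame({"title": titles, "code": codes}))
```

Checking `codes.isna().any()` afterwards is a quick way to verify that every title was converted.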

6. Feature selection

A feature's importance is judged by the difference in error rate before and after noise is added to that feature.
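The noise-based idea can be sketched as follows: train a model, replace one feature at a time with noise by shuffling its values, and record how much the error rate rises. The data is synthetic and the train/test split is hypothetical (both assumptions); only feature 0 carries signal, so it should stand out.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data (an assumption): only feature 0 determines the label.
rng = np.random.RandomState(1)
X = rng.normal(size=(300, 3))
y = (X[:, 0] > 0).astype(int)

train, test = slice(0, 200), slice(200, 300)
model = RandomForestClassifier(n_estimators=50, random_state=1)
model.fit(X[train], y[train])
base_error = 1 - model.score(X[test], y[test])

importances = []
for j in range(X.shape[1]):
    X_noisy = X[test].copy()
    # Turn feature j into noise by shuffling its values across rows.
    X_noisy[:, j] = rng.permutation(X_noisy[:, j])
    noisy_error = 1 - model.score(X_noisy, y[test])
    # Importance = error after adding noise minus error before.
    importances.append(noisy_error - base_error)

print(importances)
```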

- import numpy as np

- from sklearn.feature_selection import SelectKBest,f_classif

- import matplotlib.pyplot as plt

- predictors = ["Pclass","Sex","Age","SibSp","Parch","Fare","Embarked","FamilySize","Title","NameLength"]

- #Perform feature selection

- selector=SelectKBest(f_classif,k=5)

- selector.fit(titanic[predictors],titanic["Survived"])

- #Get the raw p-values for each feature, and transform from p-values into scores

- scores=-np.log10(selector.pvalues_)

- #Plot the scores. See how "Pclass","Sex","Title",and "Fare" are the best?

- plt.bar(range(len(predictors)),scores)

- plt.xticks(range(len(predictors)),predictors,rotation='vertical')

- plt.show()

- #Pick only the four best features.

- predictors=["Pclass","Sex","Fare","Title"]

- alg=RandomForestClassifier(random_state=1,n_estimators=50,min_samples_split=8,min_samples_leaf=4)
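To see what `SelectKBest` with `f_classif` reports, here is a small sketch on synthetic data (an assumption) in which only one feature is informative. The `-log10` transform is the same one used above: a smaller p-value gives a larger score.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif

# Synthetic stand-in data (an assumption): 4 noise features, then shift one.
rng = np.random.RandomState(1)
y = rng.randint(0, 2, size=200)
X = rng.normal(size=(200, 4))
X[:, 2] += 2 * y  # make feature 2 informative about the label

selector = SelectKBest(f_classif, k=1).fit(X, y)

# Smaller p-value -> larger score, so the informative feature stands out.
scores = -np.log10(selector.pvalues_)
selected = selector.get_support(indices=True)
print(scores)
print(selected)
```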

- #Ensemble several algorithms and average their predictions to get the final result

- from sklearn.ensemble import GradientBoostingClassifier

- import numpy as np

- #The algorithms we want to ensemble.

- #We're using the more linear predictors for the logistic regression,and everything with the gradient boosting classifier

- algorithms=[

-     [GradientBoostingClassifier(random_state=1,n_estimators=25,max_depth=3),["Pclass","Sex","Age","Fare","FamilySize","Title","Embarked"]],

-     [LogisticRegression(random_state=1),["Pclass","Sex","Fare","FamilySize","Title","Age","Embarked"]]

- ]

- #Initialize the cross validation folds

- kf=KFold(titanic.shape[0],n_folds=3,random_state=1)

- predictions=[]

- for train,test in kf:

-     train_target=titanic["Survived"].iloc[train]

-     full_test_predictions=[]

-     #Make predictions for each algorithm on each fold

-     for alg,predictors in algorithms:

-         #Fit the algorithm on the training data

-         alg.fit(titanic[predictors].iloc[train,:],train_target)

-         #Select and predict on the test fold

-         #The .astype(float) is necessary to convert the dataframe to all floats and avoid an sklearn error.

-         test_predictions=alg.predict_proba(titanic[predictors].iloc[test,:].astype(float))[:,1]

-         full_test_predictions.append(test_predictions)

-     #Use a simple ensembling scheme -- just average the predictions to get the final classification.

-     test_predictions=(full_test_predictions[0]+full_test_predictions[1])/2

-     #Any value over .5 is assumed to be a 1 prediction, and below .5 is a 0 prediction.

-     test_predictions[test_predictions<=0.5]=0

-     test_predictions[test_predictions>0.5]=1

-     predictions.append(test_predictions)

- #Put all the predictions together into one array.

- predictions=np.concatenate(predictions,axis=0)

- #Compute accuracy by comparing to the training data

- accuracy=sum(predictions==titanic["Survived"])/float(len(predictions))

- print(accuracy)

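- #The gradient boosting classifier generates better predictions, so we weight it 3:1 against the logistic regression. Here full_predictions is assumed to hold one array of predicted probabilities per entry in algorithms.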

- predictions=(full_predictions[0]*3+full_predictions[1]*1)/4

- predictions
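The weighted averaging can be sketched end to end as below. It assumes a recent scikit-learn (`KFold` from `sklearn.model_selection`) and synthetic data; the names `models`, `weights`, and `fold_probs` are hypothetical and not from the original code.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

# Synthetic stand-in data (an assumption): 150 rows, 3 features.
rng = np.random.RandomState(1)
X = rng.normal(size=(150, 3))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

models = [
    GradientBoostingClassifier(random_state=1, n_estimators=25, max_depth=3),
    LogisticRegression(random_state=1),
]
weights = [3, 1]  # 3:1 in favor of gradient boosting

fold_probs = []
for train, test in KFold(n_splits=3).split(X):
    # Probability of class 1 from each model on the held-out fold.
    probs = [m.fit(X[train], y[train]).predict_proba(X[test])[:, 1] for m in models]
    # Weighted average of the two probability arrays.
    fold_probs.append(np.average(probs, axis=0, weights=weights))

predictions = (np.concatenate(fold_probs) > 0.5).astype(int)
accuracy = np.mean(predictions == y)
print(accuracy)
```

Setting `weights = [1, 1]` recovers the plain average used in the loop above.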

